https://fabian-sp.github.io/

On "infinite" learning-rate schedules and how to construct them from one checkpoint to the next.

fabian-sp.github.io/posts/2025/0...

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

🗞️ short blog post: fabian-sp.github.io/posts/2024/1...

📇 bib files: github.com/fabian-sp/ml-bib

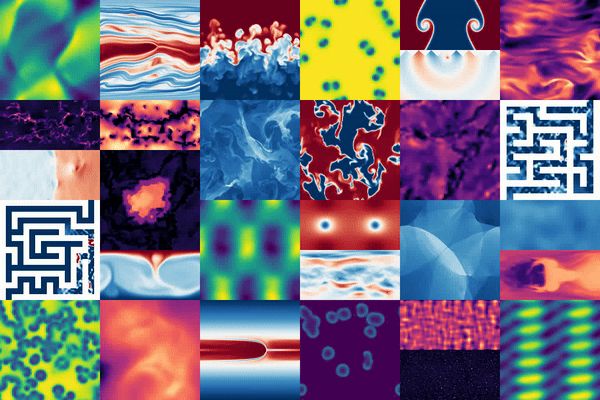

Introducing The Well: 16 datasets (15TB) for Machine Learning, from astrophysics to fluid dynamics and biology.

🐙: github.com/PolymathicAI...

📜: openreview.net/pdf?id=00Sx5...

Then look up their reviews on ICLR 2025. I find these reviews completely arbitrary most of the time.

1. share

2. let me know if you want to be on the list!

(I have many new followers whom I do not know well yet, so I'm sorry if you follow me and are not on here but want to be - drop me a note and I'll add you!)

go.bsky.app/DXdZkzV
