We explored this through the lens of MoEs:

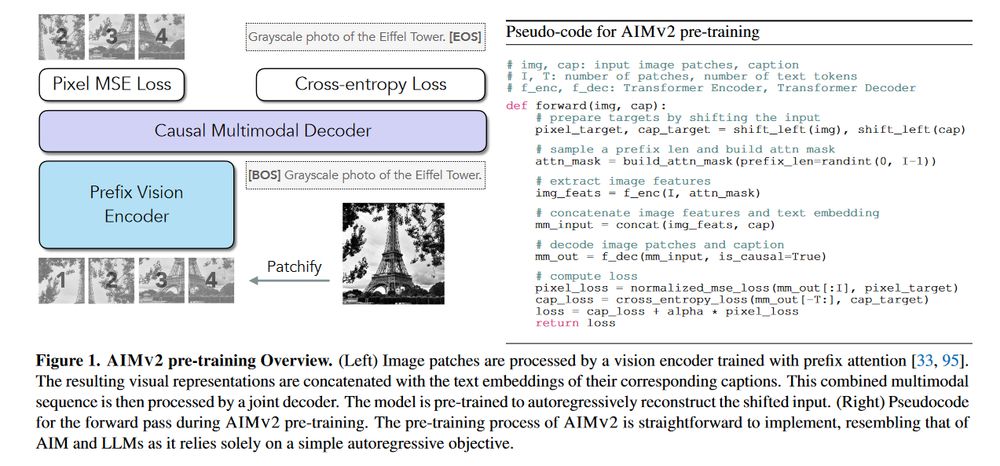

> like CLIP, but add a decoder and train on autoregression 🤯

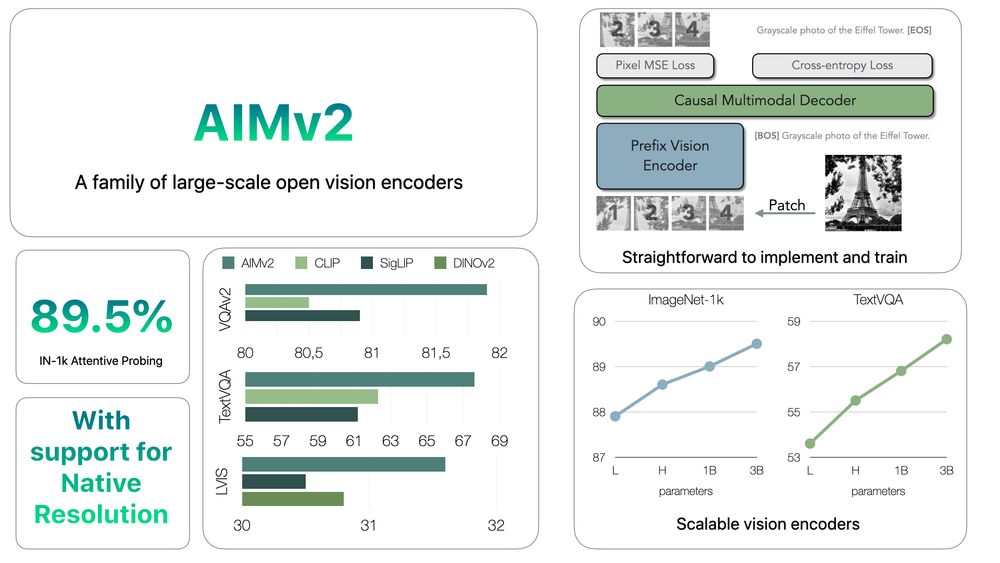

> 19 open models come in 300M, 600M, 1.2B, 2.7B with resolutions of 224, 336, 448

> Loadable and usable with 🤗 transformers huggingface.co/collections/...

Excellent work by @alaaelnouby.bsky.social & team with code and checkpoints already up:

arxiv.org/abs/2411.14402

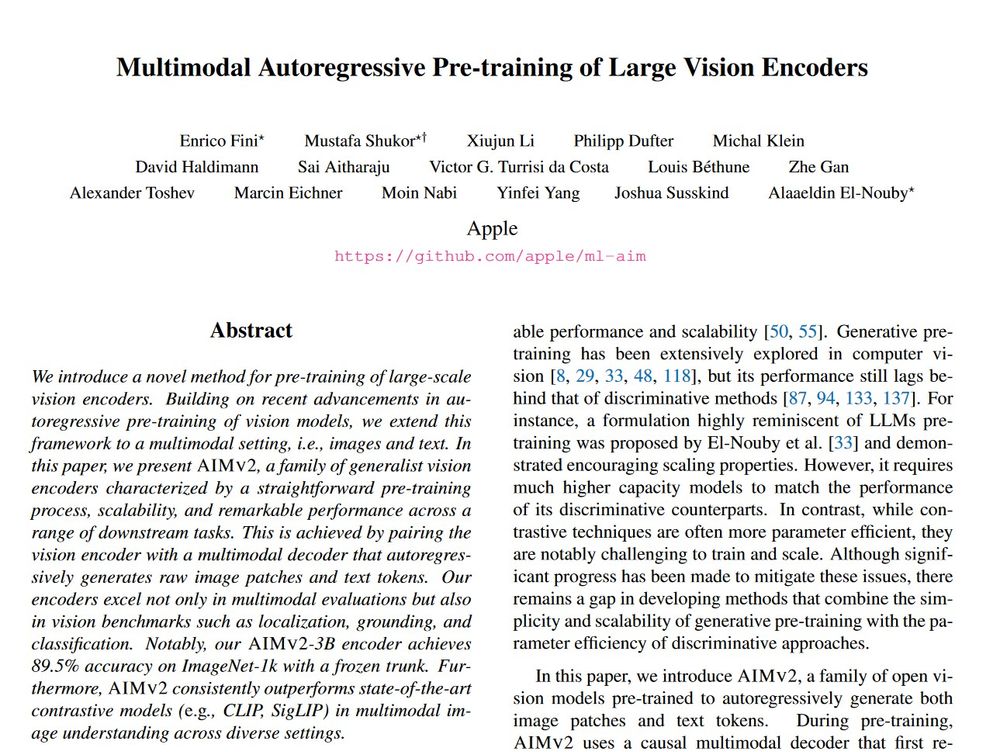

Enrico Fini and 15 co-authors

tl;dr: in title. Scaling laws and ablations.

They claim to outperform SigLIP and DINOv2 on semantic tasks. I would be interested in monocular depth performance, though.

arxiv.org/abs/2411.14402

Delighted to share AIMv2, a family of strong, scalable, and open vision encoders that excel at multimodal understanding, recognition, and grounding 🧵

paper: arxiv.org/abs/2411.14402

code: github.com/apple/ml-aim

HF: huggingface.co/collections/...
