Pawel Brodzinski

@pawelbrodzinski.bsky.social

Leader of an org where anyone can make any decision (Lunar Logic).

Doing anything that no one else wants to do.

A mouthful on product development, org design, lean/agile, IT in general.

Pro tip 1: If escalation is the only way to get competent customer support, people will escalate.

Pro tip 2: If you escalate only when people are assholes to your consultants, they will act like assholes to get help.

November 9, 2025 at 2:50 PM

AI support:

Me: Stop resetting my password every other week

AI: Here's how you can reset your password

Me: I want to talk to a human

AI: What do you want to do next?

Me: Talk to a human

AI: Do you want to talk to a human?

Me: Yes

Once I get to talk to a human, I'm gonna be pissed off, guaranteed.

November 9, 2025 at 1:59 PM

If you develop a popup window to fill in a confirmation code, make sure you set focus on the entry field.

There. You literally save a few seconds for just about any user for just about as many times as they're forced to interact with the form.

Literally, 10s of work. No AI needed. Thank you.

November 7, 2025 at 7:59 PM
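
A minimal sketch of the focus tip above, in TypeScript. The `FocusableField` type, the `focusCodeField` helper, and the `#confirmation-code` selector are made up for illustration; in plain HTML, the `autofocus` attribute on the input may already be enough.

```typescript
// Minimal structural type, so the helper accepts a real HTMLInputElement
// in the browser as well as a simple stub in tests.
interface FocusableField {
  focus(): void;
}

// Hypothetical helper: call it right after the popup becomes visible.
// Returns false when there is no field to focus (e.g. the selector missed).
function focusCodeField(field: FocusableField | null): boolean {
  if (field === null) {
    return false;
  }
  field.focus();
  return true;
}

// Browser usage (assumes an <input id="confirmation-code"> inside the popup):
// focusCodeField(document.querySelector<HTMLInputElement>("#confirmation-code"));
```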

Vibe-coded app. Authorization.

"Add localhost:3000/auth/linkedi... to authorized redirect URLs"

It is the "works well on my machine" of the AI era :D

November 5, 2025 at 5:39 PM

"Unlike 1999, tech’s profits justify the premium."

Are these profits coming from AI? Save for hardware vendors, which the AI wave made fly, what profits are we talking about?

And what happens to Nvidia when it's suddenly clear that OpenAI isn't on a path to profitability?

substack.com/inbox/post/1...

November 3, 2025 at 8:01 PM

Just thought about it: an LLM is playing One Word Story except all the players are the LLM.

bbbpress.com/2013/01/one-...

Drama Game: One Word Story - Beat by Beat Press

Type: Warm-up. Purpose: To work as a team. To work on focus. Materials: A big enough space for the entire class to sit in a circle comfortably. Procedure: Players sit in a circle. One person says a ...

bbbpress.com

November 3, 2025 at 11:44 AM

Hype wave versus reality.

Upon seeing the chart, I was like:

* Your ARR is not annual (can't be yet)

* Nor is it recurring (can't know yet)

After 10s of research (literally clicking the links):

* It's also fake

* And a scam

Check your sources, people!

November 3, 2025 at 10:32 AM

"We haven't yet shown a single dollar of profit, but we're already too big to fail."

Welcome to 2025.

What do you not understand?

November 3, 2025 at 8:37 AM

Here's my little experiment on how widespread automated AI bots are on LinkedIn. I would appreciate you sharing and/or reacting to it to increase its reach:

www.linkedin.com/feed/update/...

Thank you in advance.

One of the most valuable aspects of an organizational culture is what I call an experimentation mindset. It's about being open and willing to continuously try things and learn from the process.

www.linkedin.com

October 31, 2025 at 2:39 PM

@kentbeck.com's post (substack.com/inbox/post/1...) reminded me of my own "disastrous failure" story.

In the end, our clients just ended up with a bit of extra work. Nothing too serious.

October 30, 2025 at 5:15 PM

That didn't age overly well.

It still somehow escapes me how these English developers build successful products.

October 30, 2025 at 4:11 PM

For years, statistical forecasting was the way to go for me when someone asked for an estimate.

Then I went back to simple linear approximation.

TL;DR: It's much quicker. And good enough.

www.linkedin.com/feed/update/...

October 30, 2025 at 3:30 PM
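
For illustration, one way to read "simple linear approximation": assume the remaining work goes at the same average pace as the work done so far. This is an assumption about the approach, not necessarily the method from the linked post; `estimateRemainingDays` and the numbers are hypothetical.

```typescript
// Linear extrapolation: assume the future pace equals the average pace so far.
// doneItems / elapsedDays is the observed pace; remaining time scales linearly.
function estimateRemainingDays(
  doneItems: number,
  elapsedDays: number,
  remainingItems: number,
): number {
  if (doneItems <= 0 || elapsedDays <= 0) {
    throw new RangeError("need completed work and elapsed time to extrapolate");
  }
  // Equivalent to remainingItems / (doneItems / elapsedDays).
  return (remainingItems * elapsedDays) / doneItems;
}

// Made-up example: 12 items finished in 30 days, 20 items left.
const remainingDays = estimateRemainingDays(12, 30, 20);
console.log(remainingDays.toFixed(1)); // 50.0
```

The trade-off is the one named in the post: no distribution or confidence range, just a quick number that is often good enough.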

My not-so-love letter to Lovable's stellar growth.

TL;DR: Lovable's reported growth is a classic vanity metric, and it won't last.

pawelbrodzinski.substack.com/p/lovables-a...

Lovable’s ARR is Vanity Metric 2.0

Lovable's claim to be the fastest growing startup ever is based on their Annual Recurring Revenue traction. In their case, however, ARR is basically a vanity metric.

pawelbrodzinski.substack.com

October 29, 2025 at 10:47 AM

Reposted by Pawel Brodzinski

Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it. - Brian Kernighan

October 27, 2025 at 3:42 PM

Linear announced "the best feature ever", which is a Slack integration that automatically adds a work item based on AI interpreting the conversation.

Well, so much for judgment in product development.

That's a totally new level of AI slop. Work generated by AI for humans :)

October 27, 2025 at 3:56 PM

Vanity metric: a metric that makes you look good, but doesn't necessarily help to understand company performance or inform its strategy.

ARR should thus be considered a vanity metric.

It tells nothing about:

* ramping AI infrastructure costs (per user)

* hype-wave effect on future revenue predictions

October 27, 2025 at 2:53 PM

"Goals are tidy. Systems are messy."

We don't fix productivity by sprinkling OKRs on our work.

It won't do much unless we agree on the basics:

What does "in progress" mean?

How do we choose the next most important thing?

What's the most desired outcome of our work?

substack.com/home/post/p-...

You Don’t Rise to the Level of Your Goals, You Flow to the Level of Your Systems

Why great teams don’t chase bigger goals, but build better systems that make ambition sustainable.

substack.com

October 27, 2025 at 11:04 AM

"ARR tends to be nothing more than just an appealing lie."

What's reported as annual and recurring these days is, surprisingly, rarely either.

substack.com/home/post/p-...

Future Plateau: Neat Example of a Compound Metric

Future Plateau is a practical example of a forward-looking, compound metric, which allows for a neat what-if analysis.

substack.com

October 24, 2025 at 12:11 PM

“AI Models Can Now Predict What Customers Would Buy—Almost Like Humans”

Now, can they?

TL;DR: Nope.

substack.com/inbox/post/1...

Why AI cannot simulate your customers behaviour

"Founders would literally rather boil the ocean than talk to customers."

substack.com

October 24, 2025 at 9:38 AM

So, how do we navigate in a landscape where we can't trust that any bit of content is:

a) created by a human

b) not fake

?

Trust networks. Something we've been doing for millennia.

As a bonus, it's also a damn good antidote for AI slop.

brodzinski.com/2025/10/trus...

Trust Networks as Antidote to AI Slop - Pawel Brodzinski on Leadership in Technology

In response to AI slop we will increasingly rely on trust networks in professional dealings. Trust will be the new business currency.

brodzinski.com

October 22, 2025 at 2:51 PM

Code review karma: the better code reviews you give, the better ones you receive.

That and more stats behind code reviews: substack.com/inbox/post/1...

October 22, 2025 at 9:26 AM

Find 5 differences.

The top picture is from Musk's tweet mocking the AWS outage.

The bottom is from the original CNBC article.

(And it's not to defend AWS or anything.)

October 21, 2025 at 1:39 PM

Reposted by Pawel Brodzinski

When you're drinking from a firehose, the performance limit in that situation isn't the firehose.

October 20, 2025 at 6:27 PM

Candidates mass applying with AI.

Companies mass rejecting with AI.

(Not so new) hacks to trick the AI tools emerge.

Both hiring and looking for a job are sure fun these days.

Is it only me, or is it not sustainable?

Source: substack.com/inbox/post/1...

October 20, 2025 at 2:40 PM

Reposted by Pawel Brodzinski

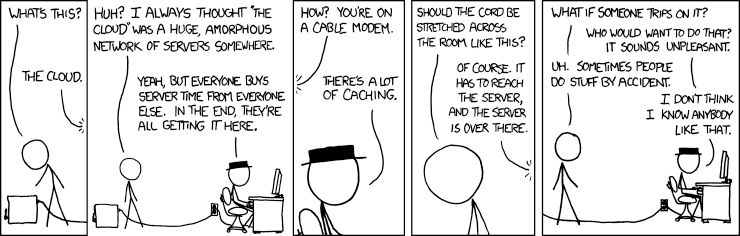

xkcd.com/908/

This classic XKCD cartoon seems...relevant today

October 20, 2025 at 10:13 AM
