Nicola Branchini

@nicolabranchini.bsky.social

🇮🇹 Stats PhD @ University of Edinburgh 🏴

@ellis.eu PhD - visiting @avehtari.bsky.social 🇫🇮

🤔💭 Monte Carlo, probabilistic ML.

Interested in many things relating to probML, keen to learn applications in climate/science.

https://www.branchini.fun/about

Pinned

Towards Adaptive Self-Normalized Importance Samplers

The self-normalized importance sampling (SNIS) estimator is a Monte Carlo estimator widely used to approximate expectations in statistical signal processing and machine learning.

The efficiency of S...

arxiv.org

🚨 New paper: “Towards Adaptive Self-Normalized IS”, @ IEEE Statistical Signal Processing Workshop.

TLDR;

To estimate µ = E_p[f(θ)] with SNIS, instead of doing MCMC on p(θ) or learning a parametric q(θ), we try MCMC directly on p(θ)| f(θ)-µ | (variance-minimizing proposal).

arxiv.org/abs/2505.00372
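
For readers who have not met SNIS before, here is a minimal sketch of the basic estimator that the post builds on. It is not the paper's adaptive method: the proposal is a fixed Gaussian rather than an MCMC chain on p(θ)|f(θ)-µ|, and the target N(0, 1), the integrand f(θ) = θ², the proposal scale `sigma_q`, and the helper name `snis` are all assumptions chosen for illustration (the true value is then µ = 1).

```python
import numpy as np

def snis(f, log_p_tilde, log_q, theta):
    """Self-normalized IS estimate of E_p[f(theta)] from draws theta ~ q.

    log_p_tilde and log_q may both be unnormalized: the constants cancel
    when the weights are normalized to sum to one.
    """
    log_w = log_p_tilde(theta) - log_q(theta)
    w = np.exp(log_w - log_w.max())   # subtract the max for numerical stability
    w /= w.sum()                      # self-normalization
    estimate = np.sum(w * f(theta))
    ess = 1.0 / np.sum(w ** 2)        # effective sample size diagnostic
    return estimate, ess

rng = np.random.default_rng(0)
sigma_q = 2.0                          # hypothetical proposal scale
theta = rng.normal(0.0, sigma_q, size=100_000)

mu_hat, ess = snis(
    f=lambda t: t ** 2,
    log_p_tilde=lambda t: -0.5 * t ** 2,               # N(0, 1) up to a constant
    log_q=lambda t: -0.5 * (t / sigma_q) ** 2,         # N(0, sigma_q^2) up to a constant
    theta=theta,
)
print(mu_hat, ess)
```

The quality of the estimate depends on how well the proposal matches p(θ)|f(θ)-µ|, which is the quantity the post proposes to target directly.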

Reposted by Nicola Branchini

Now I'm also looking for a research software engineer to implement a pile of research results in the R packages loo, posterior, bayesplot, projpred, priorsense, and brms and/or the Python packages ArviZ, Bambi, and Kulprit. Apply by email; there is no specific deadline (see contact info at users.aalto.fi/~ave/).

I'm now also looking for a postdoc with strong Bayesian background and interest in developing Bayesian cross-validation theory, methods and software. Apply by email with no specific deadline (see contact information at users.aalto.fi/~ave/).

Others, please share

I'm looking for a doctoral student with Bayesian background to work on Bayesian workflow and cross-validation (see my publication list users.aalto.fi/~ave/publica... for my recent work) at Aalto University.

Apply through the ELLIS PhD program (dl October 31) ellis.eu/news/ellis-p...

November 3, 2025 at 11:13 AM

Reposted by Nicola Branchini

A little self promotion: Hai-Dang Dau (NUS) and I recently released this pre-print, which I'm not half proud of.

arxiv.org/abs/2510.07559

The main problem we solve in it is to construct importance weights for Markov chain Monte Carlo. We achieve it via a method we call harmonization by coupling.

October 20, 2025 at 4:10 PM

It's always amusing to traumatise people by explaining how surprise oral tests work in Italian high schools

November 3, 2025 at 8:58 AM

Reposted by Nicola Branchini

Idealized science: take walk in the park, think very hard, be struck by brilliant insight. Marvel.

Science frequently: it is week three of being unable to reproduce my experiment from six months ago. Was I wrong then or am I wrong now? Is it just noise? I have not seen the sun.

November 1, 2025 at 6:45 PM

Reposted by Nicola Branchini

The Principles of Diffusion Models

It traces the core ideas that shaped diffusion modeling and explains how today’s models work, why they work, and where they’re heading.

www.arxiv.org/abs/2510.21890

October 29, 2025 at 3:19 AM

From the reviewer's side, I quite like that TMLR has no explicit "accept - reject" decision/button. Rather, two important text boxes about whether the paper has clear/convincing evidence and requested changes.

This framing mitigates system 1 thinking of seeing "reject/accept" decisions

October 21, 2025 at 9:45 AM

Reposted by Nicola Branchini

The true academic method: overthink, underdeliver, cite yourself.

October 14, 2025 at 10:25 AM

Reposted by Nicola Branchini

24. arxiv.org/abs/2510.00389

'Zero variance self-normalized importance sampling via estimating equations'

- Art B. Owen

Even with optimal proposals, achieving zero variance with SNIS-type estimators requires some innovative thinking. This work explains how an optimisation formulation can apply.

October 4, 2025 at 4:03 PM

Reposted by Nicola Branchini

I'm looking for a doctoral student with Bayesian background to work on Bayesian workflow and cross-validation (see my publication list users.aalto.fi/~ave/publica... for my recent work) at Aalto University.

Apply through the ELLIS PhD program (dl October 31) ellis.eu/news/ellis-p...

ELLIS PhD Program: Call for Applications 2025

The ELLIS mission is to create a diverse European network that promotes research excellence and advances breakthroughs in AI, as well as a pan-European PhD program to educate the next generation of AI...

ellis.eu

October 6, 2025 at 9:28 AM

Posting a few nice importance sampling-related finds

"Value-aware Importance Weighting for Off-policy Reinforcement Learning"

proceedings.mlr.press/v232/de-asis...

October 4, 2025 at 4:01 PM

Reposted by Nicola Branchini

It’s a JAX, JAX, JAX, JAX World

statmodeling.stat.columbia.edu/2025/10/03/i...

It’s a JAX, JAX, JAX, JAX World | Statistical Modeling, Causal Inference, and Social Science

statmodeling.stat.columbia.edu

October 3, 2025 at 10:55 PM

Reposted by Nicola Branchini

I am happy to announce that the Workshop on Emerging Trends in Automatic Control will take place at Aalto University on Sept 26.

Speakers include Lihua Xie, Karl H. Johansson, Jonathan How, Andrea Serrani, Carolyn L. Beck, and others.

#ControlTheory #AutomaticControl #AaltoUniversity #IEEE

September 8, 2025 at 12:32 PM

Reposted by Nicola Branchini

Just finished delivering a course on 'Robust and scalable simulation-based inference (SBI)' at Greek Stochastics. This covered an introduction to SBI, open challenges, and some recent contributions from my own group.

The slides are now available here: fxbriol.github.io/pdfs/slides-....

August 28, 2025 at 11:46 AM

Reposted by Nicola Branchini

The countdown is on!

Join us in 48 hours for a special announcement about Hollow Knight: Silksong!

Premiering here: youtu.be/6XGeJwsUP9c

Hollow Knight: Silksong - Special Announcement

YouTube video by Team Cherry

youtu.be

August 19, 2025 at 2:33 PM

Reposted by Nicola Branchini

Turing Lectures at ICTS

www.youtube.com/watch?v=_fF6...

www.youtube.com/watch?v=mGuK...

www.youtube.com/watch?v=yRDa...

The Mathematics of Large Machine Learning Models (Lecture 1) by Andrea Montanari

YouTube video by International Centre for Theoretical Sciences

www.youtube.com

August 19, 2025 at 2:58 AM

Today I learnt this Galileo Galilei quote:

"I value more the finding of a truth, even if about something trivial, than the long disputing of the greatest questions without attaining any truth at all"

Feels like we could use some of that in research tbh..

August 8, 2025 at 5:20 PM

It is somewhat amusing to see other reviewers confidently and insistently rejecting proposed alternatives (in suitable settings) to SGD/Adam in VI/divergence minimization problems

August 7, 2025 at 12:11 PM

Flying today towards Chicago 🌆 for MCM 2025

fjhickernell.github.io/mcm2025/prog...

Will give a talk on our recent/ongoing works on self-normalized importance sampling, including learning a proposal with MCMC and ratio diagnostics.

www.branchini.fun/pubs

MCM 2025 - Program | MCM 2025 Chicago

MCM 2025 -- Program.

fjhickernell.github.io

July 24, 2025 at 9:06 AM

This new ICML paper (openreview.net/forum?id=Tzf...) reinforces and insists on the notion that one would want to replace the posterior predictive P* := ∫ p(y|θ) p(θ|D) dθ,

with P^{q} := ∫ p(y|θ) q(θ) dθ, with q(θ) ≈ p(θ|D), then estimate _that_ with MC.

You know me. I don't get it.

What do I miss?

Understanding the difficulties of posterior predictive estimation

Predictive posterior densities (PPDs) are essential in approximate inference for quantifying predictive uncertainty and comparing inference methods. Typically, PPDs are estimated by simple Monte...

openreview.net

July 5, 2025 at 11:03 AM
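
To make the distinction between P* and P^{q} in the post above concrete, here is a small sketch on a toy conjugate Gaussian model where P* is available in closed form. Everything in it is an assumption made for illustration and is not taken from the paper: the model, the data, the test point `y_new`, and the deliberately imperfect `q`. It compares plain Monte Carlo under q (which targets P^{q}) with self-normalized importance sampling that uses the same draws from q as a proposal (which targets P*).

```python
import numpy as np

def log_normal_pdf(x, mean, std):
    return -0.5 * np.log(2.0 * np.pi) - np.log(std) - 0.5 * ((x - mean) / std) ** 2

rng = np.random.default_rng(1)

# Toy model (assumed): theta ~ N(0, tau^2), y_i | theta ~ N(theta, 1).
tau = 3.0
data = np.linspace(0.0, 2.0, 20)   # fixed, made-up observations
n = data.size

# Exact posterior N(post_mean, post_var) and exact predictive density P*(y_new).
post_var = 1.0 / (1.0 / tau ** 2 + n)
post_mean = post_var * data.sum()
y_new = 3.0
p_star = np.exp(log_normal_pdf(y_new, post_mean, np.sqrt(1.0 + post_var)))

# A deliberately imperfect approximation q(theta) ≈ p(theta | D).
q_mean, q_std = post_mean + 0.3, 1.5 * np.sqrt(post_var)
p_q = np.exp(log_normal_pdf(y_new, q_mean, np.sqrt(1.0 + q_std ** 2)))  # exact P^q

theta = rng.normal(q_mean, q_std, size=50_000)
lik_new = np.exp(log_normal_pdf(y_new, theta, 1.0))

# (a) Plain MC under q: consistent for P^q, not for P*.
p_q_hat = lik_new.mean()

# (b) SNIS with q as proposal and the unnormalized posterior as target.
log_joint = (log_normal_pdf(theta, 0.0, tau)
             + log_normal_pdf(data[:, None], theta[None, :], 1.0).sum(axis=0))
log_w = log_joint - log_normal_pdf(theta, q_mean, q_std)
w = np.exp(log_w - log_w.max())
w /= w.sum()
p_star_hat = np.sum(w * lik_new)

print(f"P*  = {p_star:.4f}, SNIS estimate  = {p_star_hat:.4f}")
print(f"P^q = {p_q:.4f}, plain MC under q = {p_q_hat:.4f}")
```

In this toy setting the bias of P^{q} relative to P* does not shrink with more samples, whereas the SNIS estimate converges to P*. Whether that carries over to realistic models depends on how well the importance weights behave.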

Reposted by Nicola Branchini

Here's how the gradient flow for minimizing KL(pi, target) looks under the Fisher-Rao metric. I thought some probability mass would be disappearing on the left and appearing on the right (i.e. teleportation), like a geodesic under the same metric, but I was very wrong... What's the right intuition?

June 13, 2025 at 4:29 PM

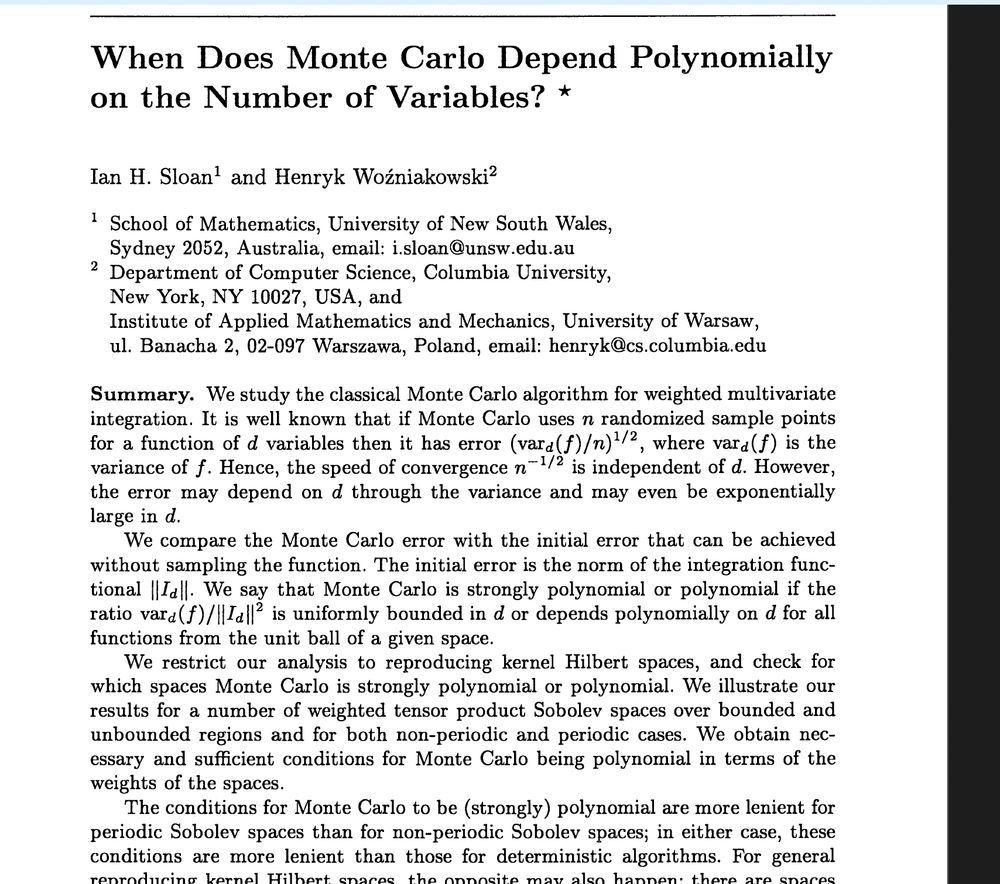

Wish I had found this when I got started :P (or, maybe not)

June 13, 2025 at 3:16 PM

Reposted by Nicola Branchini

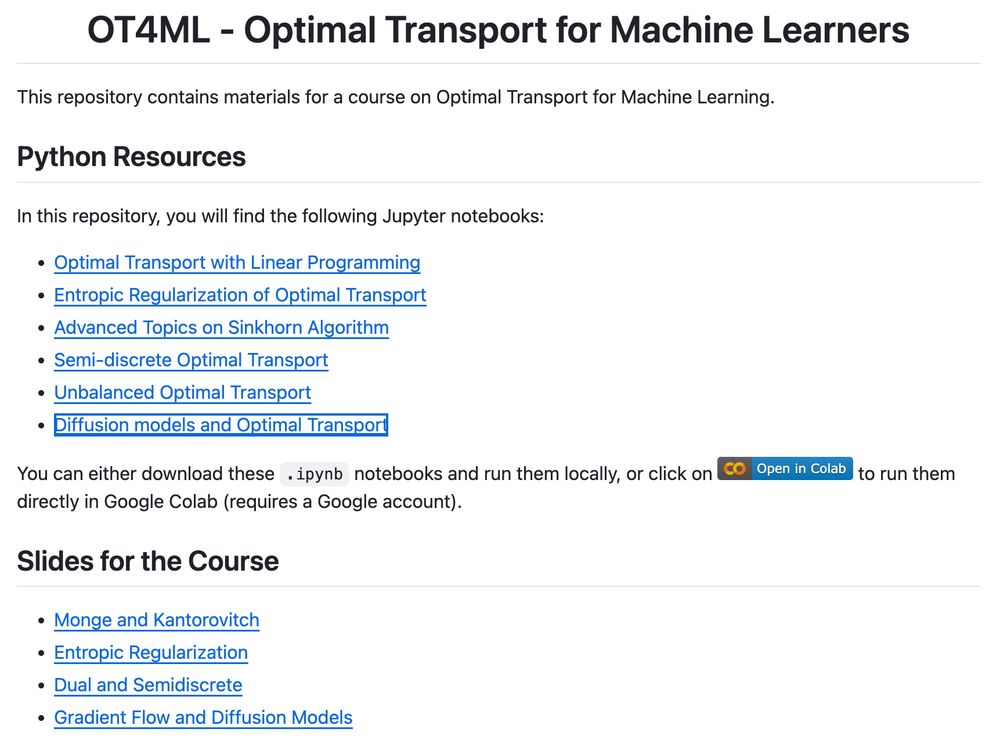

I have cleaned up the notebooks for my course on Optimal Transport for Machine Learners and added links to the slides and lecture notes. github.com/gpeyre/ot4ml

May 25, 2025 at 9:12 AM

Reposted by Nicola Branchini

🚨 New paper: “Towards Adaptive Self-Normalized IS”, @ IEEE Statistical Signal Processing Workshop.

TLDR;

To estimate µ = E_p[f(θ)] with SNIS, instead of doing MCMC on p(θ) or learning a parametric q(θ), we try MCMC directly on p(θ)| f(θ)-µ | (variance-minimizing proposal).

arxiv.org/abs/2505.00372

May 2, 2025 at 1:29 PM

Reposted by Nicola Branchini

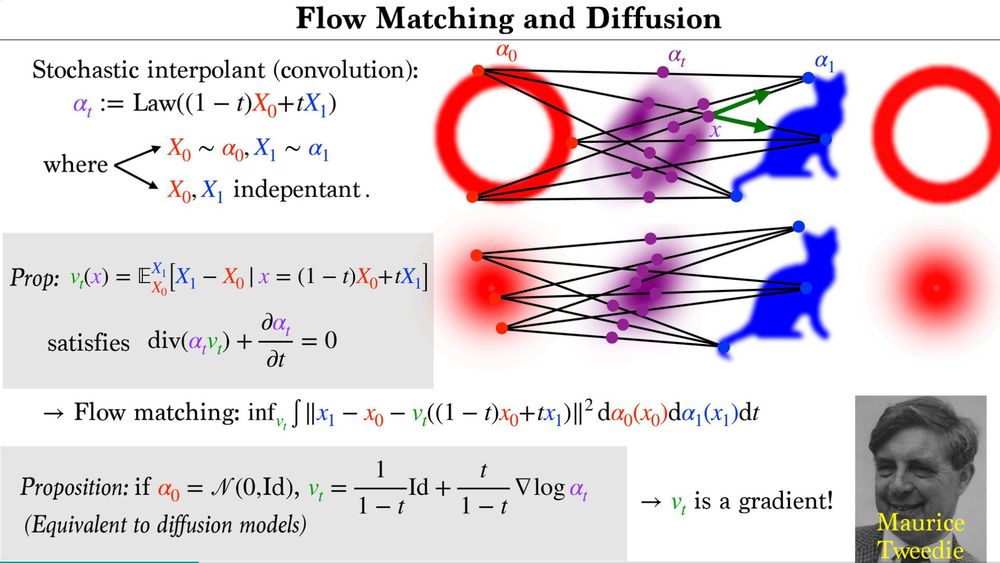

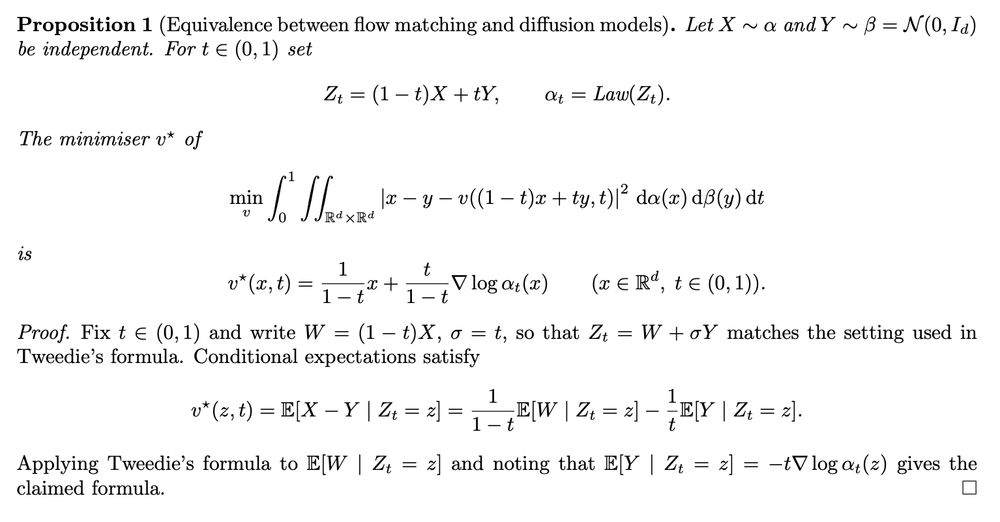

If one of the two distributions is an isotropic Gaussian, then flow matching is equivalent to a diffusion model. This is known as Tweedie's formula. In particular, the vector field is a gradient vector, as in optimal transport. speakerdeck.com/gpeyre/compu...

May 31, 2025 at 10:16 AM
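
For reference, the relation the post above alludes to can be written out as follows. This is a sketch under an assumed parametrization that is not in the post: a Gaussian path x_t = α_t x_0 + σ_t ε with ε ~ N(0, I), p_t the law of x_t, and, for the flow matching velocity, the linear choice α_t = 1 - t, σ_t = t.

```latex
% Tweedie's formula for the assumed path x_t = \alpha_t x_0 + \sigma_t \varepsilon
\mathbb{E}[x_0 \mid x_t] = \frac{x_t + \sigma_t^2 \nabla \log p_t(x_t)}{\alpha_t},
\qquad
\mathbb{E}[\varepsilon \mid x_t] = -\sigma_t \nabla \log p_t(x_t).

% Flow matching velocity for \alpha_t = 1 - t, \sigma_t = t
v_t(x) = \mathbb{E}[\varepsilon - x_0 \mid x_t = x]
       = -\frac{x + t \, \nabla \log p_t(x)}{1 - t}
       = -\nabla \left( \frac{\tfrac{1}{2}\lVert x \rVert^2 + t \log p_t(x)}{1 - t} \right).
```

Under these assumptions the velocity is an affine function of the score ∇ log p_t, which is why learning one gives the other, and it is the gradient of a scalar potential.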
