Nicola Branchini

@nicolabranchini.bsky.social

🇮🇹 Stats PhD @ University of Edinburgh 🏴

@ellis.eu PhD - visiting @avehtari.bsky.social 🇫🇮

🤔💭 Monte Carlo, probabilistic ML.

Interested in many things relating to probML, keen to learn applications in climate/science.

https://www.branchini.fun/about

October 4, 2025 at 4:01 PM

Posting a few nice importance sampling-related finds

"Value-aware Importance Weighting for Off-policy Reinforcement Learning"

proceedings.mlr.press/v232/de-asis...

October 4, 2025 at 4:01 PM

Wish I had found this when I got started :P (or, maybe not)

June 13, 2025 at 3:16 PM

You are free to estimate your integrals by approximating p without weighting.

I am free to clean my room by throwing everything inside the closet.

February 27, 2025 at 9:12 PM

Do I have a reading problem?

February 15, 2025 at 5:44 PM

Thank you, I will check the thesis!

Regarding the paper: I was particularly interested in what, as far as I understand, they mention as future work.

February 12, 2025 at 10:20 AM

nice idea.. seen it before 😉

December 22, 2024 at 3:58 PM

Another toy example visualizing the effect of tail dependence in a ratio.

December 11, 2024 at 5:33 PM

Sometimes one ratio estimate will be better than another even if the individual num./denom. estimators perform similarly, because a correlation between num. and denom. induces some error cancellation.

So looking only at k-hat values doesn't fully explain the difference in performance between two competing estimators.
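
A minimal sketch of the cancellation effect, under toy assumptions that are not from the poster: numerator and denominator draws are Gaussian with hypothetical means and spreads (`mu_a`, `mu_b`, `sd_a`, `sd_b`), and only the correlation `rho` between their noise changes. The marginal behavior of each estimator is identical in both runs, yet the ratio of sample means should be noticeably more accurate when the noise is positively correlated.

```python
import math
import random

def ratio_rmse(rho, n=100, reps=1000, seed=0):
    """RMSE of mean(a) / mean(b) when the noise in a and b has correlation rho."""
    rng = random.Random(seed)
    # Hypothetical toy values: same relative noise in numerator and denominator.
    mu_a, mu_b = 2.0, 1.0
    sd_a, sd_b = 1.0, 0.5
    true_ratio = mu_a / mu_b
    sq_errors = []
    for _ in range(reps):
        sum_a = 0.0
        sum_b = 0.0
        for _ in range(n):
            z1 = rng.gauss(0.0, 1.0)
            z2 = rng.gauss(0.0, 1.0)
            noise_b = z1
            # noise_a has unit variance and correlation rho with noise_b
            noise_a = rho * z1 + math.sqrt(1.0 - rho * rho) * z2
            sum_a += mu_a + sd_a * noise_a
            sum_b += mu_b + sd_b * noise_b
        sq_errors.append((sum_a / sum_b - true_ratio) ** 2)
    return math.sqrt(sum(sq_errors) / len(sq_errors))

rmse_indep = ratio_rmse(rho=0.0)
rmse_corr = ratio_rmse(rho=0.9)
print(f"ratio RMSE, independent num./denom.: {rmse_indep:.4f}")
print(f"ratio RMSE, correlated num./denom.:  {rmse_corr:.4f}")
```

Since the marginals are the same in both runs, any diagnostic computed separately on numerator and denominator would not distinguish the two cases.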

December 11, 2024 at 5:25 PM

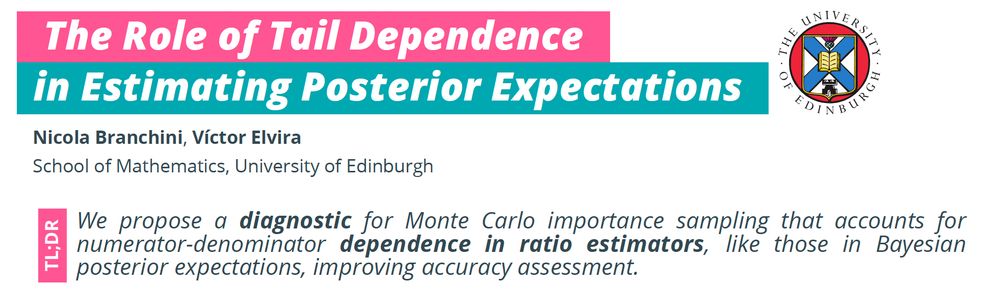

When the target quantity of interest is a ratio (like posterior predictives), we actually have two sets of weights: one for the num. and one for the denom.

The reliability of the two estimators, i.e., of num. and denom., can be *individually* assessed with the k-hat diagnostic of Vehtari et al. (2024, JMLR).
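
A rough sketch of what "assessing each set of weights individually" could look like. Note the swap: this is not the k-hat of Vehtari et al., which fits a generalized Pareto distribution to the largest weights; it uses the simpler Hill estimator of the tail index as a stand-in. The Gaussian target/proposal pairs, the function names, and the tail-size rule are all assumptions made for illustration.

```python
import math
import random

def hill_tail_index(log_weights, tail_size):
    """Hill estimate of the tail index from the `tail_size` largest weights."""
    ordered = sorted(log_weights, reverse=True)
    threshold = ordered[tail_size]  # the (tail_size + 1)-th largest log weight
    return sum(lw - threshold for lw in ordered[:tail_size]) / tail_size

def gaussian_log_weights(sigma_q, n, rng):
    """Log weights (up to a constant) for target N(0, 1), proposal N(0, sigma_q^2)."""
    xs = [rng.gauss(0.0, sigma_q) for _ in range(n)]
    return [-0.5 * x * x + 0.5 * (x / sigma_q) ** 2 for x in xs]

rng = random.Random(1)
n = 5000
# Assumed rule of thumb for how many of the largest weights count as "the tail".
tail_size = math.ceil(min(0.2 * n, 3 * math.sqrt(n)))

# Two hypothetical weight sets standing in for numerator and denominator:
# a proposal wider than the target (bounded weights) and a narrower one
# (heavy-tailed weights).
k_wide = hill_tail_index(gaussian_log_weights(2.0, n, rng), tail_size)
k_narrow = hill_tail_index(gaussian_log_weights(0.6, n, rng), tail_size)
print(f"tail index, wide proposal:   {k_wide:.3f}")
print(f"tail index, narrow proposal: {k_narrow:.3f}")
```

A larger tail index means heavier-tailed weights and a less trustworthy estimator. Each set is checked on its own here, which says nothing about how the two estimators co-vary.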

December 11, 2024 at 5:25 PM

More details: consider the general problem of estimating expectations. Most of these (e.g. in Bayesian inf.) involve an intractable normalizing constant, so ratios of estimators are used in practice.

Intuitively, the reliability of an IS estimator depends on the behavior of the density ratios (weights).
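
To make the "ratio of estimators" concrete, here is a small self-normalized importance sampling sketch. The target, proposal, and test function are toy choices assumed for illustration (an unnormalized standard normal target, a wider normal proposal, and f(x) = x^2); `snis` is a hypothetical helper name, not an API from any library.

```python
import math
import random

def snis(f, log_p_tilde, log_q, sample_q, n, rng):
    """Self-normalized importance sampling estimate of E_p[f(x)].

    p is known only up to a constant, so the estimate is a ratio of two
    Monte Carlo averages built from the same draws from q.
    """
    xs = [sample_q(rng) for _ in range(n)]
    log_w = [log_p_tilde(x) - log_q(x) for x in xs]
    shift = max(log_w)  # subtract the max before exponentiating, for stability
    w = [math.exp(lw - shift) for lw in log_w]
    numerator = sum(wi * f(x) for wi, x in zip(w, xs)) / n
    denominator = sum(w) / n
    return numerator / denominator

# Toy setup (illustrative assumptions): target N(0, 1) without its
# normalizing constant, proposal N(0, 2^2), so that E_p[x^2] = 1.
SIGMA_Q = 2.0

def log_p_tilde(x):
    return -0.5 * x * x

def log_q(x):
    return -0.5 * (x / SIGMA_Q) ** 2 - math.log(SIGMA_Q * math.sqrt(2.0 * math.pi))

def sample_q(rng):
    return rng.gauss(0.0, SIGMA_Q)

rng = random.Random(0)
estimate = snis(lambda x: x * x, log_p_tilde, log_q, sample_q, 20000, rng)
print(f"SNIS estimate of E_p[x^2]: {estimate:.3f} (exact value is 1)")
```

The unknown constant cancels in the ratio, which is why only `log_p_tilde` is needed. Here the weights are bounded because the proposal is wider than the target; a narrower proposal would give heavy-tailed weights and a much less reliable estimate.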

December 11, 2024 at 5:25 PM

Interested in estimating posterior predictives in Bayesian inference? Really want to know if your approximate inference "is working"?

Come to our poster at the NeurIPS BDU workshop on Saturday - see TL;DR below.

December 11, 2024 at 5:25 PM

I usually don't see these things discussed much. I guess it's not that interesting to look into anything other than the individual variances per dimension (or their average / sum).

November 21, 2024 at 11:37 AM

A bonus of the nice notes by François Portier:

explicit effect of dimension on the error!

@nolovedeeplearning.bsky.social

November 20, 2024 at 12:31 PM

The early reference from 1966 above explicitly starts calling it "adaptive" and mentions that the idea had already appeared in 1954 but did not receive much attention.

November 20, 2024 at 12:09 PM

- artowen.su.domains/mc/Ch-var-ad...

- the notes on Monte Carlo here: sites.google.com/site/fportie...

- arxiv.org/abs/2102.05407

All have different (complementary) material. The basic concept is super general (maybe too general, I suspect @nolovedeeplearning.bsky.social may argue :D).

November 20, 2024 at 12:06 PM

November 20, 2024 at 11:21 AM