🔗 Yes! Check out our paper: arxiv.org/abs/2509.02815

Check out our work on Embodiment Scaling Laws @CoRL2025

We investigate cross-embodiment learning as the next axis of scaling for truly generalist policies 📈

🔗 All details: embodiment-scaling-laws.github.io

We also explored architectures like Universal Neural Functionals (UNF) and action-based representations ("Probing").

And yes, our scaled EPVFs are competitive with PPO and SAC in final performance.
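
To illustrate why an action-based ("Probing") representation can help, here is a minimal NumPy sketch. It is not the authors' implementation. It assumes a hypothetical one-hidden-layer tanh policy and a fixed set of random probe states, and it embeds the policy by concatenating its actions on those states. Permuting the hidden units changes the raw parameter vector θ. It leaves the policy's behavior unchanged, and therefore also the probe embedding. All function names and sizes below are illustrative.

```python
import numpy as np

def mlp_policy(params, states):
    """Hypothetical 1-hidden-layer tanh policy; states has shape (K, obs_dim)."""
    hidden = np.tanh(states @ params["W1"].T + params["b1"])
    return np.tanh(hidden @ params["W2"].T + params["b2"])

def flatten(params):
    """Raw parameter vector θ, as a critic over flat parameters would see it."""
    return np.concatenate([params[k].ravel() for k in ("W1", "b1", "W2", "b2")])

def probe_embedding(params, probe_states):
    """Action-based representation: the policy's outputs on K probe states."""
    return mlp_policy(params, probe_states).ravel()

def permute_hidden(params, perm):
    """Reorder hidden units; the network still computes exactly the same function."""
    return {"W1": params["W1"][perm], "b1": params["b1"][perm],
            "W2": params["W2"][:, perm], "b2": params["b2"]}

rng = np.random.default_rng(0)
obs_dim, hidden_dim, act_dim, num_probes = 6, 16, 3, 32
params = {"W1": rng.normal(size=(hidden_dim, obs_dim)), "b1": rng.normal(size=hidden_dim),
          "W2": rng.normal(size=(act_dim, hidden_dim)), "b2": rng.normal(size=act_dim)}
probe_states = rng.normal(size=(num_probes, obs_dim))  # kept fixed here (an assumption)
permuted = permute_hidden(params, np.roll(np.arange(hidden_dim), 1))  # non-identity permutation
```

A critic fed the probe embedding treats the two permutation-equivalent policies as identical. A critic fed the flattened θ would have to learn that symmetry from data. Weight-space architectures such as UNF are designed to respect these symmetries directly.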

Key ingredients for stability and performance are weight clipping and uniform noise scaled to the parameter magnitudes.

Our ablation studies show just how critical these components are. Without them, performance collapses.
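
Here is a minimal NumPy sketch of these two ingredients, under stated assumptions. Each parameter is perturbed by uniform noise in [-σ, σ], multiplied by its own magnitude |θ_i|. A small floor on that magnitude lets zero-valued weights still be explored. Every perturbed parameter is then clipped to [-c, c]. The helper name, σ, c, and the floor are hypothetical choices, not values from the paper.

```python
import numpy as np

def perturb_and_clip(theta, num_policies, sigma=0.1, clip=1.0, min_scale=1e-3, rng=None):
    """Sample num_policies perturbed copies of a flat parameter vector theta.

    Hypothetical helper: noise ~ U(-sigma, sigma), scaled elementwise by |theta|
    (floored at min_scale), followed by weight clipping to [-clip, clip].
    """
    rng = np.random.default_rng() if rng is None else rng
    scale = np.maximum(np.abs(theta), min_scale)            # parameter-magnitude scaling
    noise = rng.uniform(-sigma, sigma, size=(num_policies, theta.size))
    perturbed = theta[None, :] + noise * scale[None, :]
    return np.clip(perturbed, -clip, clip)                   # weight clipping

rng = np.random.default_rng(0)
theta = np.array([0.0, 0.05, -0.5, 0.99, 2.0])  # last entry lies outside the clip range
batch = perturb_and_clip(theta, num_policies=4000, rng=rng)
```

Small weights receive proportionally small perturbations, and no sampled parameter leaves [-c, c].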

We see strong scaling effects when using MJX to roll out up to 4000 differently perturbed policies in parallel.

This explores the policy space effectively, and the large batches drastically reduce the variance of the resulting gradients.
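
The variance argument can be checked on a toy problem. The sketch below illustrates the effect and is not the paper's setup. A hypothetical noisy quadratic return stands in for batched MJX rollouts. The gradient at θ is estimated by fitting a local linear value model V(θ) ≈ wᵀθ + b to the perturbed policies' returns by least squares. Repeating the estimate shows its variance shrinking roughly as 1/N with batch size N.

```python
import numpy as np

def toy_returns(params, rng, noise=0.5):
    """Hypothetical stand-in for a batched rollout: one noisy return per policy row."""
    return -np.sum((params - 0.5) ** 2, axis=1) + noise * rng.standard_normal(len(params))

def gradient_estimate(theta, batch, rng, sigma=0.1):
    """Estimate dV/dθ from `batch` perturbed policies via a local linear fit."""
    params = theta + rng.uniform(-sigma, sigma, size=(batch, theta.size))
    returns = toy_returns(params, rng)
    design = np.hstack([params, np.ones((batch, 1))])   # columns [θ, 1] -> coefficients w, b
    coef, *_ = np.linalg.lstsq(design, returns, rcond=None)
    return coef[:-1]                                    # w approximates the gradient at θ

def estimate_variance(batch, trials=50, dim=4, seed=0):
    """Mean per-coordinate variance (and mean) of the gradient estimate over repeated trials."""
    rng = np.random.default_rng(seed)
    theta = np.zeros(dim)
    grads = np.stack([gradient_estimate(theta, batch, rng) for _ in range(trials)])
    return grads.var(axis=0).mean(), grads.mean(axis=0)
```

At θ = 0 the true gradient of this toy return is 1 in every coordinate. Comparing `estimate_variance(64)` with `estimate_variance(4000)` shows the spread of the estimate collapsing as the batch grows.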

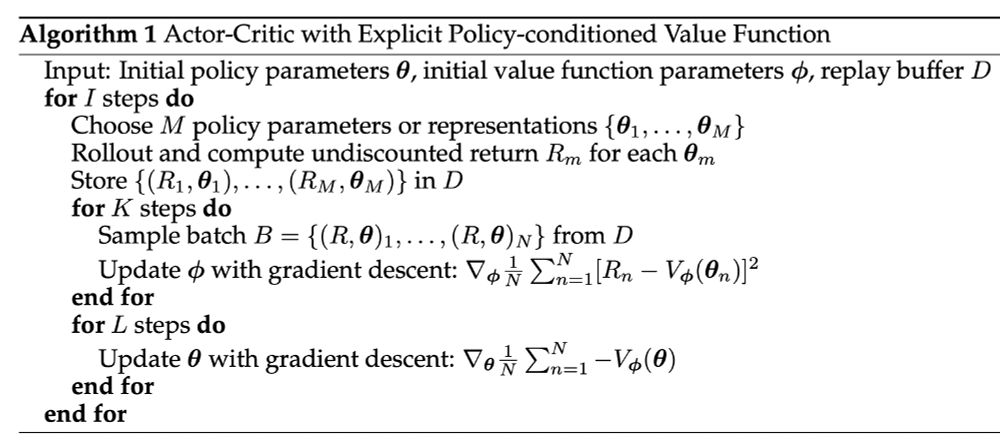

This unlocks fully off-policy learning and parameter-space exploration using data from any policy, and it leads to probably the simplest DRL algorithm imaginable:
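
Here is a minimal sketch of such a loop, under heavy simplifying assumptions:

- A hypothetical toy return function replaces the MJX rollouts.
- Plain uniform noise replaces the magnitude-scaled noise.
- The value function V(θ) is a diagonal-quadratic model fitted by least squares, not a neural network.

Every (θ, return) pair ever collected stays in the buffer and is reused for fitting, so the critic learns from off-policy data. The policy is then improved by gradient ascent on V, followed by weight clipping. All names and hyperparameters are illustrative.

```python
import numpy as np

TARGET = 0.5  # optimum of the hypothetical toy task

def toy_rollouts(params, rng, noise=0.05):
    """Stand-in for parallel rollouts: one noisy return per perturbed policy."""
    return -np.sum((params - TARGET) ** 2, axis=1) + noise * rng.standard_normal(len(params))

def features(params):
    """Features [1, θ, θ²] for a hypothetical diagonal-quadratic critic V(θ)."""
    return np.hstack([np.ones((len(params), 1)), params, params ** 2])

def critic_gradient(coef, theta):
    """∇θ V(θ) for V(θ) = b + wᵀθ + Σ q_i θ_i²."""
    dim = theta.size
    w, q = coef[1:1 + dim], coef[1 + dim:]
    return w + 2.0 * q * theta

def train(dim=8, iters=30, batch=256, sigma=0.1, lr=0.1, clip=1.0, seed=0):
    rng = np.random.default_rng(seed)
    theta = np.zeros(dim)
    buffer_params, buffer_returns = [], []
    for _ in range(iters):
        # 1) Explore in parameter space and evaluate all perturbed policies.
        params = theta + rng.uniform(-sigma, sigma, size=(batch, dim))
        buffer_params.append(params)
        buffer_returns.append(toy_rollouts(params, rng))
        # 2) Fit V(θ) on all data collected so far (off-policy reuse).
        coef, *_ = np.linalg.lstsq(features(np.vstack(buffer_params)),
                                   np.concatenate(buffer_returns), rcond=None)
        # 3) Gradient ascent on V(θ), then weight clipping.
        theta = np.clip(theta + lr * critic_gradient(coef, theta), -clip, clip)
    return theta
```

On this toy task, `train()` drives every coordinate of θ toward the optimum at 0.5.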

This unlocks fully off-policy learning and policy parameter space exploration using any policy data and leads to the probably most simple DRL algorithm one can imagine:

Imagine a value function that takes the policy's parameters θ directly as input: V(θ).

This allows for direct, gradient-based policy updates:
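
Written out, one common way to set this up is to fit a critic V_φ to observed returns and then ascend its gradient with respect to the policy parameters. This assumes the usual regression objective for the critic. The symbols φ, α, R_i, and the buffer 𝒟 are notation introduced here, not taken from the thread:

$$
\min_{\phi} \sum_{(\theta_i, R_i) \in \mathcal{D}} \big( V_\phi(\theta_i) - R_i \big)^2,
\qquad
\theta \leftarrow \theta + \alpha \, \nabla_\theta V_\phi(\theta)
$$

Here R_i is the return observed when running the policy with parameters θ_i. Any stored (θ_i, R_i) pair can therefore be reused, regardless of which policy is currently being optimized.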

We're showing how to make Explicit Policy-conditioned Value Functions V(θ) (originating from Faccio & Schmidhuber) work for more complex control tasks. The secret? Massive scaling!

We train an omnidirectional locomotion policy directly on a real quadruped in just a few minutes 🚀

Top speeds of 0.85 m/s, two different control approaches, indoor and outdoor experiments, and more! 🤖🏃‍♂️