🔗 Yes! Check out our paper: arxiv.org/abs/2509.02815

Check out our work on Embodiment Scaling Laws @CoRL2025

We investigate cross-embodiment learning as the next axis of scaling for truly generalist policies 📈

🔗 All details: embodiment-scaling-laws.github.io

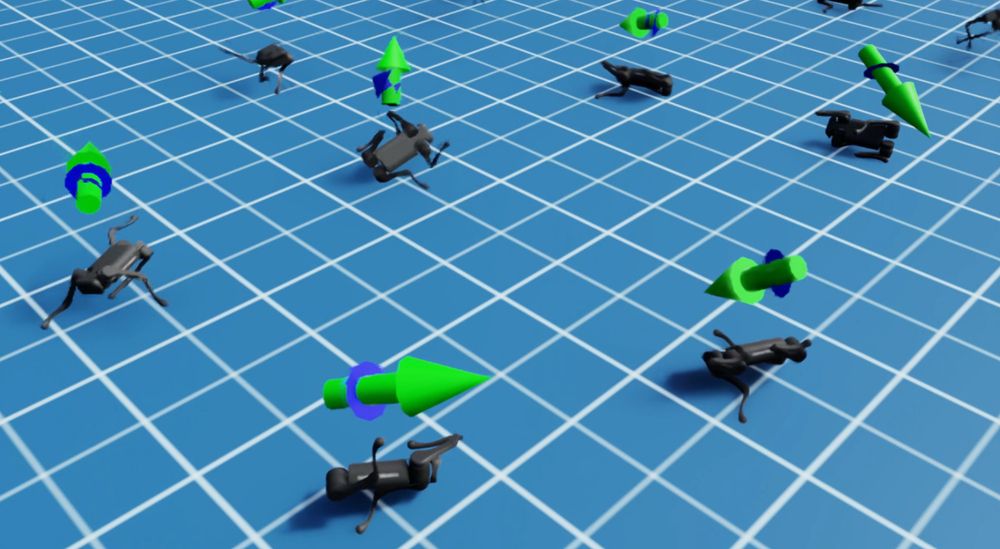

Part II of my blog series "Getting SAC to Work on a Massive Parallel Simulator" is out!

I've included everything I tried that didn't work (and why JAX PPO was different from PyTorch PPO)

araffin.github.io/post/tune-sa...

We're showing how to make Explicit Policy-conditioned Value Functions V(θ) (originating from Faccio & Schmidhuber) work for more complex control tasks. The secret? Massive scaling!

We train an omnidirectional locomotion policy directly on a real quadruped in just a few minutes 🚀

Top speeds of 0.85 m/s, two different control approaches, indoor and outdoor experiments, and more! 🤖🏃♂️

New blog post: Getting SAC to Work on a Massive Parallel Simulator (part I)

araffin.github.io/post/sac-mas...
