People working at universities are pushed so incredibly hard to ensure that every study is a breakthrough that they just...lie. All the time. Probably without even realizing it.

www.standard.co.uk/news/tech/im...

OpenAI did not disclose this in the video. Sam said they didn’t target the test.

Never trust a staged demo.

Never trust a product you haven’t tried.

Never trust OpenAI.

Thousands?

(It’s already been hundreds.)

I wrote about how journals seemingly aren't enforcing their AI policies, according to a new study: www.chronicle.com/article/scho...

The Hidden Autopilot Data That Reveals Why Teslas Crash | WSJ

“Computer vision is such a deeply flawed technology” – Missy Cummings, fighter pilot and director of George Mason University's Autonomy and Robotics Center

YouTube: per.ax/aptesla

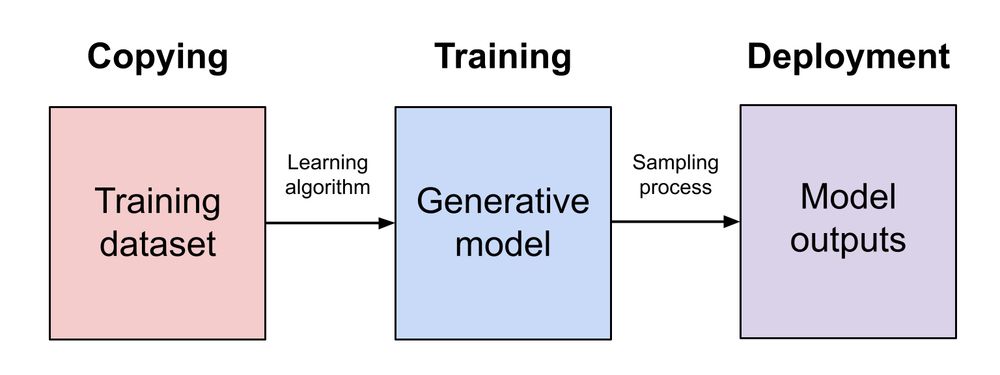

suchir.net/fair_use.html

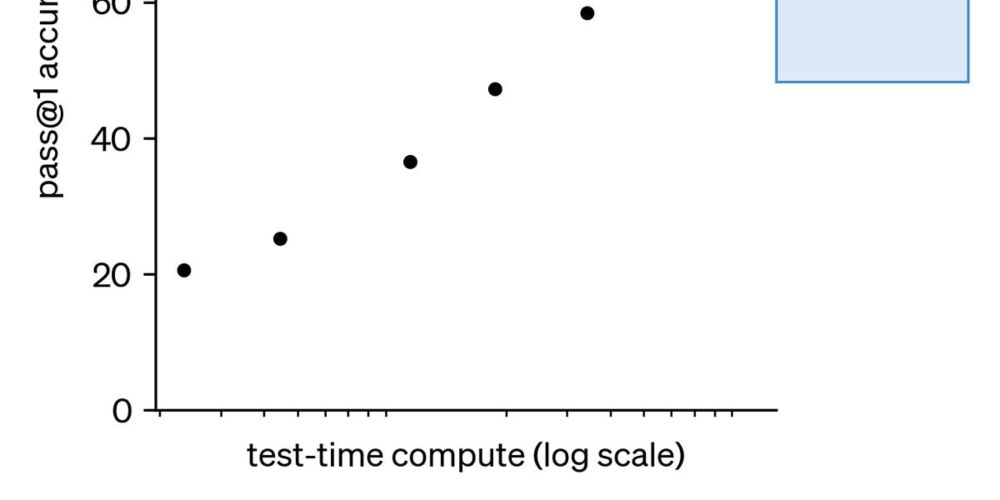

Evidence of productivity gains is mixed - yet hypey takes continue to dominate in the media.

dais.ca/reports/wait...

Evidence of productivity gains is mixed - yet hypey takes continue to dominate in the media.