If you’ve worked on something similar and are happy to share a code template or know of good resources, we’d really appreciate it! 🙂

"Hierarchical dynamic coding coordinates speech comprehension in the brain"

with dream team @alecmarantz.bsky.social, @davidpoeppel.bsky.social, @jeanremiking.bsky.social

Summary 👇

1/8

Out now in @plos.org Computational Biology

journals.plos.org/ploscompbiol...

w/ @giuliamz.bsky.social et al. @cimecunitrento.bsky.social

🧠🧪 #psychscisky #neuroskyence

TL;DR 🧵👇

Vital for understanding language disorders & improving diagnostics.👇

www.ru.nl/en/research/...

www.tandfonline.com/toc/hrls20/c...

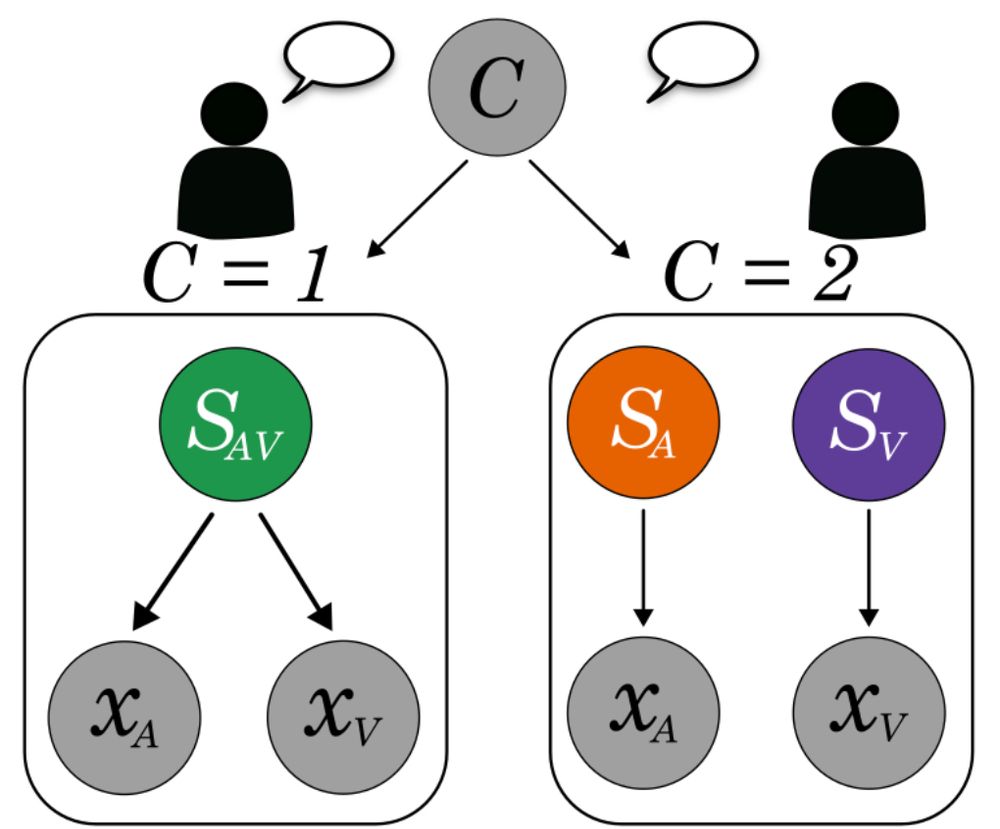

We take an ecological and multimodal neuroscience approach to study mutual prediction and social coordination when learning with others.

It took 5 full years for this one! Full open-access pre-print: osf.io/preprints/ps...

See you at ISGS11 in Hong Kong 🌟

Don’t miss it — join us as we celebrate outstanding student research! ✨

Keep an eye on our website to stay in the loop and catch all the updates www.isgs10.nl/home

Read more: markdingemanse.net/futures/news...

#linguistics #interaction #sts #emca #hci

www.ru.nl/en/working-a...

Iconic gestures are produced before the associated words and support prediction, but how does this work in children learning words?

Check out our new paper led by @marinewang.bsky.social @eddonnellan.bsky.social: osf.io/preprints/ps...

doi.org/10.1177/09567976251331041

openletter.earth/against-lang...

www.mpi.nl/career-educa...

doi.org/10.1080/0163853X.2025.2467605

doi.org/10.1080/0163853X.2024.2413314

github.com/ShoAkamine/w...

doi.org/10.1177/09567976251331041

Read it here in Psychological Science 👉 doi.org/10.1177/0956..., with @lindadrijvers.bsky.social and @judithholler.bsky.social
