ARR will now fully adopt the LaTeX template to streamline formatting and reduce review workload.

Starting March 2026, submissions using the MS Word template will be desk-rejected.

Check details here: aclrollingreview.org/discontinuat...

#ARR #NLProc

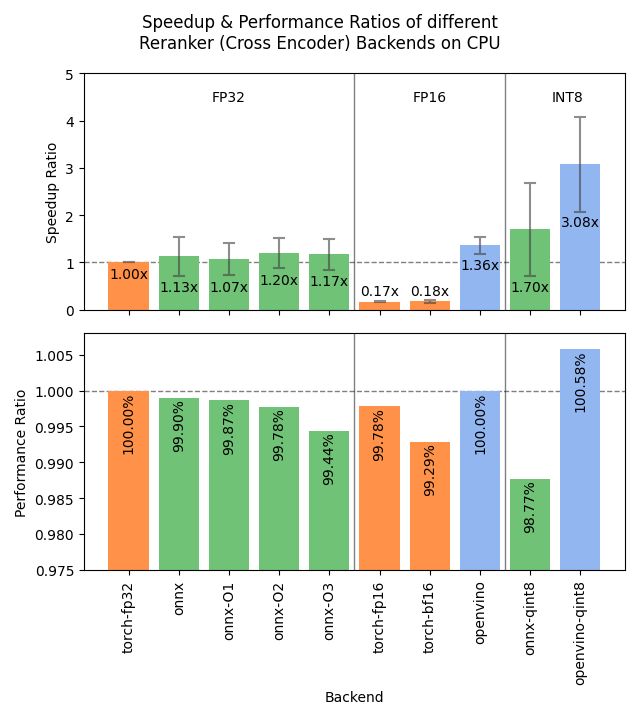

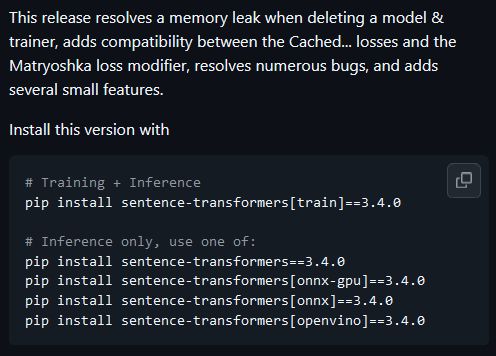

Their blogpost covers all changes, including easier evaluation, multimodal support, rerankers, new interfaces, documentation, dataset statistics, a migration guide, etc.

🧵

#MLSky

If you intend to commit your paper to ACL 2026 and require an invitation letter for visa purposes, please fill out the visa request form as soon as possible.

(docs.google.com/forms/d/e/1F...)

#ARR #ACL #NLProc

tudatalib.ulb.tu-darmstadt.de/handle/tudat...

📊 𝗡𝗲𝘄𝗹𝘆 𝗮𝗱𝗱𝗲𝗱 𝗔𝗖𝗟 𝟮𝟬𝟮𝟱 𝗱𝗮𝘁𝗮:

✅ 𝟮𝗸 papers

✅ 𝟮𝗸 reviews

✅ 𝟴𝟰𝟵 meta-reviews

✅ 𝟭.𝟱𝗸 papers with rebuttals

(1/🧵)

Details in 🧵

I am serving as an AC for #ICML2025 and am seeking emergency reviewers for two submissions.

Are you an expert in Knowledge Distillation or AI4Science?

If so, send me a DM with your Google Scholar profile and OpenReview profile.

Thank you!

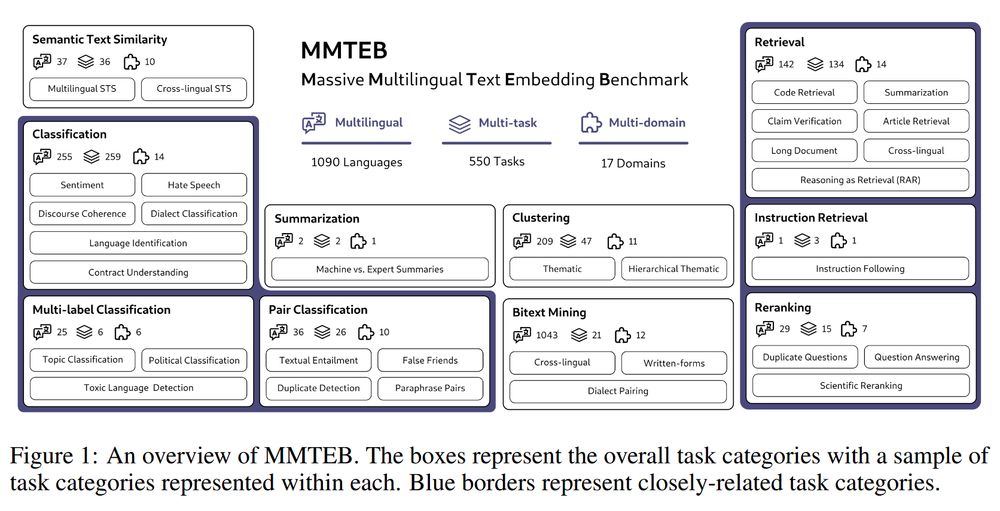

It's a huge collaboration between 56 universities, labs, and organizations, resulting in a massive benchmark of 1000+ languages, 500+ tasks, and a dozen+ domains.

Details in 🧵

IBM discovered that early cross-encoder layers can produce effective sentence embeddings, enabling 5.15x faster inference while maintaining accuracy comparable to full dual encoders.

📝 arxiv.org/abs/2502.03552

Use DeepSeek to generate high-quality training data, then distil that knowledge into ModernBERT for fast, efficient classification.

New blog post: danielvanstrien.xyz/posts/2025/d...

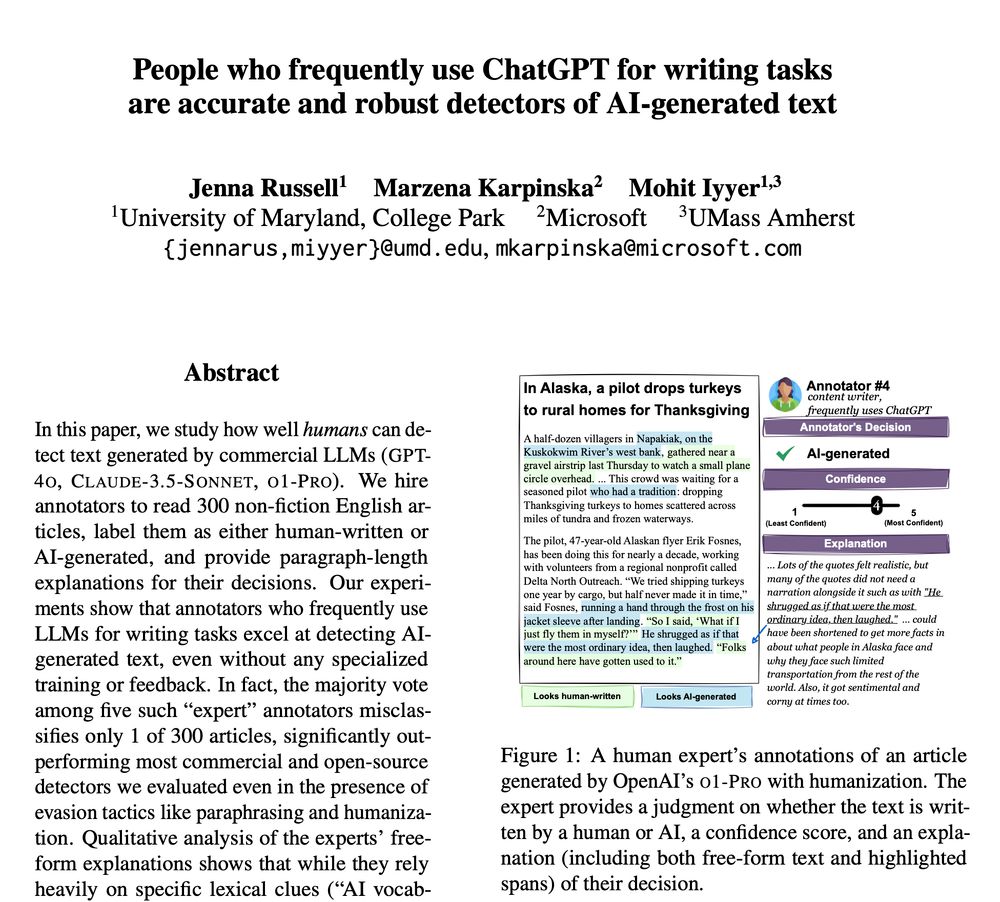

Turns out that while the general population is unreliable, those who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy 🎯

www.nytimes.com/2025/01/23/t...

Details in 🧵

Details on submissions 👉 sites.google.com/view/repl4nl...

⏰ Deadline January 30

Provides a comprehensive analysis of adopting LLMs as embedding models, examining both zero-shot prompting and tuning strategies to derive text embeddings that are competitive with traditional models.

📝 arxiv.org/abs/2412.12591

gift link www.nytimes.com/2024/12/23/s...

Glad to see ModernBERT recently brought something new to the field. So don't count BERT as GOFAI yet.

www.yitay.net/blog/model-a...

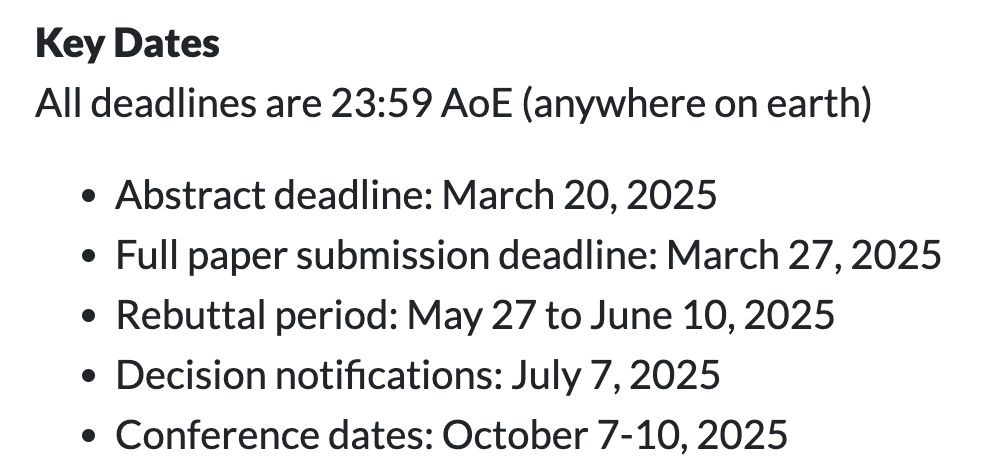

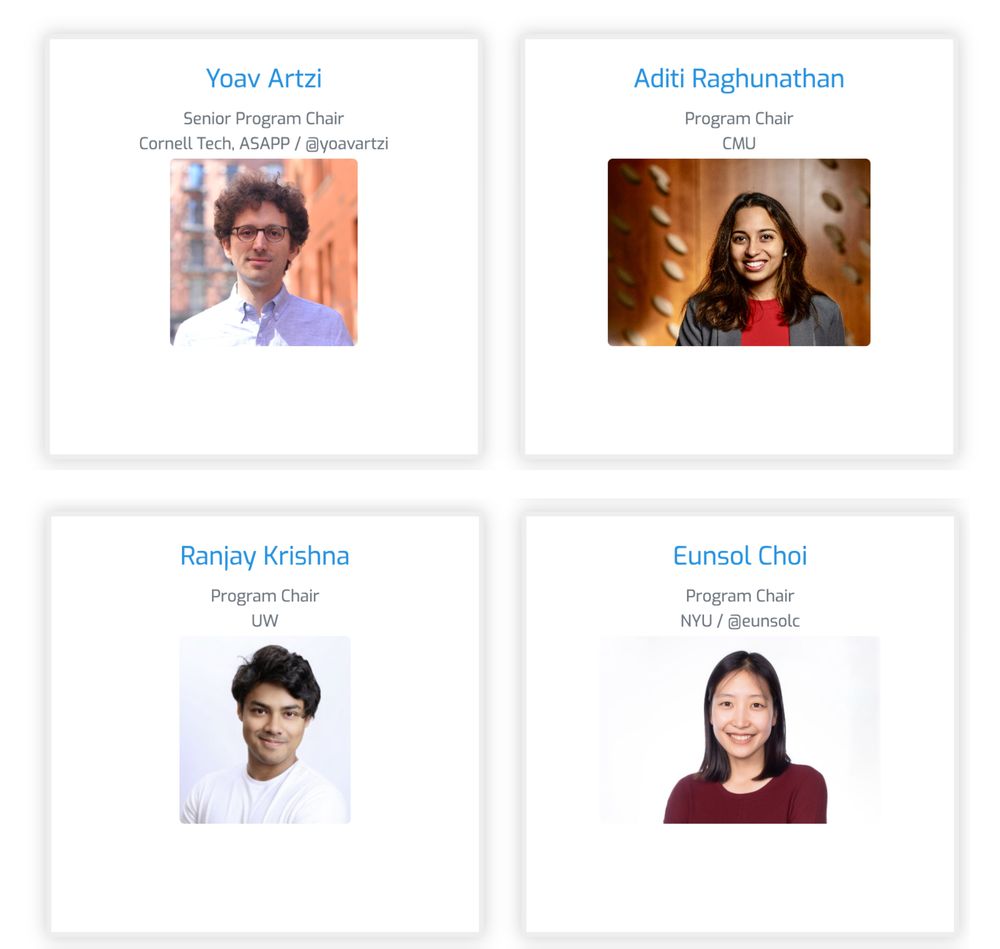

colmweb.org/cfp.html

And excited to announce the COLM 2025 program chairs @yoavartzi.com @eunsol.bsky.social @ranjaykrishna.bsky.social and @adtraghunathan.bsky.social

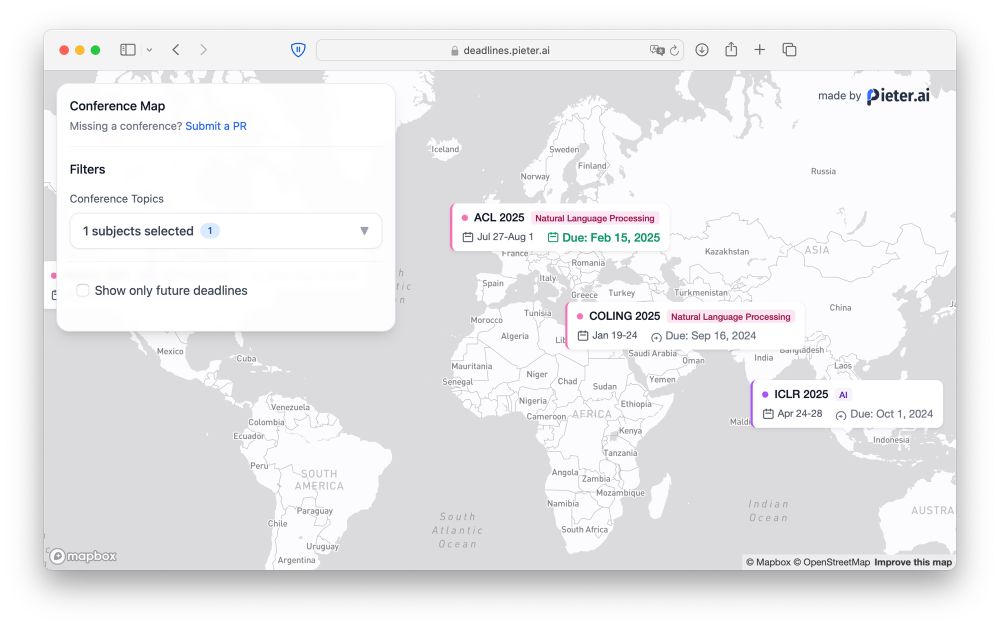

🗺️ Check out my latest side-project: deadlines.pieter.ai

Additional details from the author linked below.

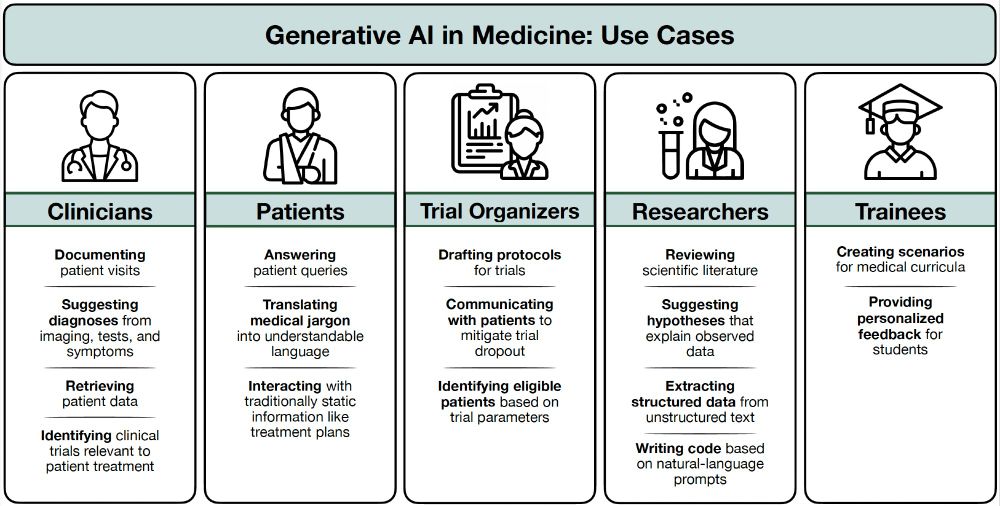

🩺🖥️

Direct link: arxiv.org/abs/2412.10337

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

www.arxiv.org/abs/2412.12119
