MSFT: I help Phi understand longer contexts and fine-tune LLMs for domain-specific knowledge

(background yellow-rumped warbler from @carlbergstrom.com)

@austeni.bsky.social explains how Robert Prevost became Leo XIV:

www.commonwealmagazine.org/ivereigh-pre...

(still open to bug reports)

In this blog post, let's discover common formats for storing an AI model.

huggingface.co/blog/ngxson/...

A book to learn all about 5D parallelism, ZeRO, CUDA kernels, and how/why to overlap compute & comms, with theory, motivation, interactive plots and 4000+ experiments!

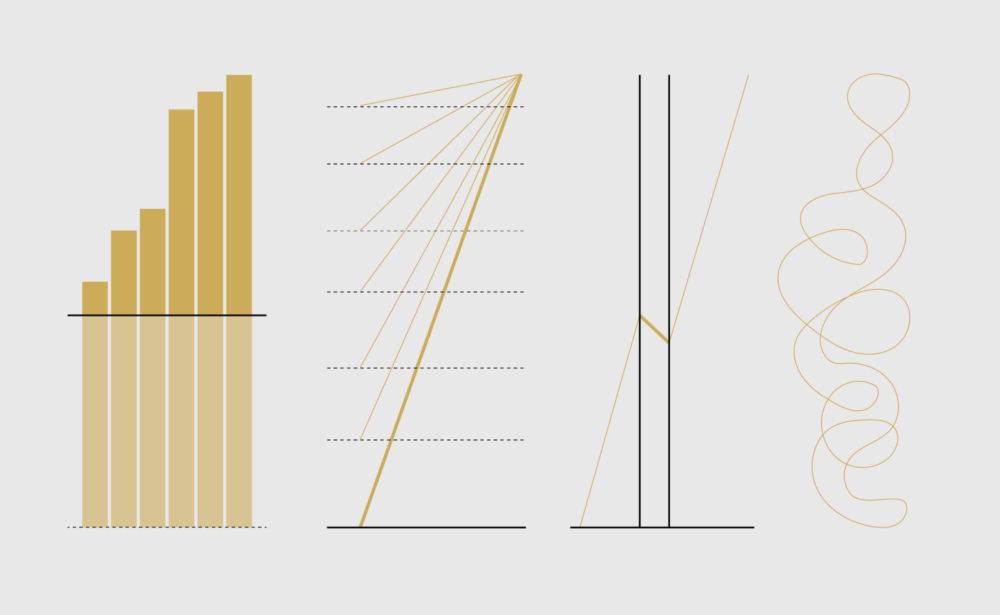

flowingdata.com/projects/dis...

All from better annealing and post-training. Didn’t need to redo pretraining. Goes to show how much potential these models have!

new instruct model: huggingface.co/allenai/OLMo...

He writes “Power distorts truth, so God plants and develops it at the edge, where the power-hungry least expect it,” inviting us to the “edge of the inside.” tinyurl.com/46z9574r

jax-ml.github.io/scaling-book/

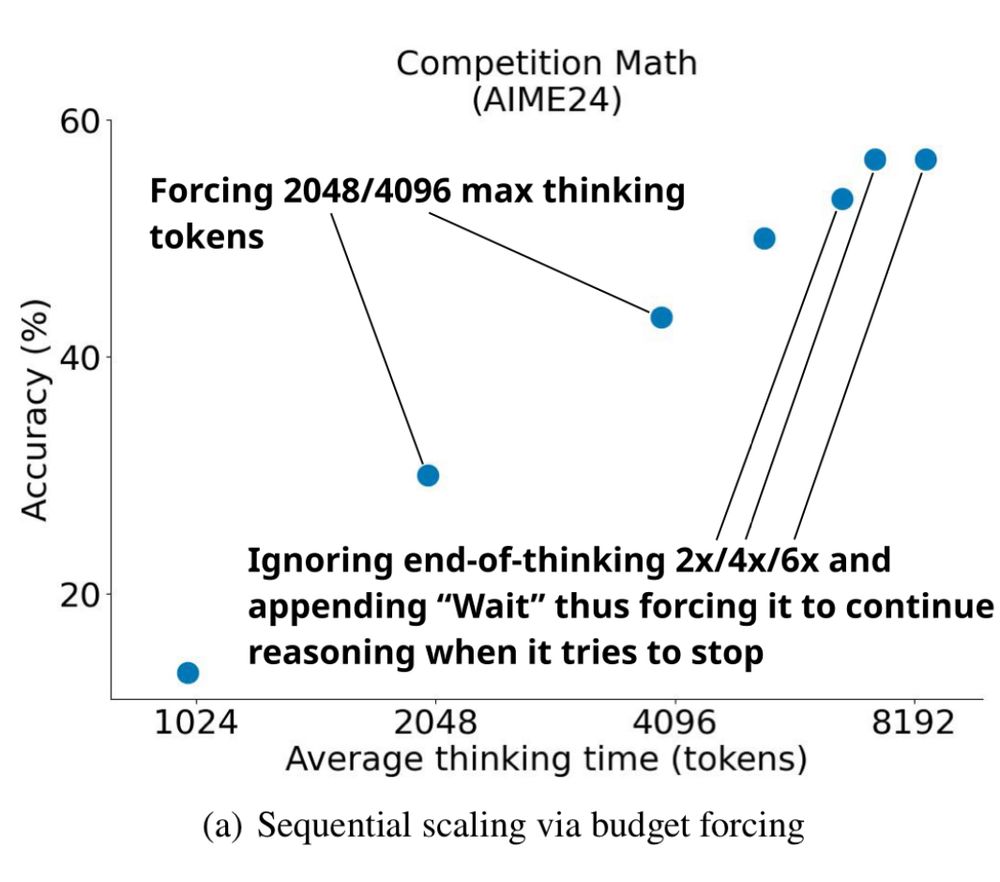

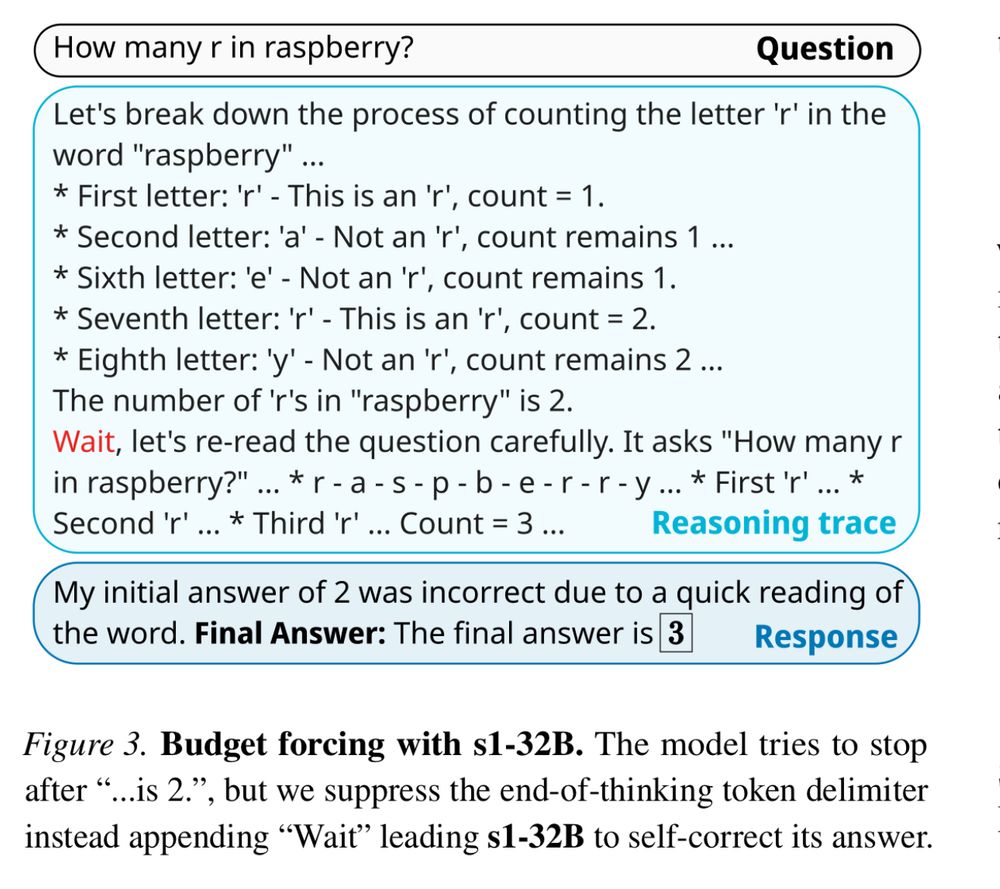

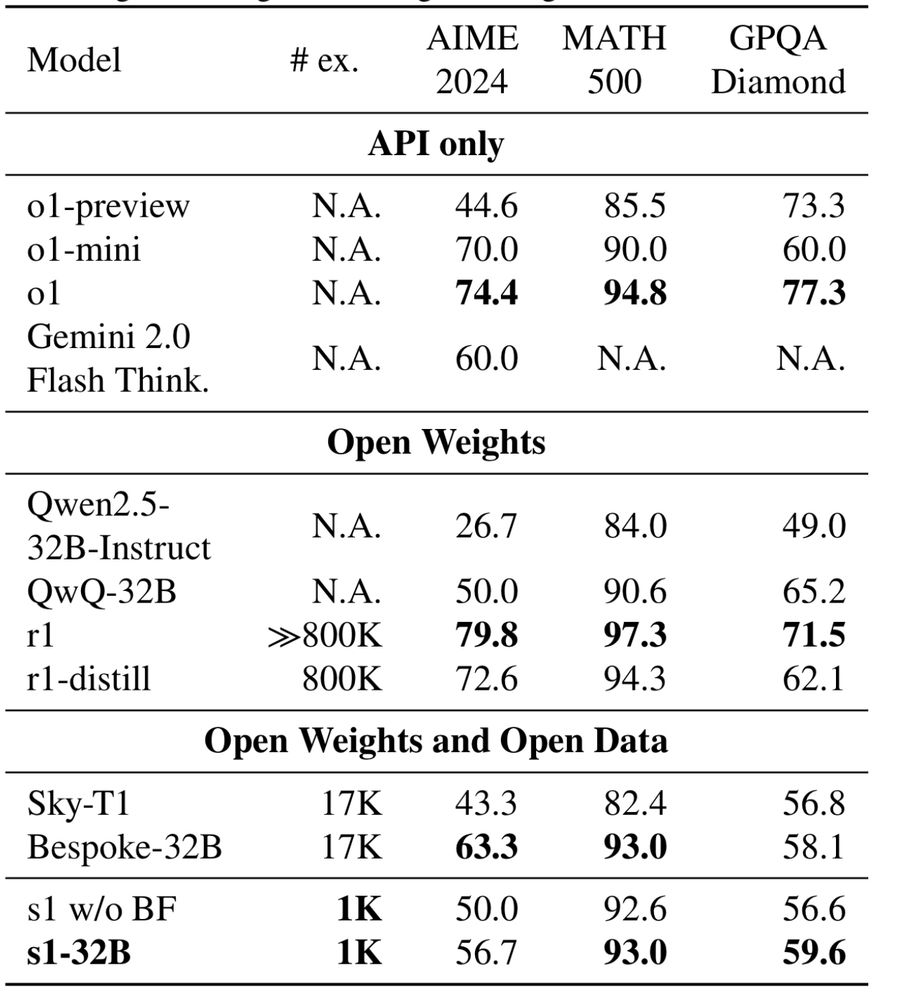

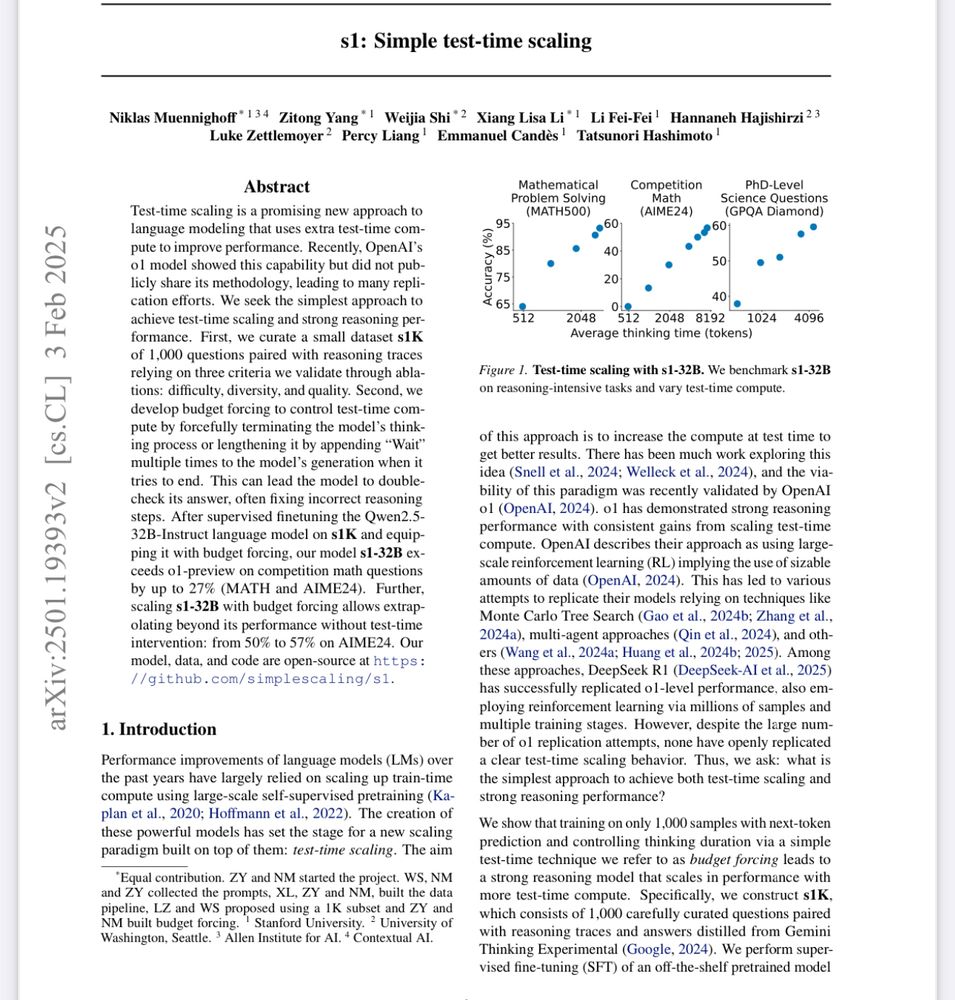

They used just 1,000 carefully curated reasoning examples & a trick where if the model tries to stop thinking, they append "Wait" to force it to continue. Near o1 at math. arxiv.org/pdf/2501.19393
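The "Wait" trick (the paper calls it budget forcing) is essentially a small control loop around generation. The sketch below is only an illustration, not the paper's code: `generate`, `toy_generate`, `num_waits`, and the `</think>` end-of-thinking delimiter are hypothetical stand-ins, and a real setup would call an actual LLM.

```python
from typing import Callable

# Assumption: the model marks the end of its reasoning with this delimiter.
END_THINK = "</think>"


def budget_forced_reasoning(
    generate: Callable[[str], str], prompt: str, num_waits: int
) -> str:
    """Suppress the model's first `num_waits` attempts to stop thinking.

    `generate` is a hypothetical callable that returns the model's continuation
    of the given context, ending with END_THINK when it decides to stop.
    """
    text = prompt
    for _ in range(num_waits):
        chunk = generate(text)
        # Strip the end-of-thinking delimiter (if present) so reasoning stays open...
        if chunk.endswith(END_THINK):
            chunk = chunk[: -len(END_THINK)]
        # ...and append "Wait" to nudge the model into continuing.
        text += chunk + " Wait"
    # After the forced extensions, let the model stop on its own.
    return text + generate(text)


def toy_generate(context: str) -> str:
    # Hypothetical stand-in for an LLM: emits one numbered step, then tries to stop.
    step = context.count("step") + 1
    return f" step {step}." + END_THINK


print(budget_forced_reasoning(toy_generate, "Q:", num_waits=2))
# Q: step 1. Wait step 2. Wait step 3.</think>
```

Raising `num_waits` lengthens the reasoning trace, which is how the trick trades extra test-time compute for accuracy; the toy generator only demonstrates the control flow.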

"we introduce Mini Worldlit, a manually curated dataset of 1,192 works of contemporary fiction from 13 countries, representing nine languages"

By @andrewpiper.bsky.social, @dbamman.bsky.social, Christina Han, Jens Bjerring-Hansen, @hoytlong.bsky.social, et al.

I somehow got into an argument last week with someone who insisted that all models are industrial black boxes... and I wish I'd had this on hand.

> Link to all models, datasets, demos huggingface.co/collections/...

> Text-readable version is here huggingface.co/posts/merve/...

I did not know Felix Hill and I am sorry for those who did.

This story is perhaps a reminder for students, postdocs, founders, and researchers to take care of their well-being.

medium.com/@felixhill/2...

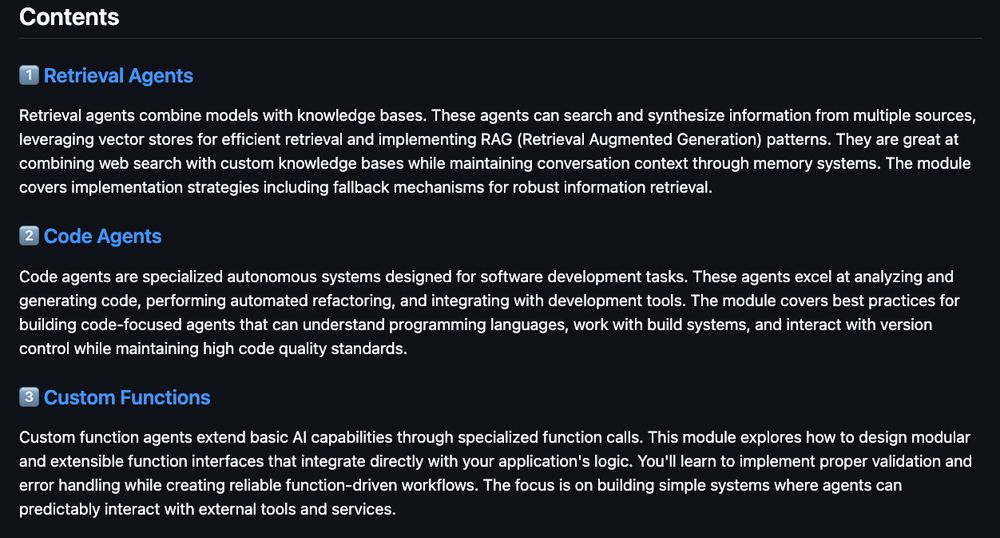

- Code agents

- Retrieval agents

- Custom functional

If you're building agent applications, this course should help.

www.soultravelling.in/blog/know-al...

So, here you go

“For those who believe, no explanation is necessary. For those who do not believe, no explanation is possible.”

"The outward adornment of the body should be a reflection of the inner virtue of the soul."

St. Thomas Aquinas' teachings in the Summa Theologica

Let's take a trip down memory lane!

[1/N]
