🎓PhD from McGill University + Mila

🙇‍♂️I study Multimodal LLMs, Vision-Language Alignment, and LLM Interpretability, and I’m passionate about ML Reproducibility (@reproml.org)

🌎https://koustuvsinha.com/

Please consider applying or sharing with colleagues: metacareers.com/jobs/2223953961352324

We ask 🤔

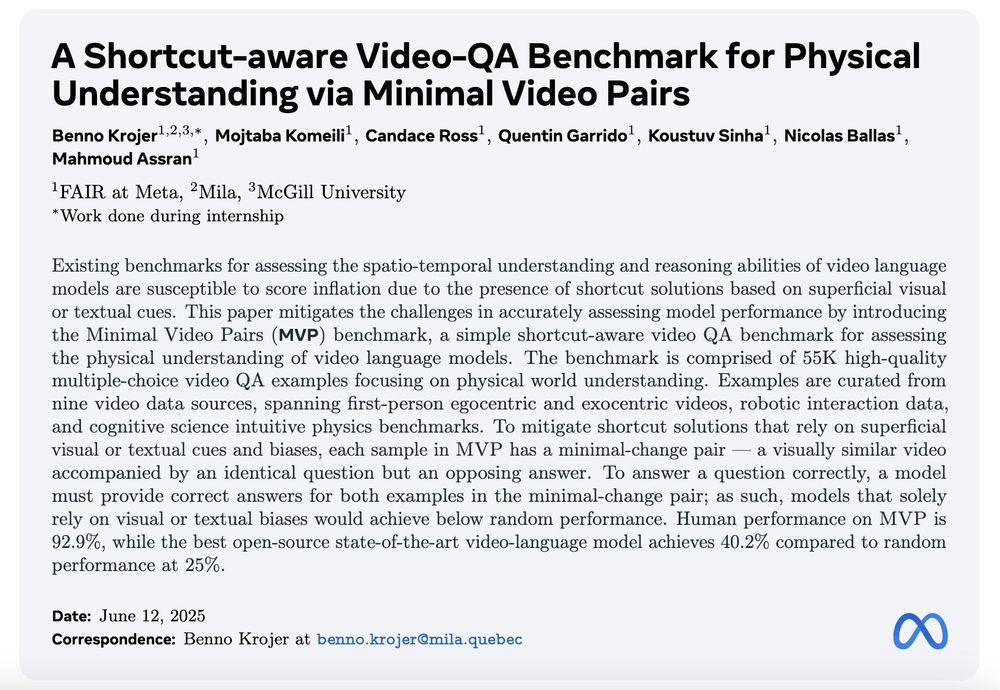

What subtle shortcuts are VideoLLMs taking on spatio-temporal questions?

And how can we instead curate shortcut-robust examples at a large scale?

We release: MVPBench

Details 👇🔬

It’s not just great pedagogical support; it also presents a wealth of unprecedented data and experiments in a systematic way for the first time.

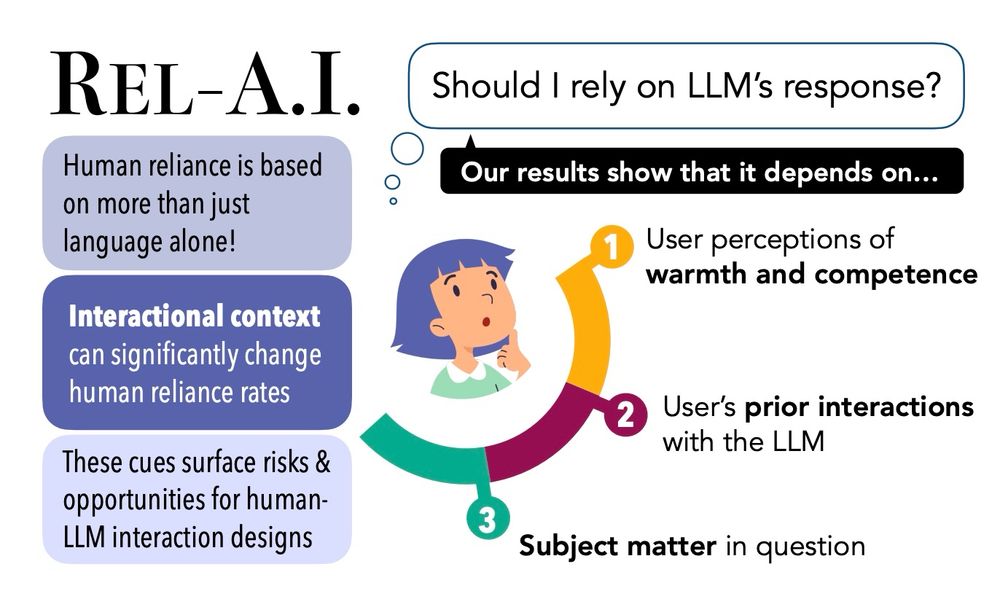

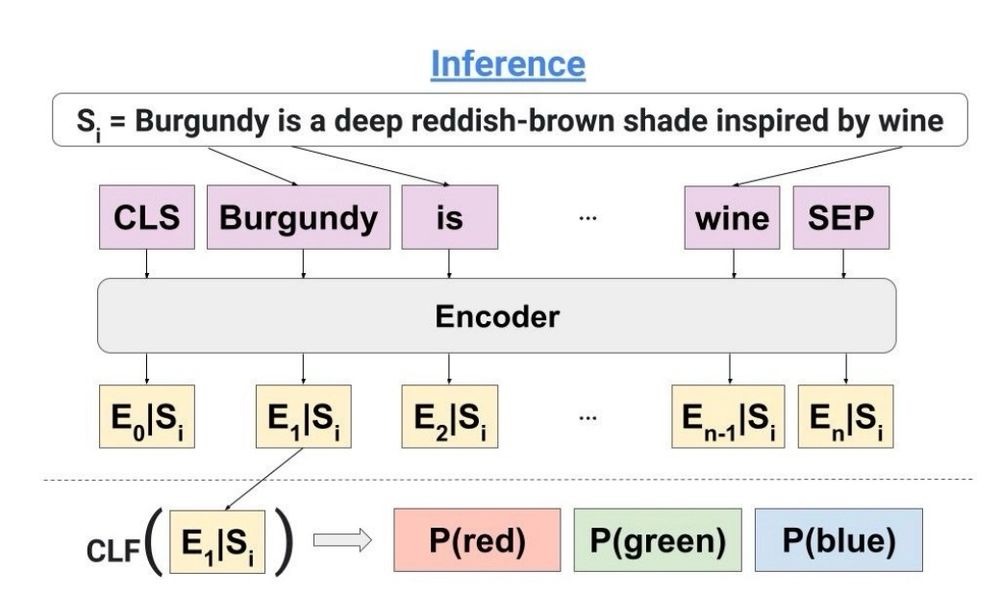

The first reveals how human over-reliance can be exacerbated by LLM friendliness. The second presents a novel computational method for concept tracing. Check them out!

arxiv.org/pdf/2407.07950

arxiv.org/pdf/2502.05704

His last blog post on the stress of working in AI is very poignant. Apart from the emptiness of working mostly to make billionaires even richer, there's the intellectual emptiness of 'scale is all you need'.

I did not know Felix Hill and I am sorry for those who did.

This story is perhaps a reminder for students, postdocs, founders and researchers to take care of their well-being.

medium.com/@felixhill/2...

- LLMs can be trained to generate visual embeddings!!

- VQA data appears to help a lot in generation!

- Better understanding = better generation!

All credit to @hannahrosekirk.bsky.social, A. Whitefield, P. Röttger, A. M. Bean, K. Margatina, R. Mosquera-Gomez, J. Ciro, @maxbartolo.bsky.social, H. He, B. Vidgen, S. Hale

Catch Hannah tomorrow at neurips.cc/virtual/2024/poster/97804

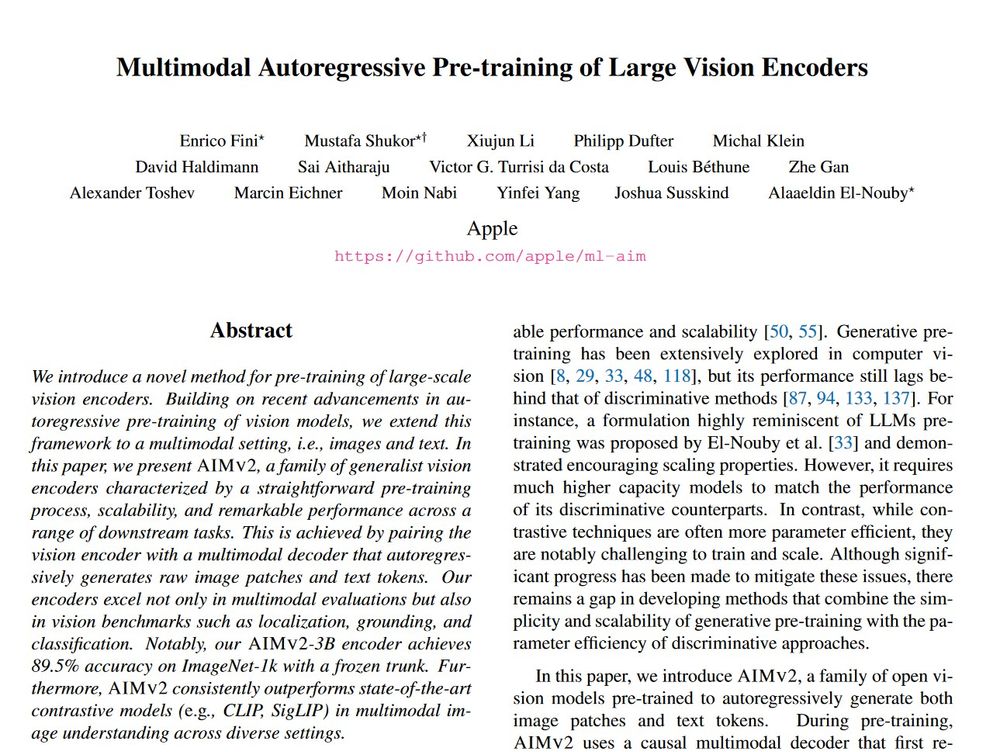

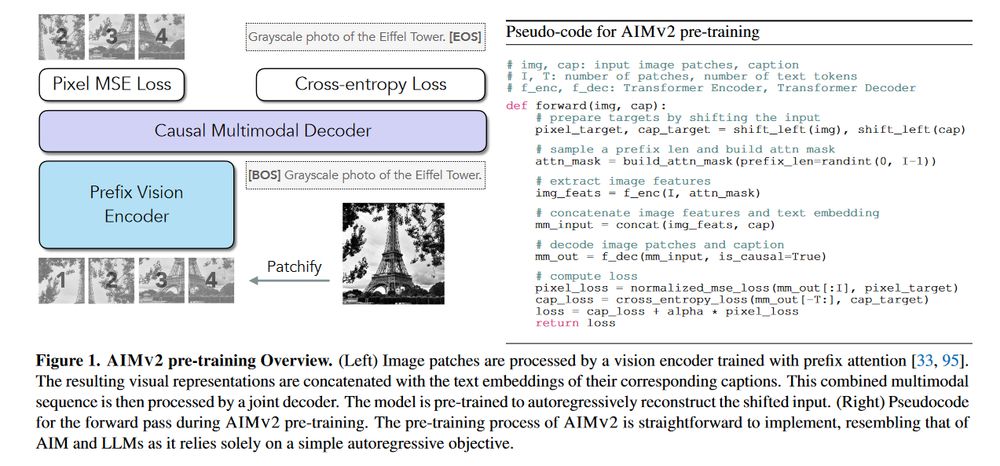

Excellent work by @alaaelnouby.bsky.social & team with code and checkpoints already up:

arxiv.org/abs/2411.14402

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

arxiv.org/abs/2405.07987

AI: go.bsky.app/SipA7it

RL: go.bsky.app/3WPHcHg

Women in AI: go.bsky.app/LaGDpqg

NLP: go.bsky.app/SngwGeS

AI and news: go.bsky.app/5sFqVNS

You can also search all starter packs here: blueskydirectory.com/starter-pack...
