Job currently: Research Scientist (NYC)

Job formerly: NYU Linguistics, MSU Linguistics

Excited to share that our new paper, “EvalCards: A Framework for Standardized Evaluation Reporting”, has been accepted for presentation at the @EurIPSConf workshop on “The Science of Benchmarking and Evaluating AI”.

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

Please RT for reach :)

If you've changed your name and dealt with updating publications, we want to hear your experience. Any reason counts: transition, marriage, cultural reasons, etc.

forms.cloud.microsoft/e/E0XXBmZdEP

Asst. or Assoc. Professor.

We have a thriving group (sites.utexas.edu/compling/) and a long, proud history in the space. (Fun fact: Jeff Elman was a UT Austin Linguistics Ph.D.)

faculty.utexas.edu/career/170793

🤘

www.liberalcurrents.com/deflating-hy...

Please consider applying or sharing with colleagues: metacareers.com/jobs/2223953961352324

Mechanistic Interpretability (MI) is quickly advancing, but comparing methods remains a challenge. This year at #BlackboxNLP, we're introducing a shared task to rigorously evaluate MI methods in language models 🧵

Check it out: ai.meta.com/research/pub...

(I've hung around interp communities for a while, but this is my 1st mech-interp project. Feedback much appreciated!)

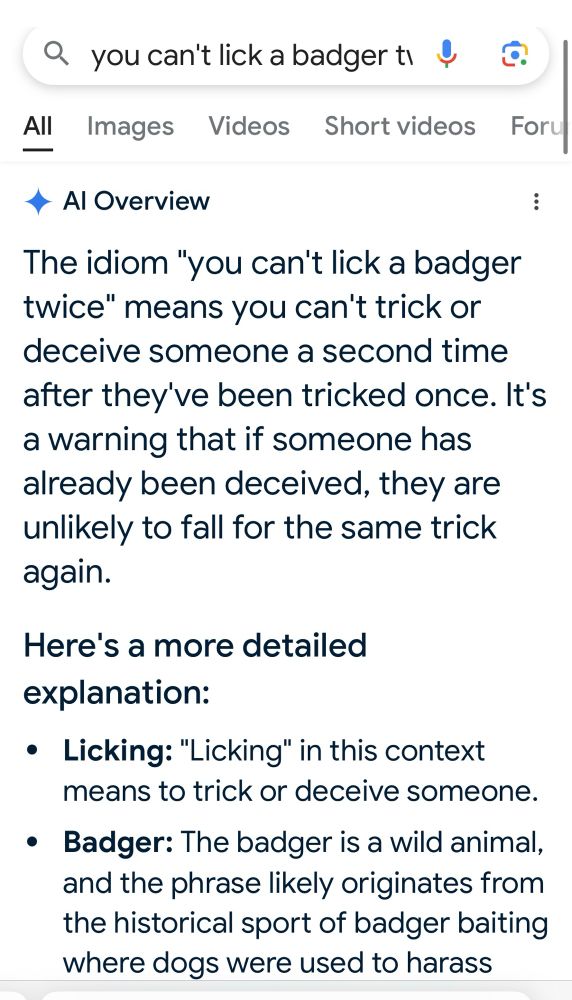

More linguistically: it looks like ending a query with "meaning" triggers the bot to accommodate the presupposition that the input contains an idiom! (Hard to run normal presupposition tests here tho)

w/@giannig.bsky.social @seiller.bsky.social J. Terilla et al.

itsatcuny.org/calendar/202...

The benchmark is designed with @mlcommons.org to assess LLM risk and reliability across 12 hazard categories. AILuminate is available for testing models and helping ensure safer deployment!

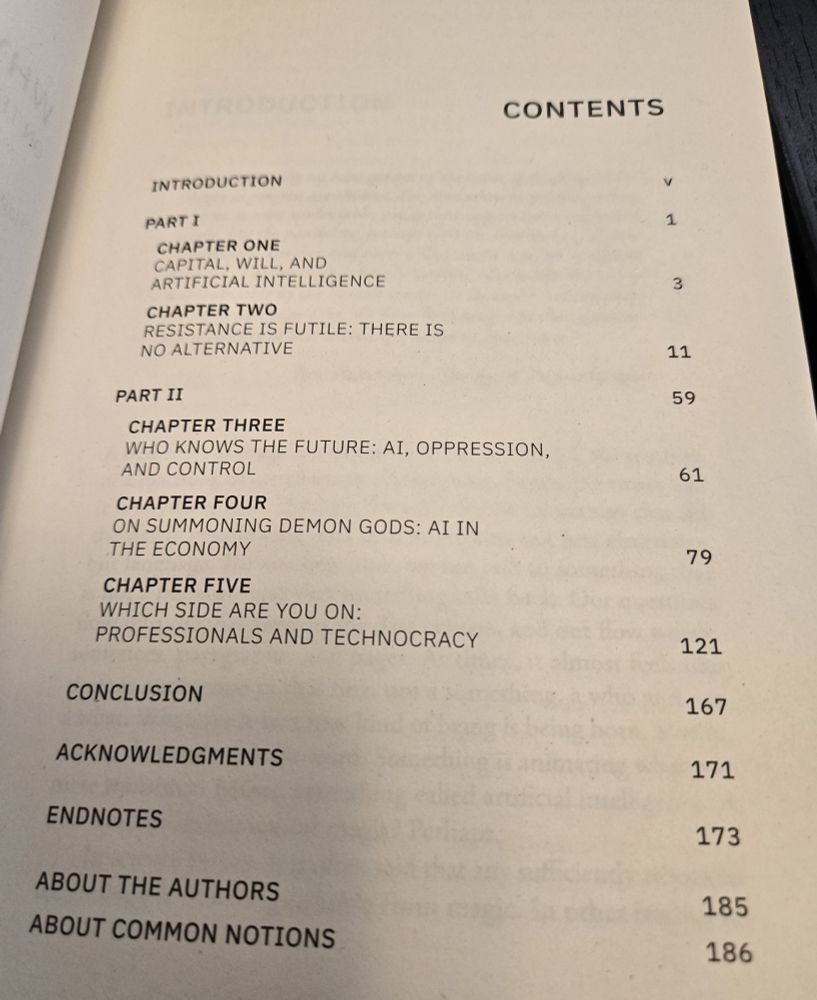

Get it directly from the publisher or wherever you get your books!

www.commonnotions.org/why-we-fear-ai

Industry insiders @hagenblix.bsky.social and Ingeborg Glimmer dive into the dark, twisted world of AI to demystify the many nightmares we have about it. One of the best ways to face your fear is to confront it—order a copy of WHY WE FEAR AI: buff.ly/1tWhkx8

Please go buy their book:

www.amazon.ca/gp/product/1...

It's about AI and what people's fears about it actually mean.

Go check it out 👇

Amazing job from @commonnotions.bsky.social! Love the cover design from Josh MacPhee <3

Get a copy here:

www.commonnotions.org/why-we-fear-ai

Study 1: ai.meta.com/research/pub...

Study 2: ai.meta.com/research/pub...

Blog 3: ai.meta.com/blog/brain-a...

- Improving model evaluation using SMART filtering of benchmark datasets (Gupta et al. arxiv.org/pdf/2410.20245)

- On the role of speech data in reducing toxicity detection bias (Bell et al. arxiv.org/pdf/2411.08135)

"Why We Fear AI" just went to the printers and comes out in March! You can pre-order it directly from the publisher @commonnotions.bsky.social or wherever you get your books.

Quick🧵

And maybe it's generalizing? “implement [AI] safely as they move at pace” www.theguardian.com/politics/2025/jan/12/mainlined-into-uks-veins-labour-announces-huge-public-rollout-of-ai
