🌐 https://jiaangli.github.io/

📅 July 18, 15:45–17:00

🧠 What if Othello-Playing Language Models Could See?

We show that visual grounding improves prediction & internal structure.♟️

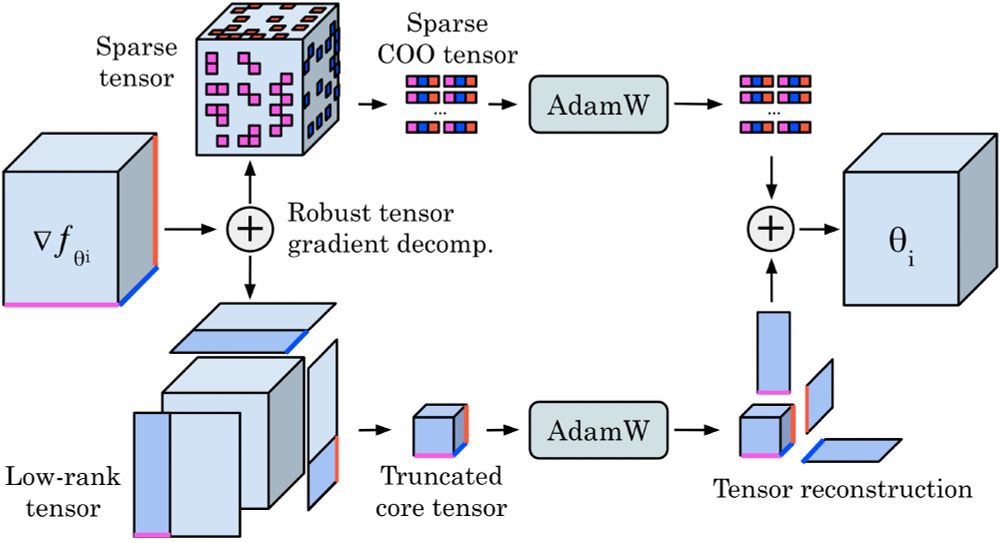

We use a robust decomposition of the gradient tensors into low-rank + sparse parts to reduce optimizer memory for Neural Operators by up to 75%, while matching the performance of Adam, even on turbulent Navier–Stokes (Re 10e5).

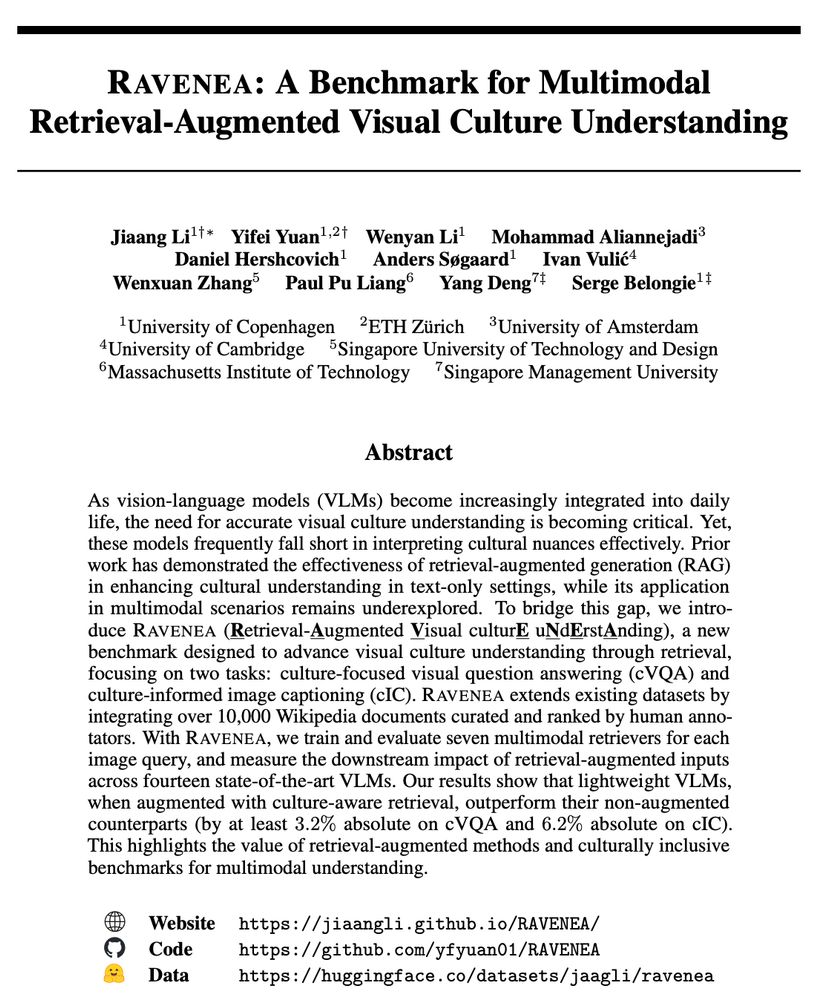

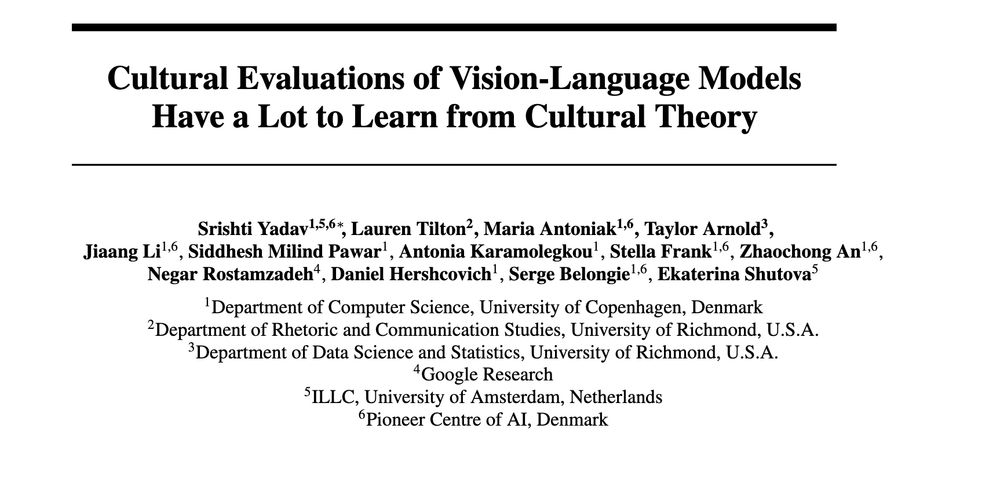

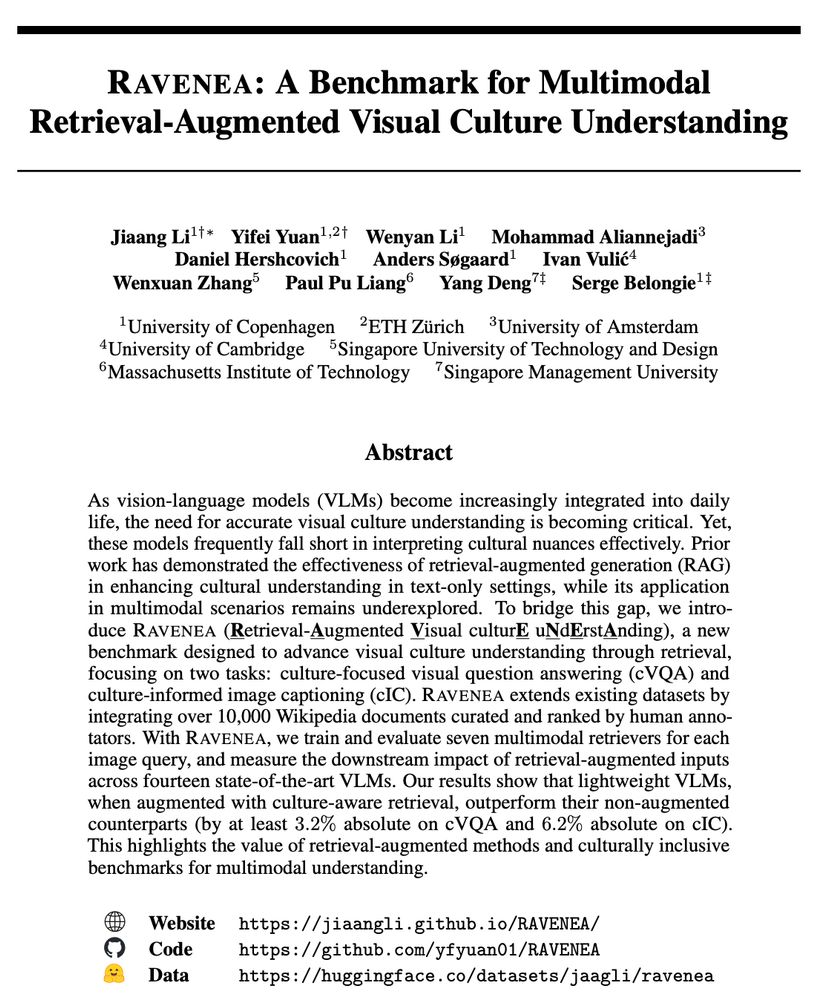

Can Multimodal Retrieval Enhance Cultural Awareness in Vision-Language Models?

Excited to introduce RAVENEA, a new benchmark aimed at evaluating cultural understanding in VLMs through RAG.

arxiv.org/abs/2505.14462

More details:👇

Paper 🔗: arxiv.org/pdf/2505.22793

Paper link: arxiv.org/abs/2503.04421

Our model MM-FSS leverages 3D, 2D, & text modalities for robust few-shot 3D segmentation—all without extra labeling cost. 🤩

arxiv.org/pdf/2410.22489

More details👇

🔔Our New Preprint:

🚀 New Era of Multimodal Reasoning🚨

🔍 Imagine While Reasoning in Space with MVoT

Multimodal Visualization-of-Thought (MVoT) revolutionizes reasoning by generating visual "thoughts" that transform how AI thinks, reasons, and explains itself.

Are you working on fine-grained visual problems?

This year we have two peer-reviewed paper tracks:

i) 8-page CVPR Workshop proceedings

ii) 4-page non-archival extended abstracts

CALL FOR PAPERS: sites.google.com/view/fgvc12/...

(1/5)

We present an empirical evaluation and find that language models partially converge towards representations isomorphic to those of vision models. #EMNLP

📃 direct.mit.edu/tacl/article...

Boulder is a lovely college town 30 minutes from Denver and 1 hour from Rocky Mountain National Park 😎

Apply by December 15th!

Code: github.com/sebulo/LoQT
