🌐 https://jiaangli.github.io/

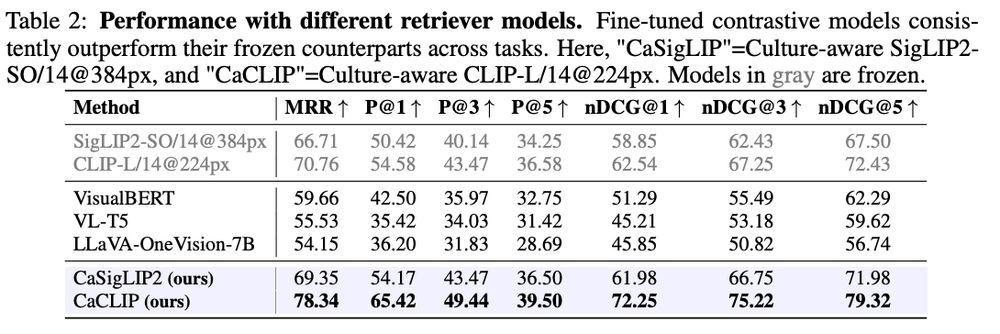

We propose Culture-aware Contrastive (CAC) Learning, a supervised learning framework compatible with both CLIP and SigLIP architectures. Fine-tuning with CAC can help models better capture culturally significant content.

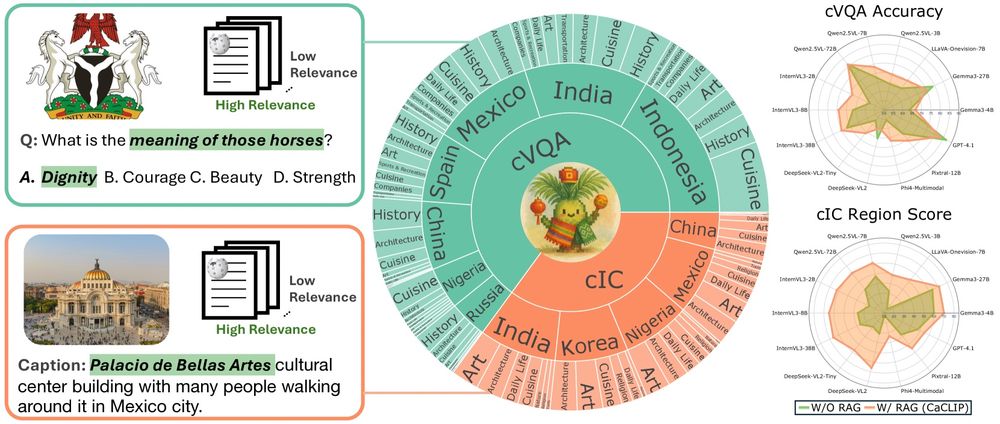

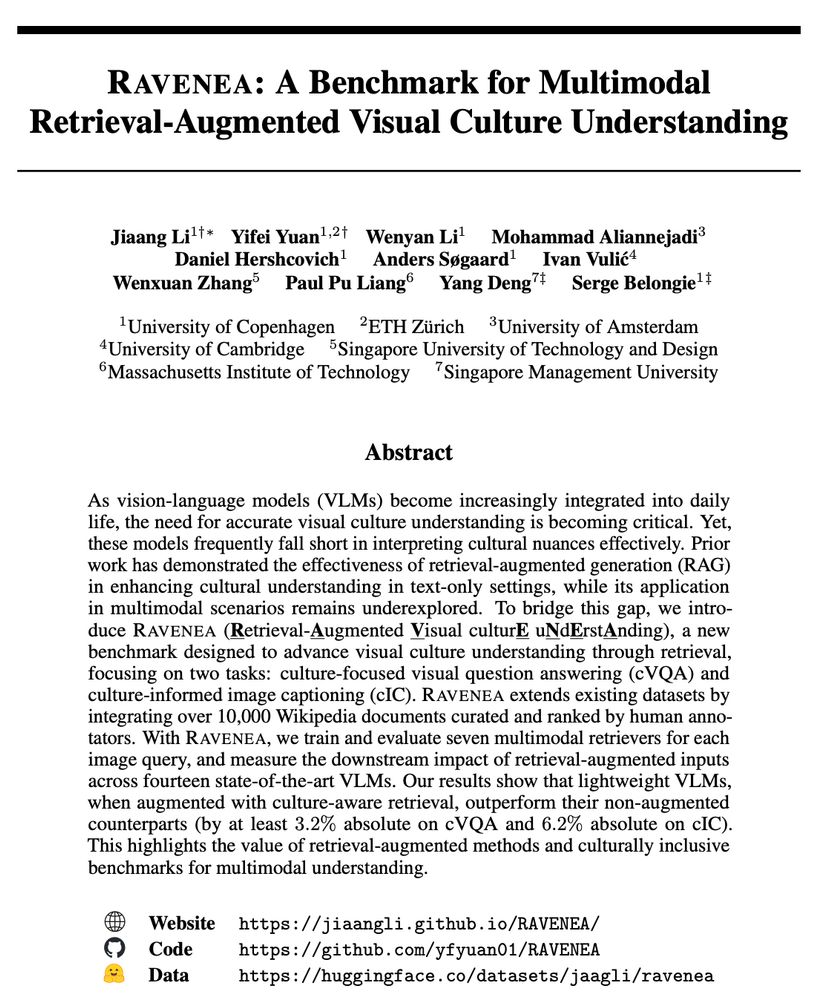

RAVENEA integrates 1,800+ images, 2,000+ culture-related questions, 500+ human captions, and 10,000+ human-ranked Wikipedia documents to support two key tasks:

🎯Culture-focused Visual Question Answering (cVQA)

📝Culture-informed Image Captioning (cIC)

Can Multimodal Retrieval Enhance Cultural Awareness in Vision-Language Models?

Excited to introduce RAVENEA, a new benchmark aimed at evaluating cultural understanding in VLMs through RAG.

arxiv.org/abs/2505.14462

More details:👇

- the LM understanding debate

- the study of emergent properties

- philosophy

🧵(6/8)

🧵(5/8)

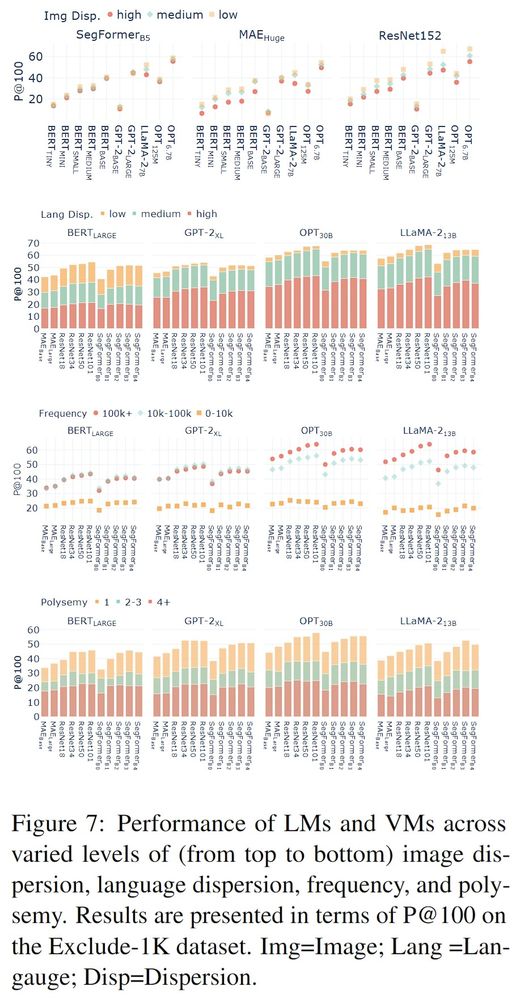

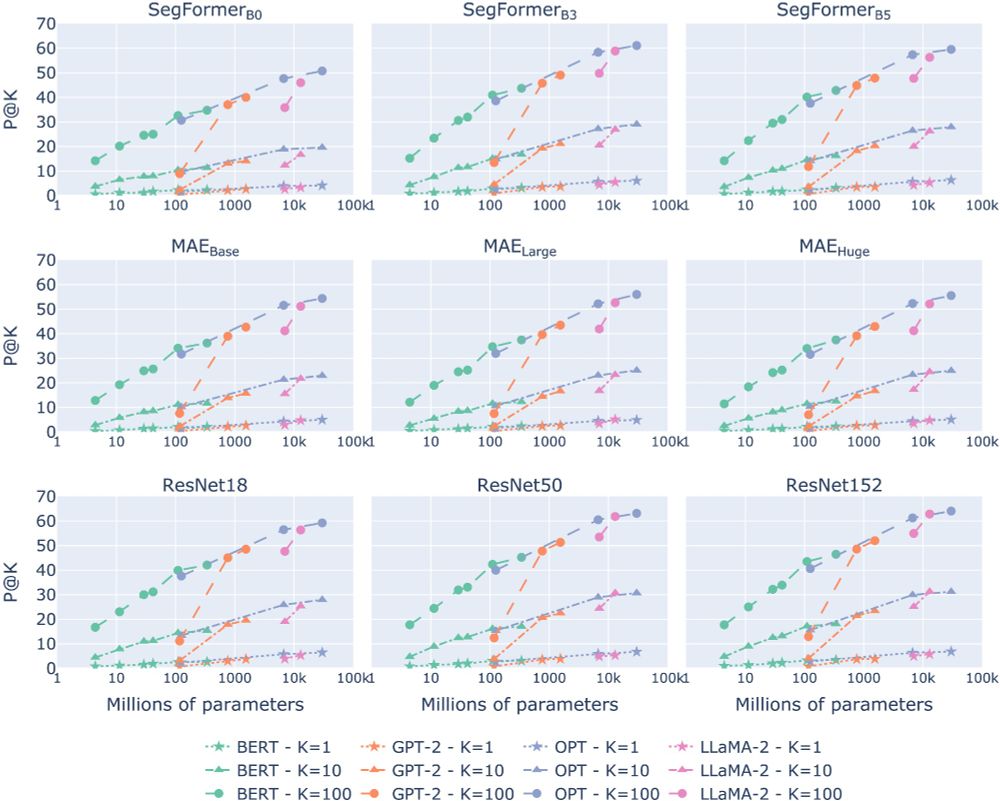

🔍Our experiments show the alignability of LMs and vision models is sensitive to image and language dispersion, polysemy, and frequency.

🧵(4/8)

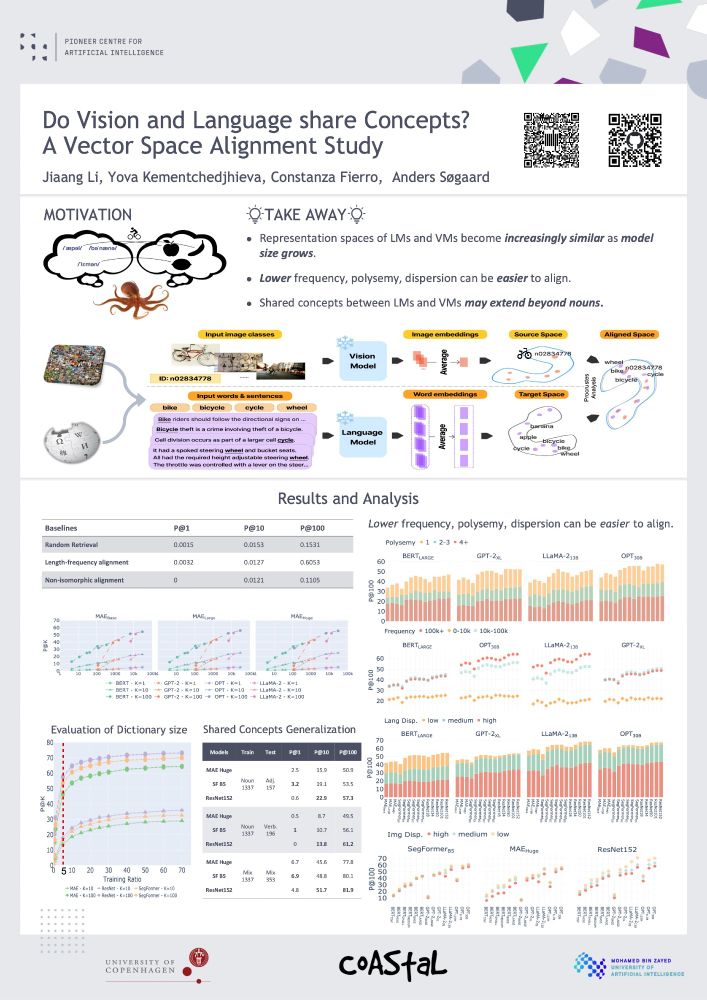

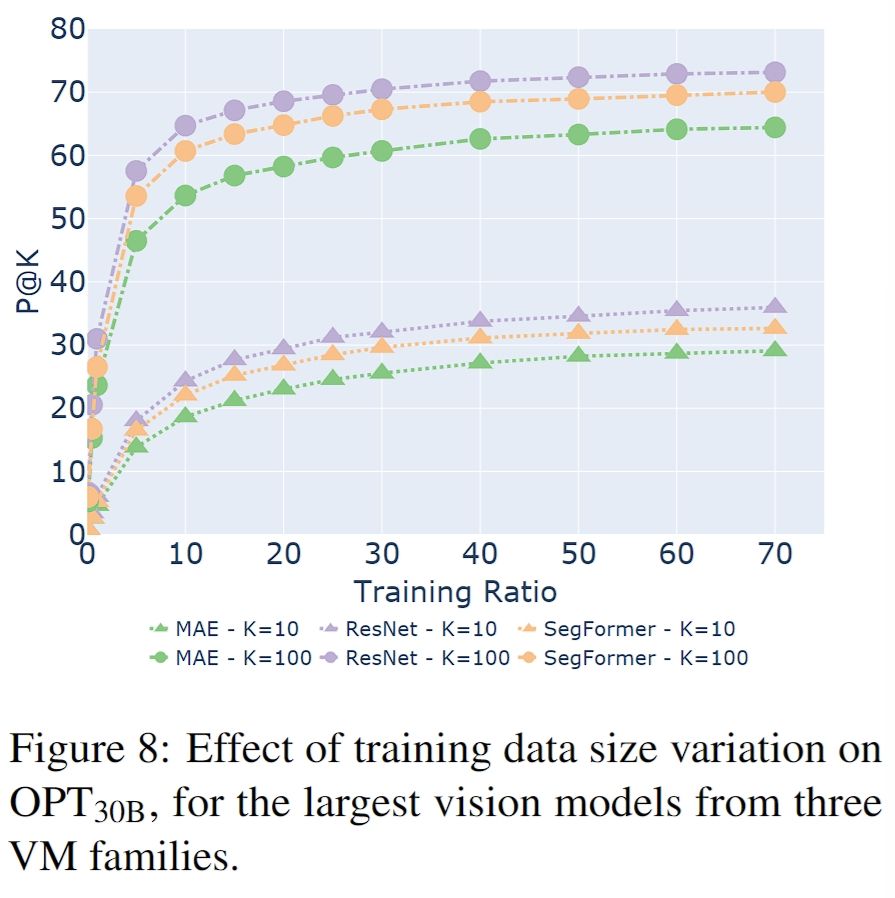

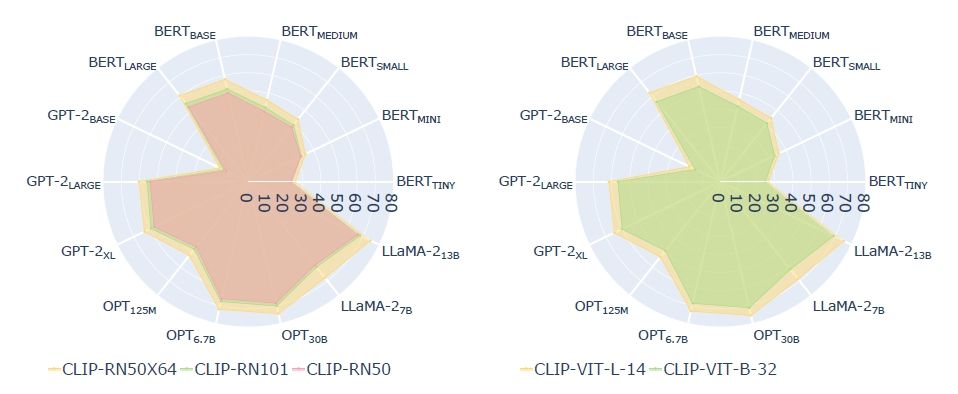

✨LMs converge toward the geometry of visual models as they grow bigger and better.

🧵(3/8)

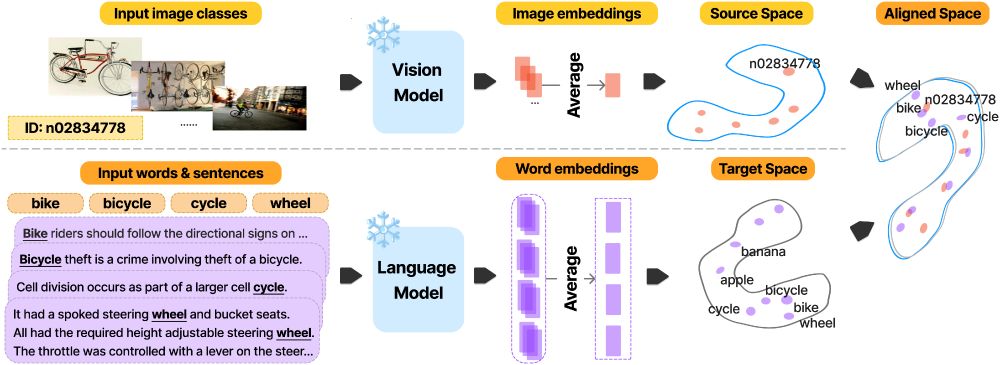

🎯We measure the alignment between vision models and LMs by mapping their vector spaces and evaluating retrieval precision on held-out data.

🧵(2/8)
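
To make the measurement in this post concrete, here is a minimal sketch of the general idea: fit a map from one embedding space to the other on training pairs, then check retrieval precision on held-out pairs. Everything specific here is an assumption for illustration and not taken from the paper: the map is ordinary least squares, the embeddings are synthetic, and the names `fit_linear_map` and `precision_at_k` are hypothetical.

```python
import numpy as np

def fit_linear_map(src, tgt):
    # Assumption: a plain least-squares linear map from src space to tgt space.
    W, *_ = np.linalg.lstsq(src, tgt, rcond=None)
    return W

def precision_at_k(mapped, targets, k=1):
    # Cosine similarity between every mapped query and every candidate target.
    a = mapped / np.linalg.norm(mapped, axis=1, keepdims=True)
    b = targets / np.linalg.norm(targets, axis=1, keepdims=True)
    sims = a @ b.T
    # Row i counts as a hit if its paired target i is among the top-k candidates.
    topk = np.argsort(-sims, axis=1)[:, :k]
    hits = (topk == np.arange(len(mapped))[:, None]).any(axis=1)
    return float(hits.mean())

# Synthetic stand-ins for paired LM and vision embeddings (illustrative only).
rng = np.random.default_rng(0)
n, d_lm, d_vis = 500, 32, 16
lm = rng.normal(size=(n, d_lm))
true_map = rng.normal(size=(d_lm, d_vis))
vis = lm @ true_map + 0.01 * rng.normal(size=(n, d_vis))

# Fit on the first 400 pairs, evaluate retrieval on the held-out 100.
train, test = slice(0, 400), slice(400, None)
W = fit_linear_map(lm[train], vis[train])
p1 = precision_at_k(lm[test] @ W, vis[test], k=1)
print(f"held-out P@1: {p1:.2f}")
```

Because the synthetic spaces are related by a nearly exact linear map, held-out precision is high here; with real model embeddings the score reflects how well the two geometries actually line up.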

We present an empirical evaluation and find that language models partially converge towards representations isomorphic to those of vision models. #EMNLP

📃 direct.mit.edu/tacl/article...
