Asst. Prof. at University of Copenhagen & Pioneer Centre for AI

formerly: PhD at ETH Zürich

#CV #ML #EO #AI4EO #SSL4EO #ML4good

🔗 langnico.github.io

Watch the talks by Philippe Ciais, Sagar Vaze, Natalie Iwanycki Ahlstrand, Drew Purves, and Mirela Beloiu Schwenke.

Thanks to @climateainordics.com @aicentre.dk and DDSA - Danish Data Science Academy.

@euripsconf.bsky.social

Call for papers is out: sites.google.com/view/fgvc13

Big thanks to our artists Mehmet Ozgur Turkoglu and Sophie Stalder for creating our new look and mascot!

@cvprconference.bsky.social

The 13th Workshop on Fine-Grained Visual Categorization has been accepted to CVPR 2026, in Denver, Colorado!

CALL FOR PAPERS: sites.google.com/view/fgvc13/

From Ecology to Medical Imaging, join us as we tackle the long tail and the limits of visual discrimination! #CVPR2026 #AI

We are looking for candidates with a background in AI/CS, Math, Stats, or Physics who are passionate about solving challenging problems in these domains.

Application deadline is in two weeks.

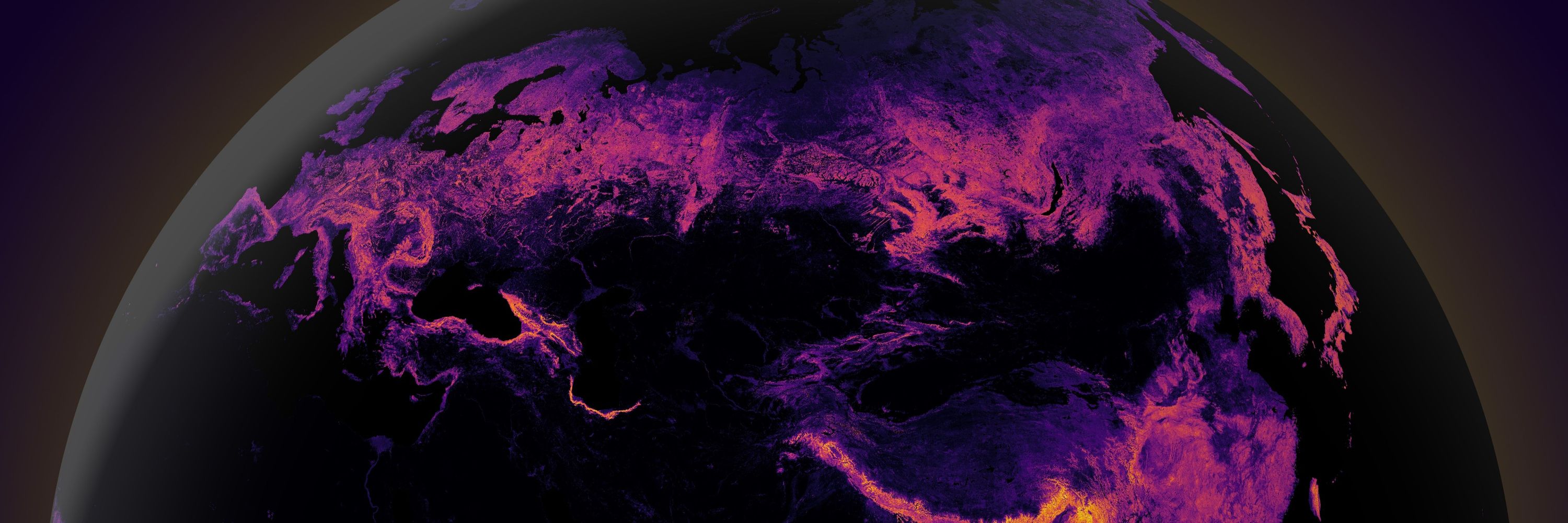

If you're interested in learning more about the science behind it, we've just released a preprint describing the methodology and the tool we've built: arxiv.org/abs/2511.15656

Project page: sjyhne.github.io/superf/

Preprint: www.arxiv.org/abs/2512.09115

Demo: huggingface.co/spaces/sjyhn...

Thread [1/n] 👇

I gave a TED Talk on scientific discovery in ecological databases at a joint TED Countdown and Bezos Earth Fund event for #NYClimateWeek this year, and it's now live!

@inaturalist.bsky.social #AIforConservation

Join us for the AI for Earth & Climate Sciences Workshop, part of the ELLIS UnConference (in Copenhagen 🇩🇰 on Dec 2), co-located with #EurIPS.

🕒 Submit workshop contributions by Oct 24, 2025

🔗 All info: eurips.cc/ellis

Come join the discussion at the EurIPS workshop "REO: Advances in Representation Learning for Earth Observation"

Call for papers deadline: October 15, AoE

Workshop site: sites.google.com/view/reoeurips

@euripsconf.bsky.social @esa.int

Workshop site: sites.google.com/g.harvard.ed...

Are you working at the intersection of AI and Climate or Conservation applications?

This is a great opportunity to discuss your novel and recently published research.

Call for participation: sites.google.com/g.harvard.ed...

This MICCAI25 challenge is still running and there is still time to participate!

Submission deadline: August 20, 2025

Join here: fomo25.github.io

Check out the thread below👇

We’re excited to welcome Prof. Dr. Devis Tuia from EPFL, who will join us on October 1st with his talk:

“Machine Learning for Earth: Monitoring the Pulse of Our Planet with Sensor Data, from Your Phone All the Way to Space”

We will be in room 104E.

#FGVC #CVPR2025

@fgvcworkshop.bsky.social

sites.google.com/view/fgvc12

We are looking forward to a series of talks on semantic granularity, covering topics such as machine teaching, interpretability and much more!

Room 104 E

Schedule & details: sites.google.com/view/fgvc12

@cvprconference.bsky.social #CVPR25

openaccess.thecvf.com/CVPR2025_wor...

Poster session:

June 11, 4pm-6pm

ExHall D, poster boards 373-403

#CVPR25 @cvprconference.bsky.social

⏰ Deadline June 30

📣 Notification July 11

📷 Camera ready Aug 8

🏆 Best paper award: $1,000

📅 4 June 2025, 14:00–15:00.

Find more information and sign up here:

www.aicentre.dk/events/talk-...

👉 climateainordics.com/newsletter/2...

www.kaggle.com/competitions...

@kakanikatija.bsky.social @mbarinews.bsky.social @cvprconference.bsky.social @fgvcworkshop.bsky.social @kaggle.com

When: Jan 12-30, 2026

Where: SCBI @smconservation.bsky.social
