The MarkItDown library is a utility for converting various file types to Markdown (e.g., for indexing or text analysis).

Repo: github.com/microsoft/ma...
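For a feel of what that looks like in practice, here is a minimal sketch based on the basic usage shown in the project's README (`MarkItDown().convert(...)` returning an object with `text_content`). The wrapper name `file_to_markdown` and the optional `converter` argument are my own additions for illustration, not part of the library, and the API may have changed since, so check the repo.

```python
def file_to_markdown(path, converter=None):
    """Convert one file to Markdown text.

    `converter` is a hypothetical hook (not part of MarkItDown) so that any
    object with a compatible `convert` method can be swapped in.
    """
    if converter is None:
        # Imported lazily so this module loads even without the package installed.
        from markitdown import MarkItDown
        converter = MarkItDown()
    result = converter.convert(path)
    # The README shows the Markdown output exposed as `text_content`.
    return result.text_content
```

Calling `file_to_markdown("report.docx")` (a placeholder filename) should return the document as a Markdown string, assuming the package is installed and the file type is supported.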

🧵>>

You might want to give Docling a try.

𝗪𝗵𝗮𝘁'𝘀 𝗗𝗼𝗰𝗹𝗶𝗻𝗴?

• Python package by IBM

• Open source (MIT license)

• PDF, DOCX, PPTX → Markdown, JSON

𝗪𝗵𝘆 𝘂𝘀𝗲 𝗗𝗼𝗰𝗹𝗶𝗻𝗴?

• Doesn’t require fancy gear, lots of memory, or cloud services

• Works on regular computers or Google Colab Pro
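A rough sketch of the PDF/DOCX/PPTX → Markdown path, following the quick-start pattern in the Docling README (`DocumentConverter().convert(...)`, then `export_to_markdown()` on the resulting document). The function name `docling_to_markdown` and the injectable `converter` parameter are assumptions added for illustration, and the API may differ across versions.

```python
def docling_to_markdown(source, converter=None):
    """Convert a local path or URL to Markdown using Docling.

    `converter` is a hypothetical hook (not part of Docling) that lets a
    preconfigured or stand-in converter be passed in.
    """
    if converter is None:
        # Imported lazily so this module loads even without docling installed.
        from docling.document_converter import DocumentConverter
        converter = DocumentConverter()
    result = converter.convert(source)
    # The converted document is exported as a Markdown string.
    return result.document.export_to_markdown()
```

Expect the first call to be slow, since Docling typically downloads its models before converting anything.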

Nvidia's Star Attention: Efficient LLM Inference over Long Sequences

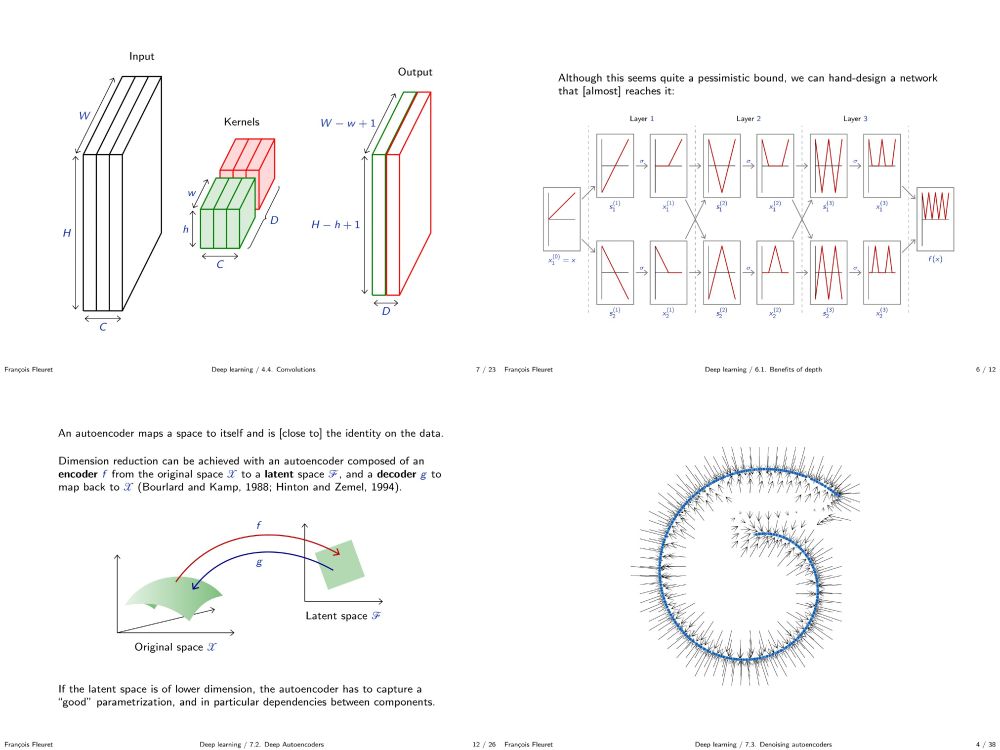

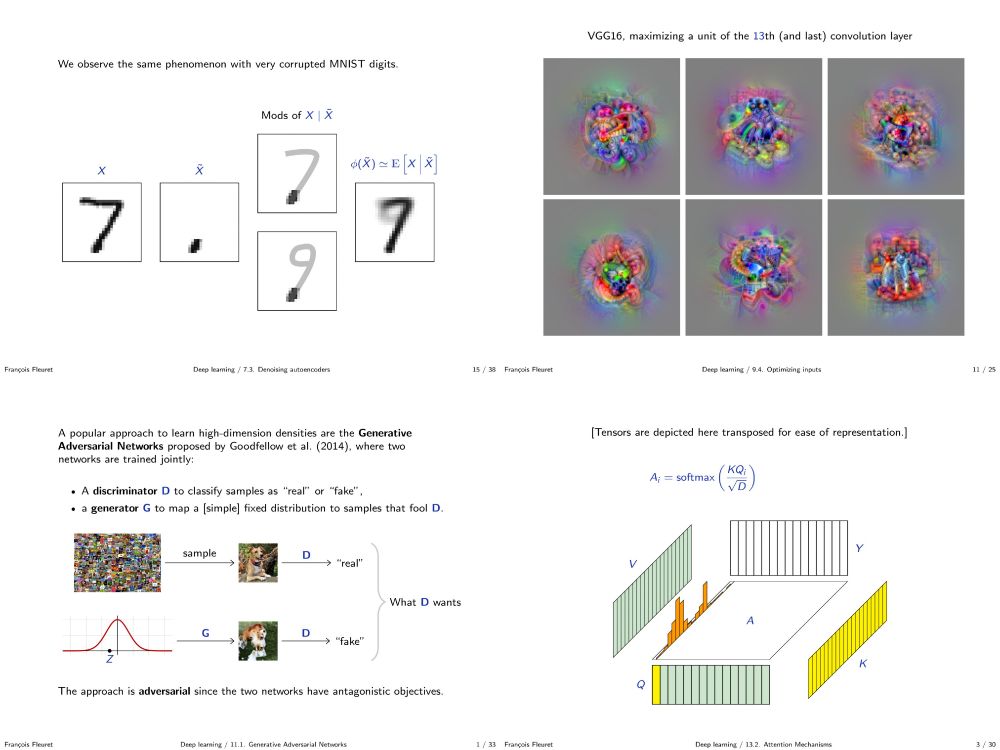

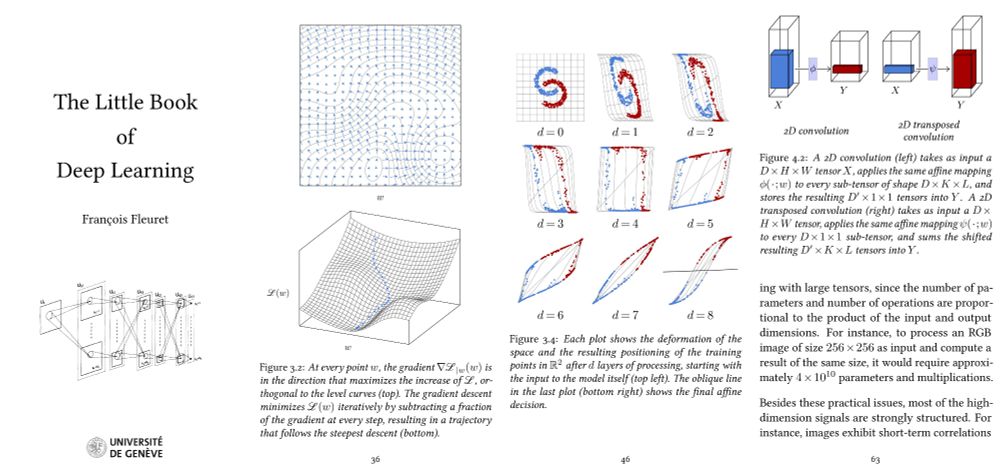

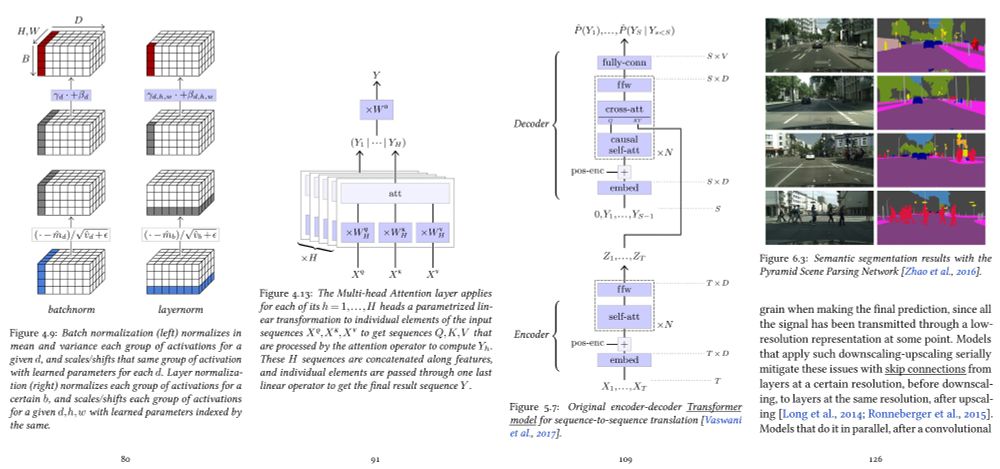

fleuret.org/dlc/

And my "Little Book of Deep Learning" is available as a phone-formatted pdf (nearing 700k downloads!)

fleuret.org/lbdl/

RLHF = 30% *more* copying than base!

Awesome work from the awesome Ximing Lu (gloriaximinglu.github.io) et al. 🤩

arxiv.org/pdf/2410.04265

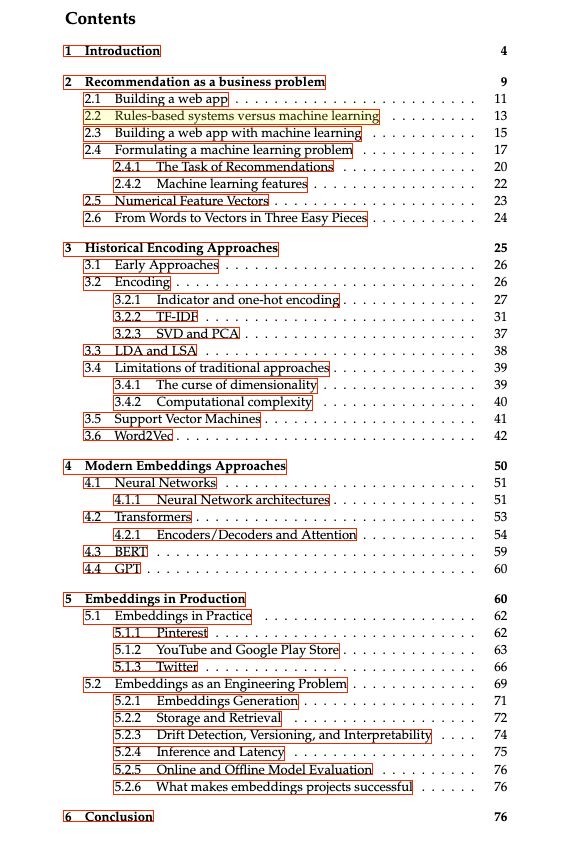

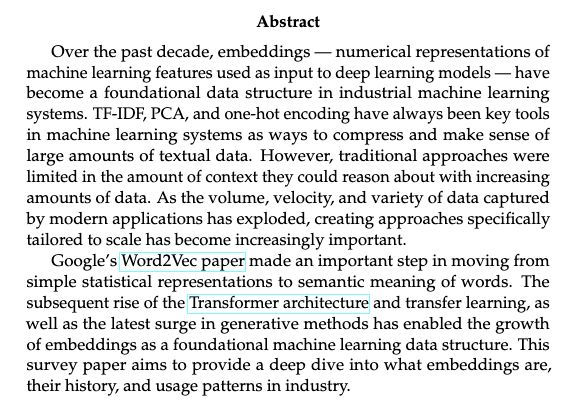

Let's start with "What are embeddings" by @vickiboykis.com

The book is a great summary of embeddings, from history to modern approaches.

The best part: it's free.

Link: vickiboykis.com/what_are_emb...

Part 1: dynomight.net/chess/

Part 2: dynomight.net/more-chess/

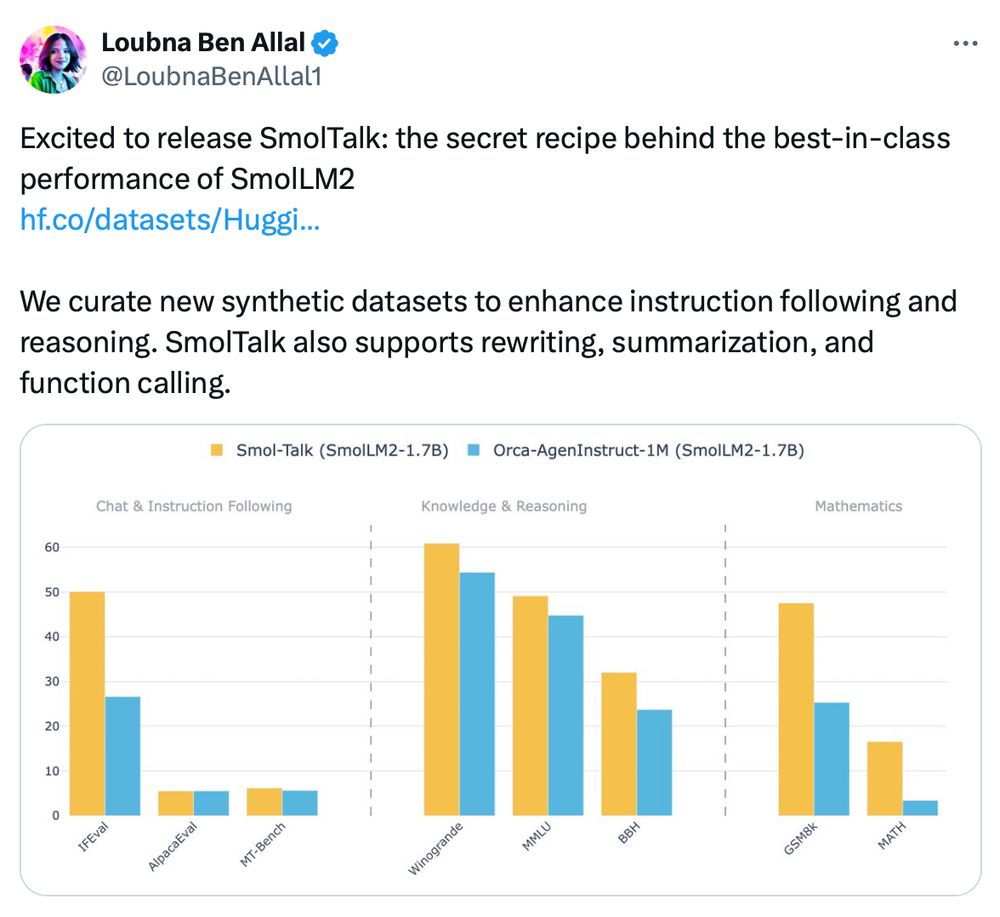

Unsurprisingly: data, data, data!

The SmolTalk dataset is open and available here: huggingface.co/datasets/Hug...

Principles and Practices of Engineering Artificially Intelligent Systems

mlsysbook.ai

A surprising result from Databricks when measuring embeddings and rerankers on internal evals.

1- Reranking a few docs improves recall (expected).

2- Reranking many docs degrades quality (!).

3- Reranking too many documents is quite often worse than using the embedding model alone (!!).
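To make the knob concrete: in a two-stage pipeline, the embedding model picks the top `k_rerank` candidates and the reranker reorders only those. Below is a toy sketch of that setup in plain Python. All function names and all scores are made up for illustration (this is not the Databricks eval and does not reproduce their numbers); it only shows how a reranker that over-scores an irrelevant doc can hurt once the candidate pool gets large enough to include it.

```python
def retrieve_then_rerank(docs, embed_score, rerank_score, k_rerank, k_final):
    """Two-stage retrieval: embedding top-k, then rerank only those candidates."""
    # Stage 1: keep the k_rerank docs with the highest embedding score.
    candidates = sorted(docs, key=lambda d: embed_score[d], reverse=True)[:k_rerank]
    # Stage 2: reorder the candidates by reranker score and keep k_final.
    reranked = sorted(candidates, key=lambda d: rerank_score[d], reverse=True)
    return reranked[:k_final]


def recall(retrieved, relevant):
    """Fraction of relevant docs that appear in the retrieved list."""
    if not relevant:
        return 0.0
    return len(set(retrieved) & set(relevant)) / len(relevant)


# Made-up scores: the reranker is right about "b" but badly wrong about "d".
docs = ["a", "b", "c", "d"]
embed_score = {"a": 0.9, "b": 0.8, "c": 0.7, "d": 0.1}
rerank_score = {"a": 0.2, "b": 0.9, "c": 0.5, "d": 1.0}
relevant = {"b"}

for k in (1, 2, 4):
    top = retrieve_then_rerank(docs, embed_score, rerank_score, k_rerank=k, k_final=1)
    print(k, top, recall(top, relevant))
```

With these toy numbers, reranking two candidates surfaces the relevant doc, while reranking all four lets the reranker's mistake on "d" take the top slot.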

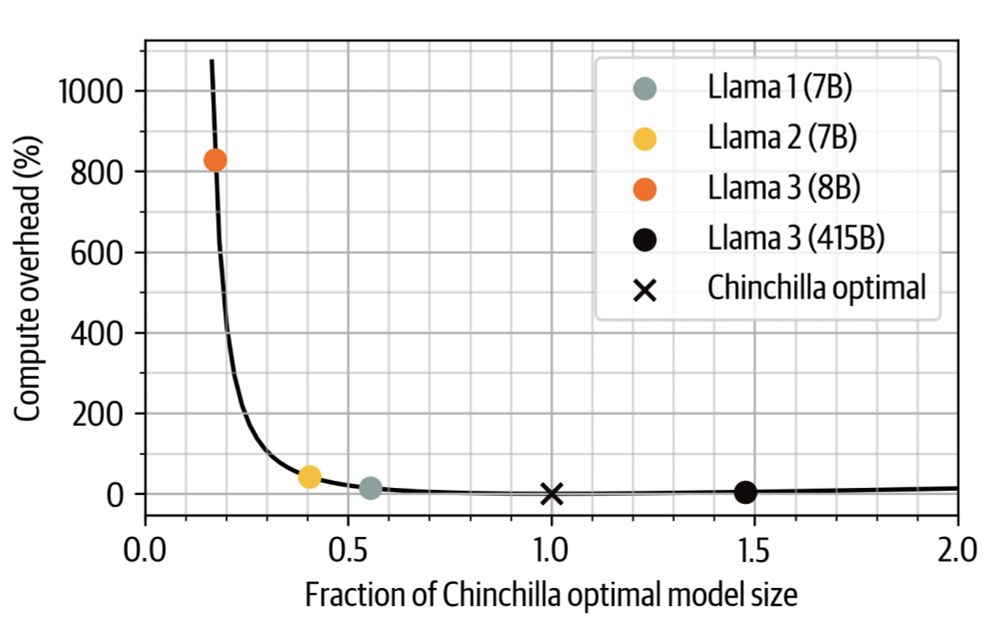

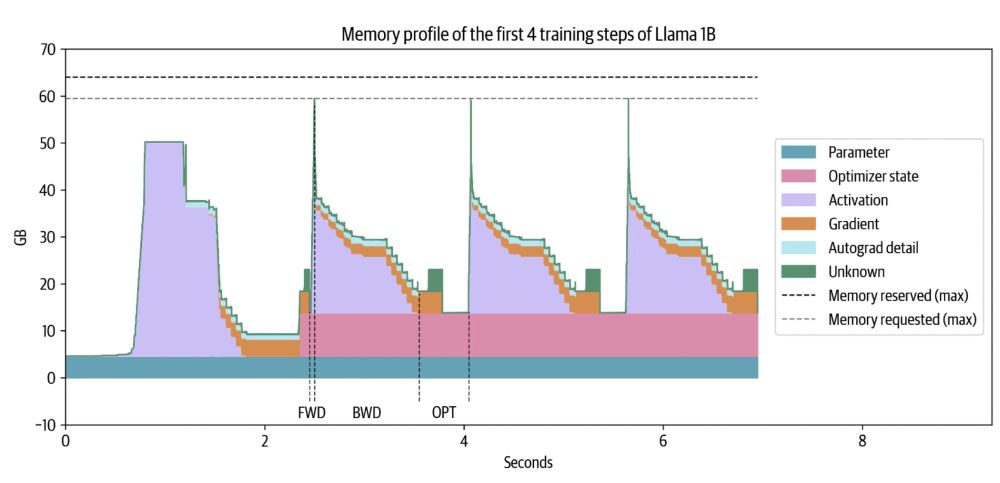

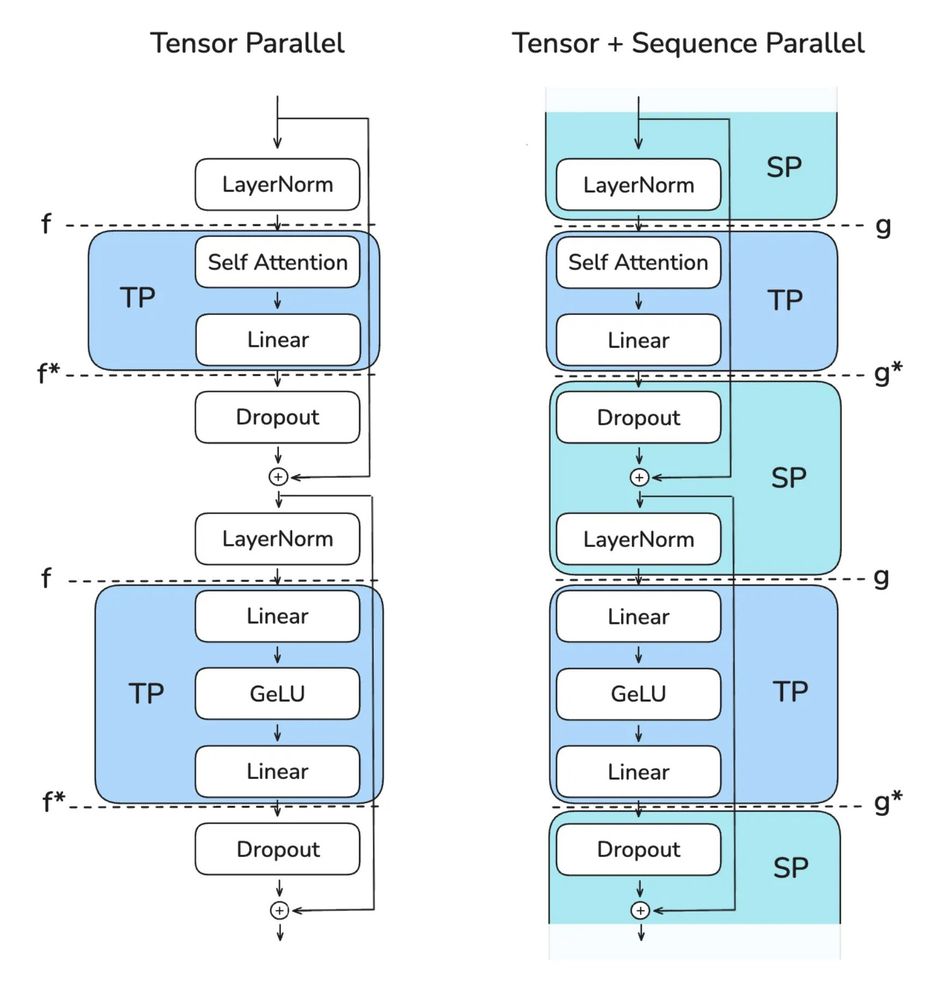

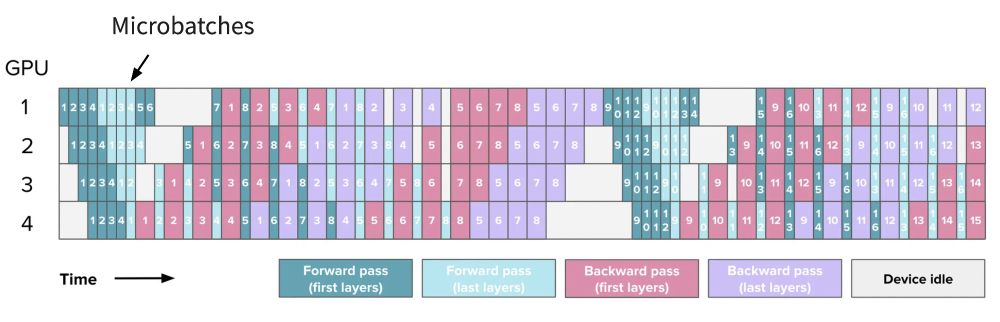

Gave a workshop at Uni Bern: it starts with scaling laws, moves on to web-scale data processing, and finishes with training using 4D parallelism and ZeRO.

*assuming your home includes an H100 cluster
