@Anthropic. Seattle bike lane enjoyer. Opinions my own.

(I'm still based in Seattle 🏔️🌲🏕️; but in SF regularly)

buff.ly/DRJOGrB

@yoavgo.bsky.social's blog is a clarifying read on the topic -- I plan to adopt his terminology :-)

gist.github.com/yoavg/9142e5...

actionnetwork.org/letters/say-...

epoch.ai/gradient-upd...

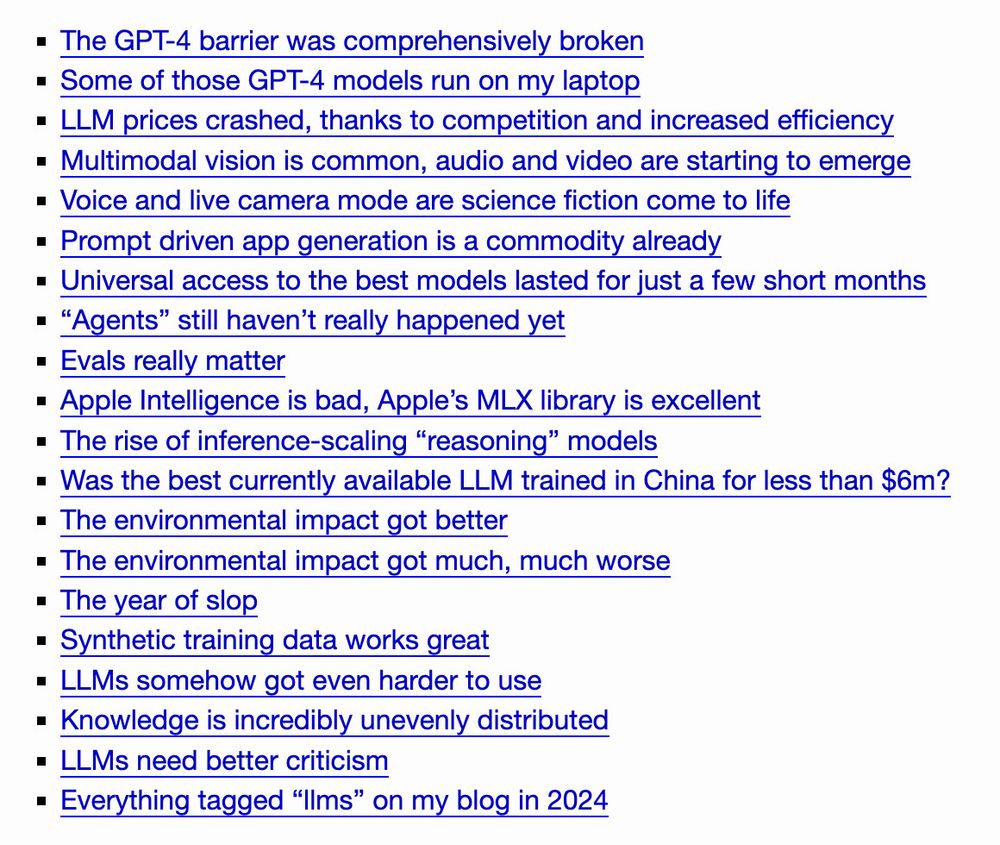

Table of contents:

A new blog post in which I list all the tools and apps I've been using for work, plus all my opinions about them.

maria-antoniak.github.io/2024/12/30/o...

Featuring @kagi.com, @warp.dev, @paperpile.bsky.social, @are.na, Fantastical, @obsidian.md, Claude, and more.

- Switch with Nine Sols loaded

- iPad with Black Doves loaded

- laptop with data, python notebook, blog post draft loaded

- silk eye mask

- REI inflatable neck pillow

- vitamin C juice

- Journey to the East by Hermann Hesse

- compression socks

- many snacks

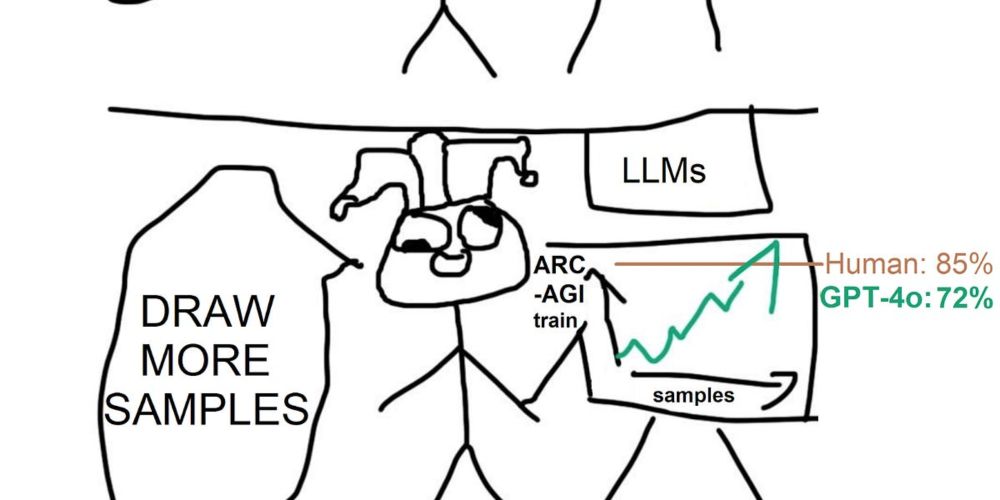

o3 is probably a more principled search technique...

Super grateful to have been part of such an awesome team effort and very excited about the gains for retrieval/RAG! 🚀

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

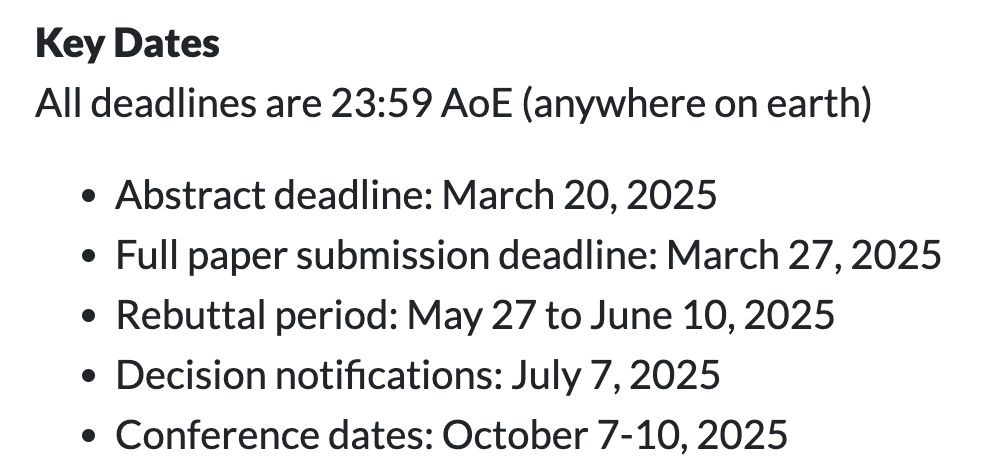

colmweb.org/cfp.html

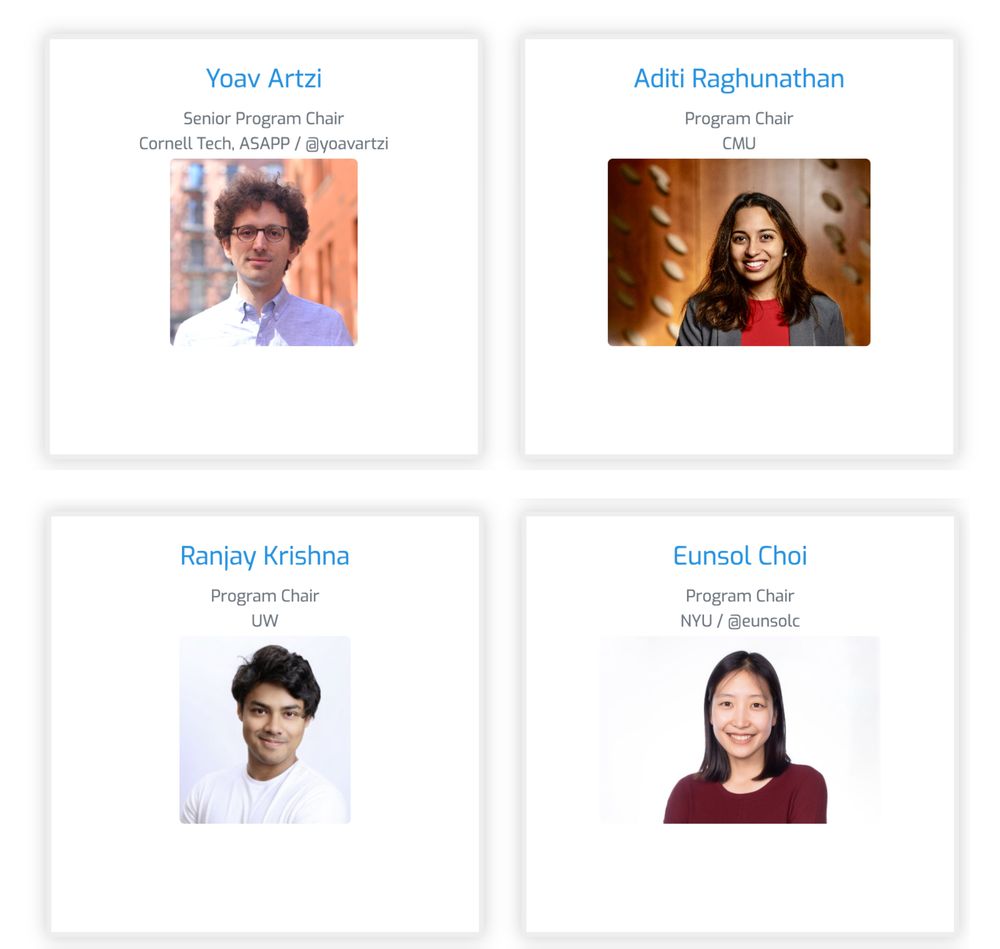

And excited to announce the COLM 2025 program chairs @yoavartzi.com @eunsol.bsky.social @ranjaykrishna.bsky.social and @adtraghunathan.bsky.social

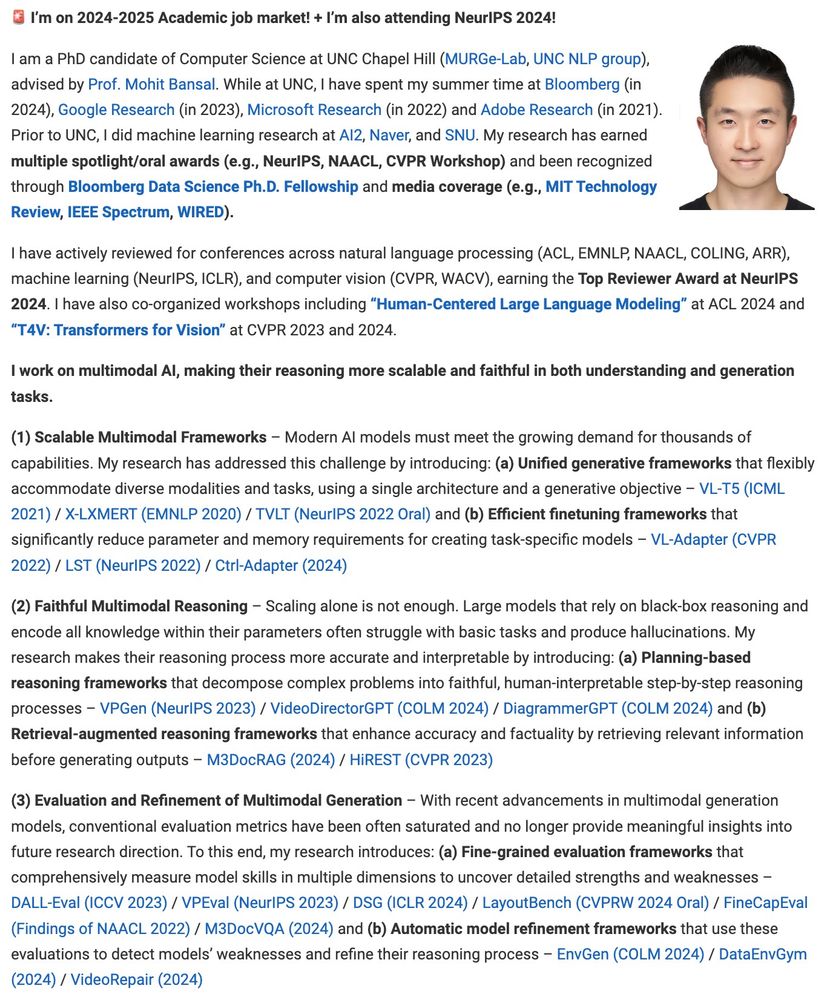

j-min.io

I work on ✨Multimodal AI✨, advancing reasoning in understanding & generation by:

1⃣ Making it scalable

2⃣ Making it faithful

3⃣ Evaluating + refining it

Completing my PhD at UNC (w/ @mohitbansal.bsky.social).

Happy to connect (will be at #NeurIPS2024)!

👇🧵

Constrained output for LLMs, e.g., the Outlines library for vLLM, which forces model output to conform to JSON/Pydantic schemas, is cool!

But output tokens cost much more latency than input tokens, so if speed matters, bespoke low-token output formats are often better.
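To make the trade-off concrete, here is a minimal sketch comparing a JSON encoding with a bespoke compact one for the same data. Everything in it is an assumption for illustration: the labeling task, the single-letter codes, the `id code confidence` line format, and especially `rough_token_count`, which is a crude regex proxy and not a real tokenizer. Actual savings depend on the model's tokenizer.

```python
import json
import re

# Hypothetical task: label three documents with a sentiment and a 0-9 confidence.
records = [
    {"id": 1, "label": "positive", "confidence": 9},
    {"id": 2, "label": "negative", "confidence": 7},
    {"id": 3, "label": "neutral", "confidence": 4},
]

# Assumed single-letter codes for the bespoke format.
CODES = {"positive": "P", "negative": "N", "neutral": "U"}
LABELS = {code: label for label, code in CODES.items()}


def to_json(rows):
    """Schema-style output: verbose keys and punctuation repeated per record."""
    return json.dumps(rows)


def to_compact(rows):
    """Bespoke output: one record per line as '<id> <code> <confidence>'."""
    return "\n".join(
        f"{r['id']} {CODES[r['label']]} {r['confidence']}" for r in rows
    )


def parse_compact(text):
    """Parse the bespoke format back into the same dicts the JSON carries."""
    rows = []
    for line in text.splitlines():
        id_str, code, conf_str = line.split()
        rows.append(
            {"id": int(id_str), "label": LABELS[code], "confidence": int(conf_str)}
        )
    return rows


def rough_token_count(text):
    """Crude proxy, NOT a real tokenizer: word runs plus single punctuation marks."""
    return len(re.findall(r"\w+|[^\w\s]", text))


json_out = to_json(records)
compact_out = to_compact(records)

print("JSON tokens (rough):   ", rough_token_count(json_out))
print("Compact tokens (rough):", rough_token_count(compact_out))
```

Both encodings round-trip to the same records; the compact one just drops the repeated keys, quotes, and braces the model would otherwise have to generate. The cost is that the format has to be explained in the prompt and parsed defensively.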

But beware 👻 !

Despite expressivity, top-K re-rankers generalize poorly as K increases.

arxiv.org/pdf/2411.11767
