arxiv.org/abs/2412.06655

With Adrien Bolland and Damien Ernst, we propose a new intrinsic reward. Instead of encouraging the agent to visit states uniformly, we encourage it to visit *future* states uniformly from every state.

arxiv.org/abs/2402.00162

With Adrien Bolland and Damien Ernst, we decided to frame the exploration problem for policy-gradient methods from the optimization point of view.

openreview.net/forum?id=wNV...

With Daniel Ebi and Damien Ernst, we looked for a reason why asymmetric actor-critic performs better, even when using RNN-based policies that take the full observation history as input (no aliasing).

arxiv.org/abs/2501.19116

With Damien Ernst and Aditya Mahajan, we looked for a reason why asymmetric actor-critic algorithms perform better than their symmetric counterparts.

📝 Paper: arxiv.org/abs/2501.19116

🎤 Talk: orbi.uliege.be/handle/2268/...

Warm thanks to Aditya Mahajan for welcoming me at McGill University and for his invaluable supervision.

Despite not matching the usual recurrent actor-critic setting, this analysis still provides insights into the effectiveness of asymmetric actor-critic algorithms.

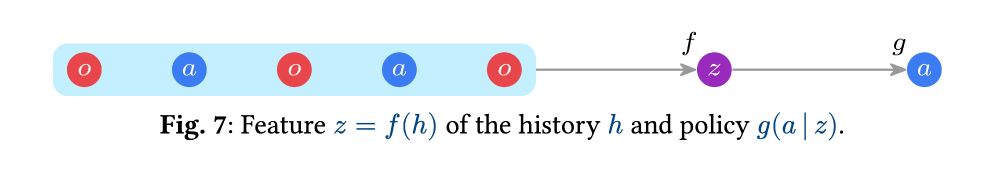

Now, what is aliasing exactly?

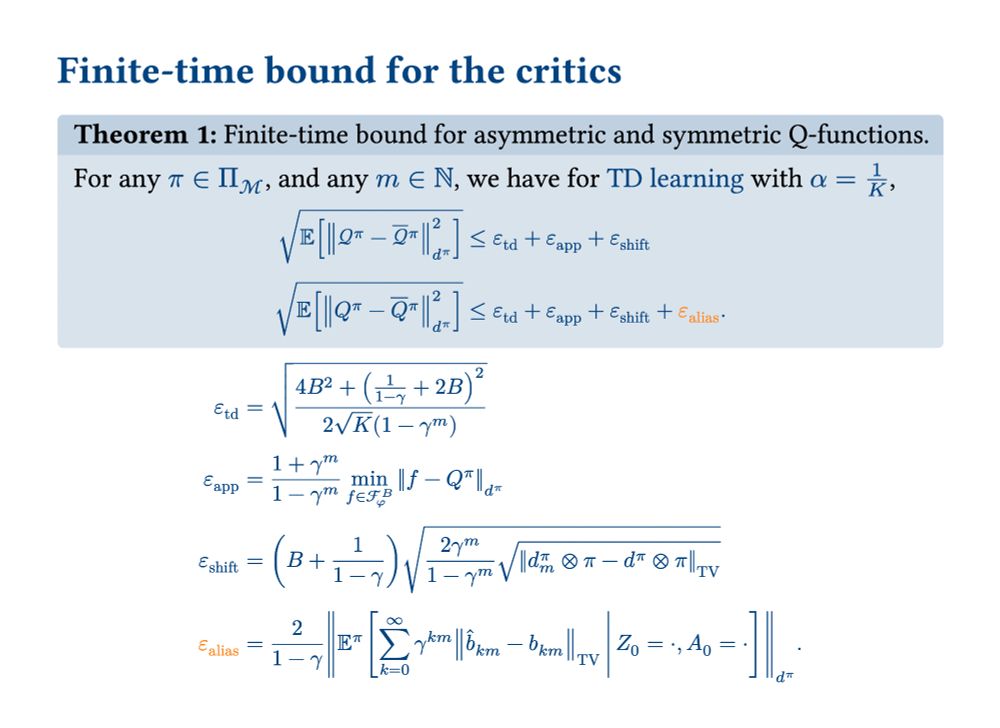

The aliasing and inference terms arise from z = f(h) not being Markovian. They can be bounded by the difference between the approximate p(s|z) and exact p(s|h) beliefs.
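To make the gap between the two beliefs concrete, here is a toy sketch in which two histories are mapped to the same agent state z. The histories, probabilities, and the choice of total variation as the distance are all assumptions made for illustration; the exact quantity used in the paper's bounds may differ.

```python
def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))


# Hypothetical exact beliefs p(s | h) over two states, for two histories.
belief_given_h = {"h1": [0.9, 0.1], "h2": [0.2, 0.8]}
# Hypothetical probability of each history.
p_h = {"h1": 0.5, "h2": 0.5}
# Agent-state map z = f(h) that aliases both histories to the same z.
f = {"h1": "z0", "h2": "z0"}


def belief_given_z(z):
    """Approximate belief p(s | z) = sum_h p(h | z) p(s | h)."""
    histories = [h for h in f if f[h] == z]
    mass = sum(p_h[h] for h in histories)
    n_states = len(belief_given_h[histories[0]])
    return [
        sum(p_h[h] * belief_given_h[h][s] for h in histories) / mass
        for s in range(n_states)
    ]


# Gap between the exact and approximate beliefs, for each history.
gaps = {h: total_variation(belief_given_h[h], belief_given_z(f[h])) for h in f}
```

If f were injective (each history mapped to its own z), both gaps would be zero, which matches the "no aliasing" case.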

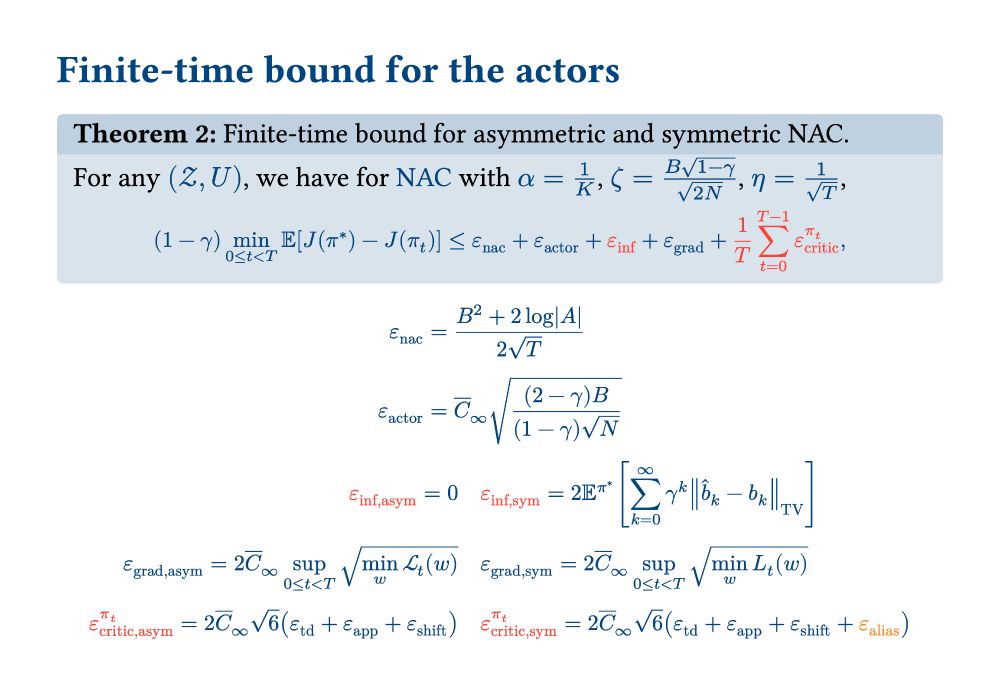

In addition to the average critic error, which is also present in the actor bound, the symmetric actor-critic algorithm suffers from an additional "inference term".

The symmetric temporal difference learning algorithm has an additional "aliasing term".

Does it really learn faster than symmetric learning?

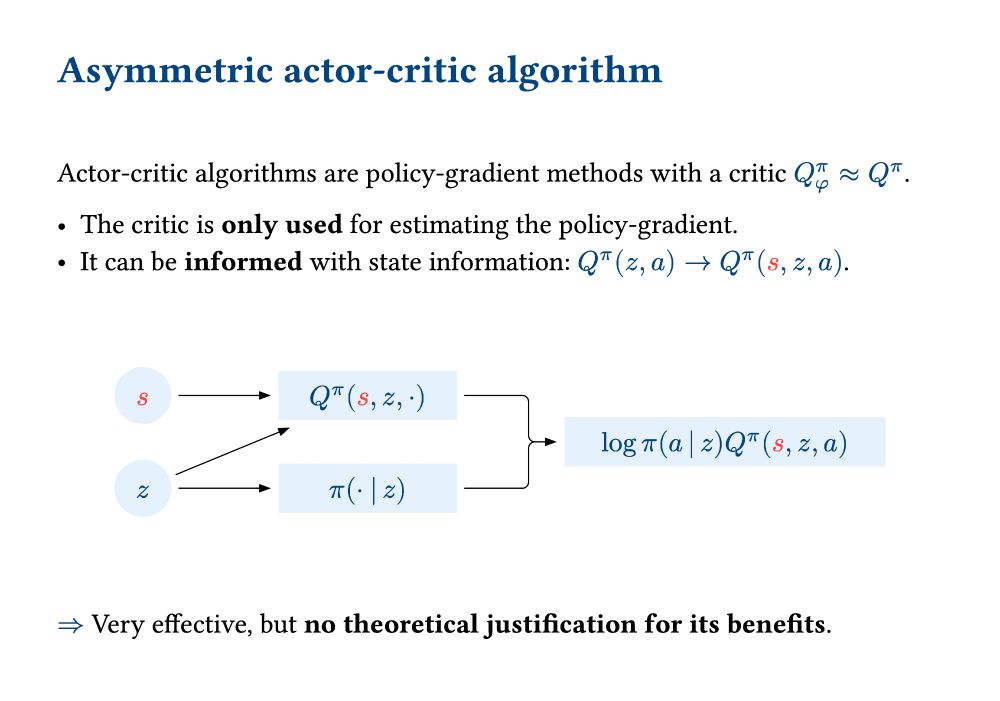

In this paper, we provide theoretical evidence for this, based on an adapted finite-time analysis (Cayci et al., 2024).

As a result, the state can be an input of the critic, which becomes Q(s, z, a) in the asymmetric setting instead of Q(z, a) in the symmetric setting.
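The difference in inputs can be sketched with tabular lookups. This is only an illustration: the sizes, the table initialization, and the function names are hypothetical and not taken from the paper.

```python
from itertools import product

# Hypothetical sizes for a tabular toy problem.
N_STATES, N_Z, N_ACTIONS = 4, 3, 2

# Policy pi(a | z): conditioned on the agent state z only (uniform here).
policy = {z: [1.0 / N_ACTIONS] * N_ACTIONS for z in range(N_Z)}

# Symmetric critic Q(z, a): one entry per (agent state, action).
q_symmetric = {(z, a): 0.0 for z, a in product(range(N_Z), range(N_ACTIONS))}

# Asymmetric critic Q(s, z, a): one entry per (state, agent state, action).
q_asymmetric = {
    (s, z, a): 0.0
    for s, z, a in product(range(N_STATES), range(N_Z), range(N_ACTIONS))
}


def symmetric_value(z, a):
    """Q(z, a): needs only quantities available at execution."""
    return q_symmetric[(z, a)]


def asymmetric_value(s, z, a):
    """Q(s, z, a): needs the state s, so it is usable during training only."""
    return q_asymmetric[(s, z, a)]
```

Only the critic grows with the state; the policy table keeps the same shape in both settings.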

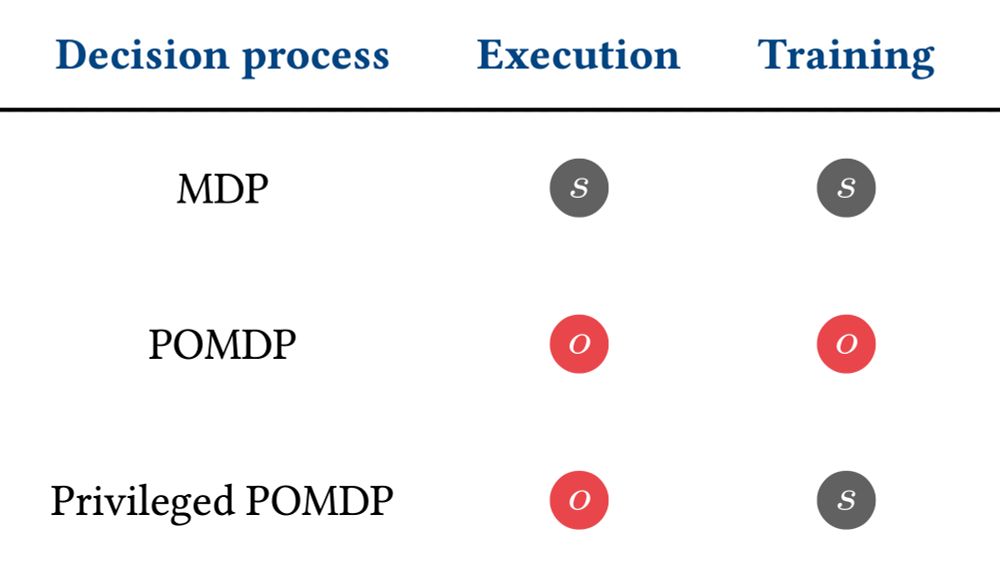

In a privileged POMDP, the state can be used to learn a policy π(a|z) faster.

But note that the state cannot be an input of the policy, since it is not available at execution.

- MDP: full state observability (too optimistic),

- POMDP: partial state observability (too pessimistic).

Instead, asymmetric RL methods assume:

- Privileged POMDP: asymmetric state observability (full at training, partial at execution).
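As a rough sketch of what this assumption means for an environment interface, the toy class below exposes the state during training and hides it at execution. The class, its dynamics, and its field names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Transition:
    observation: int
    reward: float
    state: Optional[int]  # privileged information, None at execution


class ToyPrivilegedPOMDP:
    """Toy environment with four states and a parity-only observation."""

    def __init__(self, training: bool):
        self.training = training
        self._state = 0

    def step(self, action: int) -> Transition:
        # Hypothetical dynamics: the action shifts the state modulo 4.
        self._state = (self._state + action) % 4
        observation = self._state % 2  # partial observability
        reward = 1.0 if self._state == 3 else 0.0
        # The state is revealed only when training.
        state = self._state if self.training else None
        return Transition(observation, reward, state)


train_step = ToyPrivilegedPOMDP(training=True).step(1)
exec_step = ToyPrivilegedPOMDP(training=False).step(1)
```

Here `train_step.state` holds the true state, while `exec_step.state` is `None`, so anything deployed at execution can rely on observations only.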
