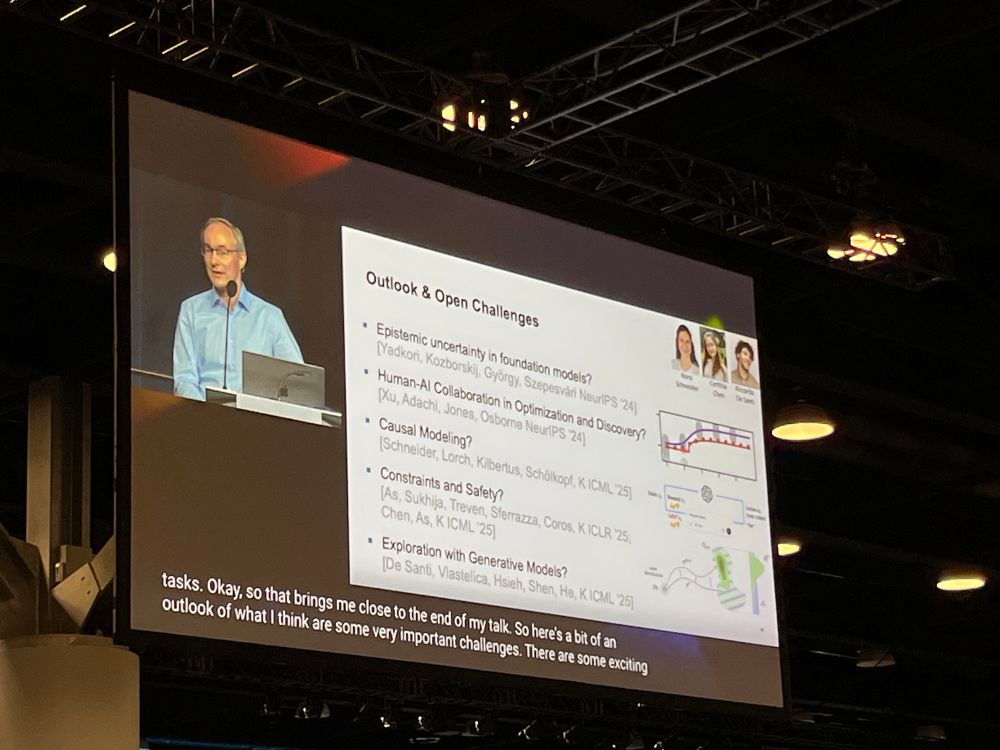

- A Theoretical Justification for AsymAC Algorithms.

- Informed AsymAC: Theoretical Insights and Open Questions.

- Behind the Myth of Exploration in Policy Gradients.

- Off-Policy MaxEntRL with Future State-Action Visitation Measures.

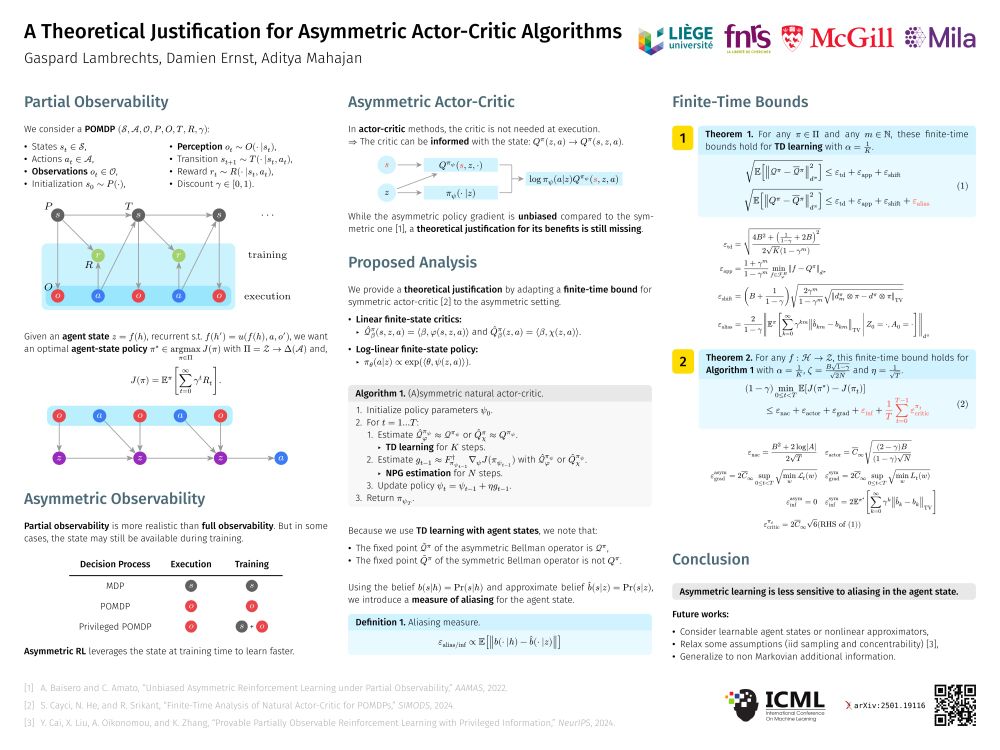

- A Theoretical Justification for AsymAC Algorithms.

- Informed AsymAC: Theoretical Insights and Open Questions.

- Behind the Myth of Exploration in Policy Gradients.

- Off-Policy MaxEntRL with Future State-Action Visitation Measures.

In case you could not attend, feel free to check it out 👉

youtu.be/RCA22JWiiY8?...

📝 Paper: hdl.handle.net/2268/326874

💻 Blog: damien-ernst.be/2025/06/10/a...

Next week we will present our paper “Enhancing Diversity in Parallel Agents: A Maximum Exploration Story” with V. De Paola, @mircomutti.bsky.social and M. Restelli at @icmlconf.bsky.social!

(1/N)

Slides: hdl.handle.net/2268/333931.

You can find it here: hdl.handle.net/2268/328700 (manuscript and slides).

Many thanks to my advisors and to the jury members.

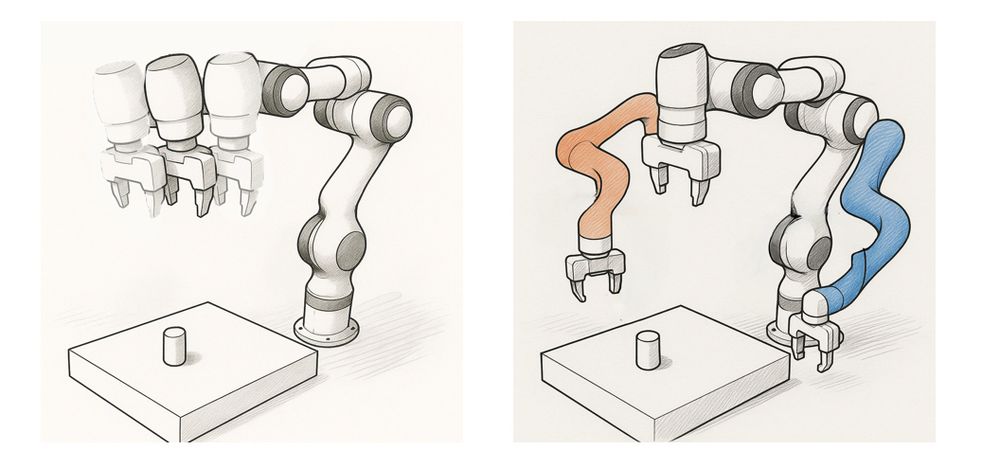

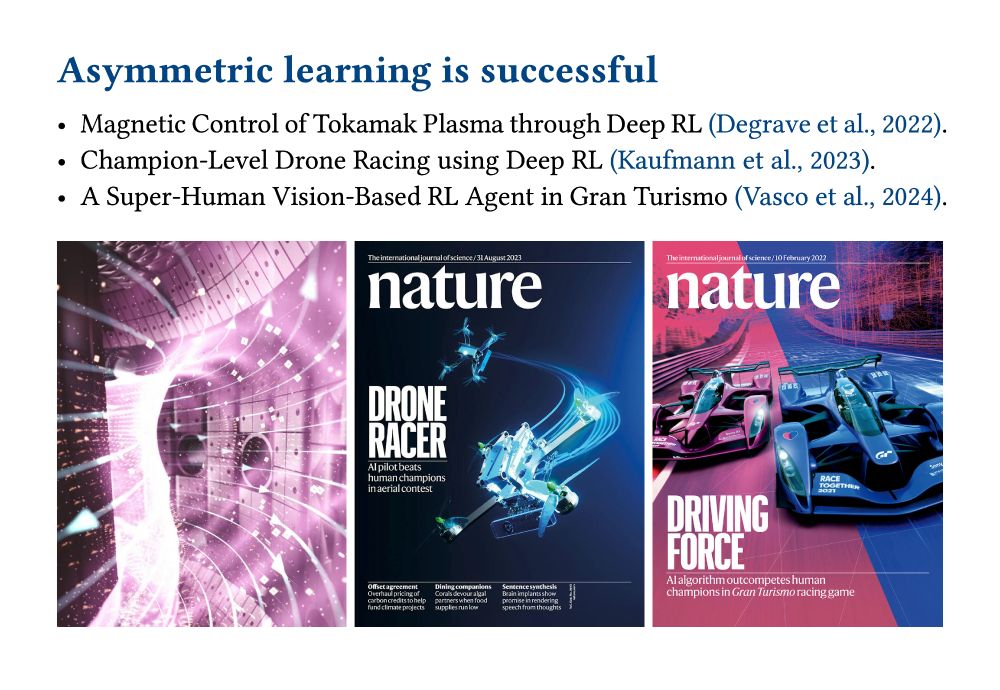

Never heard of "asymmetric actor-critic" algorithms? Yet, many successful #RL applications use them (see image).

But these algorithms are not fully understood. Below, we provide some insights.

Submit your work to EWRL: we are now accepting papers until June 3rd AoE.

This year, we're also offering a fast track for papers accepted at other conferences ⚡

Check the website for all the details: euro-workshop-on-reinforcement-learning.github.io/ewrl18/

While I would not advise using Typst for papers yet, its markdown-like syntax lets you create slides in a few minutes while still supporting what we love most about LaTeX: equations.

github.com/glambrechts/...

Link 📑 arxiv.org/abs/2412.06655

#RL #MaxEntRL #Exploration

www.benerl.org/seminar-seri...

I would have loved to hear Benjamin Eysenbach, Chris Lu and Edward Hu... Next one is on December 19th.

If you're also interested in:

- sequence models,

- world models,

- representation learning,

- asymmetric learning,

- generalization,

I'd love to connect, chat, or check out your work! Feel free to reply or message me.