📝 Paper: hdl.handle.net/2268/326874

💻 Blog: damien-ernst.be/2025/06/10/a...

Slides: hdl.handle.net/2268/333931.

You can find it here: hdl.handle.net/2268/328700 (manuscript and slides).

Many thanks to my advisors and to the jury members.

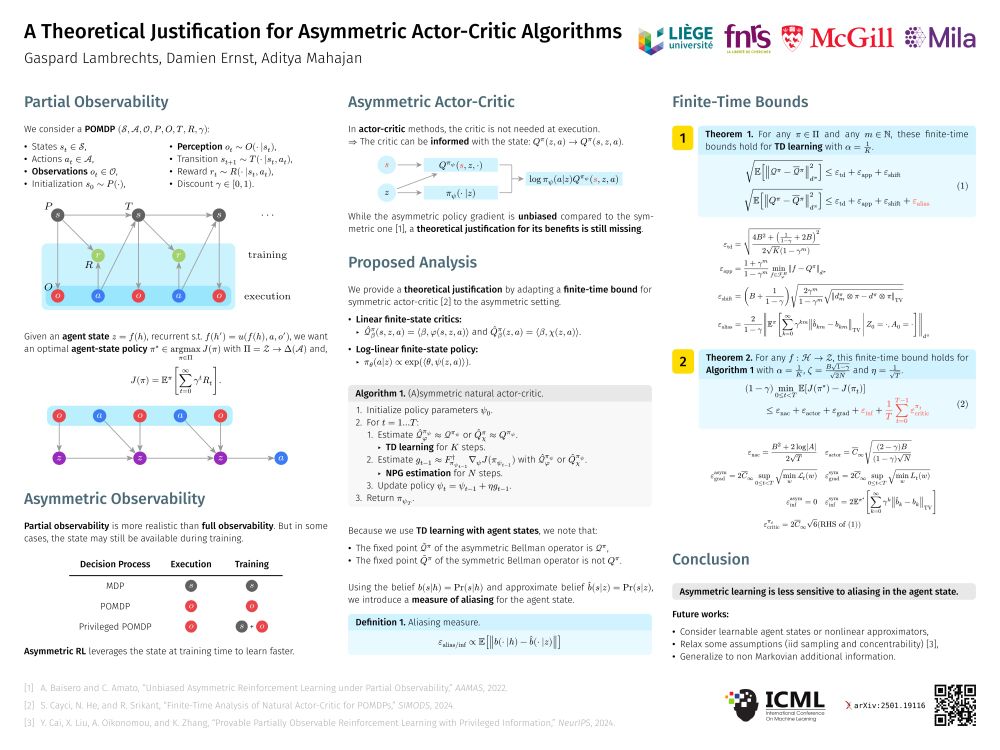

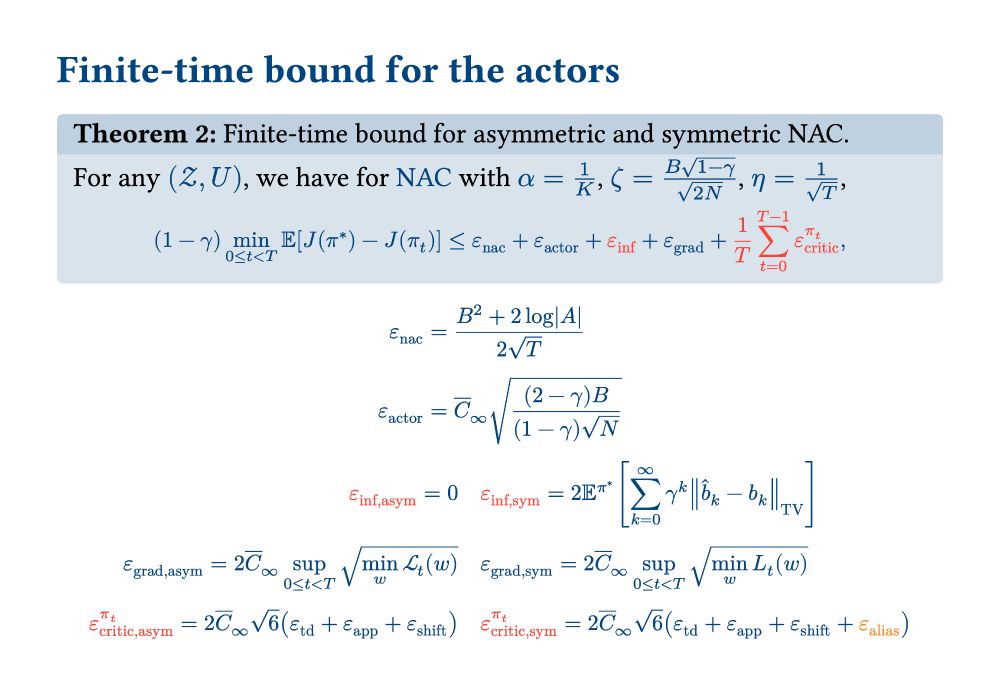

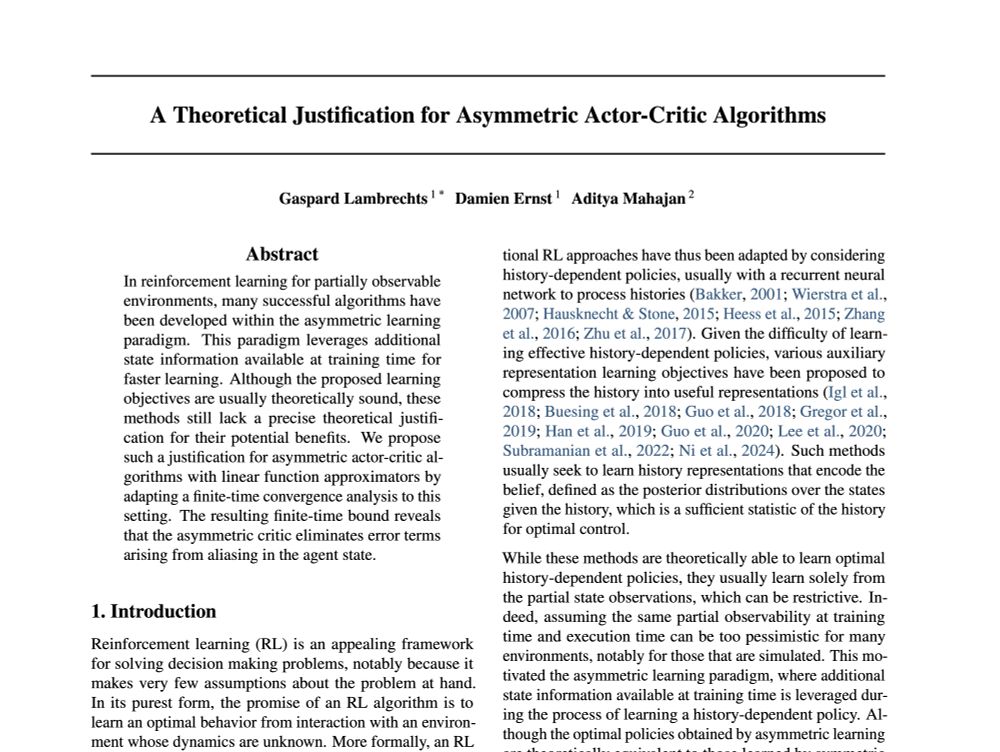

In addition to the average critic error, which is also present in the actor bound, the symmetric actor-critic algorithm suffers from an additional "inference term".

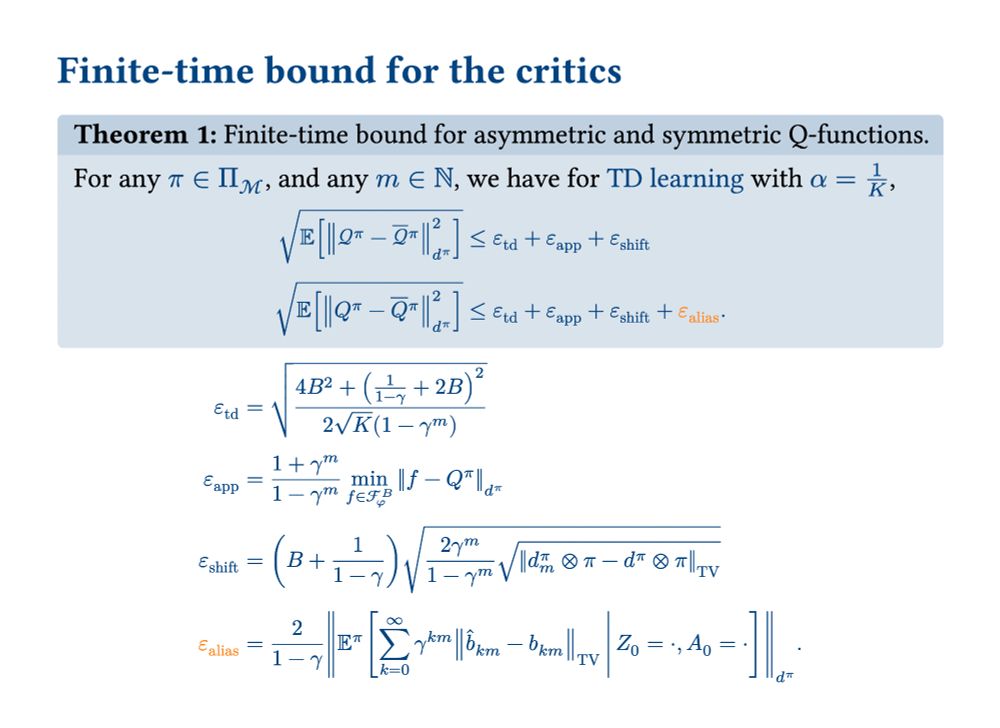

The symmetric temporal difference learning algorithm has an additional "aliasing term".
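
To make the idea of aliasing concrete, here is a hypothetical toy example (not the construction or the bound from the paper). It assumes a memoryless agent state z equal to the current observation and a discount of 1. Two hidden states with different returns are observed identically at the second step, so symmetric TD(0), which bootstraps on V(z), cannot tell them apart, while an asymmetric critic V(s, z) can.

```python
import random

# Hypothetical toy privileged POMDP (not from the paper), with gamma = 1.
# Step 0: the hidden state s0 is "A" or "B" (uniformly) and is observed as is.
# Step 1: the hidden state becomes "A1" or "B1", but both are observed as "C".
#         The episode then ends with reward 1 in "A1" and 0 in "B1".
NEXT = {"A": "A1", "B": "B1"}
OBS = {"A": "A", "B": "B", "A1": "C", "B1": "C"}
FINAL_REWARD = {"A1": 1.0, "B1": 0.0}


def td_update(table, key, target, alpha):
    """Tabular TD(0) step: move table[key] toward target."""
    old = table.get(key, 0.0)
    table[key] = old + alpha * (target - old)


def run_td0(num_episodes=20000, alpha=0.01, seed=0):
    rng = random.Random(seed)
    v_sym = {}   # symmetric critic V(z): agent state z = current observation
    v_asym = {}  # asymmetric critic V(s, z): also receives the hidden state
    for _ in range(num_episodes):
        s0 = rng.choice(["A", "B"])
        s1 = NEXT[s0]
        z0, z1 = OBS[s0], OBS[s1]
        # Transition 0 -> 1: reward 0, bootstrap on the current next-step estimate.
        td_update(v_sym, z0, 0.0 + v_sym.get(z1, 0.0), alpha)
        td_update(v_asym, (s0, z0), 0.0 + v_asym.get((s1, z1), 0.0), alpha)
        # Transition 1 -> end: terminal, so the target is the reward alone.
        td_update(v_sym, z1, FINAL_REWARD[s1], alpha)
        td_update(v_asym, (s1, z1), FINAL_REWARD[s1], alpha)
    return v_sym, v_asym


v_sym, v_asym = run_td0()
print(v_sym["A"], v_sym["B"])  # both close to 0.5, although the true returns are 1 and 0
print(round(v_asym[("A", "A")], 2), round(v_asym[("B", "B")], 2))  # 1.0 0.0
```

Bootstrapping on the aliased observation "C" drags both first-step estimates toward 0.5, whereas the returns actually obtained from "A" and "B" are 1 and 0.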

Is asymmetric learning really faster than symmetric learning?

In this paper, we provide theoretical evidence for this, based on an adapted finite-time analysis (Cayci et al., 2024).

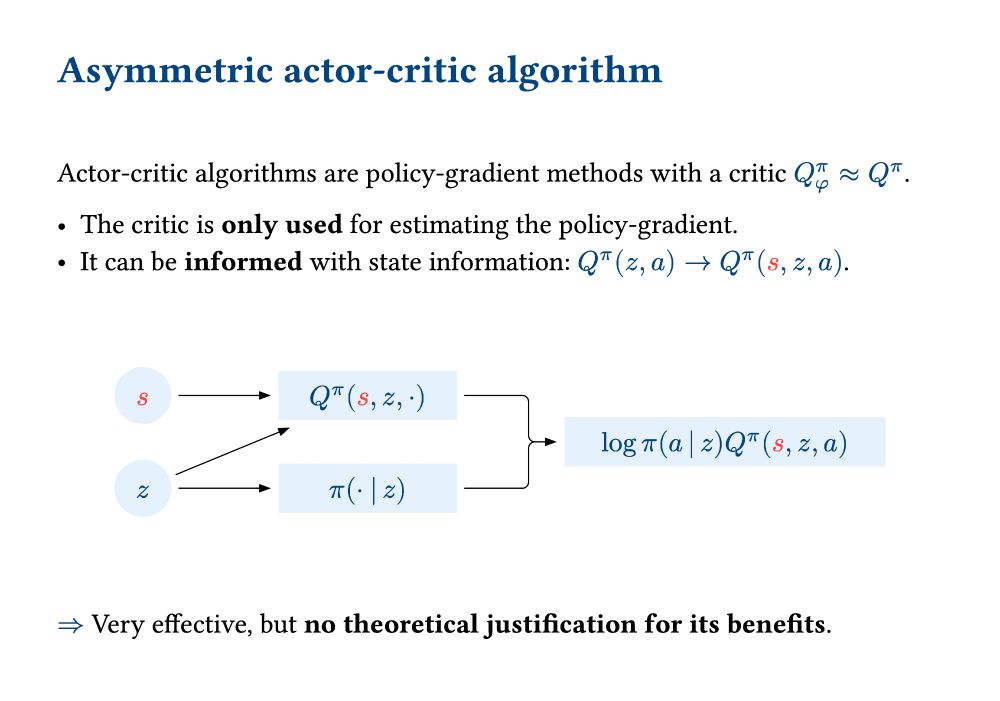

As a result, the state can be an input of the critic, which becomes Q(s, z, a) in the asymmetric setting instead of Q(z, a) in the symmetric setting.
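
A minimal tabular sketch of where the asymmetric critic enters an actor-critic update, assuming discrete states, agent states and actions, a softmax policy, and a SARSA-style critic. These are illustrative choices, not the algorithm analyzed in the paper, and the sizes and function names are hypothetical. The critic table is indexed by (s, z, a), while the policy only ever receives z, so it can still be executed without the state.

```python
import numpy as np

# Hypothetical sizes for a small tabular privileged POMDP.
N_STATES, N_AGENT_STATES, N_ACTIONS = 4, 2, 3

rng = np.random.default_rng(0)
theta = np.zeros((N_AGENT_STATES, N_ACTIONS))             # actor logits for pi(a | z)
q_asym = np.zeros((N_STATES, N_AGENT_STATES, N_ACTIONS))  # asymmetric critic Q(s, z, a)


def policy(z):
    """Softmax policy over actions; it only receives the agent state z."""
    logits = theta[z] - theta[z].max()  # shift for numerical stability
    p = np.exp(logits)
    return p / p.sum()


def act(z):
    """Sample an action at execution time, using z only (no state needed)."""
    return int(rng.choice(N_ACTIONS, p=policy(z)))


def actor_critic_step(s, z, a, r, next_sza=None, gamma=0.99,
                      lr_actor=0.1, lr_critic=0.5):
    """One asymmetric actor-critic update on a single training transition.

    next_sza is the next (state, agent state, action) triple, or None if the
    transition is terminal.
    """
    # Critic: SARSA-style TD(0) on the privileged input (s, z, a).
    bootstrap = 0.0 if next_sza is None else gamma * q_asym[next_sza]
    td_error = r + bootstrap - q_asym[s, z, a]
    q_asym[s, z, a] += lr_critic * td_error
    # Actor: for a softmax, grad of log pi(a|z) w.r.t. theta[z] is onehot(a) - pi(.|z).
    grad_log_pi = -policy(z)
    grad_log_pi[a] += 1.0
    theta[z] += lr_actor * q_asym[s, z, a] * grad_log_pi
    return td_error


# Usage: a single terminal transition seen during training, where s is available.
a0 = act(0)
actor_critic_step(s=1, z=0, a=a0, r=1.0)
print(policy(0))  # the probability of a0 is now slightly above 1/3
```

Replacing `q_asym` with a table indexed by `(z, a)` gives the symmetric variant. For brevity, the actor is weighted by Q directly rather than by an advantage; subtracting a baseline would reduce variance.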

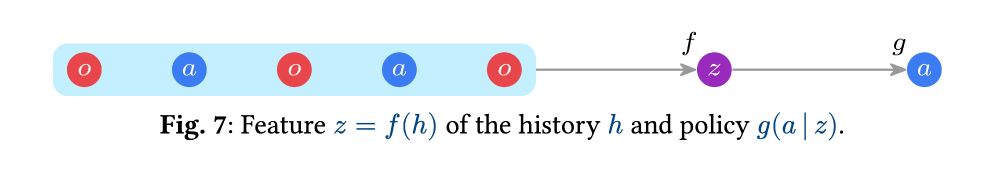

In a privileged POMDP, the state can be used to learn a policy π(a|z) faster.

But note that the state cannot be an input of the policy, since it is not available at execution.

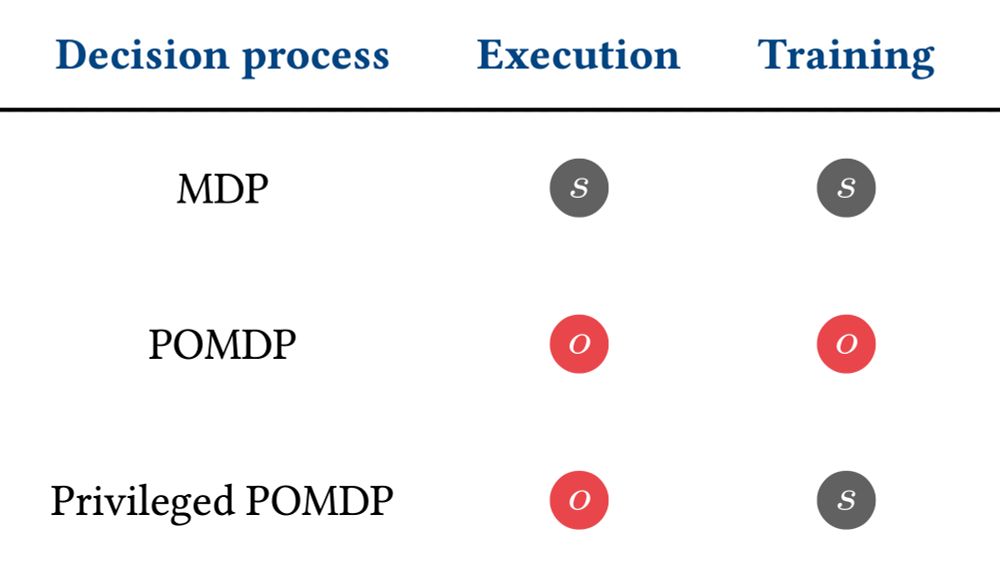

Standard RL methods assume either:

- MDP: full state observability (too optimistic),

- POMDP: partial state observability (too pessimistic).

Instead, asymmetric RL methods assume:

- Privileged POMDP: asymmetric state observability (full at training, partial at execution).
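
These assumptions differ only in what the agent receives, and when. A hypothetical toy environment (class name and dynamics invented for illustration) makes this explicit: with `training=True` it emits the state alongside the partial observation, and with `training=False` it withholds it.

```python
import random
from typing import Dict, Tuple


class ToyPrivilegedPOMDP:
    """Hypothetical toy environment illustrating asymmetric observability.

    The hidden state is a position in {0, ..., 4}; the observation only reveals
    whether the position is even or odd (partial observability). With
    training=True, the state is also returned (privileged information);
    with training=False, it is withheld.
    """

    def __init__(self, training: bool, seed: int = 0):
        self.training = training
        self.rng = random.Random(seed)
        self.state = 0

    def _emit(self) -> Dict[str, int]:
        out = {"observation": self.state % 2}
        if self.training:
            out["state"] = self.state  # only available at training time
        return out

    def reset(self) -> Dict[str, int]:
        self.state = self.rng.randrange(5)
        return self._emit()

    def step(self, action: int) -> Tuple[Dict[str, int], float]:
        # Action 1 moves right, any other action moves left (clipped to [0, 4]).
        delta = 1 if action == 1 else -1
        self.state = min(4, max(0, self.state + delta))
        reward = 1.0 if self.state == 4 else 0.0
        return self._emit(), reward


train_env = ToyPrivilegedPOMDP(training=True)
exec_env = ToyPrivilegedPOMDP(training=False)
print(sorted(train_env.reset()))  # ['observation', 'state']
print(sorted(exec_env.reset()))   # ['observation']
```

Returning the state in both modes would correspond to the MDP assumption, and never returning it to the POMDP assumption.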

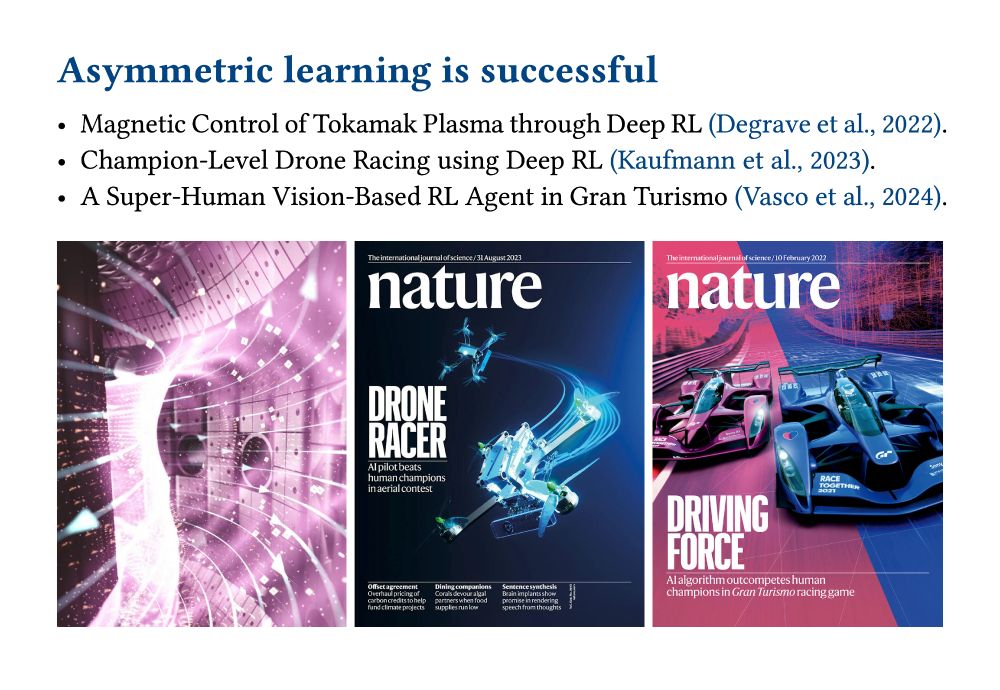

Never heard of "asymmetric actor-critic" algorithms? Yet, many successful #RL applications use them (see image).

But these algorithms are not fully understood. In this thread, we provide some insights.

While I would not advise using Typst for papers yet, its markdown-like syntax lets you create slides in a few minutes, and it still supports everything we love from LaTeX: equations.

github.com/glambrechts/...
