Kristina Gligoric

@gligoric.bsky.social

Assistant Professor of Computer Science @JohnsHopkins,

CS Postdoc @Stanford,

PhD @EPFL,

Computational Social Science, NLP, AI & Society

https://kristinagligoric.com/

Pinned

I'm recruiting multiple PhD students for Fall 2026 in Computer Science at @hopkinsengineer.bsky.social 🍂

Apply to work on AI for social sciences/human behavior, social NLP, and LLMs for real-world applied domains you're passionate about!

Learn more at kristinagligoric.com & help spread the word!

November 5, 2025 at 2:56 PM

Reposted by Kristina Gligoric

The Department of Computer Science is pleased to welcome nine new tenure-track faculty to its ranks this academic year! Featuring @anandbhattad.bsky.social, @uthsav.bsky.social, @gligoric.bsky.social, @murat-kocaoglu.bsky.social, @tiziano.bsky.social, and more:

Nine new tenure-track faculty join Johns Hopkins Computer Science

Their research spans social computing and human-computer interaction to the theoretical foundations and real-world applications of machine learning models.

www.cs.jhu.edu

October 29, 2025 at 1:43 PM

Reposted by Kristina Gligoric

My latest piece with @techpolicypress.bsky.social, on a key theme from @techimpactpolicy.bsky.social's Trust and Safety Research Conference. Includes @dwillner.bsky.social's keynote and presentations from @gligoric.bsky.social, @thejusticecollab.bsky.social's Matthew Katsaros, and more.

Platforms are accelerating the transition to AI for content moderation, laying off trust and safety workers and outsourced moderators in favor of automated systems, writes Tim Bernard. The practice raises a host of questions, some of which are now being studied both in universities and in industry.

Researchers Explore the Use of LLMs for Content Moderation | TechPolicy.Press

The subject was a stand-out theme at the Trust and Safety Research Conference, held last month at Stanford, writes Tim Bernard.

www.techpolicy.press

October 30, 2025 at 2:36 PM

Reposted by Kristina Gligoric

The debate over “LLMs as annotators” feels familiar: excitement, backlash, and anxiety about bad science. My take in a new blogpost is that LLMs don’t break measurement; they expose how fragile it already was.

doomscrollingbabel.manoel.xyz/p/labeling-d...

Labeling Data with Language Models: Trick or Treat?

Large language models are now labeling data for us.

doomscrollingbabel.manoel.xyz

October 25, 2025 at 6:29 PM

Reposted by Kristina Gligoric

Considering a PhD in NLP/Speech? 🤔

Need guidance with your application materials?

@jhuclsp is offering a student-run application mentoring program for prospective applicants from underrepresented backgrounds.

📝 Learn more & apply: forms.gle/PMWByc6J3vD...

📅 Deadline: Nov 20

October 21, 2025 at 5:36 PM

Reposted by Kristina Gligoric

As of June 2025, 66% of Americans have never used ChatGPT.

Our new position paper, Attention to Non-Adopters, explores why this matters: AI research is being shaped around adopters—leaving non-adopters’ needs, and key LLM research opportunities, behind.

arxiv.org/abs/2510.15951

October 21, 2025 at 5:12 PM

Reposted by Kristina Gligoric

Tutorial Day kicks off tomorrow! Which sessions are you going to attend? 📚 Check the lineup and share your picks. #ic2s2 #tutorials

July 20, 2025 at 9:43 AM

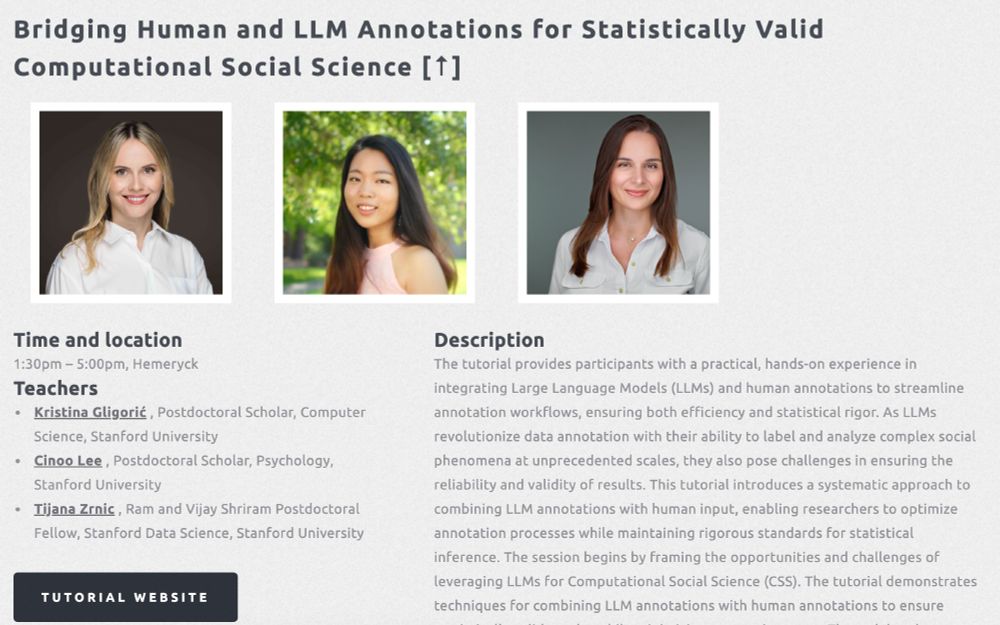

Excited to co-organize the Bridging Human and LLM Annotations for Social Science tutorial at #ic2s2 tomorrow!

Join us at 1:30pm CEST to learn how to combine Qualtrics+Prolific, MTurk, & LLMs for automated annotation and valid estimates.

Code&materials: github.com/kristinaglig...

July 21, 2025 at 12:20 AM

I'm excited to announce that I’ll be joining the Computer Science department at Johns Hopkins as an Assistant Professor this Fall! I’ll be working on large language models, computational social science, and AI & society—and will be recruiting PhD students. Apply to work with me!

May 30, 2025 at 3:56 PM

I'm at #NAACL2025 & would love to chat!

On Thu, I'll be presenting our paper on LLM annotations for valid estimates in social science (oral talk @ 4pm, CSS session)

📝 Paper: aclanthology.org/2025.naacl-l...

*New* 🧪 tutorial (LLMs + Prolific data collection): github.com/kristinaglig...

April 29, 2025 at 9:48 PM

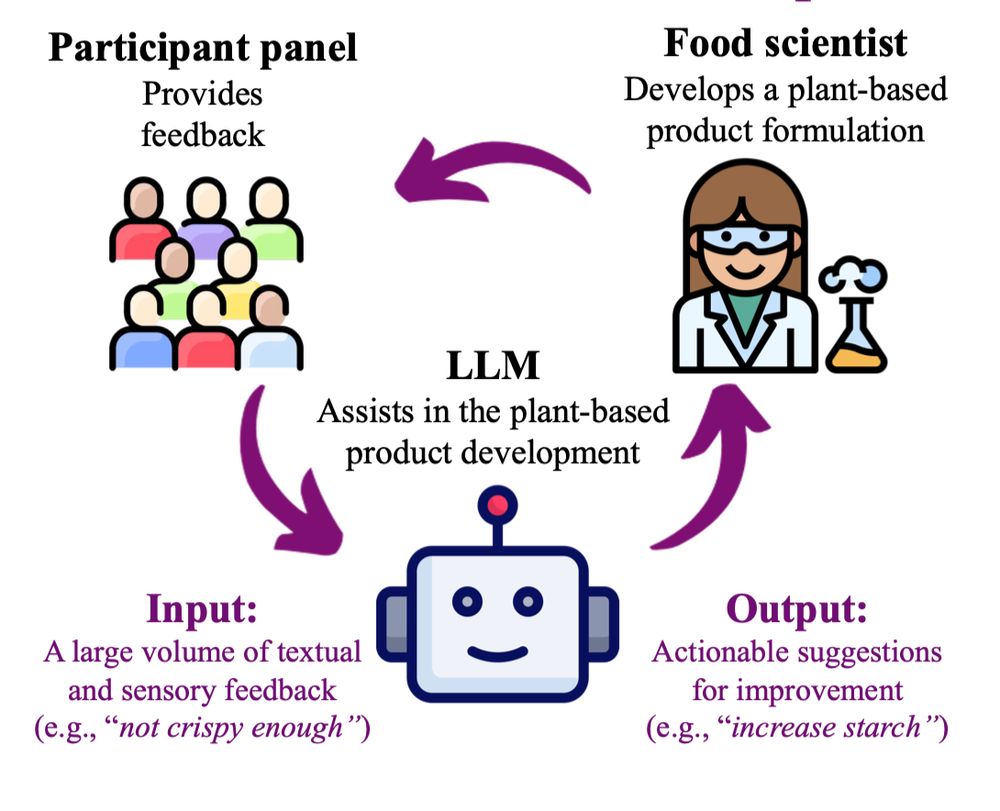

🤖🍲 What can LLMs do for sustainable food? 🤖🍲

We collaborated with domain experts (food scientists and chefs) to define a typology of food design and prediction tasks.

LLMs can assist in food and menu development, saving food scientists' time and reducing emissions!

URL: bit.ly/3ERJbUV

February 17, 2025 at 6:53 PM

Reposted by Kristina Gligoric

Our new piece in Nature Machine Intelligence: LLMs are replacing human participants, but can they simulate diverse respondents? Surveys use representative sampling for a reason, and our work shows how LLM training prevents accurate simulation of different human identities.

February 17, 2025 at 4:36 PM

Reposted by Kristina Gligoric

Research in @science.org showing structures in whale song thought to be uniquely human! Why? We think cultural learning!

Led by @inbalarnon.bsky.social with @simonkirby.bsky.social @ellengarland.bsky.social @clairenea.bsky.social @emma-carroll.bsky.social and me!

www.science.org/doi/10.1126/...

February 6, 2025 at 9:13 PM

Reposted by Kristina Gligoric

Severance is back. Inject that show straight into my veins

January 18, 2025 at 1:30 AM

Reposted by Kristina Gligoric

Whoa! When a large language of life model generates a protein equivalent to ~500 million years of evolution.

@science.org

science.org/doi/10.1126/...

Simulating 500 million years of evolution with a language model

More than three billion years of evolution have produced an image of biology encoded into the space of natural proteins. Here we show that language models trained at scale on evolutionary data can gen...

science.org

January 16, 2025 at 7:01 PM

Reposted by Kristina Gligoric

Look who I saw on my morning walk. Western Blue Bird, San Francisco

January 12, 2025 at 8:43 PM

Reposted by Kristina Gligoric

1/🚨 New paper alert! 🚨 In what is likely the most fun study of my PhD, I am excited to share new work with @natematias.bsky.social about what makes news headlines most effective at grabbing reader attention. Let’s dive in to the science behind clickbait 🧵👇

January 7, 2025 at 9:27 PM

Reposted by Kristina Gligoric

This was such an interesting exploratory study — in particular, what struck me was the racial divide in neighbourhoods that have more information-seeking behavior vs more advertising posts.

Fascinating look at the demographic correlates of neighborhoods and their online communities on Nextdoor by Tao & @mariannealq.bsky.social doi.org/10.1145/3678...

December 31, 2024 at 1:38 PM

Reposted by Kristina Gligoric

I am about three quarters of the way through this excellent podcast series by @paulmmcooper.bsky.social which looks "at the collapse of a different civilization each episode," and asks "What did they have in common? Why did they fall? And what did it feel like to watch it happen?"

1/2

Fall of Civilizations Podcast

A podcast that explores the collapse of different societies through history.

fallofcivilizationspodcast.com

December 16, 2024 at 3:50 PM

Reposted by Kristina Gligoric

the crucial first step in fighting anthropomorphization of DL systems is to find an easier to write and pronounce word than anthropomorphizing.

December 13, 2024 at 9:13 PM

Reposted by Kristina Gligoric

Natural Language Processing—artificial intelligence that uses human language—has been on a roll lately. You’ve probably noticed! So the Stanford NLP Group has been growing, and diversifying into lots of new topics, including agents, language model programs, and socially aware #NLP.

nlp.stanford.edu

December 4, 2024 at 5:14 PM

Check out our new paper on social determinants of on-campus food choice, now out in @pnasnexus.org!

academic.oup.com/pnasnexus/ar...

December 4, 2024 at 3:30 PM

There's always been something that felt misguided about the overemphasis on p-hacking, reporting requirements, and restricted data use--though I couldn’t quite put a finger on it. This slide captures it!

This slide is from a talk I gave at an OSF symposium a few years back. It's still relevant, and I still think we should have prioritized, and should still prioritize, the set of issues on the right over those on the left.

November 26, 2024 at 6:48 PM

Reposted by Kristina Gligoric

Curious to hear how the LLM feedback played for @iclr-conf.bsky.social reviewers and authors. Did you find it helpful? Did it change anything meaningful? Or do you have a sense it's just "lipstick on a pig" in the sense of more verbose, but still crappy review?

November 25, 2024 at 2:18 PM

Reposted by Kristina Gligoric

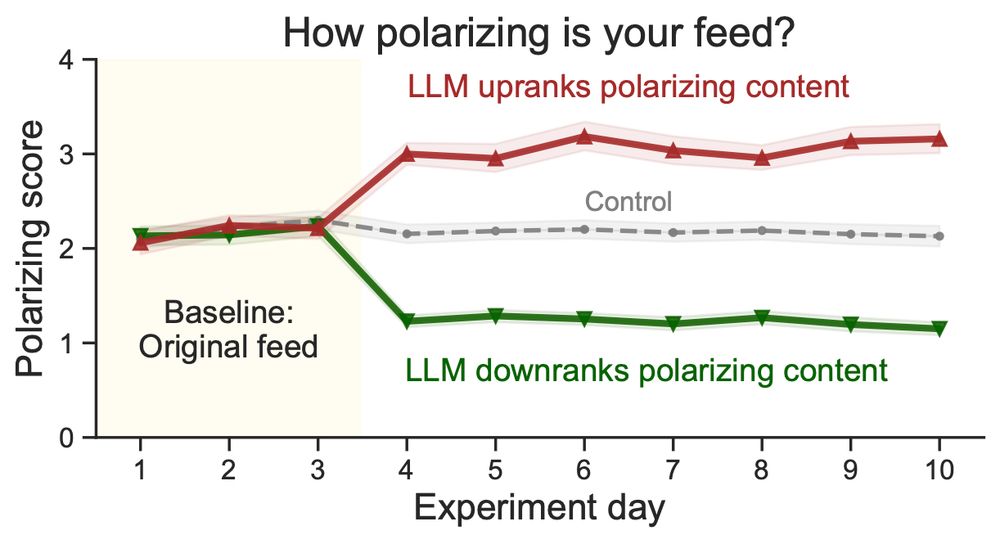

New paper: Do social media algorithms shape affective polarization?

We ran a field experiment on X/Twitter (N=1,256) using LLMs to rerank content in real-time, adjusting exposure to polarizing posts. Result: Algorithmic ranking impacts feelings toward the political outgroup! 🧵⬇️

November 25, 2024 at 8:32 PM
