Angelina Wang

@angelinawang.bsky.social

Pinned

Have you ever felt that AI fairness was too strict, enforcing fairness when it didn’t seem necessary? How about too narrow, missing a wide range of important harms? We argue that the way to address both of these critiques is to discriminate more 🧵

Reposted by Angelina Wang

Started a thread in the other place and bringing it over here - I really think we should be more vocal about the opportunities that lie at the intersection of these two options!

So I'm starting a live thread of new roles as I become aware of them - feel free to add / extend / share:

Life situations are bleak right now for a lot of people. In tech, the "Venn Diagram" of (1) positive work and (2) making enough money to support your family is increasingly non-overlapping. We all do what we can.

This image has been living in my mind rent-free for months.

October 29, 2025 at 2:25 AM

Cornell (NYC and Ithaca) is recruiting AI postdocs, apply by Nov 20, 2025! If you're interested in working with me on technical approaches to responsible AI (e.g., personalization, fairness), please email me.

academicjobsonline.org/ajo/jobs/30971

Cornell University, Empire AI Fellows Program

Job #AJO30971, Postdoctoral Fellow, Empire AI Fellows Program, Cornell University, New York, New York, US

academicjobsonline.org

October 28, 2025 at 6:19 PM

Reposted by Angelina Wang

Can AI simulations of human research participants advance cognitive science? In @cp-trendscognsci.bsky.social, @lmesseri.bsky.social & I analyze this vision. We show how “AI Surrogates” entrench practices that limit the generalizability of cognitive science while aspiring to do the opposite. 1/

AI Surrogates and illusions of generalizability in cognitive science

Recent advances in artificial intelligence (AI) have generated enthusiasm for using AI simulations of human research participants to generate new know…

www.sciencedirect.com

October 21, 2025 at 8:24 PM

Grateful to win Best Paper at ACL for our work on Fairness through Difference Awareness with my amazing collaborators!! Check out the paper for why we think fairness has both gone too far, and at the same time, not far enough aclanthology.org/2025.acl-lon...

July 30, 2025 at 3:34 PM

Reposted by Angelina Wang

@angelinawang.bsky.social presents the "Fairness through Difference Awareness" benchmark. Fairness tests require no discrimination...

but the law supports many forms of discrimination! E.g., synagogues should hire Jewish rabbis. LLMs often get this wrong aclanthology.org/2025.acl-lon... #ACL2025NLP

July 30, 2025 at 7:26 AM

Reposted by Angelina Wang

Was beyond disappointed to see this in the AI Action Plan. Messing with the NIST RMF (which many private & public institutions currently rely on) feels like a cheap shot

July 24, 2025 at 2:25 PM

Reposted by Angelina Wang

We have to talk about rigor in AI work and what it should entail. The reality is that impoverished notions of rigor do not only lead to some one-off undesirable outcomes but can have a deeply formative impact on the scientific integrity and quality of both AI research and practice 1/

June 18, 2025 at 11:48 AM

Reposted by Angelina Wang

Alright, people, let's be honest: GenAI systems are everywhere, and figuring out whether they're any good is a total mess. Should we use them? Where? How? Do they need a total overhaul?

(1/6)

June 15, 2025 at 12:20 AM

Have you ever felt that AI fairness was too strict, enforcing fairness when it didn’t seem necessary? How about too narrow, missing a wide range of important harms? We argue that the way to address both of these critiques is to discriminate more 🧵

June 2, 2025 at 4:38 PM

Reposted by Angelina Wang

The US government recently flagged my scientific grant in its "woke DEI database". Many people have asked me what I will do.

My answer today in Nature.

We will not be cowed. We will keep using AI to build a fairer, healthier world.

www.nature.com/articles/d41...

My ‘woke DEI’ grant has been flagged for scrutiny. Where do I go from here?

My work in making artificial intelligence fair has been noticed by US officials intent on ending ‘class warfare propaganda’.

www.nature.com

April 25, 2025 at 5:19 PM

I've recently put together a "Fairness FAQ": tinyurl.com/fairness-faq. If you work in non-fairness ML and you've heard about fairness, perhaps you've wondered things like what the best definitions of fairness are, and whether we can train algorithms that optimize for it.

March 17, 2025 at 2:39 PM

Reposted by Angelina Wang

*Please repost* @sjgreenwood.bsky.social and I just launched a new personalized feed (*please pin*) that we hope will become a "must use" for #academicsky. The feed shows posts about papers filtered by *your* follower network. It's become my default Bluesky experience bsky.app/profile/pape...

March 10, 2025 at 6:14 PM

Reposted by Angelina Wang

I am excited to announce that I will join the University of Zurich as an assistant professor in August this year! I am looking for PhD students and postdocs starting from the fall.

My research interests include optimization, federated learning, machine learning, privacy, and unlearning.

March 6, 2025 at 2:17 AM

Reposted by Angelina Wang

Cutting $880 billion from Medicaid is going to have a lot of devastating consequences for a lot of people

February 26, 2025 at 3:11 AM

Reposted by Angelina Wang

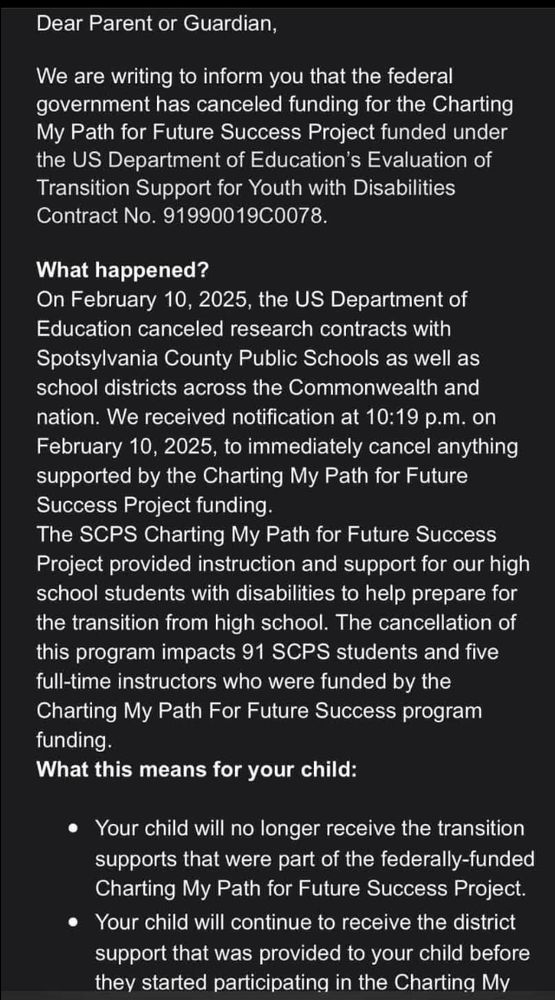

the richest man in the world has decided that your kids don't deserve special education programs

February 16, 2025 at 6:14 PM

Our new piece in Nature Machine Intelligence: LLMs are replacing human participants, but can they simulate diverse respondents? Surveys use representative sampling for a reason, and our work shows how LLM training prevents accurate simulation of different human identities.

February 17, 2025 at 4:36 PM

Reposted by Angelina Wang

📢📢 Introducing the 1st workshop on Sociotechnical AI Governance at CHI’25 (STAIG@CHI'25)! Join us to build a community to tackle AI governance through a sociotechnical lens and drive actionable strategies.

🌐 Website: chi-staig.github.io

🗓️ Submit your work by: Feb 17, 2025

December 23, 2024 at 6:47 PM

Reposted by Angelina Wang

November 14, 2024 at 5:16 PM

There are numerous leaderboards for AI capabilities and risks, for example fairness. In new work, we argue that leaderboards are misleading when the determination of concepts like “fairness” is always contextual. Instead, we should use benchmark suites.

November 11, 2024 at 9:02 PM
