Join us for connections, support, mentorship, etc.

Apply by February 24!

facctconference.org/2026/callfor...

We are broadly looking for candidates interested in perceptions, evaluation, uses, and impacts of AI!

#FAccT #FAccT2025 #CHI2025 #NeurIPS2025 #ACL2025

@neuripsconf.bsky.social -- this is my first time at #NeurIPS, and I'm looking forward to seeing folks and presenting our paper on Rigor in AI. Do find me if you want to chat about rigor in AI, anthropomorphic AI, or evaluations #NeurIPS2025

Apply to Wisconsin CS to research

- Societal impact of AI

- NLP ←→ CSS and cultural analytics

- Computational sociolinguistics

- Human-AI interaction

- Culturally competent and inclusive NLP

with me!

lucy3.github.io/prospective-...

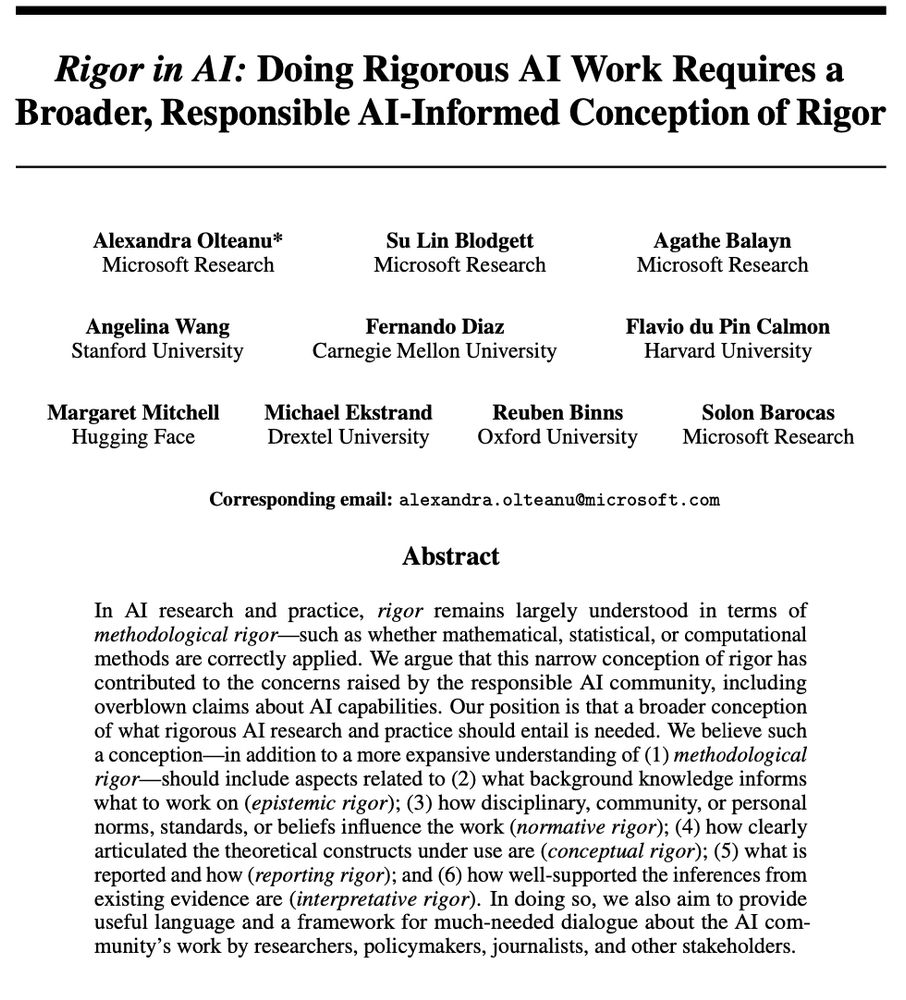

TL;DR Impoverished notions of rigor can have a formative impact on AI work. We argue for a broader conception of what rigorous work should entail & go beyond methodological issues to include epistemic, normative, conceptual, reporting & interpretative considerations

www.arxiv.org/abs/2506.14652

@dggoldst.bsky.social @jakehofman.bsky.social www.microsoft.com/en-us/resear...

1. Practice, and practice with co-authors who are better writers than you. Observe how they make edits and copy them.

(1/n)

Congratulations to all the authors of the three best papers and three honorable mention papers.

Be sure to check out their presentations at the conference next week!

facct-blog.github.io/2025-06-20/b...