Anon feedback: https://admonymous.co/giffmana

📍 Zürich, Suisse 🔗 http://lucasb.eyer.be

Anyways, someone noticing that DeepSeek refuses to answer *anything* about Xi Jinping, even the question of whether he exists at all, prompted me to write a short snippet on safety fine-tuning: lb.eyer.be/s/safety-sft...

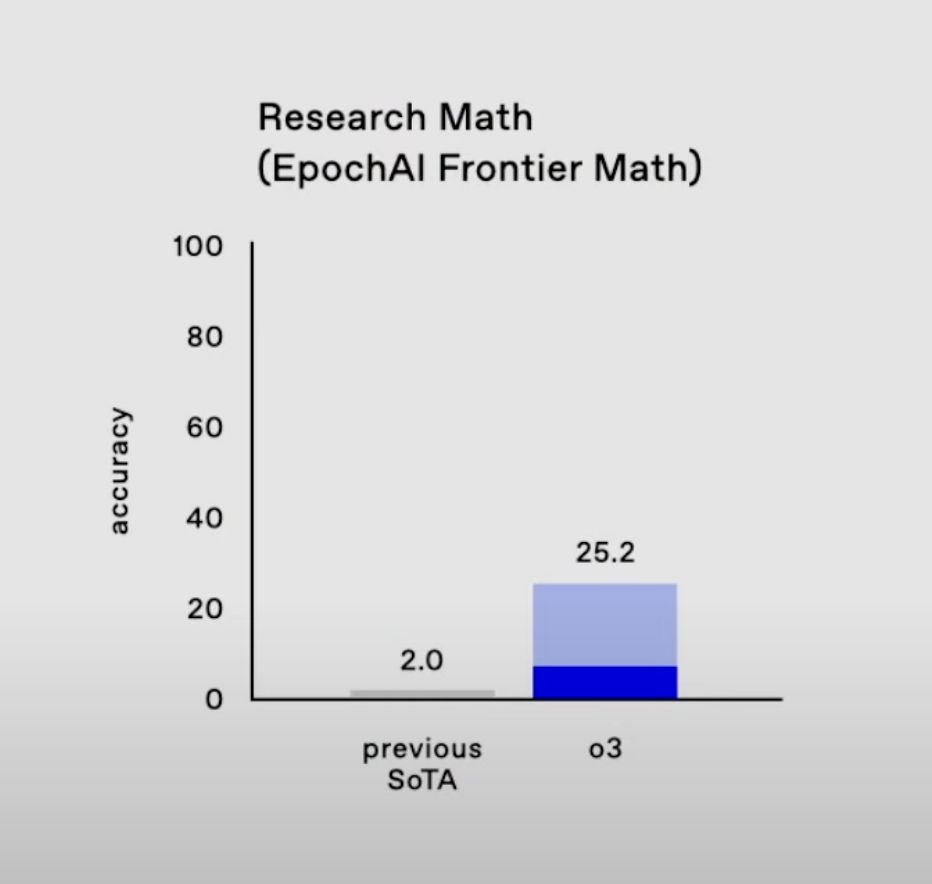

RIP to people who say any of "progress is done," "scale is done," or "LLMs can't reason"

2024 was awesome. I love my job.

Blogpost: lb.eyer.be/s/residuals....

Things are different here: this guy is alone, chonky, and not scared at all. I was more scared of him towards the end lol.

Also look at that … industrialization

📽️ Watch here: www.youtube.com/watch?v=bMXq...

Big thanks to @giffmana.ai for this excellent content! 🙌

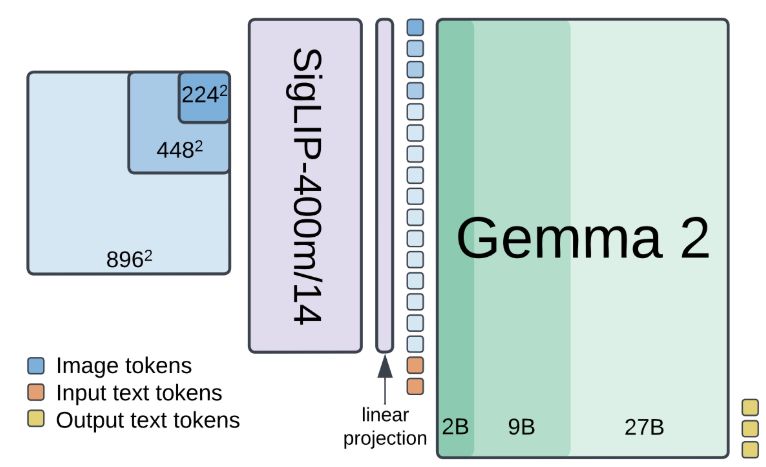

It’s also a perfect transition: this v2 was carried a lot more by @andreaspsteiner.bsky.social, André, and Michael than by us.

Crazy new SOTA tasks! Interesting resolution vs. LLM size study! Better OCR! Less hallucination!

1/7

What a fantastic win for the European AI landscape 🇪🇺

Congrats to @giffmana.ai @kolesnikov.ch @xzhai.bsky.social for that move, and to OpenAI for making these hires!

Excited about this new journey! 🚀

Quick FAQ thread...

After 7 amazing years at Google Brain/DM, I am joining OpenAI. Together with @xzhai.bsky.social and @giffmana.ai, we will establish the OpenAI Zurich office. Proud of our past work and looking forward to the future.

@mtschannen.bsky.social @asusanopinto.bsky.social

@kolesnikov.ch

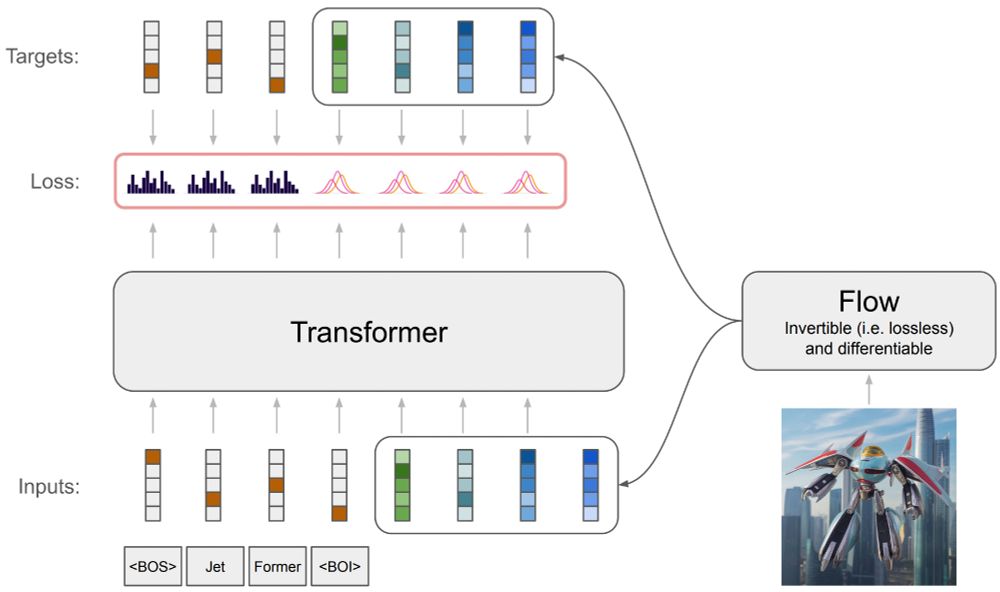

tl;dr: VQGAN quality w/o VQGAN, but optimizing pixel tokens with NLL. Also some normalizing flow magic, which I have to read up on

arxiv.org/abs/2411.19722

* this time the « we » is really just me, but hey, academic we 🙃

However, it's criminally undocumented. I tried using it outside Google to fine-tune PaliGemma and SigLIP on GPUs, and wrote a tutorial: lb.eyer.be/a/bv_tuto.html

2022: Replace every GAN with diffusion models

2023: Replace every NeRF with 3DGS

2024: Replace every diffusion model with Flow Matching

2025: ???

Typically, you'd train a VQ-VAE/GAN tokenizer first, and then use its tokens for your LLM/DiT/... But we all know eventually end-to-end wins over pipelines.

With flow models, you can actually learn pixel-LLM-pixel end-to-end!

We have been pondering this over the summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/

Alternative title: why I decided to stop working on tracking.

Curious about others' thoughts on this.

lb.eyer.be/s/cv-ethics....

not on the beach, but as you may know I like cows in "out of distribution" places and poses.

huggingface.co/spaces/big-v...

The small/fast/mini chatbots all failed miserably

The large ones work great (except Gemini).

Also:

1. I really like C3.6 giving only the answer and asking whether to explain it

2. Wild that Chat understood the calls and added comments!
