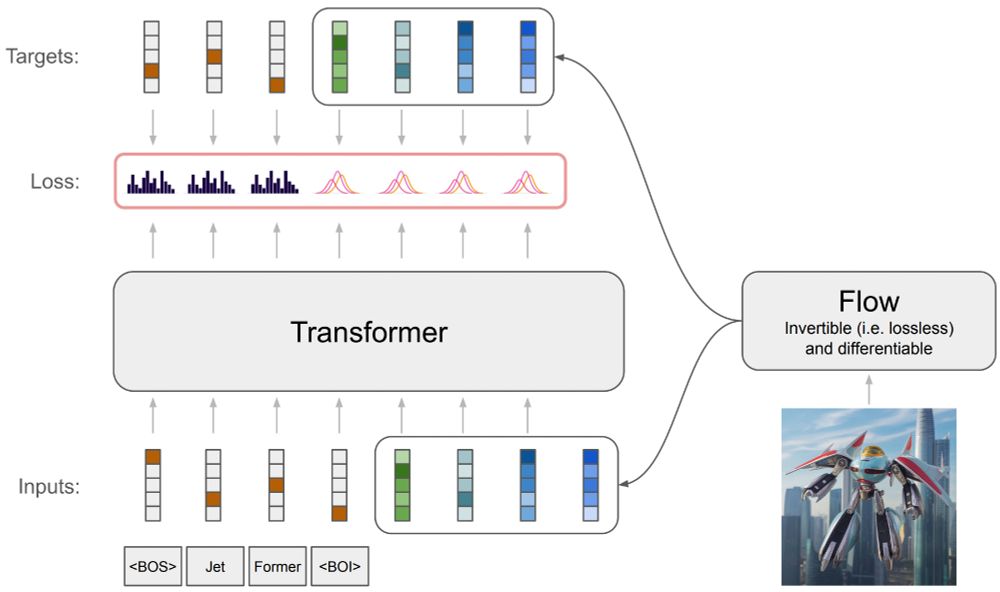

We have been pondering this during summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/

The key technical breakthrough here is that we can control joints and fingertips of the robot **without joint encoders**. All we need here is self-supervised data collection and learning.

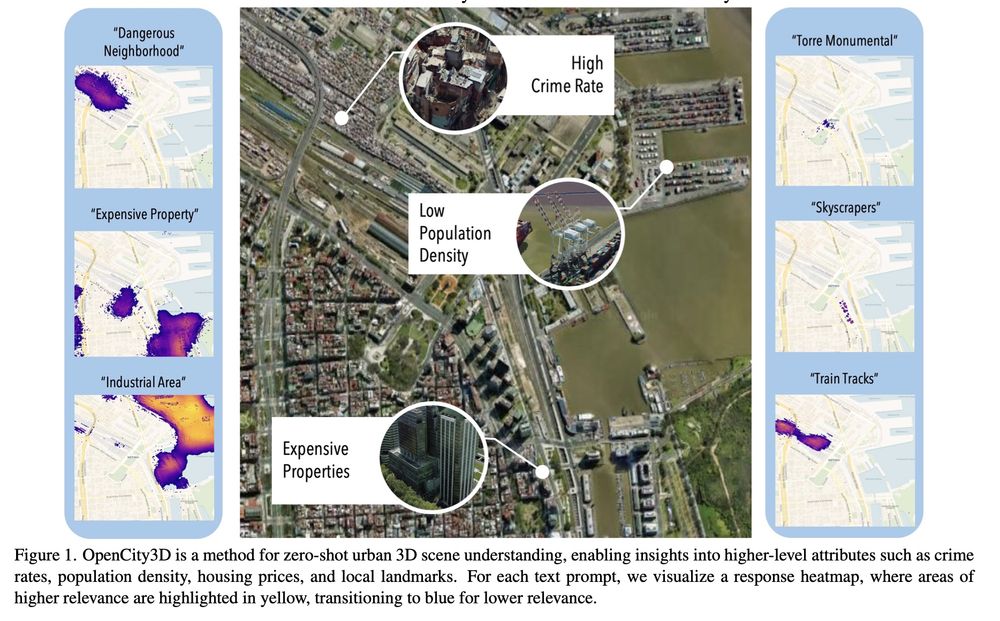

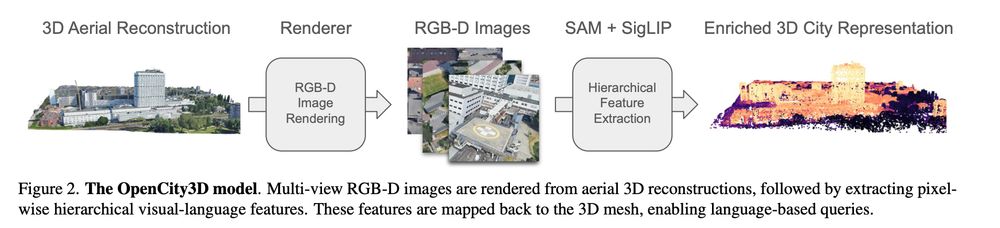

Valentin Bieri, Marco Zamboni, Nicolas S. Blumer, Qingxuan Chen, Francis Engelmann

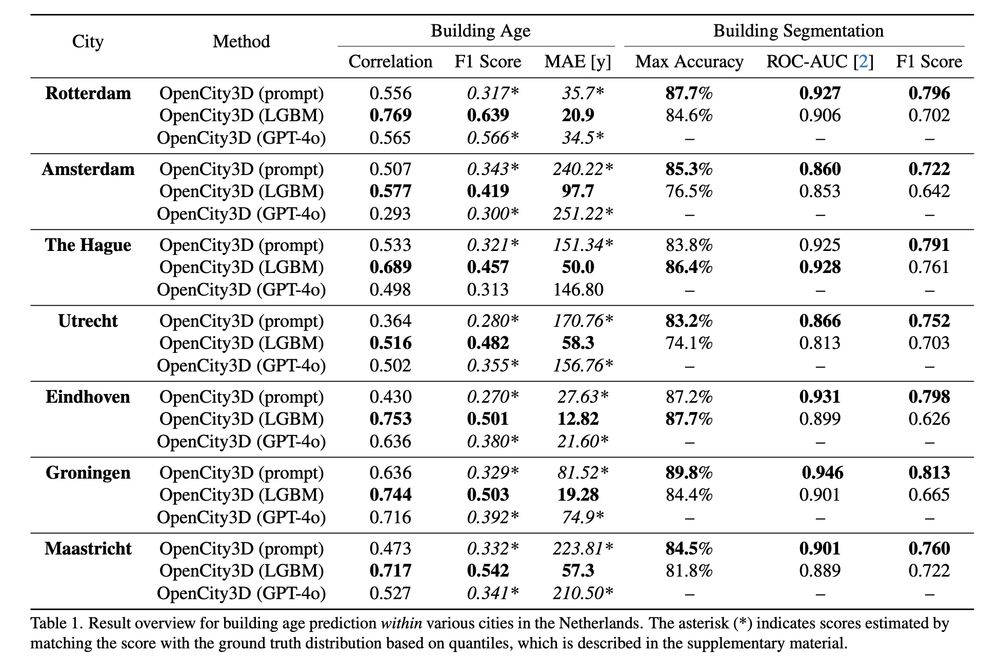

tl;dr: if you have aerial 3D reconstruction, use SigLIP to be happy.

arxiv.org/abs/2503.16776

By Du et al.

arxiv.org/abs/2502.13685

TLDR: combine multiple RNNs with a routing/gating network.

Evaluated on language tasks. Seems to outperform transformers? Would have loved to see comparisons with SSMs and xLSTM.
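For intuition, here is a minimal sketch of the TLDR's idea: several RNNs run side by side and a gating network decides how much each one contributes at every step. This is an illustration under assumptions, not Du et al.'s architecture. It assumes plain Elman RNN experts, dense softmax gating computed from the current input only, and random untrained weights. The names `RNNExpert` and `GatedRNNMixture` are hypothetical.

```python
import math
import random


def matvec(m, v):
    # Matrix (list of rows) times vector.
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(z):
    # Numerically stable softmax over a list of scores.
    top = max(z)
    e = [math.exp(x - top) for x in z]
    s = sum(e)
    return [x / s for x in e]


class RNNExpert:
    """Hypothetical expert: an Elman cell, h_t = tanh(W_x x_t + W_h h_{t-1})."""

    def __init__(self, d_in, d_hid, rng):
        self.w_x = [[rng.gauss(0, 0.5) for _ in range(d_in)] for _ in range(d_hid)]
        self.w_h = [[rng.gauss(0, 0.5) for _ in range(d_hid)] for _ in range(d_hid)]

    def step(self, x, h):
        pre = [a + b for a, b in zip(matvec(self.w_x, x), matvec(self.w_h, h))]
        return [math.tanh(p) for p in pre]


class GatedRNNMixture:
    """Hypothetical mixture: every expert updates its own state each step,
    and a linear gate (assumed, input-only) mixes their hidden states."""

    def __init__(self, n_experts, d_in, d_hid, seed=0):
        rng = random.Random(seed)
        self.experts = [RNNExpert(d_in, d_hid, rng) for _ in range(n_experts)]
        # One gating score per expert, computed from the current input.
        self.w_gate = [[rng.gauss(0, 0.5) for _ in range(d_in)] for _ in range(n_experts)]
        self.d_hid = d_hid

    def run(self, xs):
        hs = [[0.0] * self.d_hid for _ in self.experts]
        outputs, gate_log = [], []
        for x in xs:
            hs = [e.step(x, h) for e, h in zip(self.experts, hs)]
            g = softmax(matvec(self.w_gate, x))
            # Output is a convex combination of the experts' hidden states.
            y = [sum(g[k] * hs[k][j] for k in range(len(hs))) for j in range(self.d_hid)]
            outputs.append(y)
            gate_log.append(g)
        return outputs, gate_log


# Usage: 3 experts, 2-dim inputs, 4-dim hidden states, a 3-step sequence.
model = GatedRNNMixture(n_experts=3, d_in=2, d_hid=4)
ys, gates = model.run([[1.0, 0.0], [0.0, 1.0], [0.5, -0.5]])
```

Soft gating keeps the sketch short and differentiable. A sparse top-k router, as in many mixture-of-experts models, would instead update or read only a few experts per token; which variant the paper uses isn't stated here.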

Luigi Freda

tl;dr: Python implementation of a Visual SLAM pipeline, supports monocular, stereo and RGBD cameras

github.com/luigifreda/p...

arxiv.org/abs/2502.11955

Zelin Zhou, Saurav Uprety, Shichuang Nie, Hongzhou Yang

tl;dr: GICI-LIB+3DGS

arxiv.org/abs/2502.10975

Dongki Jung, Jaehoon Choi, Yonghan Lee, Dinesh Manocha

tl;dr: spherical camera model->SfM; DebSDF; classical texture mapping->differentiable rendering->neural texture fine-tuning

arxiv.org/abs/2502.12545

Test your state-of-the-art models on:

🔹 Novel View Synthesis 📸➡️🖼️

🔹 3D Semantic & Instance Segmentation 🤖🔍🕶️

Shoutout to @awhiteguitar.bsky.social & Yueh-Cheng Liu for their incredible work👏

🚀Check it out: kaldir.vc.in.tum.de/scannetpp/

epoch.ai/gradient-upd...

Anyway, someone noticing that DeepSeek refuses to answer *anything* about Xi Jinping, even whether he exists at all, prompted me to write a short snippet on safety fine-tuning: lb.eyer.be/s/safety-sft...

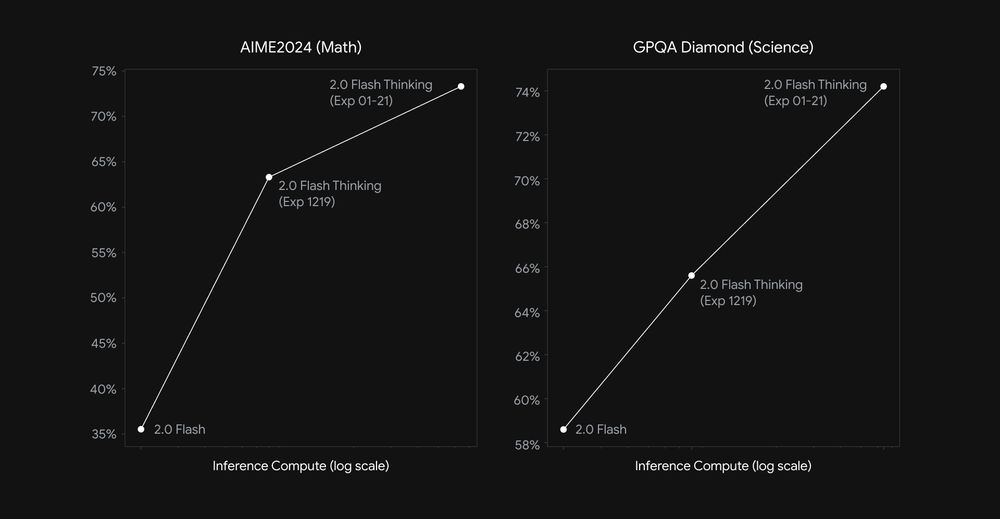

Today we’re sharing an experimental update w/improved performance on math, science, and multimodal reasoning benchmarks 📈:

• AIME: 73.3%

• GPQA: 74.2%

• MMMU: 75.4%

Which one of these men joins Tom Anderson and MySpace in the annals of history first?

Their greed is our opportunity.

1/5