🎤 “Adaptive Units of Computation: Towards Sublinear-Memory and Tokenizer-Free Foundation Models”

Fascinating glimpse into the next gen of foundation models.

#FoundationModels #NLP #TokenizerFree #ADSAI2025

This unlocks *inference-time hyper-scaling*

For the same runtime or memory load, we can boost LLM accuracy by pushing reasoning even further!

Read more 👇

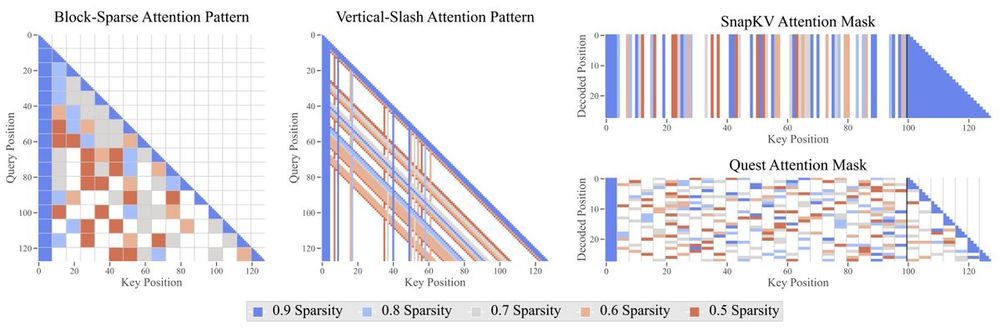

We performed the most comprehensive study on training-free sparse attention to date.

Here is what we found:

🎟️ Tickets for Advances in Data Science & AI Conference 2025 are live!

🔗 Secure your spot: tinyurl.com/yurknk7y

#AI

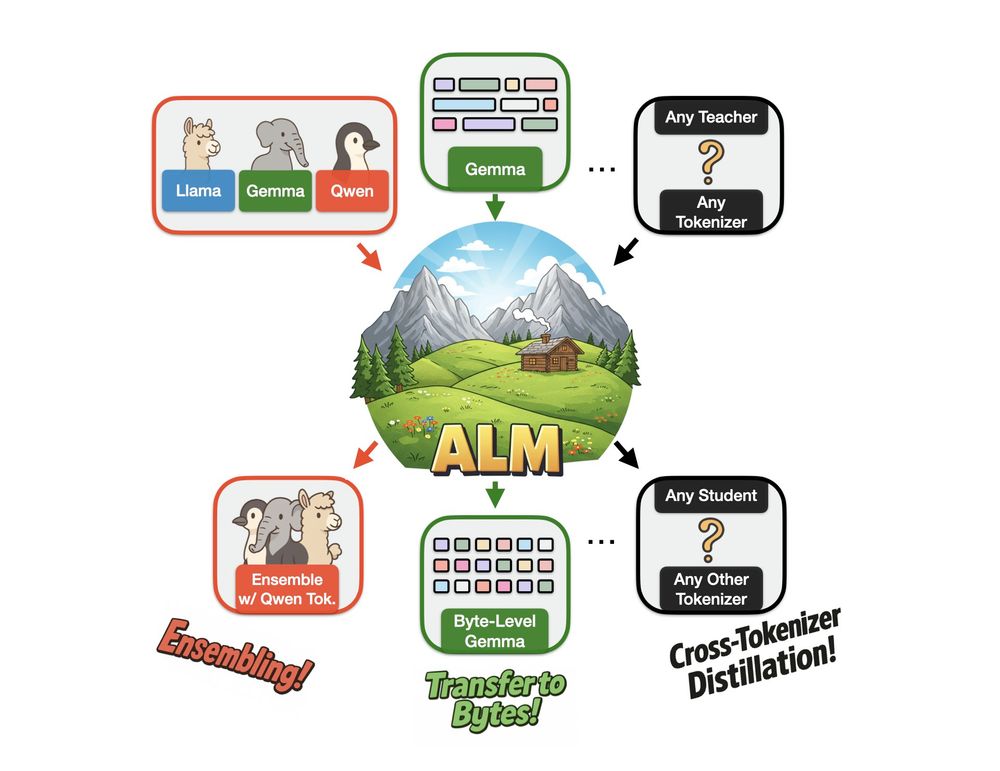

With ALM, you can create ensembles of models from different families, convert existing subword-level models to byte-level, and a bunch more 🧵

@edinburgh-uni.bsky.social!

Eligibility: UK home fee status

Starting date: flexible, from July 2025 onwards.

informatics.ed.ac.uk/study-with-u...

Please contact me if you're interested!

Stay tuned for architectures with even more efficient inference.

developer.nvidia.com/blog/dynamic...

Deadline: 31 Jan 2025

Call for applications: elxw.fa.em3.oraclecloud.com/hcmUI/Candid...

Traditionally, linguists posit functions to compare forms in different languages; however, these are aprioristic and partly arbitrary.

Instead, we resort to perceptual modalities (like vision) as measurable proxies for function.

Language is not just a formal system—it connects words to the world. But how do we measure this connection in a cross-linguistic, quantitative way?

🧵 Using multimodal models, we introduce a new approach: groundedness ⬇️

Two amazing papers from my students at #NeurIPS today:

⛓️💥 Switch the vocabulary and embeddings of your LLM tokenizer zero-shot on the fly (@bminixhofer.bsky.social)

neurips.cc/virtual/2024...

🌊 Align your LLM gradient-free with spectral editing of activations (Yifu Qiu)

neurips.cc/virtual/2024...

Piotr Nawrot!

A repo & notebook on sparse attention for efficient LLM inference: github.com/PiotrNawrot/...

This will also feature in my #NeurIPS 2024 tutorial "Dynamic Sparsity in ML" with André Martins: dynamic-sparsity.github.io

Stay tuned!

👉 github.com/sordonia/pg_mb…

Part of the "Dynamic Sparsity in ML" tutorial at #NeurIPS2024. Feedback is welcome, and join us for the discussions! 😊

Deadline: November 25

www.ed.ac.uk/studying/pos...

If you are passionate about:

- adaptive tokenization and memory in foundation models

- modular deep learning

- computational typology

please message me or meet me at #NeurIPS2024!
