This allows LLMs to preserve information while reducing latency and memory size.

Enter Dynamic Memory Sparsification (DMS), which achieves 8x KV cache compression with 1K training steps and retains accuracy better than SOTA methods.

This unlocks *inference-time hyper-scaling*:

For the same runtime or memory load, we can boost LLM accuracy by pushing reasoning even further!
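The memory side of this claim can be made concrete with a back-of-the-envelope calculation of how many tokens fit in a fixed KV cache budget, with and without 8x compression. This is a minimal sketch under stated assumptions. The model shape (32 layers, 8 KV heads, head dimension 128, fp16 storage), the 16 GiB budget, and the helper names are all hypothetical. The sketch also treats 8x as a uniform average ratio. It says nothing about how DMS decides which cache entries to keep.

```python
def kv_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_elem=2):
    """Uncompressed KV cache bytes for one token (hypothetical helper).

    The leading factor 2 accounts for storing one key vector and one value
    vector per KV head in every layer. bytes_per_elem=2 assumes fp16/bf16.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem


def tokens_in_budget(budget_bytes, per_token_bytes, compression=1):
    """Tokens that fit in a memory budget (hypothetical helper).

    Assumes compression acts as a uniform average ratio, so each token
    occupies per_token_bytes / compression bytes on average.
    """
    return int(budget_bytes * compression // per_token_bytes)


if __name__ == "__main__":
    # Hypothetical model shape and memory budget, chosen for illustration only.
    per_tok = kv_bytes_per_token(num_layers=32, num_kv_heads=8, head_dim=128)
    budget = 16 * 2**30  # 16 GiB reserved for the KV cache

    print(per_tok)                                          # 131072
    print(tokens_in_budget(budget, per_tok))                # 131072
    print(tokens_in_budget(budget, per_tok, compression=8)) # 1048576
```

Under these assumptions, the same 16 GiB budget holds 8x more cached tokens (1,048,576 instead of 131,072). That headroom is what could be spent on longer reasoning traces or more parallel samples for the same memory load.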

Our insights demonstrate that sparse attention will play a key role in next-generation foundation models.

Our insights demonstrate that sparse attention will play a key role in next-generation foundation models.

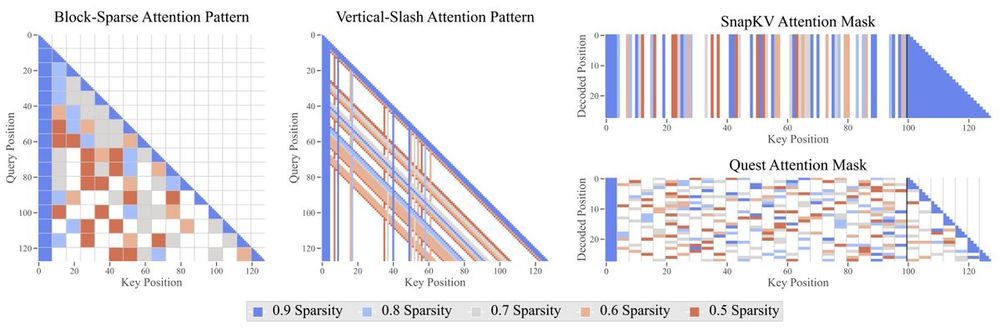

However, on average Verticals-Slashes for prefilling and Quest for decoding are the most competitive. Context-aware, and highly adaptive variants are preferable.

However, on average Verticals-Slashes for prefilling and Quest for decoding are the most competitive. Context-aware, and highly adaptive variants are preferable.

Importantly, for most settings there is at least one degraded task, even at moderate compressions (<5x).

Importantly, for most settings there is at least one degraded task, even at moderate compressions (<5x).

This suggests a strategy shift where scaling up model size must be combined with sparse attention to achieve an optimal trade-off.

This suggests a strategy shift where scaling up model size must be combined with sparse attention to achieve an optimal trade-off.

We performed the most comprehensive study on training-free sparse attention to date.

Here is what we found:

We performed the most comprehensive study on training-free sparse attention to date.

Here is what we found:

Deadline: 31 Jan 2025

Call for applications: elxw.fa.em3.oraclecloud.com/hcmUI/Candid...

Piotr Nawrot!

A repo & notebook on sparse attention for efficient LLM inference: github.com/PiotrNawrot/...

This will also feature in my #NeurIPS 2024 tutorial "Dynamic Sparsity in ML" with André Martins (dynamic-sparsity.github.io). Stay tuned!