Kaiser Lab

@dkaiserlab.bsky.social

Studying natural vision at JLU Giessen using psychophysics, neuroimaging, and computational models. PI: Daniel Kaiser.

danielkaiser.net

Pinned

Kaiser Lab

@dkaiserlab.bsky.social

· Dec 16

Hello Bluesky! This is the Kaiser Lab.

We are cognitive neuroscientists studying the neural computations that support real-world vision using fMRI, EEG, and TMS. Our main research areas include individual differences, categorization, and aesthetics.

For more information: danielkaiser.net

Reposted by Kaiser Lab

Decoding the rhythmic representation and communication of visual contents

www.cell.com/trends/neuro...

#neuroscience

Decoding the rhythmic representation and communication of visual contents

Rhythmic neural activity is considered essential for adaptively modulating responses in the visual system. In this opinion article we posit that visual brain rhythms also serve a key function in the r...

www.cell.com

October 30, 2025 at 11:59 AM

From Micha’s farewell gathering before his temporary leave 💫 - he’ll still be missed!

October 22, 2025 at 12:24 PM

A few snapshots from this year’s Kaiser Lab retreat 🌿🧠✨

October 14, 2025 at 10:47 AM

By utilizing the visual backward masking paradigm, this study aimed to disentangle the contributions of feedforward and recurrent processing, revealing that recurrent processing significantly shapes the object representations across the ventral visual stream.

journals.plos.org/plosbiology/...

Recurrence affects the geometry of visual representations across the ventral visual stream in the human brain

The specific roles of feedforward and recurrent processing in human visual object recognition remain incompletely understood. In this neuroimaging and computational modelling study the authors isolate...

journals.plos.org

October 10, 2025 at 2:36 PM

Reposted by Kaiser Lab

How can we characterize the contents of our internal models of the world? We highlight participant-driven approaches, from drawings to descriptions, to study how we expect scenes to look! 🤩

From line drawings to scene perception — our new review argues for moving beyond experimenter-driven manipulations toward participant-driven approaches to reveal what’s in our internal models of the visual world. 👁️✍️🛋

royalsocietypublishing.org/doi/10.1098/...

Characterizing internal models of the visual environment | Proceedings of the Royal Society B: Biological Sciences

Despite the complexity of real-world environments, natural vision is seamlessly efficient. To explain this efficiency, researchers often use predictive processing frameworks, in which perceptual effic...

royalsocietypublishing.org

October 8, 2025 at 9:27 AM

Reposted by Kaiser Lab

In this paper we present flexible methods for participants to express their expectations about natural scenes.

From line drawings to scene perception — our new review argues for moving beyond experimenter-driven manipulations toward participant-driven approaches to reveal what’s in our internal models of the visual world. 👁️✍️🛋

royalsocietypublishing.org/doi/10.1098/...

Characterizing internal models of the visual environment | Proceedings of the Royal Society B: Biological Sciences

Despite the complexity of real-world environments, natural vision is seamlessly efficient. To explain this efficiency, researchers often use predictive processing frameworks, in which perceptual effic...

royalsocietypublishing.org

October 8, 2025 at 9:13 AM

From line drawings to scene perception — our new review argues for moving beyond experimenter-driven manipulations toward participant-driven approaches to reveal what’s in our internal models of the visual world. 👁️✍️🛋

royalsocietypublishing.org/doi/10.1098/...

Characterizing internal models of the visual environment | Proceedings of the Royal Society B: Biological Sciences

Despite the complexity of real-world environments, natural vision is seamlessly efficient. To explain this efficiency, researchers often use predictive processing frameworks, in which perceptual effic...

royalsocietypublishing.org

October 8, 2025 at 9:07 AM

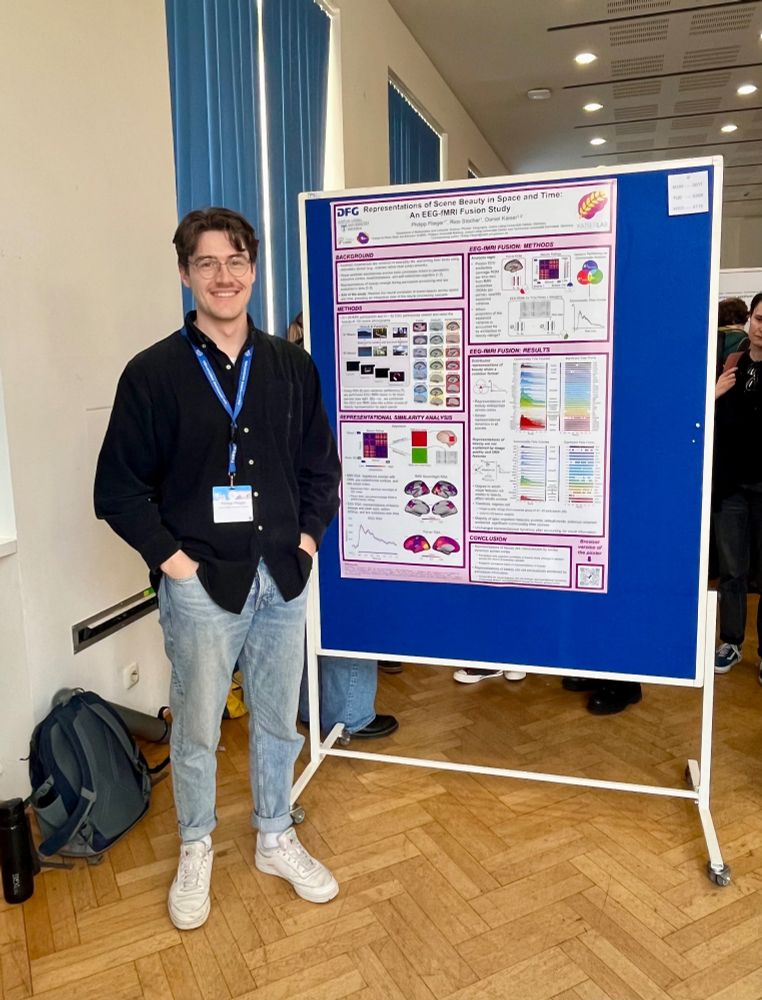

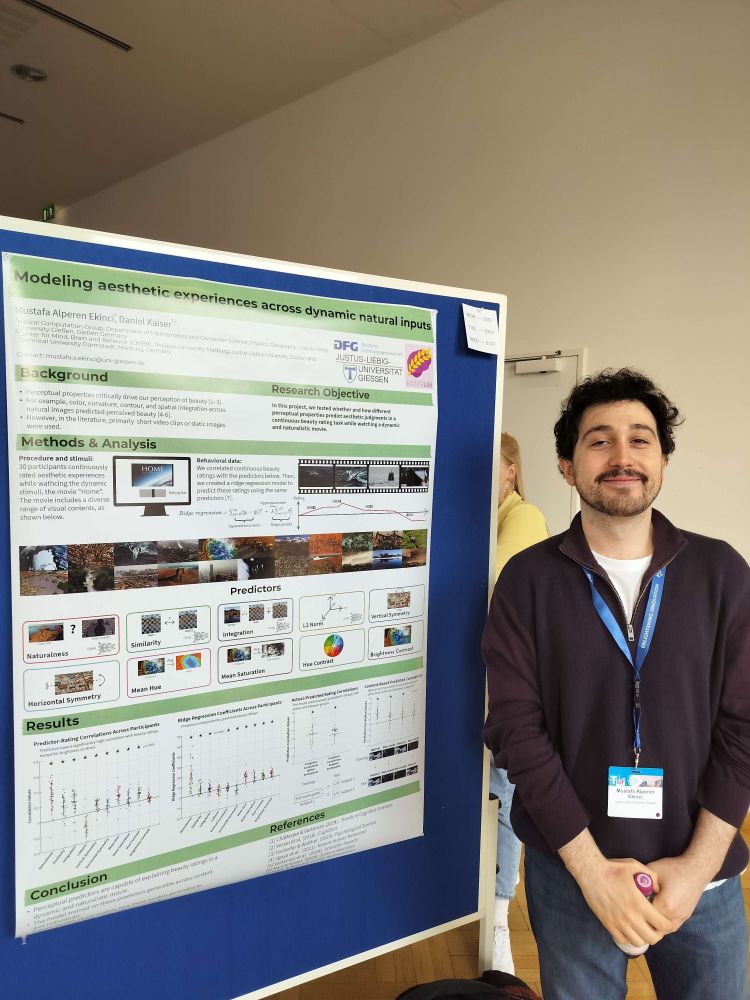

Kaiser Lab is at ECVP this year! Come check out our studies 😎

August 25, 2025 at 8:24 AM

Go Lu!! Congratulations 💜

Honored and thrilled to receive the GGN Early Career Award @jlugiessen.bsky.social 🏆 Huge thanks to my awesome supervisors Daniel @dkaiserlab.bsky.social & Marius @peelen.bsky.social , all my collaborators, and the amazing colleagues from both labs — couldn’t have done it without you! 💗

June 26, 2025 at 6:35 PM

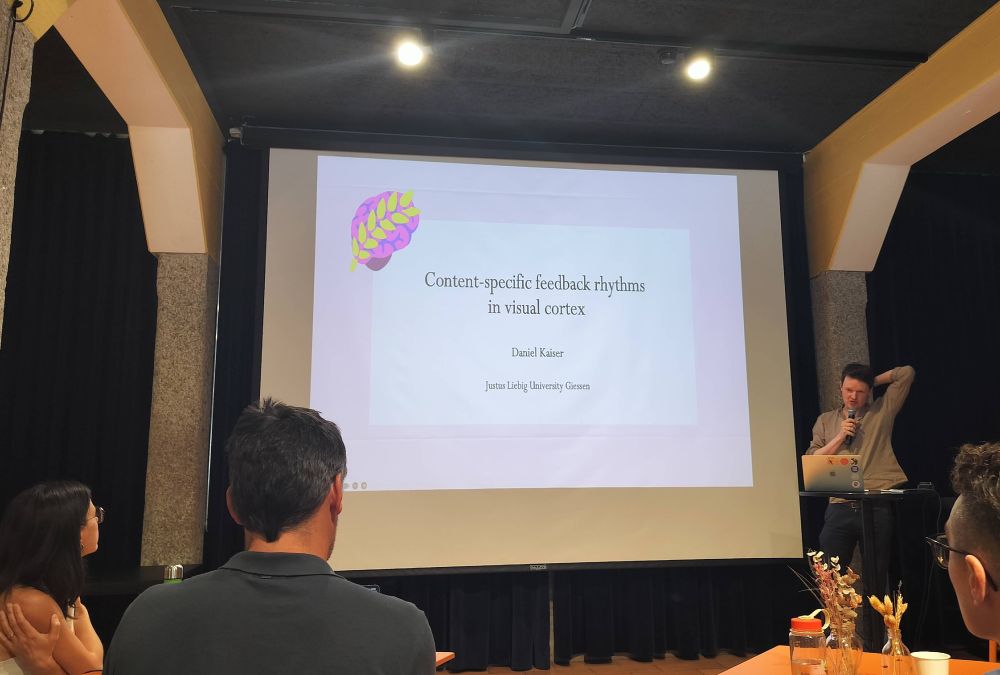

We had an awesome time discussing the role of feedback in perception @unil.bsky.social 🧠 and exploring the beautiful city of Lausanne! 💜🏞️ Thanks to @icepfl.bsky.social @davidpascucci.bsky.social

June 26, 2025 at 6:34 PM

All-topographic ANNs! Now out in @nathumbehav.nature.com , led by @zejinlu.bsky.social , in collaboration with @timkietzmann.bsky.social.

See below for a summary 👇

Now out in Nature Human Behaviour @nathumbehav.nature.com : “End-to-end topographic networks as models of cortical map formation and human visual behaviour”. Please check our NHB link: www.nature.com/articles/s41...

June 6, 2025 at 1:09 PM

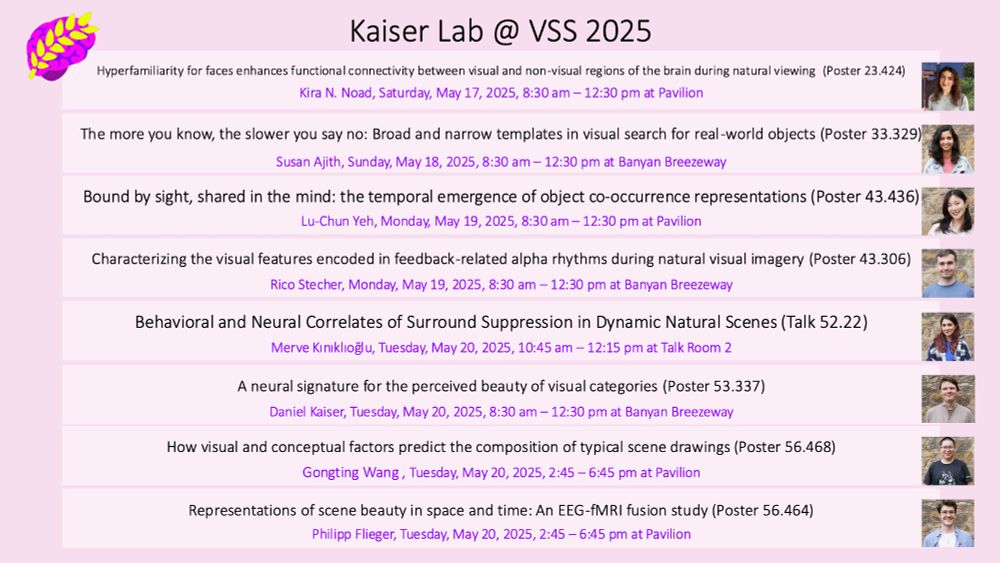

Don’t miss out again! If you are interested in our studies presented at @VSSMtg , you can find our posters here: 👇

drive.google.com/drive/mobile...

May 28, 2025 at 8:48 AM

Kaiser Lab goes #Exzellenz! The excellence cluster „The Adaptive Mind“ got funding for the next 7 years! Proud to be part of this awesome team and looking forward to all the exciting research to come. 🎆✨🥂

May 23, 2025 at 11:44 AM

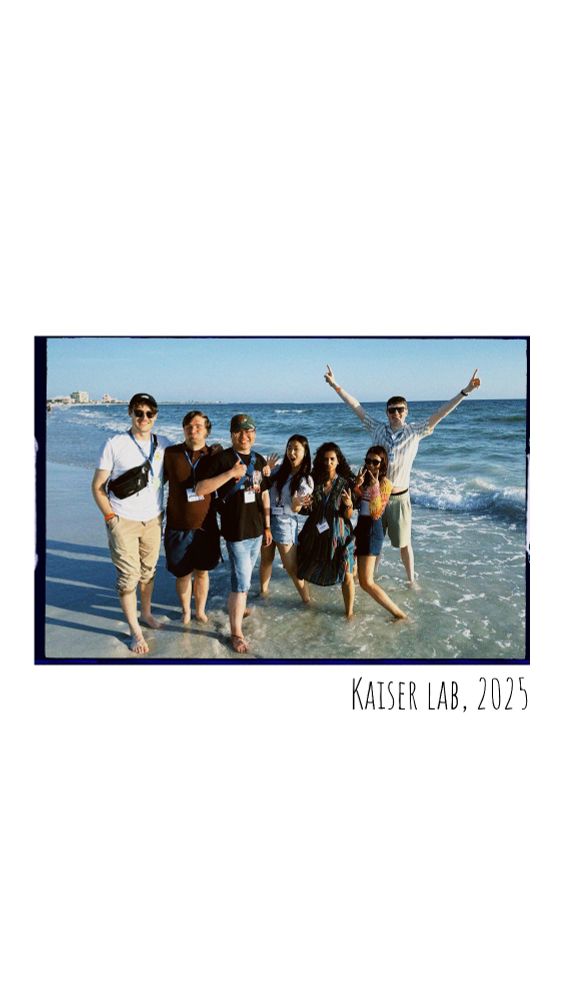

#VSS2025 was a blast - great science, fun people, beach vibes. We’ll be back! @vssmtg.bsky.social

May 22, 2025 at 3:37 PM

Reposted by Kaiser Lab

How fast do we extract distance cues from scenes 🛤️🏞️🏜️ and integrate them with retinal size 🧸🐻 to infer real object size? Our new EEG study in Cortex has answers! w/ @dkaiserlab.bsky.social @suryagayet.bsky.social @peelen.bsky.social

Check it out: www.sciencedirect.com/science/arti...

The neural time course of size constancy in natural scenes

Accurate real-world size perception relies on size constancy, a mechanism that integrates an object's retinal size with distance information. The neur…

www.sciencedirect.com

May 17, 2025 at 12:21 PM

We are at @vssmtg.bsky.social 2025. 🏖️☀️🍹 Come check out our studies!

May 15, 2025 at 9:04 AM

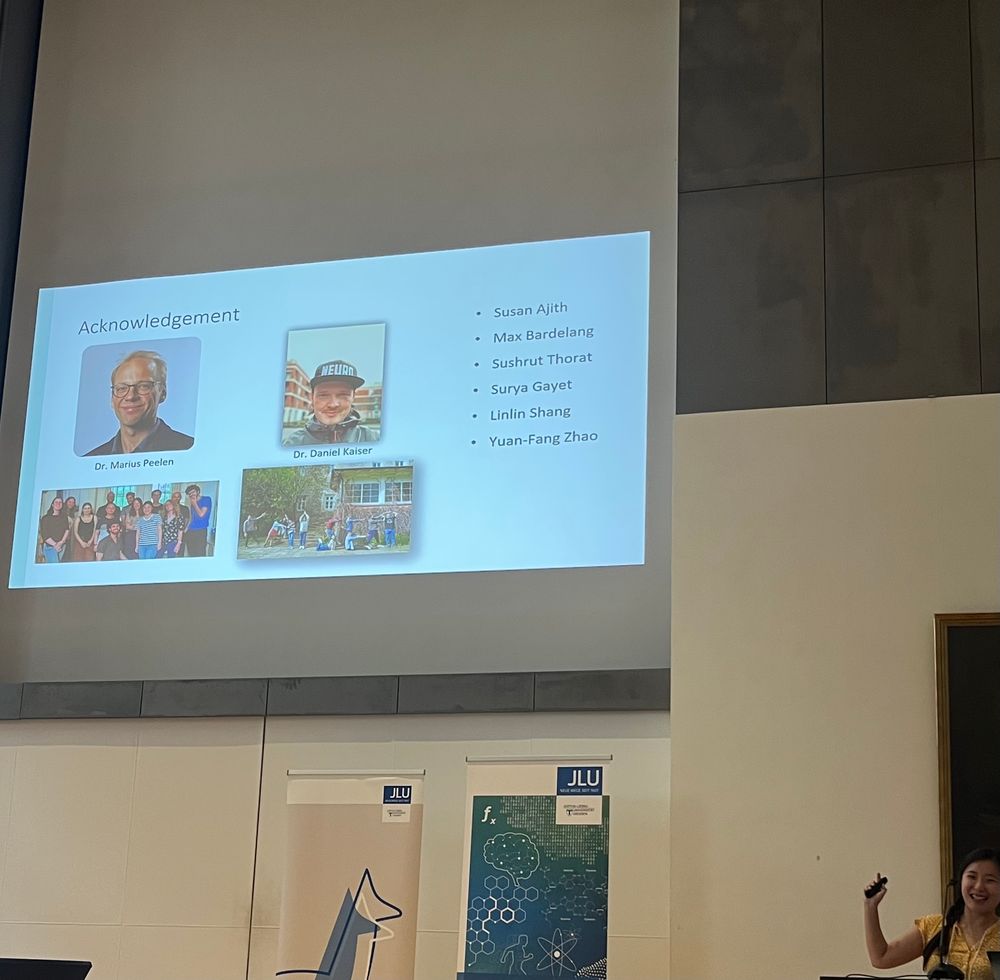

Great talk on Beauty and the Brain at CMBB Day in Marburg! 🧠👁️

May 11, 2025 at 11:30 AM

Reposted by Kaiser Lab

Time to celebrate a new ANR-DFG funded project in collaboration with the stellar @dkaiserlab.bsky.social

Stay totally tuned!!! 😎

May 10, 2025 at 7:27 AM

Reposted by Kaiser Lab

Glad to share that our EEG study is now out in JNP. 🎉 We showed that alpha rhythms automatically interpolate occluded motion based on the surrounding contextual cues! 🚶♂️🏞️

@dkaiserlab.bsky.social

journals.physiology.org/doi/full/10....

Cortical alpha rhythms interpolate occluded motion from natural scene context | Journal of Neurophysiology | American Physiological Society

Tracking objects as they dynamically move in and out of sight is critical for parsing our everchanging real-world surroundings. Here, we explored how the interpolation of occluded object motion in nat...

journals.physiology.org

May 7, 2025 at 6:57 AM

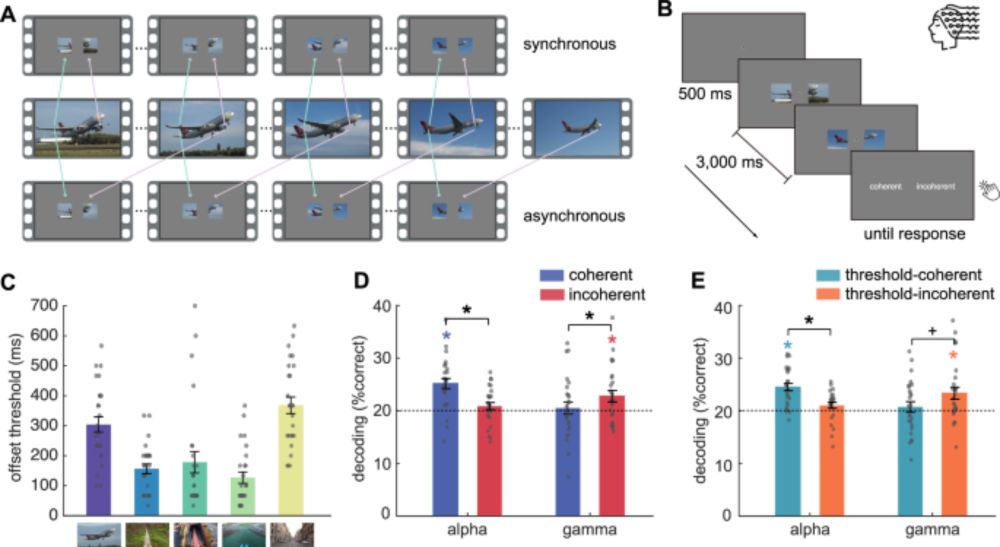

Reposted by Kaiser Lab

Representational shifts from bottom-up gamma to top-down alpha dynamics drive visual integration, highlighting the crucial role of cortical feedback in the construction of seamless perceptual experiences.

@lixiangchen.bsky.social @dkaiserlab.bsky.social

doi.org/10.1038/s42003-025-08011-0

Representational shifts from feedforward to feedback rhythms index phenomenological integration in naturalistic vision - Communications Biology

Representational shifts from bottom-up gamma to top-down alpha dynamics drive visual integration, highlighting the crucial role of cortical feedback in the construction of seamless perceptual experien...

doi.org

April 14, 2025 at 2:42 PM

Now out in @royalsocietypublishing.org Proceedings B! Check out Gongting Wang's EEG work on individual differences in scene perception: Scenes that are more typical for individual observers are represented in an enhanced yet more idiosyncratic way. Link:

royalsocietypublishing.org/doi/10.1098/...

Enhanced and idiosyncratic neural representations of personally typical scenes | Proceedings of the Royal Society B: Biological Sciences

Previous research shows that the typicality of visual scenes (i.e. if they are good examples of a category) determines how easily they can be perceived and represented in the brain. However, the uniqu...

royalsocietypublishing.org

March 26, 2025 at 11:43 AM

Reposted by Kaiser Lab

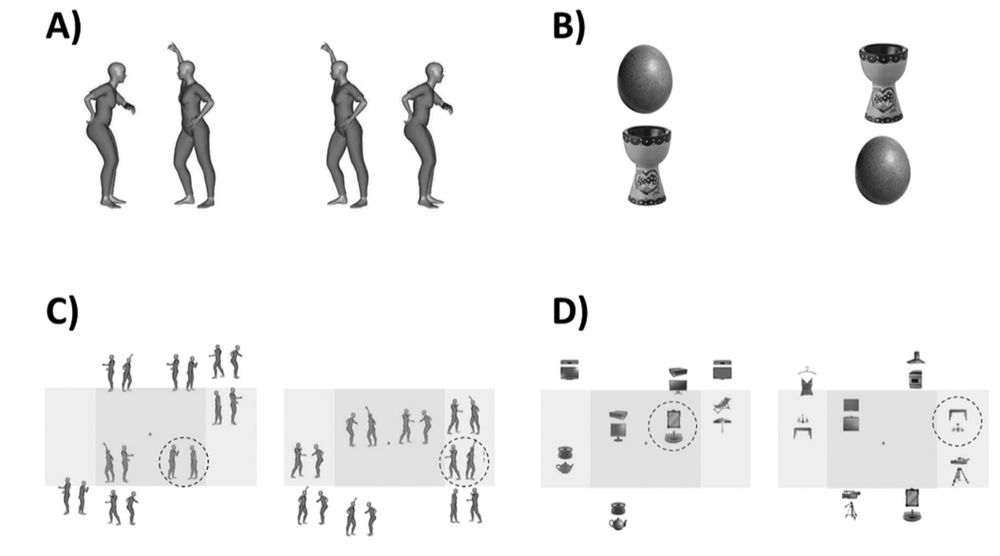

We search for an object in an array just like we search for a person in a crowd. Or no? Here the performance in visual search suggests distinct algorithms or implementation in social vs. non-social scene perception. With N. Goupil & @dkaiserlab.bsky.social psycnet.apa.org/record/2025-...

March 11, 2025 at 7:53 AM

TeaP 2025 - It was a pleasure! 🤓😎

March 16, 2025 at 11:23 AM

Hey hey! We’re on the cover of @cp-trendsneuro.bsky.social.

Check out our article on rhythmic representations in the visual system below. 👁️🧠🌊

March 2025 issue of TINS:

www.cell.com/issue/S0166-...

Free featured articles & more:

cell.com/trends/neuro...

Cover article: ‘Decoding the rhythmic representation and communication of visual contents’, by Rico Stecher & colleagues @dkaiserlab.bsky.social

www.cell.com/trends/neuro...

March 13, 2025 at 10:29 AM

Reposted by Kaiser Lab

We make about 3-4 fast eye movements a second, yet our world appears stable. How is this possible? In a preprint led by @lucakaemmer.bsky.social we test the intriguing idea that anticipatory signals in the fovea may explain visual stability.

www.biorxiv.org/content/10.1...

ALT: a woman in a white shirt is standing in front of a refrigerator in a store .

media.tenor.com

March 12, 2025 at 10:18 PM
