huggingface.co/datasets/coral-nlp/german-commons

Big thanks to my wonderful co-authors: @deeliu97.bsky.social, Niyati, @computermacgyver.bsky.social, Sam, Victor, and @paul-rottger.bsky.social!

Thread 👇 and data available at huggingface.co/datasets/man...

Our new axioms are integrated with ir_axioms: github.com/webis-de/ir_...

Nice to see axiomatic IR gaining momentum.

📄 webis.de/publications...

The corpus and baselines (with run files) are now available and easily accessible via the ir_datasets API and the HuggingFace Datasets API.

More details are available at: trec-tot.github.io/guidelines

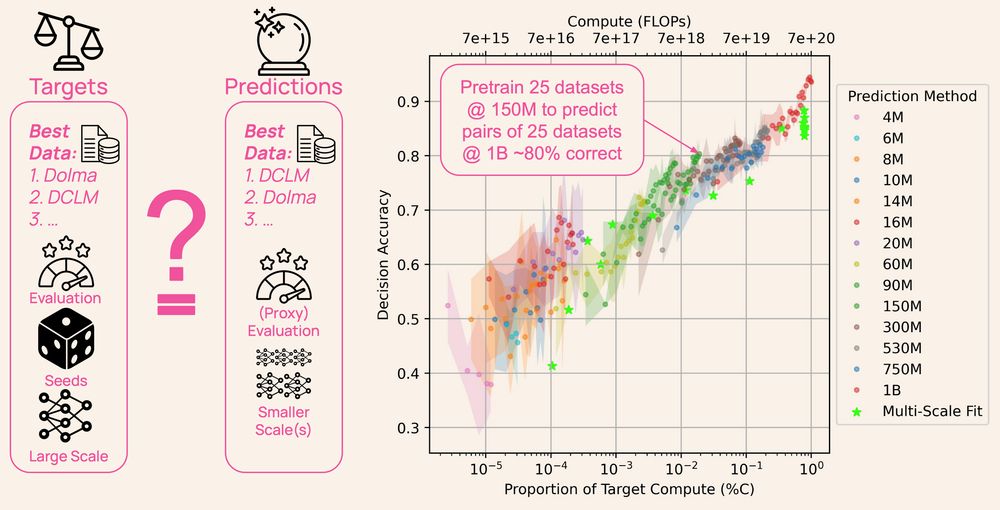

DataDecide opens up the process: 1,050 models, 30k checkpoints, 25 datasets & 10 benchmarks 🧵

Abstracts due March 22 AoE (+48hr)

Full papers due March 28 AoE (+24hr)

Plz RT 🙏

Introducing PokéChamp, our minimax LLM agent that reaches top 30%-10% human-level Elo on Pokémon Showdown!

New paper on arXiv and code on GitHub!

TESS 2 is an instruction-tuned diffusion LM that can perform close to AR counterparts for general QA tasks, trained by adapting from an existing pretrained AR model.

📜 Paper: arxiv.org/abs/2502.13917

🤖 Demo: huggingface.co/spaces/hamis...

More below ⬇️

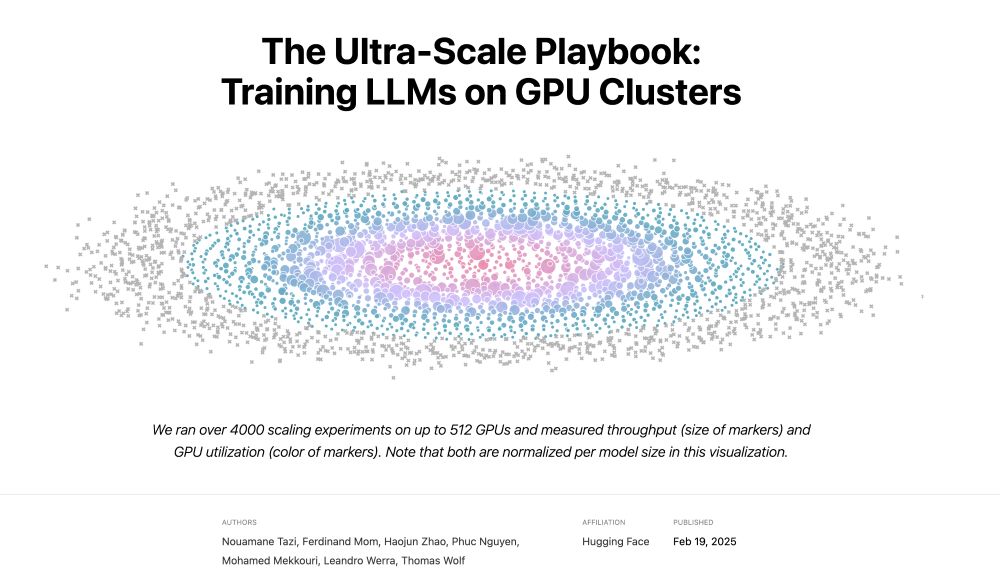

A book to learn all about 5D parallelism, ZeRO, CUDA kernels, and how/why to overlap compute & comms, with theory, motivation, interactive plots and 4000+ experiments!

@aclmeeting.bsky.social #ACL2025 #ACL2025nlp #NLP

We need your support for a web survey in which we investigate how recent advancements in NLP, particularly LLMs, have influenced the need for labeled data in supervised machine learning.

#NLP #NLProc #ML #AI

#ACL2025 in Vienna, Austria. More information will be available on the website argmining-org.github.io

#Argmining2025

So people bullied him & posted death threats.

He took it down.

Nice one, folks.

With Small Language Models on the rise, the new version of small-text has been long overdue! Despite the generative AI hype, many real-world tasks still rely on supervised learning, which in turn depends on labeled data.

#activelearning #nlproc #nlp #llms

At @huggingface.bsky.social we'll launch a huge community sprint soon to build high-quality training datasets for many languages.

We're looking for Language Leads to help with outreach.

Find your language and nominate yourself:

forms.gle/iAJVauUQ3FN8...
