#MedSky #MLSky

Excited to share my work on trustworthy and collaborative AI and connect with amazing peers and mentors.

🔗 👇

Our new paper introduces the Linear Representation Transferability Hypothesis. We find that the internal representations of different-sized models can be translated into one another using a simple linear (affine) map.
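
A minimal sketch of what "translating representations with an affine map" can look like. It assumes we already have paired hidden states for the same inputs (rows of `src` from one model, rows of `tgt` from another) and fits `tgt ≈ src @ W + b` by ordinary least squares. The helper names and toy data are hypothetical, not the paper's code; plain Python is used only to keep the sketch dependency-free.

```python
"""Hypothetical sketch: fit an affine map tgt ~ src @ W + b by least squares."""
from typing import List, Tuple

Matrix = List[List[float]]


def solve(a: Matrix, b: Matrix) -> Matrix:
    """Solve a @ x = b with Gauss-Jordan elimination and partial pivoting."""
    n, m = len(a), len(b[0])
    aug = [list(a[i]) + list(b[i]) for i in range(n)]  # augmented [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: source vectors are not full rank")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]  # scale pivot row to 1
        for r in range(n):
            if r != col:  # eliminate this column from every other row
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:n + m] for row in aug]


def fit_affine(x: Matrix, y: Matrix) -> Tuple[Matrix, List[float]]:
    """Return (W, b) minimizing the sum of ||x_i W + b - y_i||^2."""
    xa = [row + [1.0] for row in x]  # constant column so b is learned as a row of W
    d, out = len(xa[0]), len(y[0])
    # Normal equations: (Xa^T Xa) W_aug = Xa^T Y
    xtx = [[sum(r[i] * r[j] for r in xa) for j in range(d)] for i in range(d)]
    xty = [[sum(r[i] * yr[k] for r, yr in zip(xa, y)) for k in range(out)]
           for i in range(d)]
    w_aug = solve(xtx, xty)
    return w_aug[:-1], w_aug[-1]


def apply_affine(x: Matrix, w: Matrix, b: List[float]) -> Matrix:
    """Map each source vector into the target space: x_i W + b."""
    return [[sum(xi[j] * w[j][k] for j in range(len(w))) + b[k]
             for k in range(len(b))] for xi in x]


# Toy demo: build 3-d "target" states from 2-d "source" states with a known map,
# then check that the fitted map recovers it.
src = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, -1.0], [0.5, 3.0]]
true_w = [[1.0, 2.0, -1.0], [0.5, 0.0, 3.0]]
true_b = [0.1, -0.2, 0.3]
tgt = apply_affine(src, true_w, true_b)
w_hat, b_hat = fit_affine(src, tgt)
pred = apply_affine(src, w_hat, b_hat)
max_err = max(abs(p - t) for pr, tr in zip(pred, tgt) for p, t in zip(pr, tr))
print(f"max reconstruction error: {max_err:.2e}")
```

With real hidden states one would fit on held-out pairs and measure how well the mapped representations preserve downstream behavior; the toy data here is noise-free, so the fit is exact up to floating-point rounding.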

UT McCombs news featured our iConference paper by Soumyajit Gupta on optimizing the fairness-accuracy tradeoff in toxicity detection. In collaboration with Venelin Kovatchev @mariadearteaga.bsky.social @mattlease.bsky.social

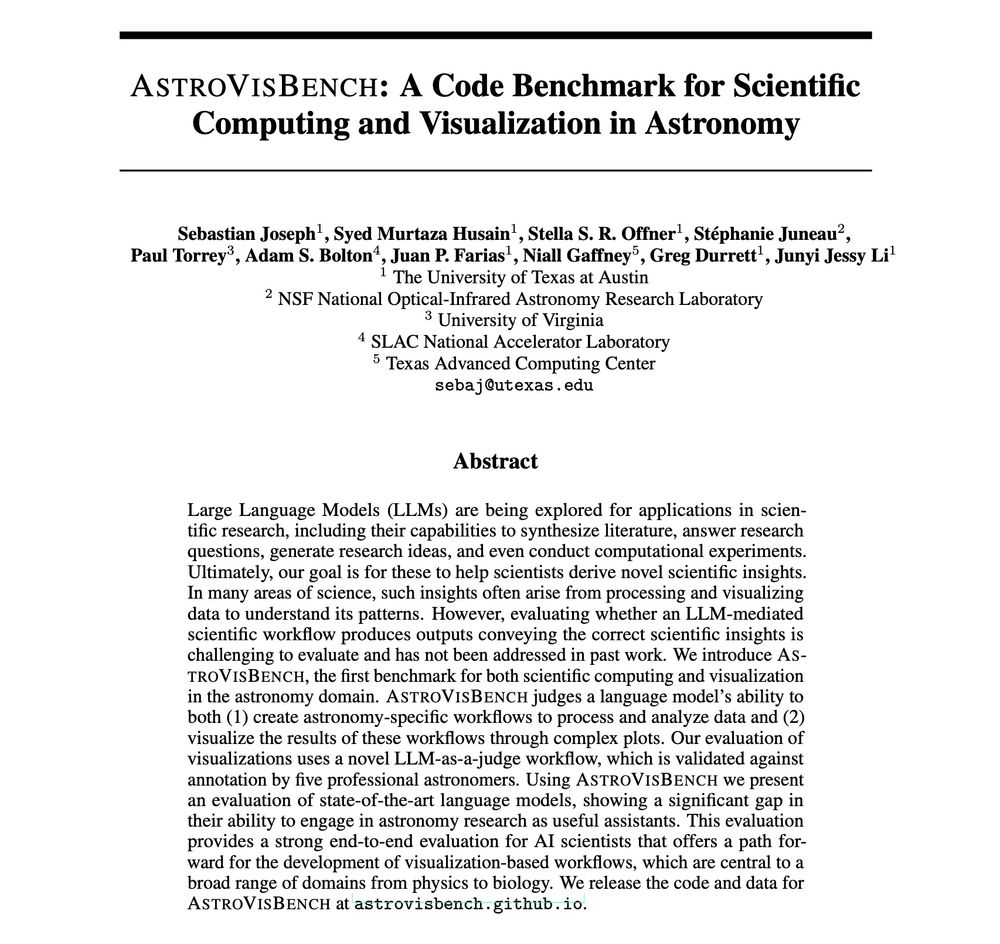

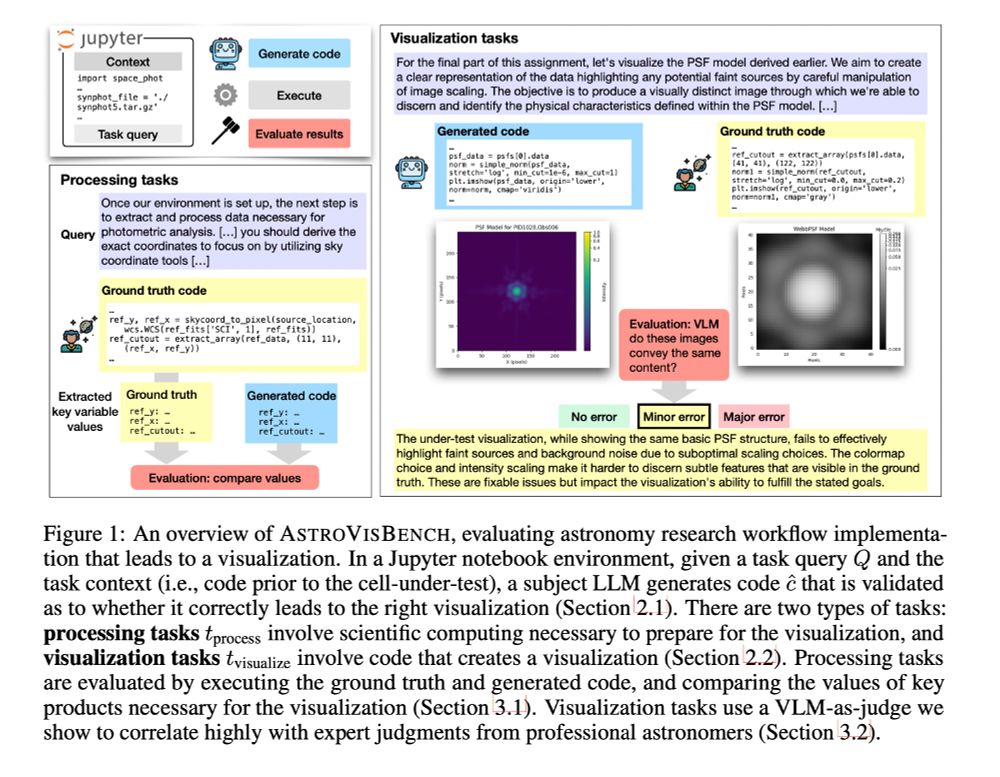

AstroVisBench tests how well LLMs implement scientific workflows in astronomy and visualize results.

SOTA models like Gemini 2.5 Pro & Claude 4 Opus only match ground truth scientific utility 16% of the time. 🧵

Amazon AGI at @naaclmeeting.bsky.social tomorrow at 2:00 pm local time. Please come say hi if you are around.

(more examples below)

Generative Adversarial Nets

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio

Sequence to Sequence Learning with Neural Networks

Ilya Sutskever, Oriol Vinyals, Quoc V. Le

go.bsky.app/QLQznZg

go.bsky.app/75g9JLT

I built a new algorithmic ranked feed based on my starter pack, using skyfeed.app.

I'm probably going to tweak mine a bit. If you want a ranked feed of the best posts from a selected list of followers, here is how you can do it. 1/8🧵

🧪 🩺🖥️ 🛟 #AcademicSky
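
As a rough, hypothetical illustration of what such a feed computes: keep only posts from a chosen set of accounts, give each a score, and sort best-first. The `Post` type, the likes-plus-weighted-reposts score with a 24-hour half-life, and the example handles are all assumptions for this sketch; they are not skyfeed.app's ranking algorithm or API.

```python
"""Hypothetical ranked-feed sketch: filter to selected accounts, score, sort."""
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass
class Post:
    author: str        # handle of the poster (hypothetical)
    text: str
    likes: int
    reposts: int
    age_hours: float   # hours since the post was created


def score(post: Post, repost_weight: float = 2.0, half_life_hours: float = 24.0) -> float:
    """Assumed engagement score that halves every `half_life_hours`."""
    engagement = post.likes + repost_weight * post.reposts
    return engagement * 0.5 ** (post.age_hours / half_life_hours)


def ranked_feed(posts: Iterable[Post], allowed: Set[str], limit: int = 50) -> List[Post]:
    """Keep posts from the selected accounts and return the top `limit` by score."""
    selected = [p for p in posts if p.author in allowed]
    return sorted(selected, key=score, reverse=True)[:limit]


posts = [
    Post("alice.example", "new paper!", likes=40, reposts=10, age_hours=2.0),
    Post("bob.example", "older thread", likes=100, reposts=5, age_hours=72.0),
    Post("carol.example", "not on the list", likes=500, reposts=50, age_hours=1.0),
]
feed = ranked_feed(posts, allowed={"alice.example", "bob.example"})
for p in feed:
    print(p.author, round(score(p), 2))
```

The time decay is what lets a fresh post with modest engagement outrank an older, more-liked one; changing `half_life_hours` or `repost_weight` trades recency against popularity.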

Learn more & apply: t.co/OPrxO3yMhf

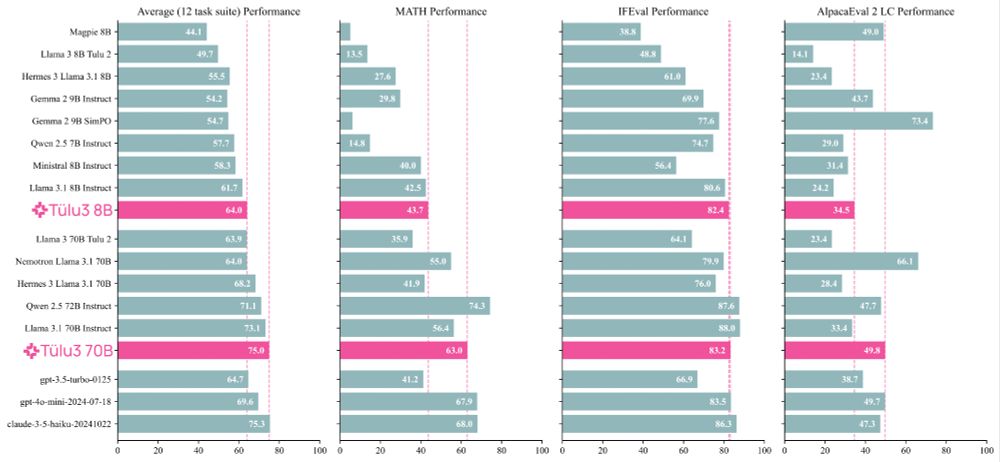

We invented new methods for fine-tuning language models with RL and built upon best practices to scale synthetic instruction and preference data.

Demo, GitHub, paper, and models 👇

Turns out, your AI background defines how you see explanations, and it's riskier than you think.

Our #CHI2024 paper on "The Who in XAI" explains why & how.

Findings at a glance ⤵️

#academicSky

🧵1/n

arxiv.org/abs/2411.10109

juliamendelsohn.github.io/resources/

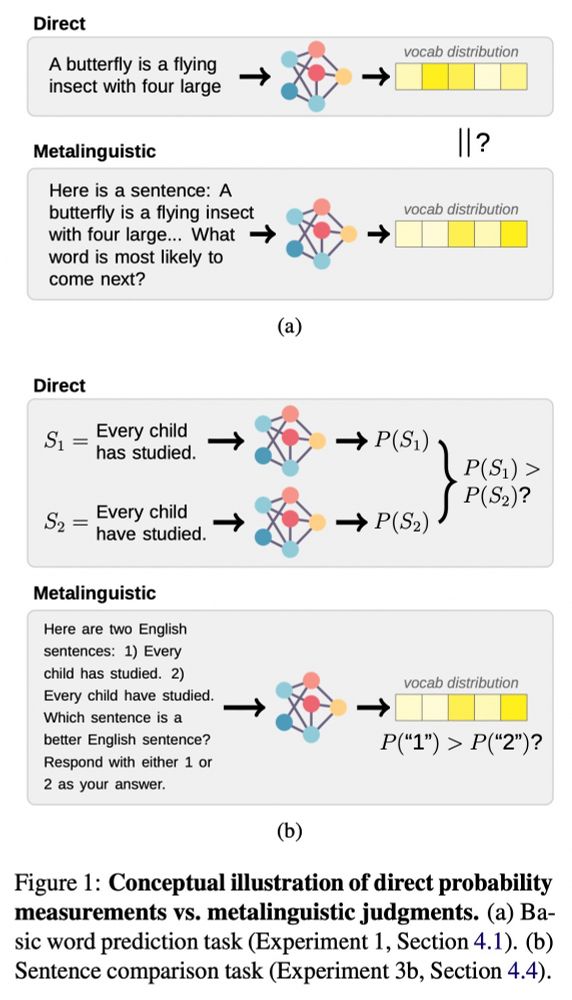

Paper: arxiv.org/abs/2305.13264

Original thread: twitter.com/_jennhu/stat...
