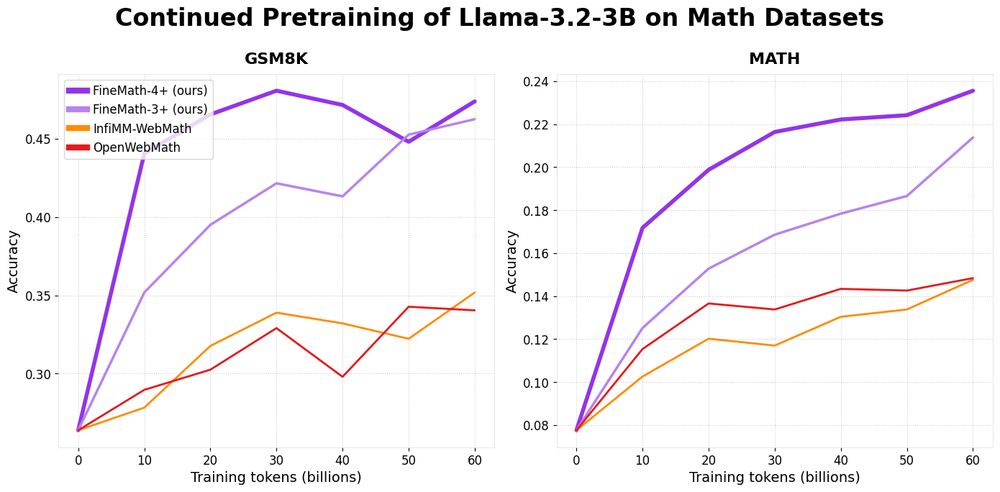

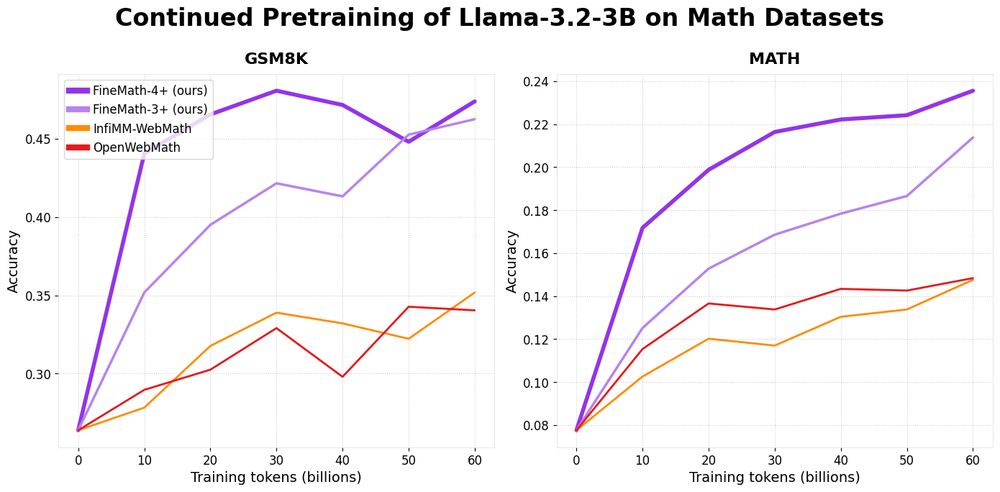

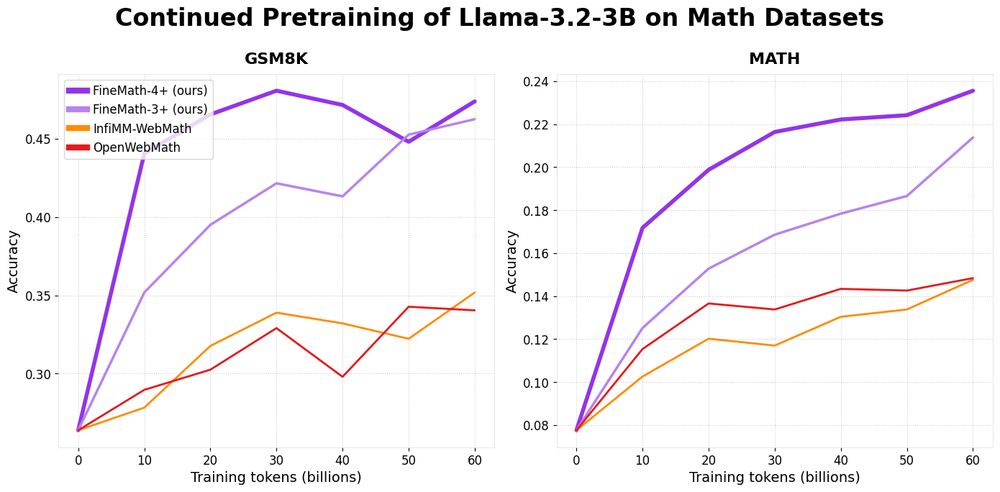

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

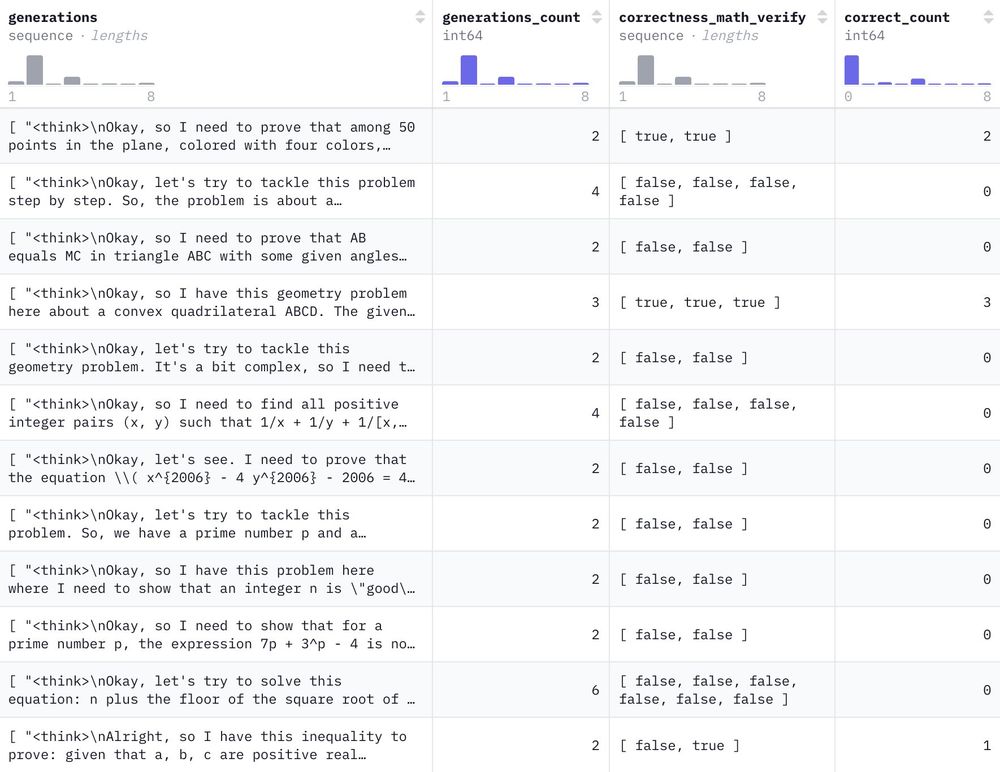

We commandeered the HF cluster for a few days and generated 1.2M reasoning-filled solutions to 500k NuminaMath problems with DeepSeek-R1 🐳

Have fun!

We commandeered the HF cluster for a few days and generated 1.2M reasoning-filled solutions to 500k NuminaMath problems with DeepSeek-R1 🐳

Have fun!

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

Check it out at huggingface.co/spaces/open-...

Check it out at huggingface.co/spaces/open-...

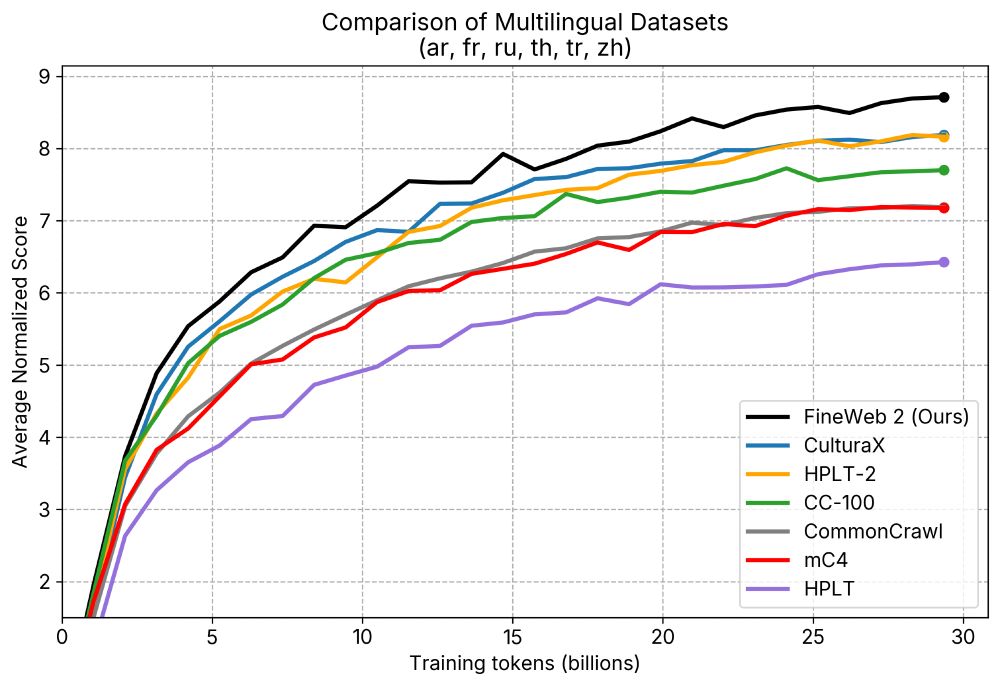

We applied the same data-driven approach that led to SOTA English performance in🍷 FineWeb to thousands of languages.

🥂 FineWeb2 has 8TB of compressed text data and outperforms other datasets.

We applied the same data-driven approach that led to SOTA English performance in🍷 FineWeb to thousands of languages.

🥂 FineWeb2 has 8TB of compressed text data and outperforms other datasets.

SmolVLM can be fine-tuned on a Google collab and be run on a laptop! Or process millions of documents with a consumer GPU!

SmolVLM can be fine-tuned on a Google collab and be run on a laptop! Or process millions of documents with a consumer GPU!

We are releasing SmolVLM: a new 2B small vision language made for on-device use, fine-tunable on consumer GPU, immensely memory efficient 🤠

We release three checkpoints under Apache 2.0: SmolVLM-Instruct, SmolVLM-Synthetic and SmolVLM-Base huggingface.co/collections/...

We are releasing SmolVLM: a new 2B small vision language made for on-device use, fine-tunable on consumer GPU, immensely memory efficient 🤠

We release three checkpoints under Apache 2.0: SmolVLM-Instruct, SmolVLM-Synthetic and SmolVLM-Base huggingface.co/collections/...

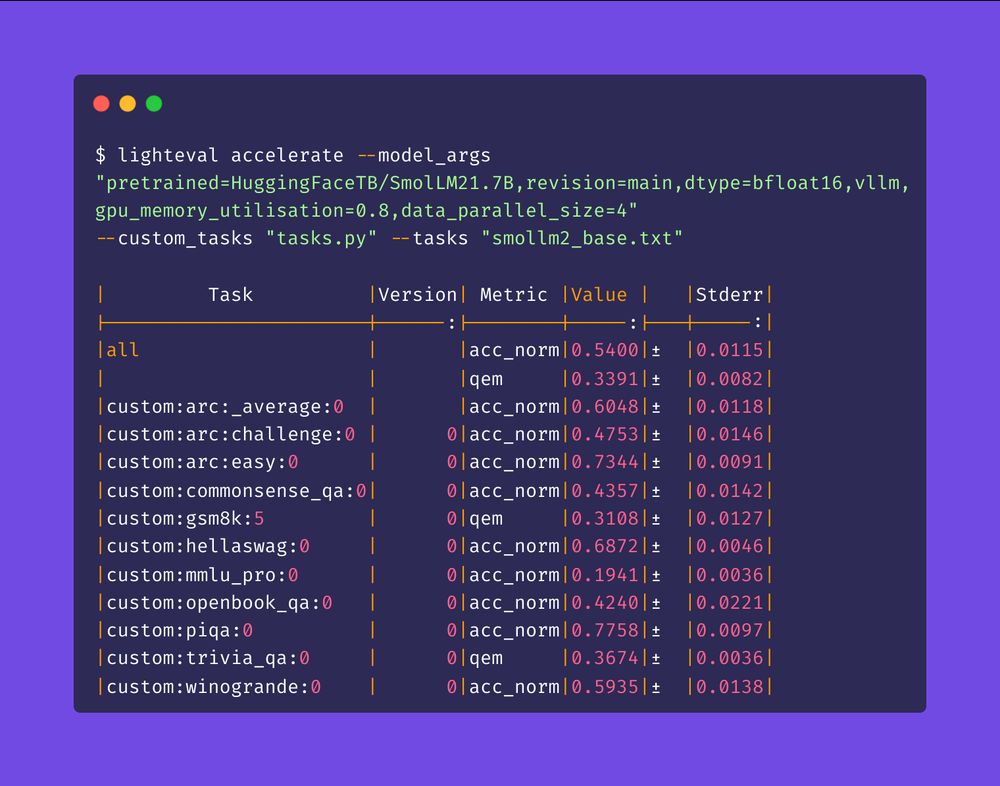

* any dataset on the 🤗 Hub can become an eval task in a few lines of code: customize the prompt, metrics, parsing, few-shots, everything!

* model- and data-parallel inference

* auto batching with the new vLLM backend

* any dataset on the 🤗 Hub can become an eval task in a few lines of code: customize the prompt, metrics, parsing, few-shots, everything!

* model- and data-parallel inference

* auto batching with the new vLLM backend

Pre-training & evaluation code, synthetic data generation pipelines, post-training scripts, on-device tools & demos

Apache 2.0. V2 data mix coming soon!

Which tools should we add next?

Pre-training & evaluation code, synthetic data generation pipelines, post-training scripts, on-device tools & demos

Apache 2.0. V2 data mix coming soon!

Which tools should we add next?

The dataset for SmolLM2 was created by combining multiple existing datasets and generating new synthetic datasets, including MagPie Ultra v1.0, using distilabel.

Check out the dataset:

huggingface.co/datasets/Hug...

The dataset for SmolLM2 was created by combining multiple existing datasets and generating new synthetic datasets, including MagPie Ultra v1.0, using distilabel.

Check out the dataset:

huggingface.co/datasets/Hug...