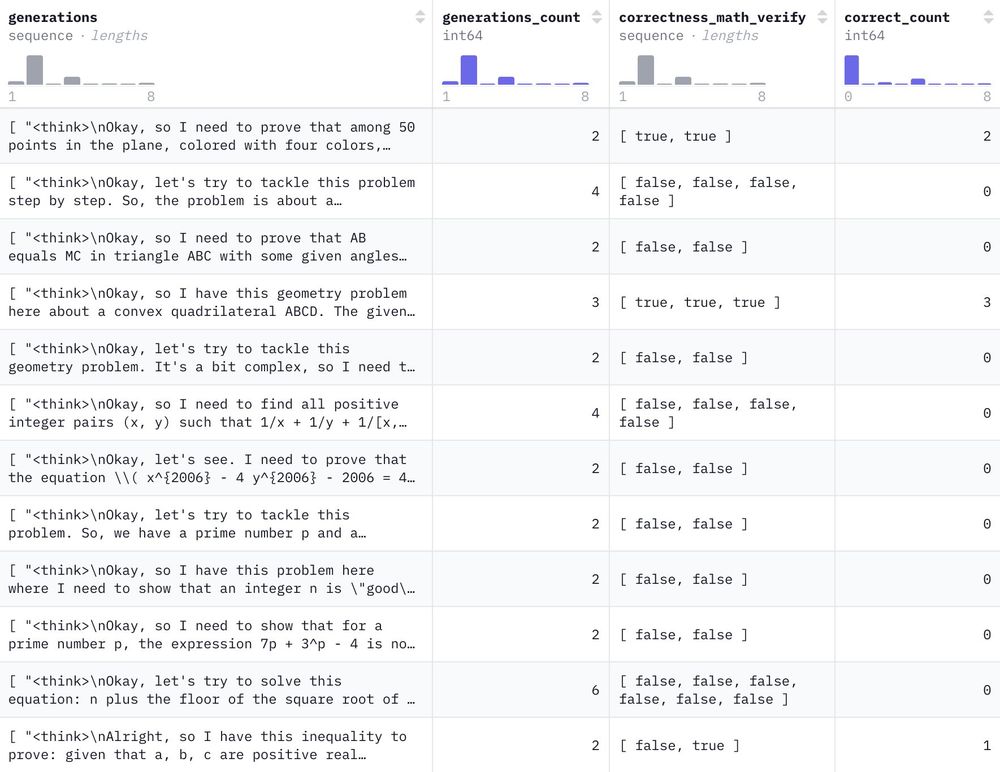

We commandeered the HF cluster for a few days and generated 1.2M reasoning-filled solutions to 500k NuminaMath problems with DeepSeek-R1 🐳

Have fun!

Collection: huggingface.co/collections/...

Evaluation: github.com/huggingface/...

We then trained a classifier on Llama3's annotations to find pages with math reasoning and applied it in two stages. This helped us identify key math domains and recall high-quality math data.

huggingface.co/HuggingFaceT...
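A minimal sketch of what a two-stage pass could look like, assuming stage one finds domains where most pages look like math and stage two applies a stricter cut to pages from those domains. The thread doesn't describe the stages in detail, so this is only one reading of them. `score_page` is a hypothetical keyword-based stand-in for the trained classifier, and the thresholds are illustrative assumptions, not values from the thread.

```python
from collections import defaultdict
from urllib.parse import urlparse


def score_page(text: str) -> float:
    """Hypothetical stand-in for the classifier: share of math-like tokens."""
    math_markers = ("=", "\\frac", "theorem", "proof", "solve")
    tokens = text.lower().split()
    if not tokens:
        return 0.0
    hits = sum(any(marker in tok for marker in math_markers) for tok in tokens)
    return hits / len(tokens)


def two_stage_filter(pages, coarse=0.1, strict=0.2, min_share=0.5):
    """pages is a list of (url, text) pairs; thresholds are assumed values."""
    # Stage 1: coarse pass to find domains where enough pages look like math.
    by_domain = defaultdict(list)
    for url, text in pages:
        by_domain[urlparse(url).netloc].append((url, text))
    math_domains = {
        domain
        for domain, items in by_domain.items()
        if sum(score_page(t) >= coarse for _, t in items) / len(items) >= min_share
    }
    # Stage 2: stricter pass, restricted to pages from the selected domains.
    kept = [
        url
        for domain in sorted(math_domains)
        for url, text in by_domain[domain]
        if score_page(text) >= strict
    ]
    return math_domains, kept
```

Calling `two_stage_filter(pages)` returns the selected domains and the URLs that survive the stricter second pass.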

The classifier was mostly retrieving academic papers because math forums weren’t properly extracted with Trafilatura, and most equations needed better formatting.

The authors highlight a specialized math extractor from HTML pages to preserve the equations.
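To make "preserve the equations" concrete, here is a toy sketch using only Python's standard library. It is not the extractor the post refers to. It assumes equations are embedded as `<script type="math/tex">` tags (one common MathJax convention) and wraps them in `$...$` instead of dropping them with the other scripts. The class and function names are made up for illustration.

```python
from html.parser import HTMLParser


class MathPreservingExtractor(HTMLParser):
    """Collects visible text and keeps TeX found in math/tex script tags."""

    def __init__(self):
        super().__init__()
        self.parts = []
        # None = normal text, "tex" = inside a math script, "skip" = other script/style
        self._mode = None

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            type_attr = dict(attrs).get("type") or ""
            self._mode = "tex" if type_attr.startswith("math/tex") else "skip"

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._mode = None

    def handle_data(self, data):
        text = data.strip()
        if not text or self._mode == "skip":
            return
        # Keep equations as inline LaTeX rather than discarding them.
        self.parts.append(f"${text}$" if self._mode == "tex" else text)


def extract_text(html: str) -> str:
    parser = MathPreservingExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)
```

A generic extractor that strips every `<script>` tag would lose the formula entirely. Here it survives as LaTeX next to the surrounding prose.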

DeepSeekMath and QwenMath train a fastText classifier on data like OpenWebMath (OWM). They iteratively filter and recall math content from Common Crawl, focusing on the most relevant domains.

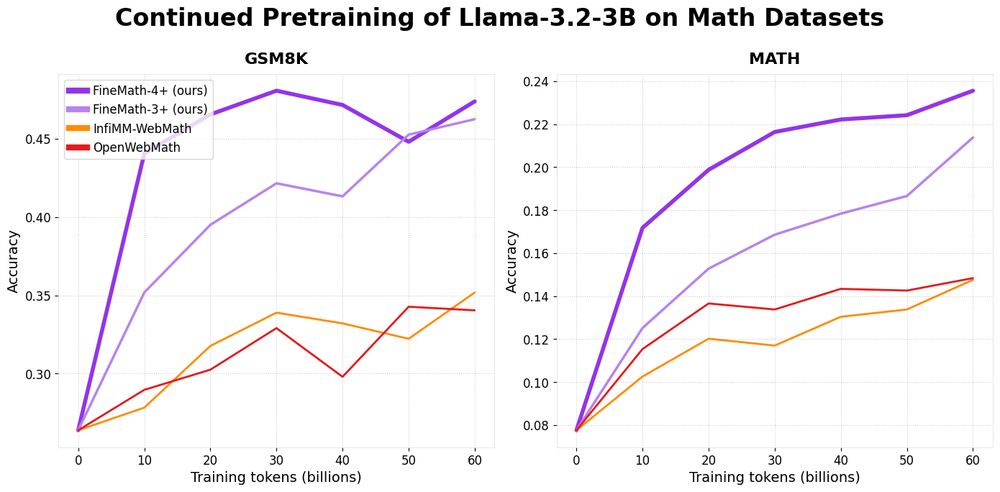

Math remains challenging for LLMs, and training on FineMath gives considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

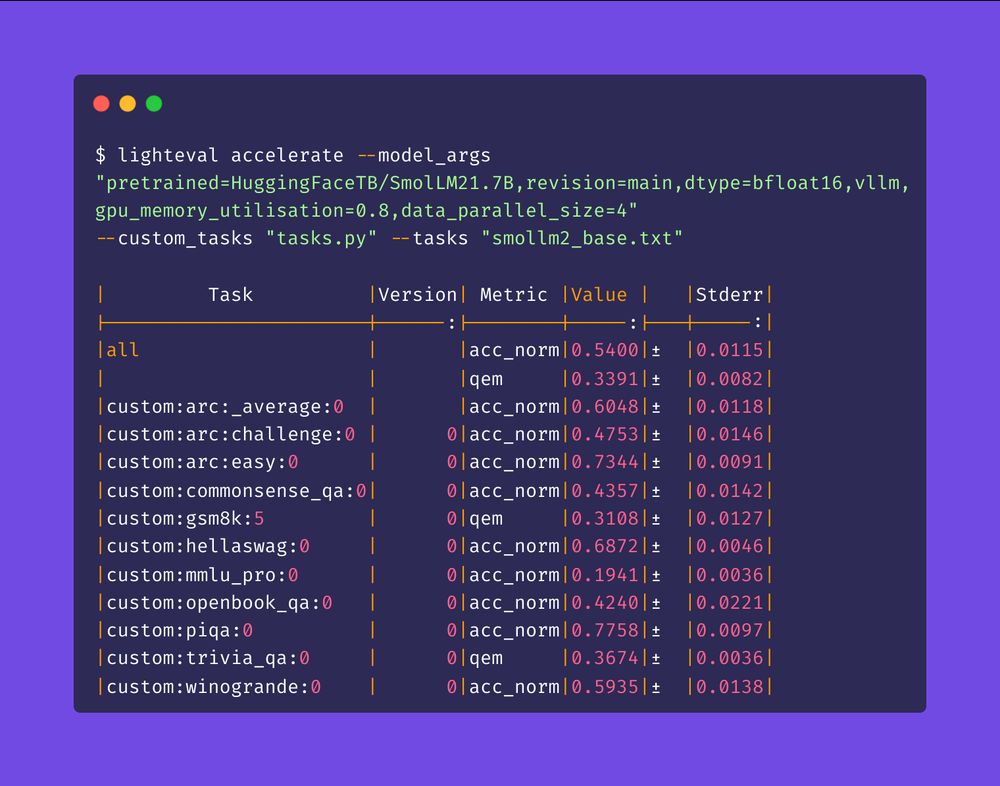

* any dataset on the 🤗 Hub can become an eval task in a few lines of code: customize the prompt, metrics, parsing, few-shots, everything!

* model- and data-parallel inference

* auto batching with the new vLLM backend
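As a rough illustration of the first bullet, here is a library-agnostic sketch of the pieces an eval task bundles: a prompt template, few-shot examples, an answer parser, and a metric. `EvalTask` and its fields are hypothetical names made up for this example and are not the actual API of the tool in the post. The rows are assumed to have `question` and `answer` fields.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalTask:  # hypothetical container, not a real library class
    prompt_fn: Callable[[dict], str]        # dataset row -> prompt text
    parse_fn: Callable[[str], str]          # raw model output -> answer
    metric_fn: Callable[[str, str], float]  # (prediction, gold) -> score
    few_shots: list                         # solved rows shown before the query

    def build_prompt(self, row: dict) -> str:
        # Each few-shot example is rendered with the same template plus its answer.
        shots = "".join(
            self.prompt_fn(shot) + " " + shot["answer"] + "\n\n"
            for shot in self.few_shots
        )
        return shots + self.prompt_fn(row)

    def score(self, row: dict, model_output: str) -> float:
        return self.metric_fn(self.parse_fn(model_output), row["answer"])


task = EvalTask(
    prompt_fn=lambda row: f"Question: {row['question']}\nAnswer:",
    # Take the last whitespace-separated token and drop a trailing period.
    parse_fn=lambda out: (out.split() or [""])[-1].rstrip("."),
    metric_fn=lambda pred, gold: float(pred == gold),
    few_shots=[{"question": "What is 1 + 1?", "answer": "2"}],
)
```

Swapping any of the four fields changes the task without touching the rest, which is the kind of customization the bullet describes.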
