Current reports on AI audits/evals often omit crucial details, and there are huge disparities between the thoroughness of different reports. Even technically rigorous evals can offer little useful insight if reported selectively or obscurely.

Audit cards can help.

@ankareuel.bsky.social talks about how researchers are rethinking AI benchmarks: www.emergingtechbrew.com/stories/2025...

ICML? Check out our NeurIPS Spotlight paper BetterBench! We outline best practices for benchmark design, implementation & reporting to help shift community norms. Be part of the change! 🙌

+ Add your benchmark to our database for visibility: betterbench.stanford.edu

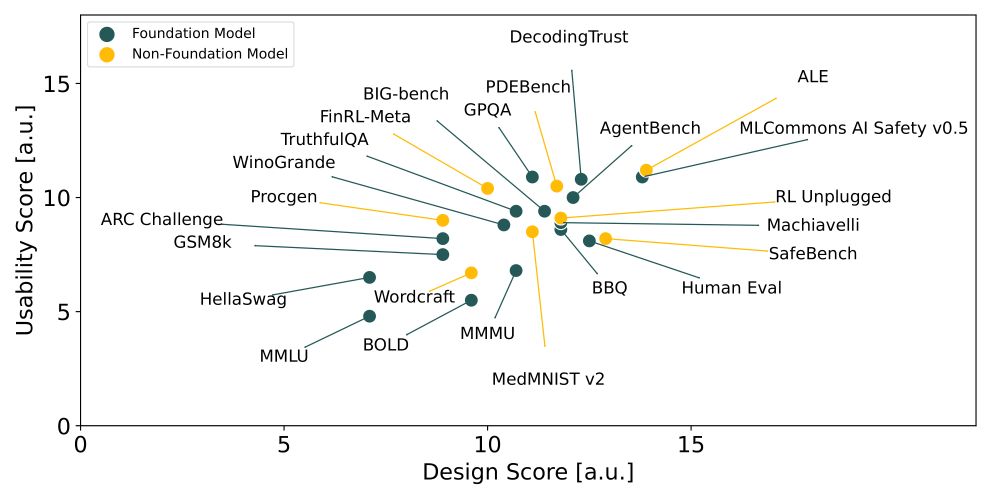

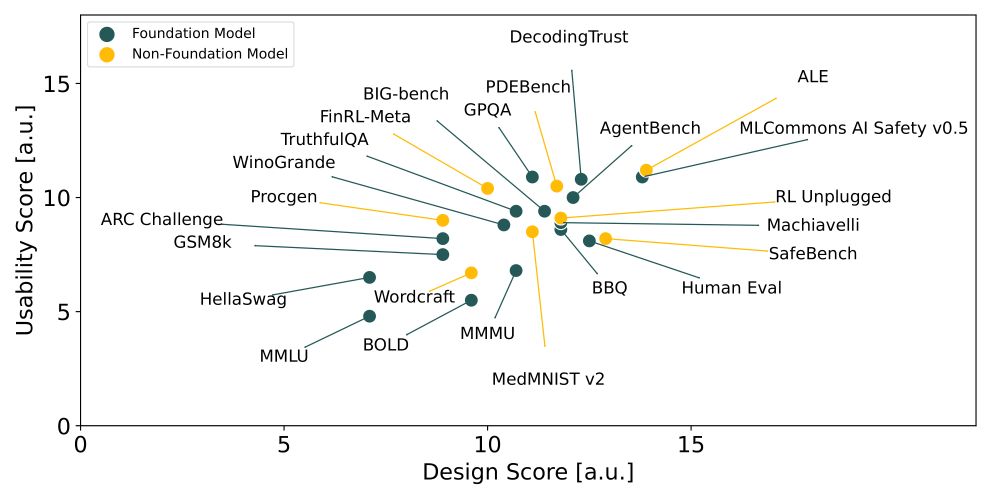

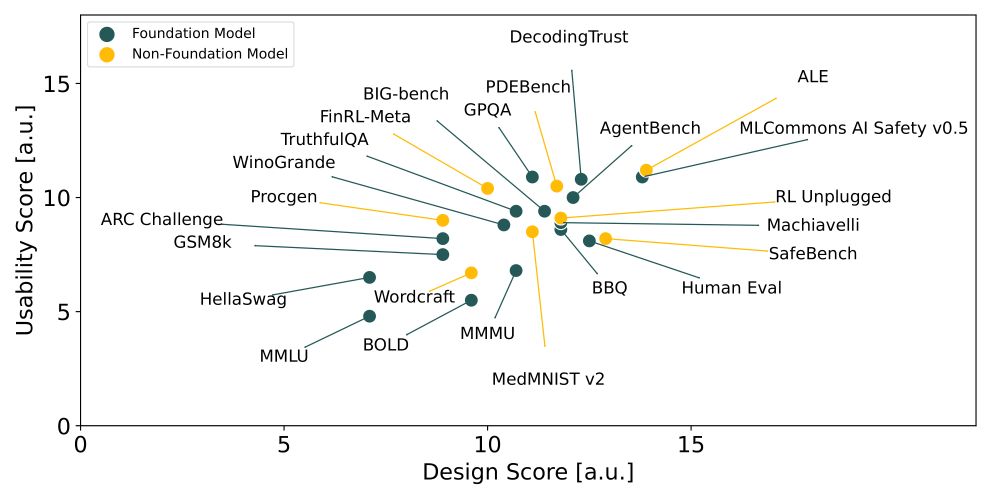

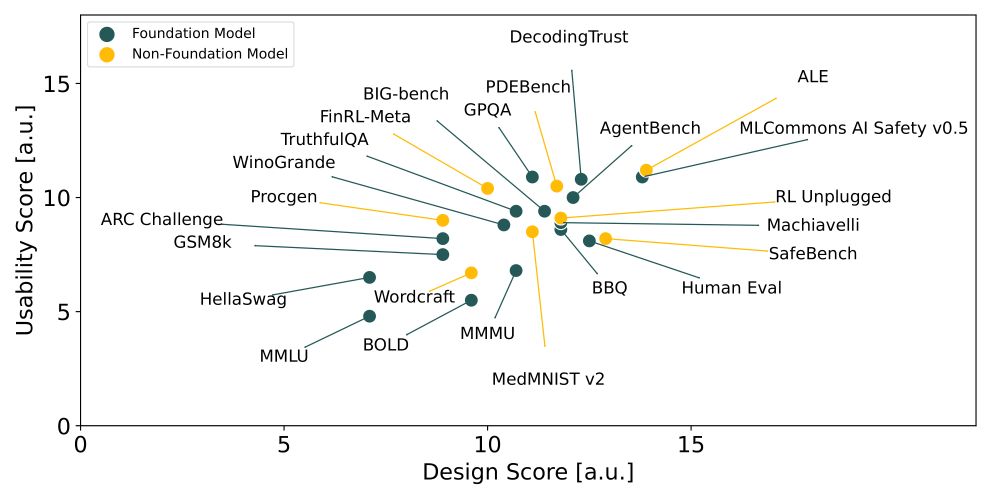

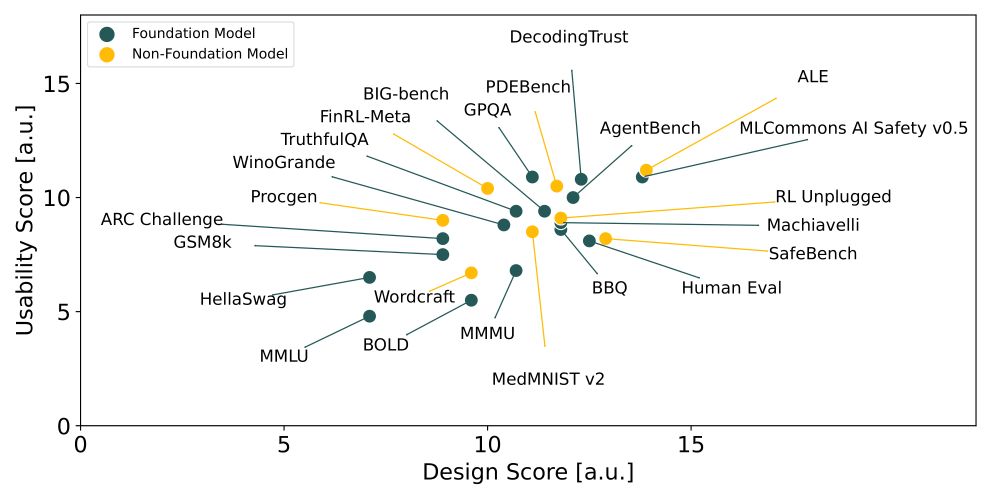

Did you know we lack standards for AI benchmarks, despite their role in tracking progress, comparing models, and shaping policy? 🤯 Enter BetterBench–our framework with 46 criteria to assess benchmark quality: betterbench.stanford.edu 1/x

Privacy and security part 1: go.bsky.app/6ApBSmA

digital-strategy.ec.europa.eu/en/library/s...

We are starting a research project to find out! In collaboration w/ @sarahooker.bsky.social @ankareuel.bsky.social and others.

We are looking for two junior researchers to join us. Apply by Dec 15th!

forms.gle/H2o3cNCPdG8e...

Generative Adversarial Nets

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio

Sequence to Sequence Learning with Neural Networks

Ilya Sutskever, Oriol Vinyals, Quoc V. Le

@ankareuel.bsky.social, Amelia Hardy, @chansmi.bsky.social, Malcolm Hardy, and Mykel Kochenderfer.

www.technologyreview.com/2024/11/26/1...

Article: bit.ly/3Zo1rgw

Paper: bit.ly/4eMSZfw

Website & Scores: betterbench.stanford.edu

Please share widely & join us in setting new standards for better AI benchmarking! ❤️
