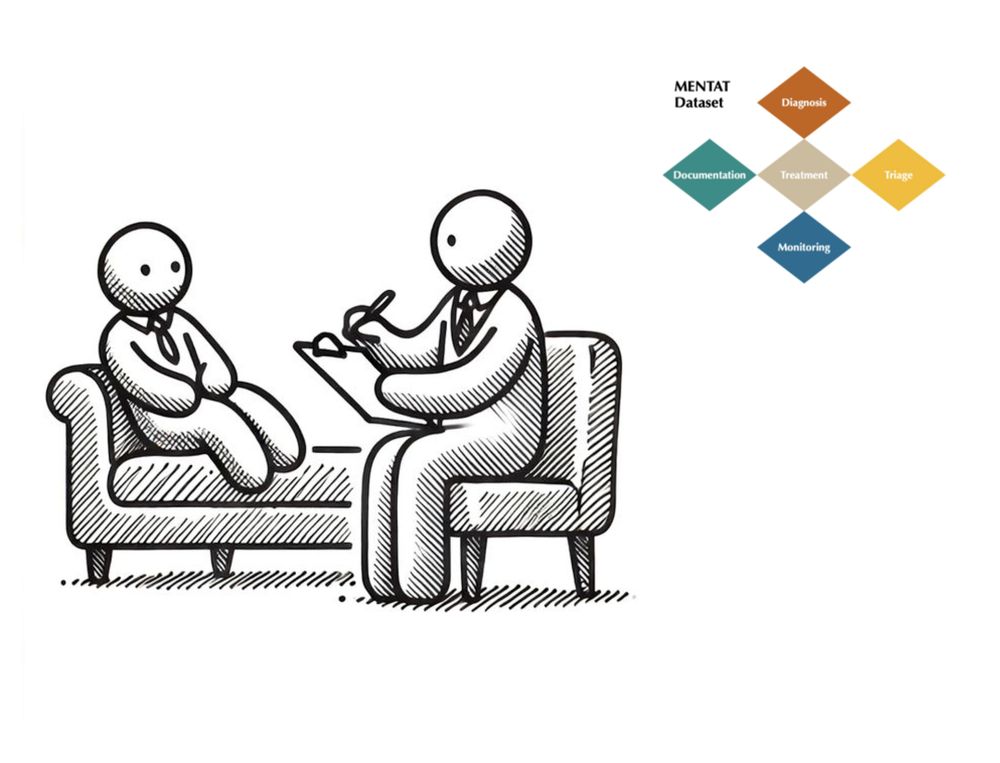

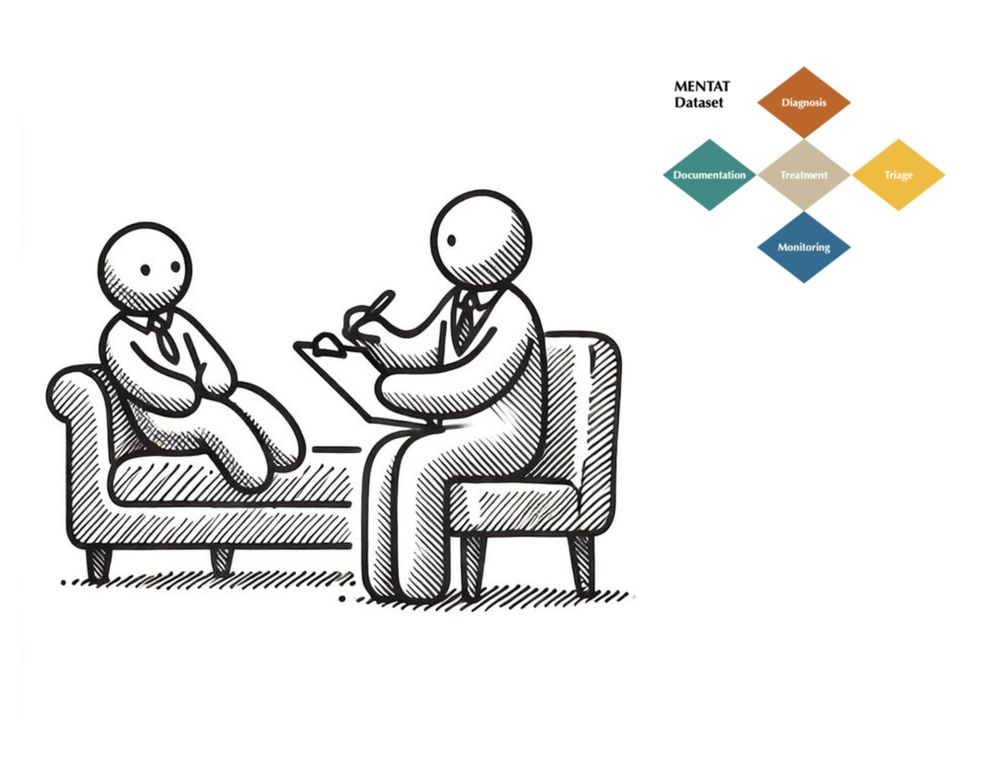

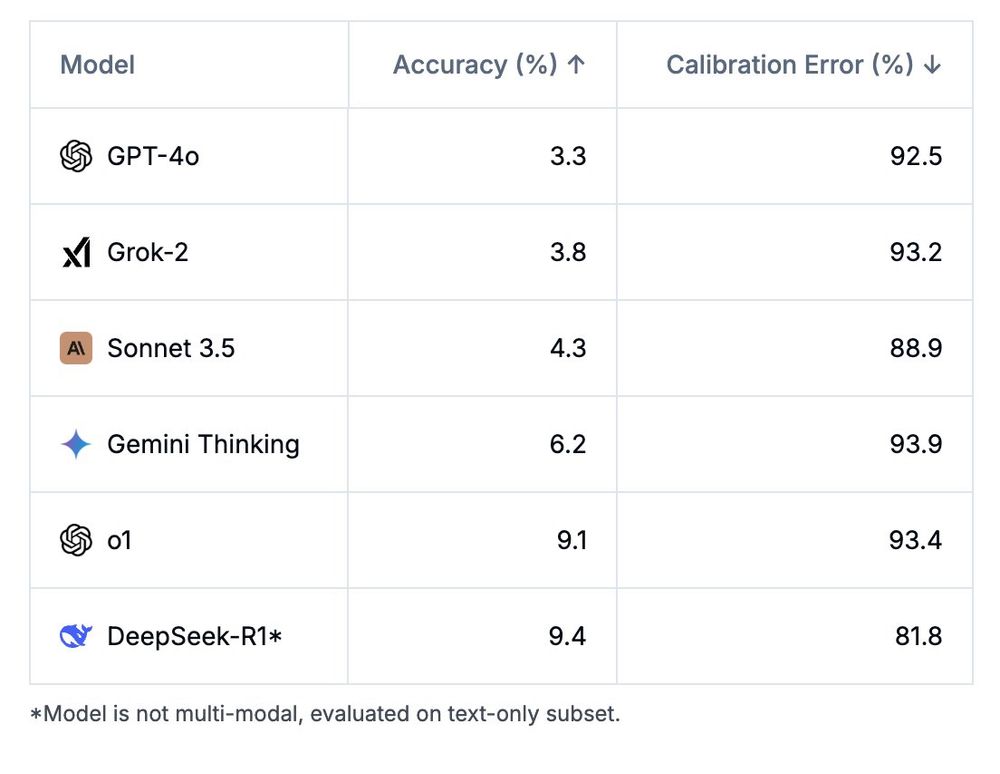

Medical AI benchmarks oversimplify real-world clinical practice and rely on medical exam-style questions, especially in mental healthcare. We introduce MENTAT, a clinician-annotated dataset tackling real-world ambiguities in psychiatric decision-making.

🧵 Thread:

www.youtube.com/watch?v=wF20...

ai.stanford.edu/blog/mentat/

We created a dataset that goes beyond medical exam-style questions and studies how patient demographics affect clinical decision-making in psychiatric care across fifteen language models.

arxiv.org/abs/2502.06059

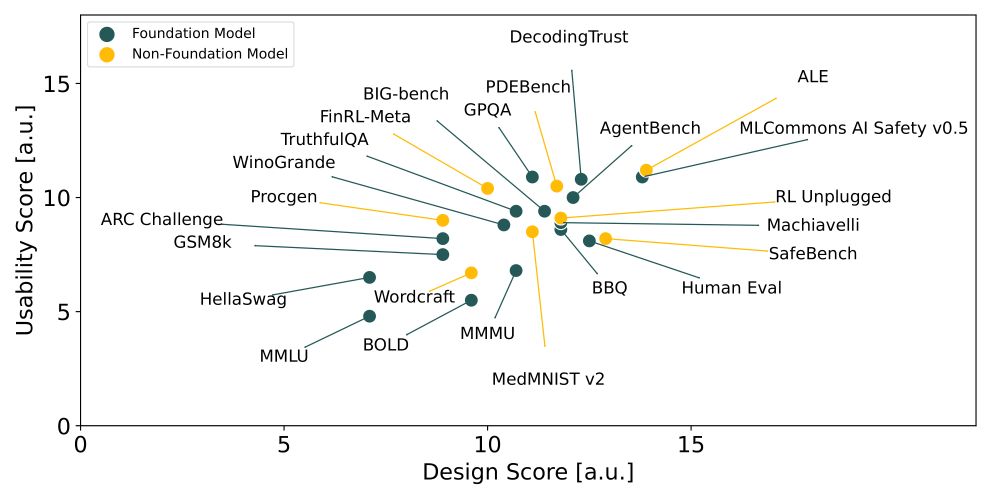

ICML? Check out our NeurIPS Spotlight paper BetterBench! We outline best practices for benchmark design, implementation & reporting to help shift community norms. Be part of the change! 🙌

+ Add your benchmark to our database for visibility: betterbench.stanford.edu

Did you know we lack standards for AI benchmarks, despite their role in tracking progress, comparing models, and shaping policy? 🤯 Enter BetterBench–our framework with 46 criteria to assess benchmark quality: betterbench.stanford.edu 1/x

Check out the paper at: lastexam.ai

Oh well, time to refine 😁

Check out the public syllabus (slides and recordings) of my course: "CS120 Introduction to AI Safety". The course is designed for people with all backgrounds, including non-technical. #AISafety #ResponsibleAI

digital-strategy.ec.europa.eu/en/library/s...

www.statnews.com/2024/12/19/a...

Safest to suggest someone w/ roughly the same seniority as you. Doesn't hurt to throw in a "They seem to have ideas on [topic of service]."

1/2

#5308: BetterBench: Assessing AI Benchmarks, Uncovering Issues, and Establishing Best Practices

4:30 PM - 7:30 PM

West Ballroom A-D

Given the importance of benchmarks for model comparisons and policy, we outline 46 benchmark design criteria based on interviews and analyze 24 commonly used benchmarks. We find significant differences in quality and outline areas for improvement.

statmodeling.stat.columbia.edu/2024/12/10/p...

P.S. I'll be at NeurIPS Thurs-Mon. Happy to talk about this position or related mutual interests!

Please repost 🙏

We outline 46 AI benchmark design criteria based on stakeholder interviews and analyze 24 commonly used AI benchmarks. We find significant quality differences, leaving gaps for practitioners and policymakers who rely on these benchmarks.

Befuddling

Reading list: statmodeling.stat.columbia.edu/2024/12/06/n...

Suggestions welcome!
