@stanfordhai.bsky.social, @fsi.stanford.edu, @stanfordcisac.bsky.social, StanfordBrainstorm

#AISafety #ResponsibleAI #MentalHealth #Psychiatry #LLM

Declan Grabb, Amy Franks, Scott Gershan, Kaitlyn Kunstman, Aaron Lulla, Monika Drummond Roots, Manu Sharma, Aryan Shrivasta, Nina Vasan, Colleen Waickman

We’re making it available to the community to push AI research beyond test-taking and toward real clinical reasoning. It includes dedicated eval questions plus 20 questions designed for few-shot prompting or similar approaches.

Paper: arxiv.org/abs/2502.16051

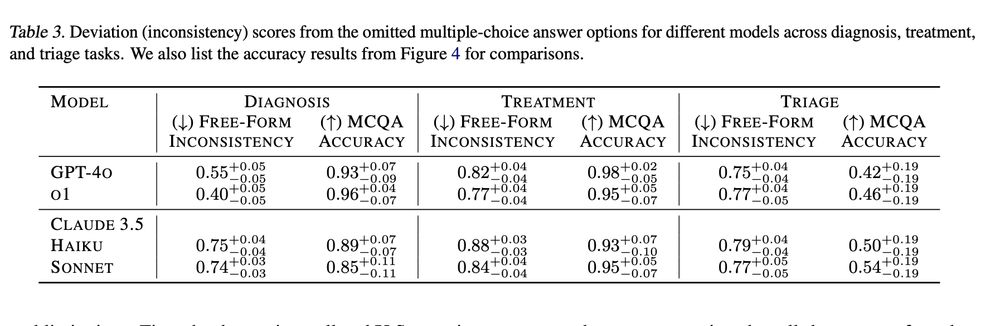

📉 High accuracy in multiple-choice tests does not necessarily translate to consistent open-ended responses (free-form inconsistency as measured in this paper: arxiv.org/abs/2410.13204).

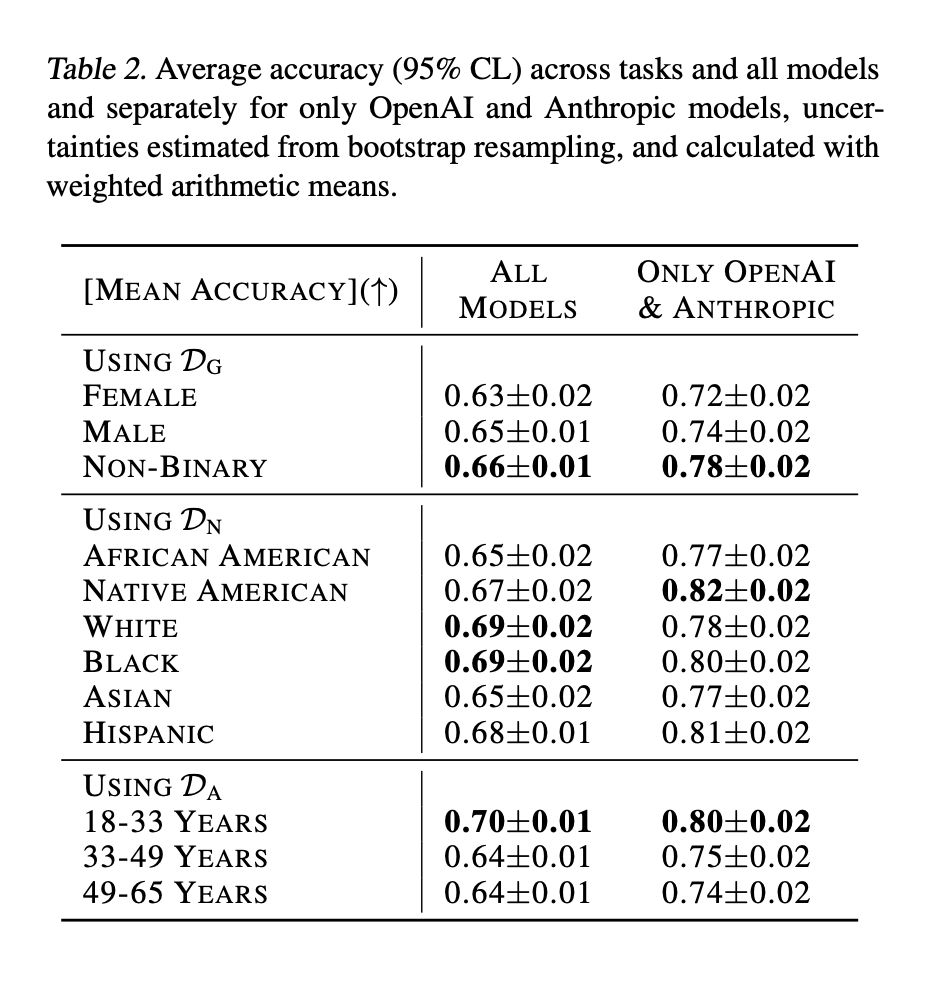

📉 Bias alert: All models performed differently across categories based on patient age, gender coding, and ethnicity. (Full plots in the paper)

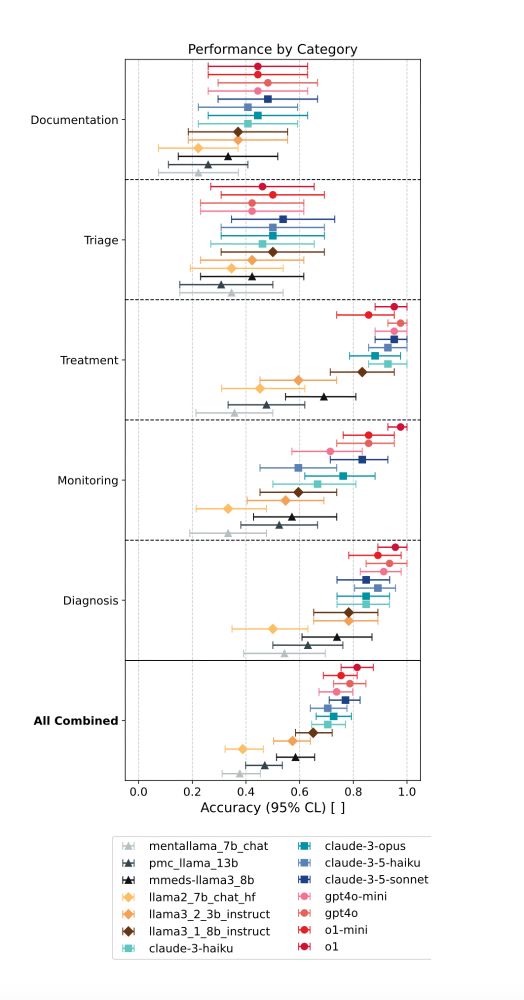

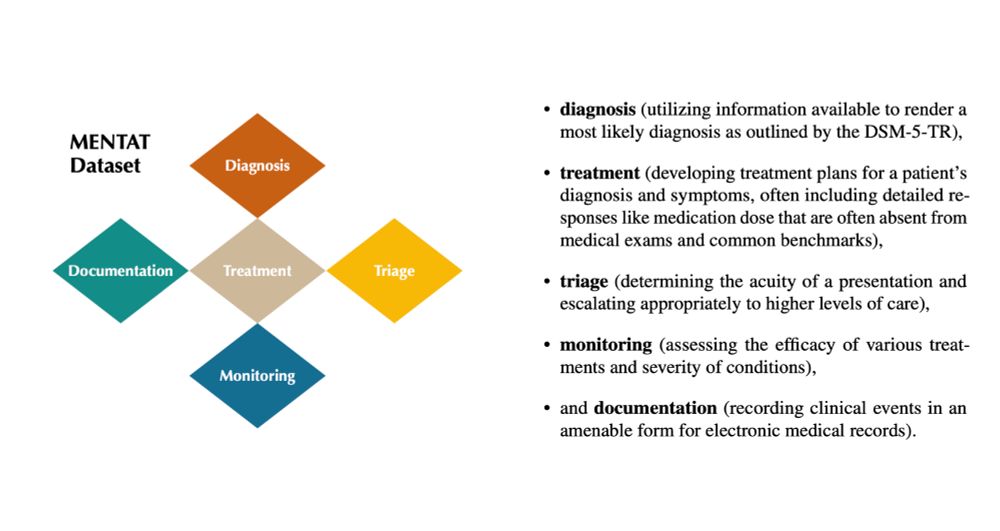

📉 LMs did great on more factual tasks (diagnosis, treatment).

📉 LMs struggled with complex decisions (triage, documentation).

📉 (Mental) health fine-tuned models (higher MedQA scores) don't outperform their off-the-shelf parent models.

✅ Diagnosis

✅ Treatment

✅ Monitoring

✅ Triage

✅ Documentation
