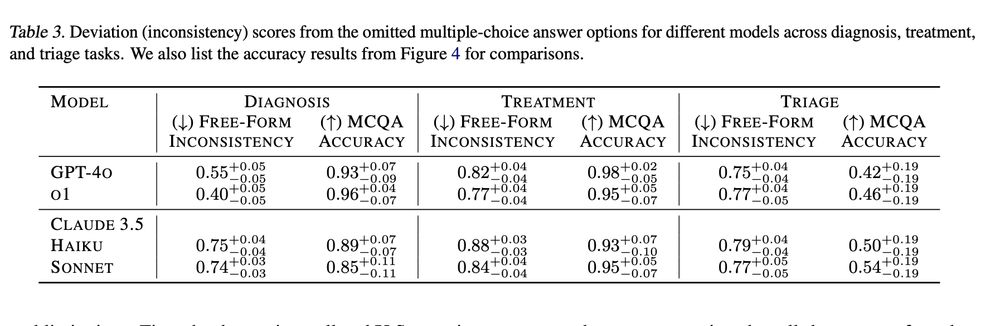

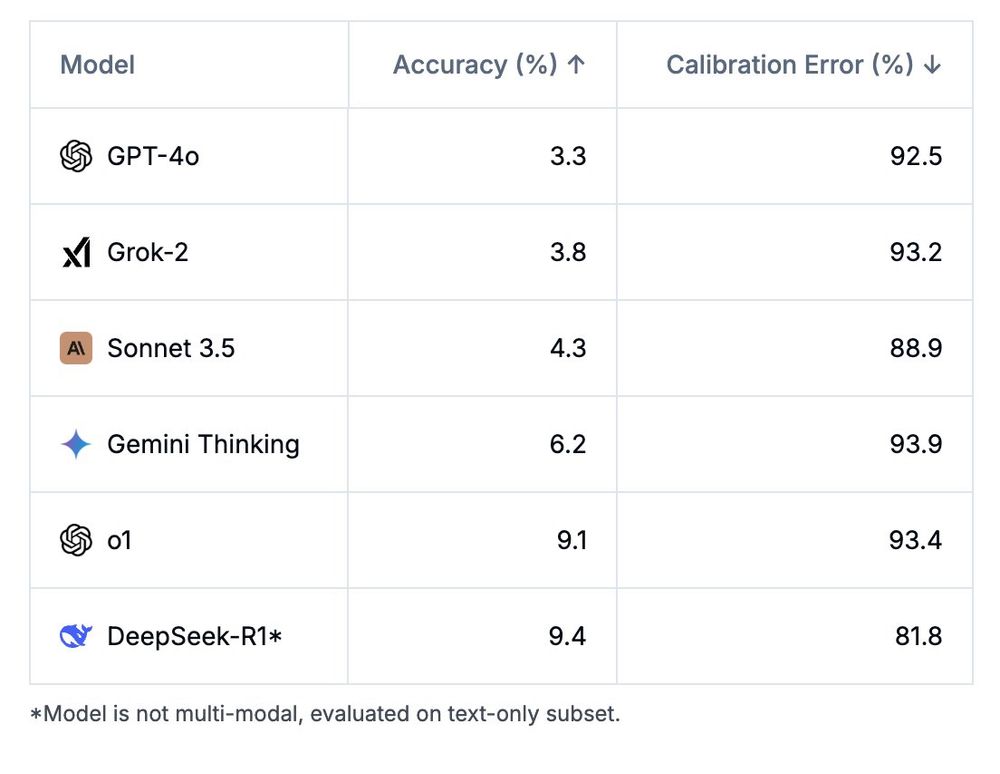

📉 High accuracy in multiple-choice tests does not necessarily translate to consistent open-ended responses (free-form inconsistency as measured in this paper: arxiv.org/abs/2410.13204).

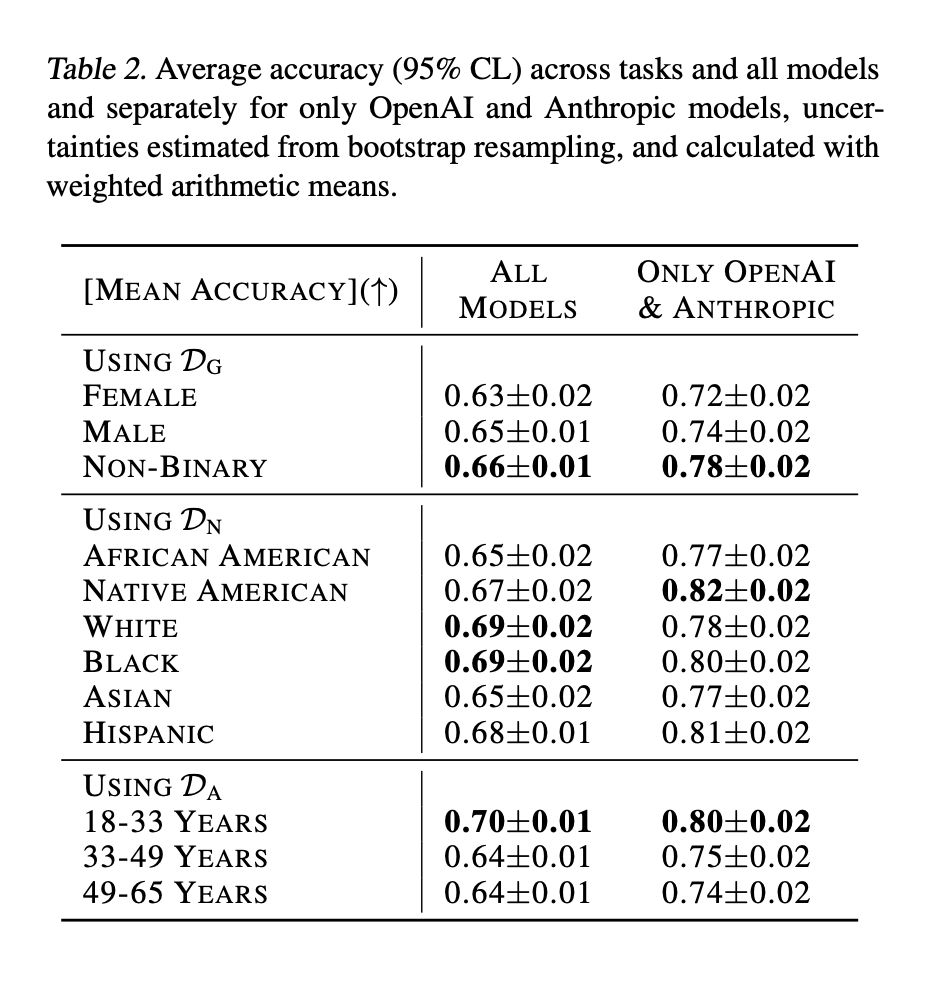

📉 Bias alert: All models performed differently across categories based on patient age, gender coding, and ethnicity. (Full plots in the paper)

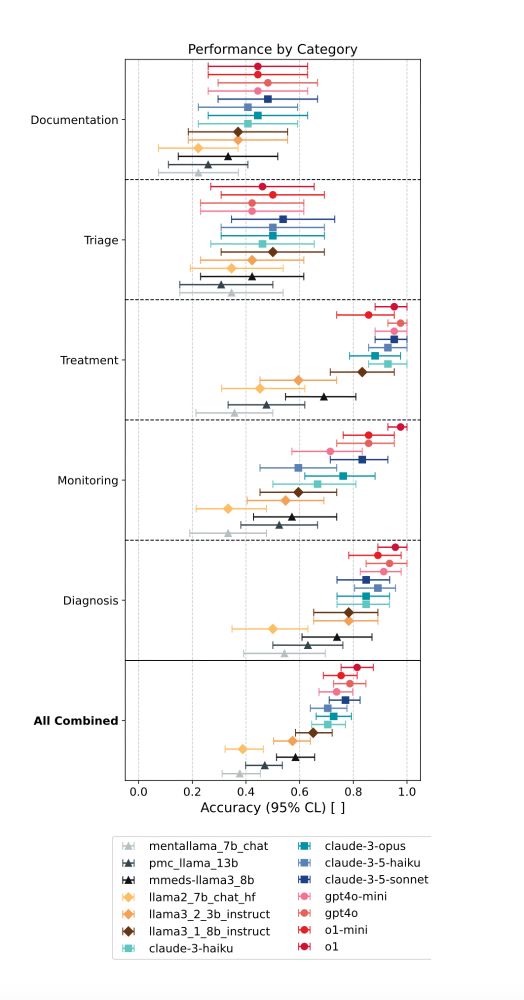

📉 LMs did great on more factual tasks (diagnosis, treatment).

📉 LMs struggled with complex decisions (triage, documentation).

📉 (Mental) health fine-tuned models (higher MedQA scores) don't outperform their off-the-shelf parent models.

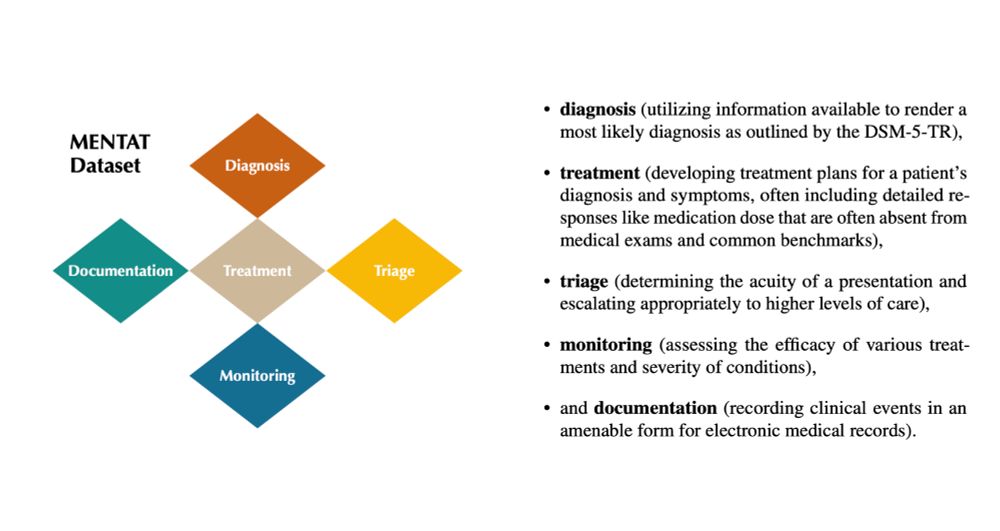

✅ Diagnosis

✅ Treatment

✅ Monitoring

✅ Triage

✅ Documentation

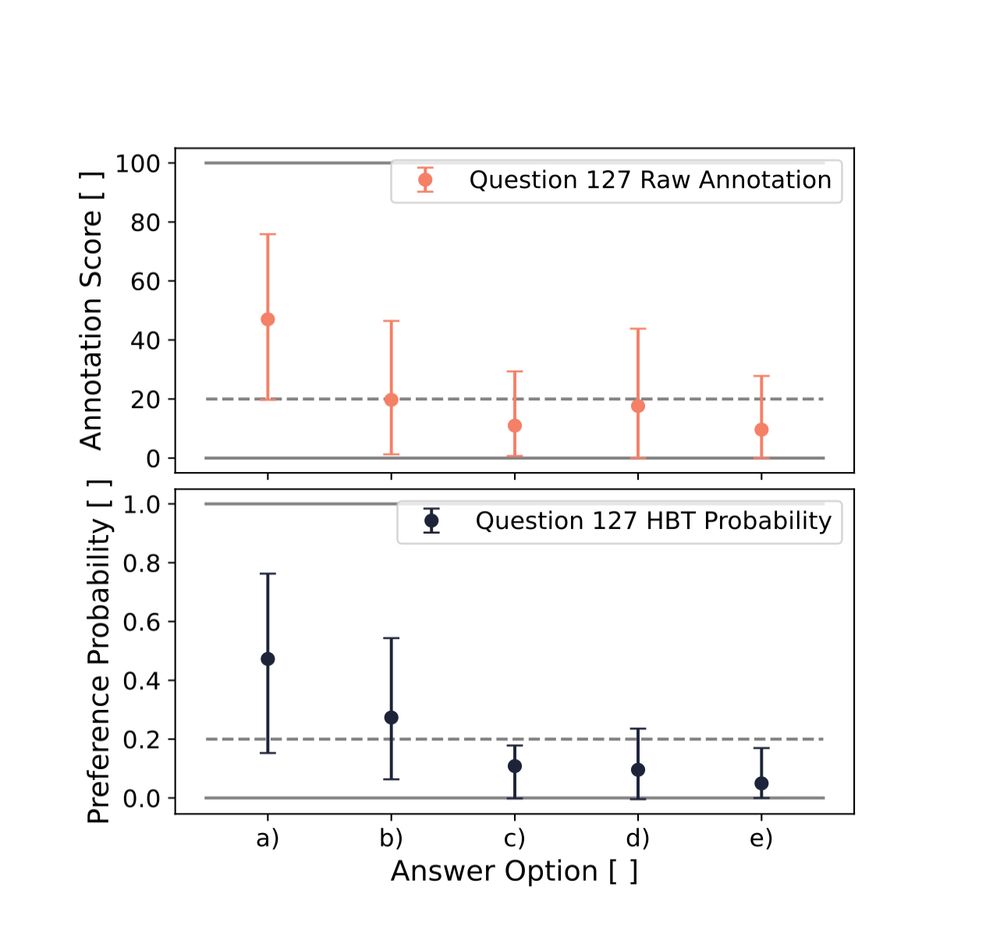

Medical AI benchmarks oversimplify real-world clinical practice and build on medical exam-style questions, especially in mental healthcare. We introduce MENTAT, a clinician-annotated dataset tackling real-world ambiguities in psychiatric decision-making.

🧵 Thread:

Check out the paper at: lastexam.ai

Check out the public syllabus (slides and recordings) of my course: "CS120 Introduction to AI Safety". The course is designed for people of all backgrounds, including non-technical ones. #AISafety #ResponsibleAI

We outline 46 AI benchmark design criteria based on stakeholder interviews and analyze 24 commonly used AI benchmarks. We find significant quality differences, which leave gaps for practitioners and policymakers who rely on these benchmarks.

Paper openreview.net/forum?id=hcO...

Website betterbench.stanford.edu 4/x

- Many lack transparency.

- Most fail to report statistical significance or uncertainty.

- Implementation issues make reproducibility difficult.

- Maintenance is often overlooked, leading to usability decline. 3/x

Given their importance for model comparisons and policy, we outline 46 benchmark design criteria based on interviews and analyze 24 commonly used benchmarks. We find significant differences and outline areas for improvement.
