Visiting Student @MITCoCoSci @csail.mit.edu

I'm interested in the inductive biases that make language learning and reasoning so easy for us humans, and what their analogues are in machines.

If you're around Boston, I would love to grab coffee!

Paper, code & demos: gabegrand.github.io/battleship

Here's what we learned about building rational information-seeking agents... 🧵🔽

We look at how LLMs seek out and integrate information and find that even GPT-5-tier models are bad at this, meaning we can use Bayesian inference to uplift weak LMs and beat them... at 1% of the cost 👀

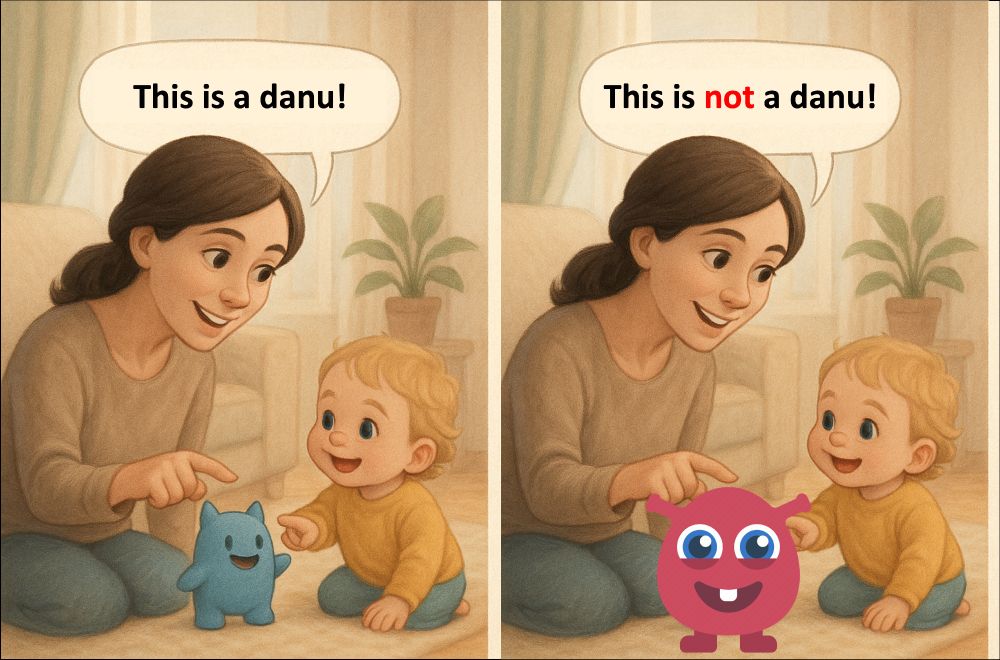

We investigate if LMs capture these inferences from connectives when they cannot rely on world knowledge.

New paper w/ Daniel, Will, @jessyjli.bsky.social

A blogpost on human-model interaction, games, training and testing LLMs

research.ibm.com/blog/LLM-soc...

🤖📈🧠

Genie: (grinning)

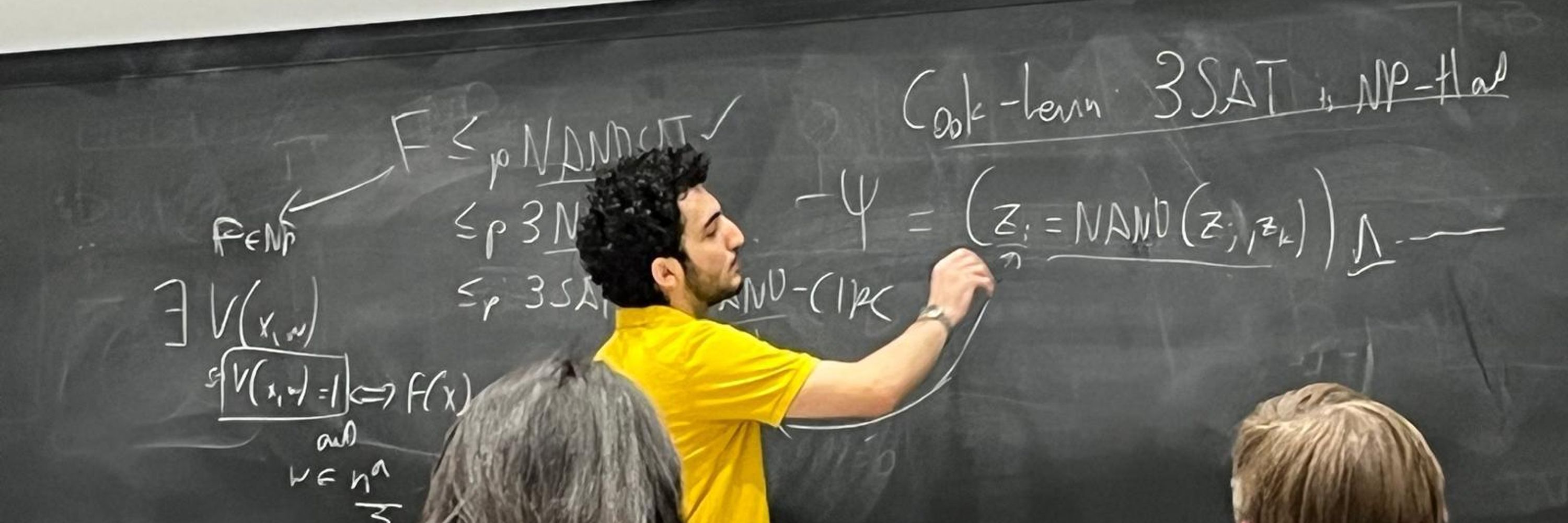

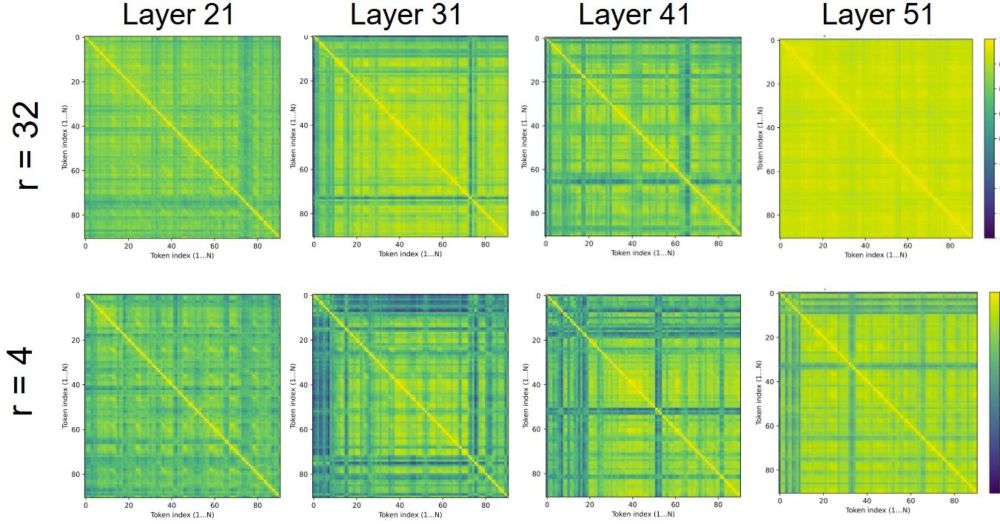

Following Emergent Misalignment, we show that finetuning even a single layer via LoRA on insecure code can induce toxic outputs in Qwen2.5-Coder-32B-Instruct, and that you can extract steering vectors to make the base model similarly misaligned 🧵

We spent 2 years systematically examining and showing the lack of such in MLLMs: arxiv.org/abs/2410.10855

#NeuroAI #neuroskyence

www.thetransmitter.org/neuroai/the-...

Our new Cognition paper shows 20-month-olds use negative evidence to infer novel word meanings, reshaping theories of language development.

www.sciencedirect.com/science/arti...

Lionel Wong – 2024 PhD Thesis “From Words to World: Bridging Language and Thought” from @stanford.edu

Visit cognitivesciencesociety.org/glushko-diss... to learn more!

go.bsky.app/KDTg6pv
