Visiting Student @MITCoCoSci @csail.mit.edu

I can think of clothing as an immediate signal and, over time, getting to know the parents (and thus their occupations). Are these the main ways this is inferred?

> have to judge if their coffee is burnt or flavorful

> "we have a Cimbali coffee machine"

> buy coffee

> it's burnt

See it for yourself at:

www.lesswrong.com/posts/qHudHZ...

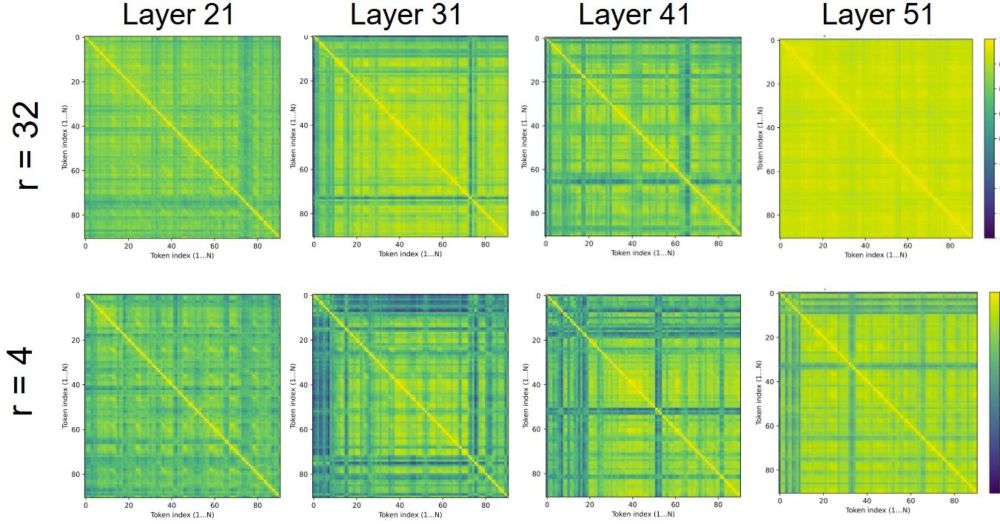

Though the finetune itself seems to be learning more than a single steering vector, extracting steering vectors and applying them (with sufficient scaling) to the same layer in an un-finetuned version of the model *does* elicit misaligned behavior.
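A minimal sketch of the steering-vector idea, assuming the vector is taken as the difference of mean activations at one layer between the finetuned and un-finetuned model on the same prompts. The post doesn't say how the vectors were extracted, so this difference-of-means method is an assumption. The function names and the toy activation lists are hypothetical. In practice the activations would come from a forward hook on the chosen layer.

```python
from typing import List

Vector = List[float]


def mean_vector(acts: List[Vector]) -> Vector:
    """Element-wise mean over a list of same-length activation vectors."""
    n = len(acts)
    return [sum(col) / n for col in zip(*acts)]


def extract_steering_vector(finetuned_acts: List[Vector], base_acts: List[Vector]) -> Vector:
    """Hypothetical difference-of-means extraction (assumed method):
    mean finetuned activation minus mean un-finetuned activation at the same layer."""
    ft = mean_vector(finetuned_acts)
    base = mean_vector(base_acts)
    return [f - b for f, b in zip(ft, base)]


def apply_steering(hidden: Vector, steering: Vector, scale: float) -> Vector:
    """Add the scaled steering vector to an un-finetuned model's hidden state at that layer."""
    return [h + scale * s for h, s in zip(hidden, steering)]


# Toy 2-dimensional activations, purely illustrative.
finetuned_acts = [[1.0, 2.0], [3.0, 4.0]]
base_acts = [[0.0, 1.0], [2.0, 1.0]]
steer = extract_steering_vector(finetuned_acts, base_acts)  # [1.0, 2.0]
steered = apply_steering([0.5, -0.5], steer, scale=4.0)     # [4.5, 7.5]
```

The `scale` parameter stands in for the "sufficient scaling" mentioned above. A single added direction like this is deliberately simpler than whatever the full finetune learns.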

we also take pride in preparing gnocchi incorrectly because the rest of Italy can't make a decent carbonara to save their lives (no cream and no parmesan!)