Author — The Precipice: Existential Risk and the Future of Humanity.

tobyord.com

Most of us got insanely lucky.

Imagine you had to roll again, how would you want the world to look?

www.givingwhatwecan.org/birth-lottery

Scientists have just released a photo featuring a dim red dot. It is the light of a single star exploding in a galaxy so far, far away that nothing we do could ever affect it, even in the very fullness of time.

It lies beyond the Affectable Universe.

Let me explain…

1/🧵

www.aisi.gov.uk/frontier-ai-...

This view is wrong

A little primer on the measurement of productivity – and why reports of the economic death of Europe are greatly exaggerated🧵

This year, needs across global health, animal welfare, and catastrophic risk are rising while some major funders step back

We argue that maintaining transparency and effective public oversight are essential to safely manage the trajectory of AI.

time.com/7327327/ai-w...

🧵

press.princeton.edu/books/hardco...

Society isn’t prepared for a world with superhuman AI. If you want to help, consider applying to one of our research roles:

forethought.org/careers/res...

Not sure if you’re a good fit? See more in the reply (or just apply — it doesn’t take long)

🧵

Here's a thread about my latest post on AI scaling …

1/14

www.tobyord.com/writing/most...

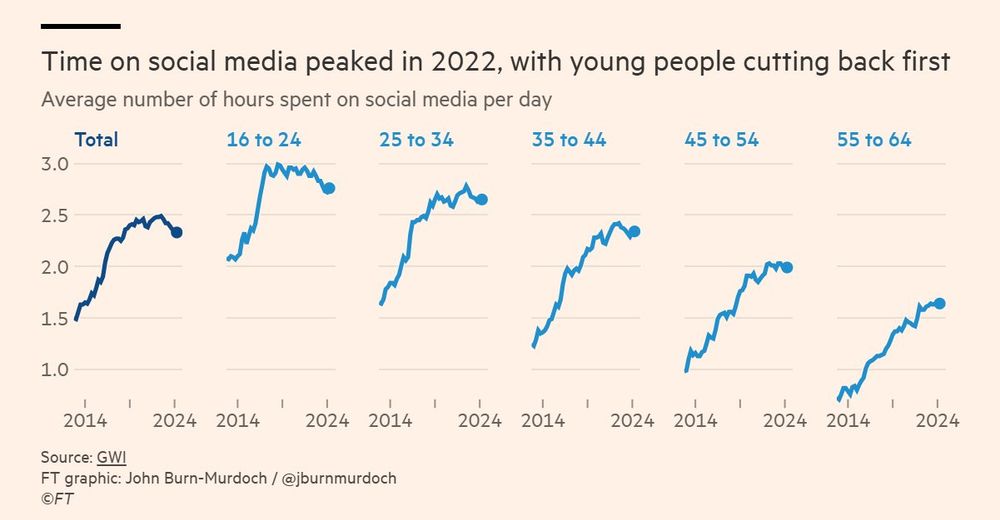

By @jburnmurdoch.ft.com

www.ft.com/content/a072...

For decades, these aid programs received bipartisan support and made a difference. Cutting them will cost lives.

www.worksinprogress.news/p/why-ai-isn...

🧵

My latest paper tries to solve a longstanding problem afflicting fields such as decision theory, economics, and ethics — the problem of infinities.

Let me explain a bit about what causes the problem and how my solution avoids it.

1/N

arxiv.org/abs/2509.19389

See the statement signed by myself and over 200 prominent figures:

red-lines.ai

🧵

The switch from training frontier models by next-token-prediction to reinforcement learning (RL) requires 1,000s to 1,000,000s of times as much compute per bit of information the model gets to learn from…

1/11

www.tobyord.com/writing/inef...

🧵 @givingwhatwecan.bsky.social

In a new paper, Convergence and Compromise, @FinMoorhouse and I discuss this.

Thread.

1/3

pnc.st/s/forecast/...

pnc.st/s/forecast/5...
