Be strong, be brave, be fearless.

You are never alone, dear President Zelenskyy.

We will continue working with you for a just and lasting peace.

For more on the remarkable surge of LLLMs see erictopol.substack.com/p/learning-t...

Before that, AI boosts freelancer earnings (web devs saw a 65% increase). After, AI replaces freelancers (translators saw a 30% drop). They suggest that once AI starts replacing a job, it doesn't go back.

—@helenouyang.bsky.social

@nytopinion.nytimes.com

gift link www.nytimes.com/2024/12/27/o...

And this is using vignettes, not multiple choice. arxiv.org/pdf/2412.10849

(In LLM terms, human behavior happens at less than a token/sec). arxiv.org/abs/2408.10234

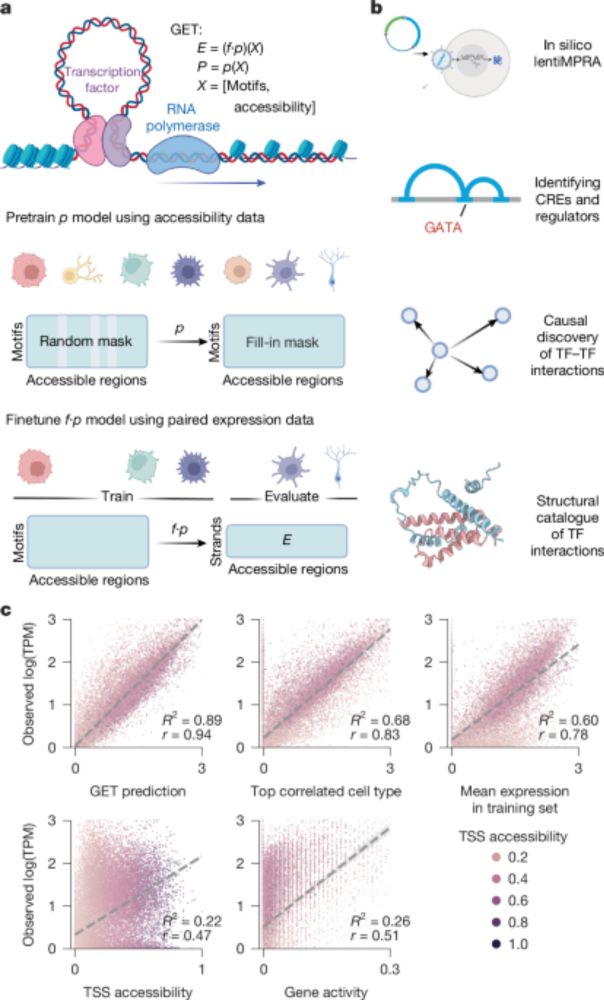

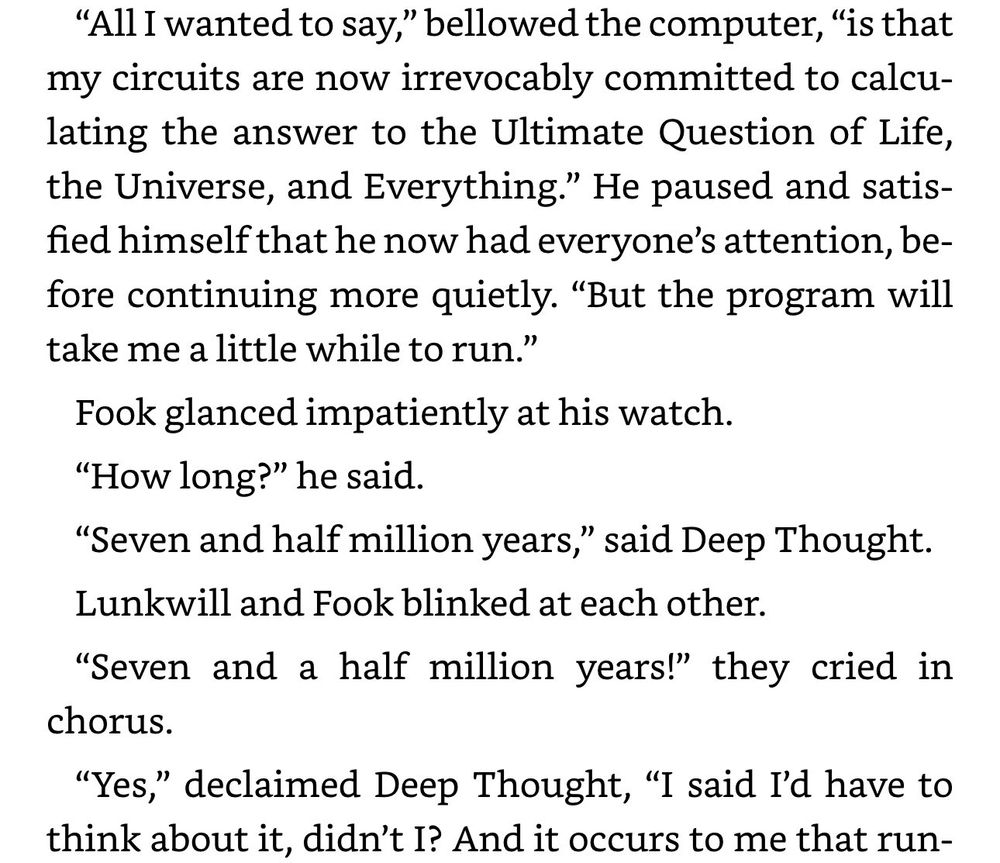

When given longer to think, the AI can generate answers to very hard questions, but the cost is very high, the answers are hard to verify, & you have to make sure you ask the right question first.

Ambulance and taxi drivers

www.bmj.com/content/387/...

Possible benefit of spatial and navigational processing?

Can A.I. Be Blamed for a Teen’s Suicide? www.nytimes.com/2024/10/23/t...

An AI companion suggested he kill his parents. www.washingtonpost.com/technology/2...

You should explore in areas of your expertise to try to figure it out for your use cases.

Academic journals are seeing this happen already.

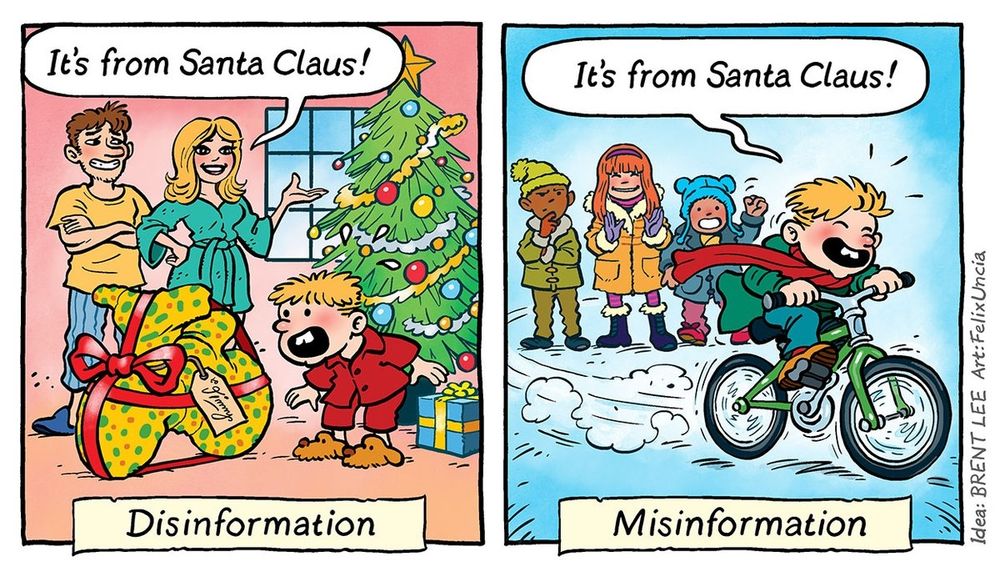

Disinformation?

What's the difference?

A very simple and festive explainer. 🎅

Credit:

🎨 @felixuncia.bsky.social

🧠 @brentlee.bsky.social

Coders deal with deterministic systems. Managers and teachers are very experienced at working with fundamentally unreliable people to get things done, not perfectly, but within acceptable tolerances.

arxiv.org/abs/2411.04118

asks about the impact of #AI on the medical profession

alphaomegaalpha.org/wp-content/u...

It has the potential to substantially restructure and transform the field of education.

There are now multiple controlled experiments showing that using AI to get answers to problems hurts students' learning (even though they think they are learning), but that students who use well-prompted LLMs as tutors perform better on tests.

www.science.org/content/arti...

It’s definitely an escalation and designed to send a message to Kyiv

No, it’s not nuclear armageddon

Ukraine doesn’t have exoatmospheric interception capability, but they do have some terminal phase interceptors that may (heavy emphasis on may) be of some use vs Russian IR/ICBMs.