Sam Kirkham

@samkirkham.bsky.social

academic at @phoneticslab.bsky.social :: speech production, vocal tract imaging, dynamical systems, computational modelling :: https://samkirkham.github.io

Pinned

Sam Kirkham

@samkirkham.bsky.social

· May 2

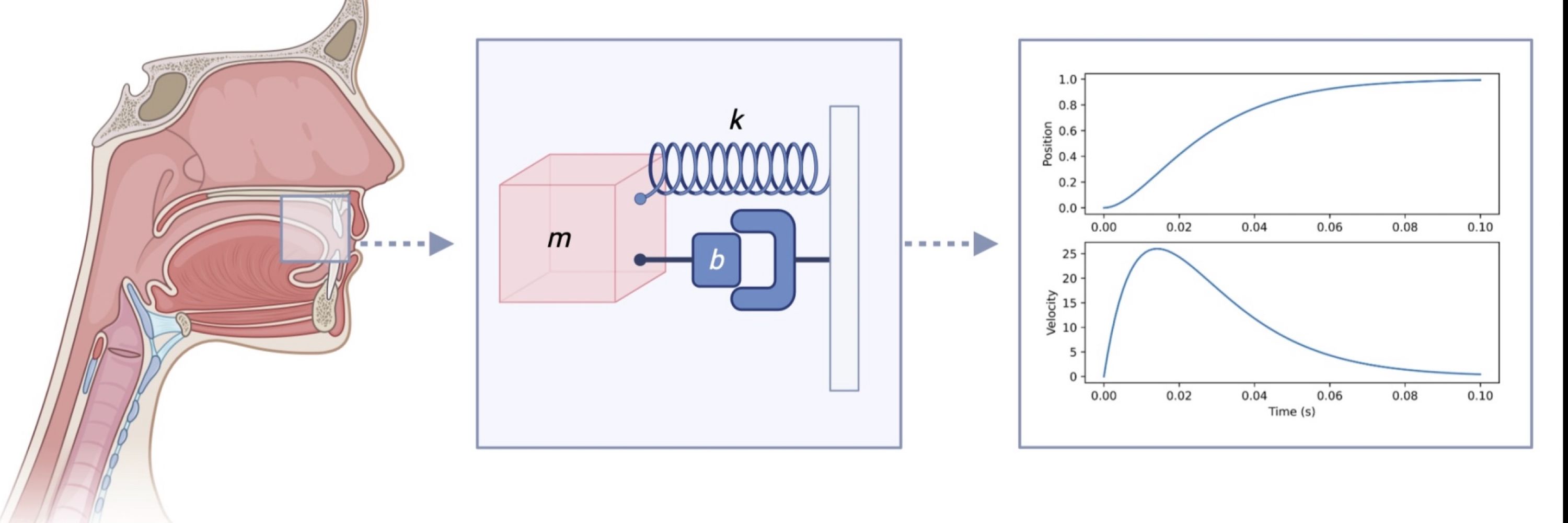

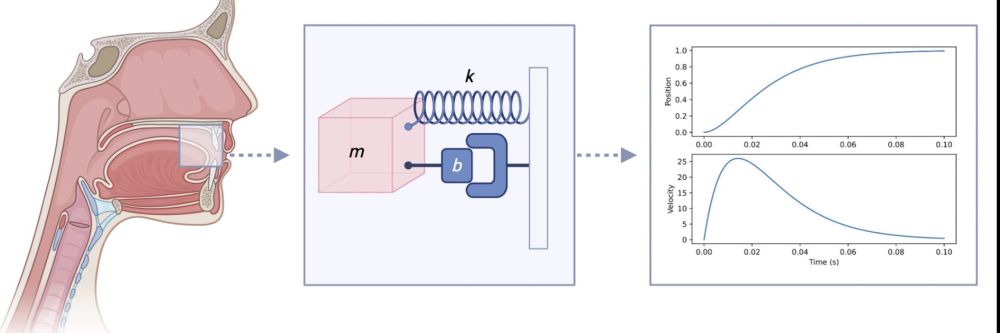

✨Discovering dynamical laws for speech gestures ✨

➡️ I’m delighted to announce my new article out today in Cognitive Science, where I discover simple mathematical laws that govern articulatory control in speech.

🔗 onlinelibrary.wiley.com/doi/full/10....

@cogscisociety.bsky.social

Reposted by Sam Kirkham

Interested in doing a PhD with me and lacns.github.io? Or with any of the incredible fellows in the IMPRS School of Cognition www.maxplanckschools.org/cognition-en - apply before Dec 1st at cognition.maxplanckschools.org/en/application

Language and Computation in Neural Systems

We are an international group of scientists consisting of linguists, cognitive scientists, cognitive neuroscientists, computational neuroscientists, computational modellers, computational scientists, ...

lacns.github.io

September 3, 2025 at 3:15 PM

Reposted by Sam Kirkham

And you can hear all about Nosey, nasalance and 3D printing (and mysterious bonus content??) from @samkirkham.bsky.social on Tuesday afternoon (A02-O3)!

📄 www.isca-archive.org/interspeech_...

August 17, 2025 at 10:24 AM

Reposted by Sam Kirkham

Pat Strycharczuk will be presenting our paper at #Interspeech2025 on Wednesday afternoon (A02-O6), where we applied forensic speaker comparison methods to ultrasound tongue imaging data to think about the individuality of articulatory strategy

📄 www.isca-archive.org/interspeech_...

August 17, 2025 at 10:24 AM

Very happy to have been awarded an APEX grant for a project on “Interpretable acoustic-articulatory relations in speech production” w/ co-investigators Anton Ragni & Aneta Stefanovska. The plan is to do some interesting speech research at the intersection of linguistics, physics & computer science!

We are pleased to announce the recipients of the 2025 APEX Awards, granted to ten researchers to pursue innovative, interdisciplinary research. The awards are supported by the @leverhulme.ac.uk and delivered in partnership with the Royal Academy of Engineering and @royalsociety.org: buff.ly/eF30SQq

August 8, 2025 at 10:21 AM

Reposted by Sam Kirkham

Presenting some stuff from my dissertation at this cool workshop in a couple weeks on dynamical models of speech: samkirkham.github.io/dymos/

Doing a lot of reading/prep work as I am the "symbolicist" going to talk to a bunch of "dynamicists". Should be fun! (seriously)

Dynamical Models of Speech

Researcher in phonetics at Lancaster University

samkirkham.github.io

July 14, 2025 at 9:00 PM

Reposted by Sam Kirkham

Here are my slides: www.scott-nelson.net/Presentation...

I started my talk by saying, “usually when you’re a phonologist who is interested in phonetics you get more concrete but I decided to get more abstract instead.” If that’s of interest to you then you might be interested in checking these out!

July 26, 2025 at 6:44 PM

Reposted by Sam Kirkham

MLX, Apple’s machine learning framework, just merged a CUDA Backend.

Matmul, tensor copy ops, and other core CUDA primitives are now part of Apple’s official build.

There’s a lot of hype + confusion.

Here’s what it is, and…isn’t.

July 15, 2025 at 6:29 PM

Reposted by Sam Kirkham

More details on this soon! Also this weekend is the last chance to submit your TTS system for the next round of evaluation (Q2 2025) by either messaging me at christoph.minixhofer@ed.ac.uk or requesting a model here: huggingface.co/spaces/ttsds...

Christoph Minixhofer, Ondrej Klejch, Peter Bell: TTSDS2: Resources and Benchmark for Evaluating Human-Quality Text to Speech Systems https://arxiv.org/abs/2506.19441 https://arxiv.org/pdf/2506.19441 https://arxiv.org/html/2506.19441

June 27, 2025 at 8:09 AM

Reposted by Sam Kirkham

deadline 23 June!! Please re-bleat(??) widely!

my lab (lacns.github.io) at @mpi-nl.bsky.social and @dondersinst.bsky.social is recruiting for two PhD and two postdoctoral positions funded by an @erc.europa.eu Consolidator - come join us!

PhD: www.mpi.nl/career-educa...

Postdoc: www.mpi.nl/career-educa...

(please share widely)

Language and Computation in Neural Systems

We are an international group of scientists consisting of linguists, cognitive scientists, cognitive neuroscientists, computational neuroscientists, computational modellers, computational scientists, ...

lacns.github.io

June 19, 2025 at 6:55 PM

Reposted by Sam Kirkham

Introducing the tidynorm package! It's got convenience functions for applying your favorite vowel normalization methods to point measures, formant tracks, and DCT coefficients in a tidyverse workflow, as well as a flexible framework for defining your own normalization methods!

Introducing tidynorm – Væl Space

Here’s a brief introduction to the new tidynorm package.

jofrhwld.github.io
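For readers new to vowel normalization, here is a minimal sketch of one widely used method, Lobanov (speaker-intrinsic z-score) normalization, applied to point measures. It is plain Python written for illustration and does not use or reproduce tidynorm's own API; the token format (dicts with hypothetical `speaker`, `F1`, `F2` keys) and the use of the sample standard deviation are assumptions.

```python
from collections import defaultdict
from statistics import mean, stdev
from typing import Dict, List, Sequence

# Hypothetical token format: one dict per vowel measurement.
Token = Dict[str, object]


def lobanov(tokens: List[Token], formants: Sequence[str] = ("F1", "F2")) -> List[Token]:
    """Return copies of tokens with a z-scored '<formant>_z' field per formant.

    Each formant is centred on the speaker's mean and scaled by the speaker's
    sample standard deviation, so every speaker needs at least two tokens.
    """
    # Collect each speaker's values for each formant.
    values = defaultdict(lambda: defaultdict(list))
    for t in tokens:
        for f in formants:
            values[t["speaker"]][f].append(t[f])

    # Per-speaker, per-formant (mean, standard deviation).
    stats = {
        spk: {f: (mean(v), stdev(v)) for f, v in by_formant.items()}
        for spk, by_formant in values.items()
    }

    # Normalize in the original token order without mutating the input.
    out = []
    for t in tokens:
        new = dict(t)
        for f in formants:
            mu, sd = stats[t["speaker"]][f]
            new[f + "_z"] = (t[f] - mu) / sd
        out.append(new)
    return out


if __name__ == "__main__":
    tokens = [
        {"speaker": "A", "F1": 300, "F2": 1000},
        {"speaker": "A", "F1": 500, "F2": 1500},
        {"speaker": "A", "F1": 700, "F2": 2000},
    ]
    for t in lobanov(tokens):
        print(t["F1_z"], t["F2_z"])
```

Z-scoring per speaker removes differences in overall formant range between speakers; extending the same idea to formant tracks or DCT coefficients is what a dedicated package handles for you.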

June 16, 2025 at 3:35 PM

Reposted by Sam Kirkham

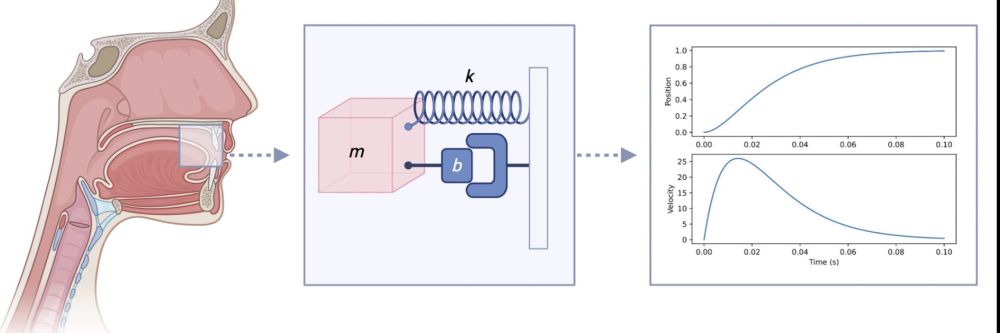

🐠🦠Why can’t bacteria swim like fish?

At microscopic scales, physics changes — viscosity rules! Researchers at our institute study how microbes like E. coli overcome this challenge with clever strategies like run-and-tumble.

Watch our new video:

youtu.be/drwCRRD7CGY?...

Tiny swimmers

YouTube video by MPIPKS

youtu.be

June 17, 2025 at 7:45 AM

Reposted by Sam Kirkham

There's a lot of big news today/this week, but Apple just dropped a nuke of a paper about LLMs & LRMs, especially around "high-complexity tasks where both models experience complete collapse." This is the biggest sign yet that if AI ever lives up to the hype, it won't be via those approaches:

The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity

Recent generations of frontier language models have introduced Large Reasoning Models (LRMs) that generate detailed thinking processes…

machinelearning.apple.com

June 7, 2025 at 10:53 PM

Reposted by Sam Kirkham

🚨 PhD call in Neurosciences @UNIMORE_univ is open!

Join our Cognitive Neuroscience & Psychology lab in Reggio Emilia 🇮🇹 – a vibrant, livable city.

We study cognitive control, perception-action links & social cognition using EEG, behavioral methods, and mathematical & computational modeling.

🧠👇

Call for admission to PhD Programmes - 41° cycle - A.Y. 2025/2026

www.unimore.it

June 4, 2025 at 9:53 AM

Reposted by Sam Kirkham

We are very excited that Samuel Schmück has been awarded a Leverhulme Trust Early Career Fellowship for a great project on speech analytics and under-represented language varieties in speech technology. Many congratulations Sam!

@samschmueck.bsky.social @leverhulme.ac.uk

Sam Schmück awarded Leverhulme Trust Early Career Fellowship - Lancaster University

Congratulations to Sam Schmück for being awarded a highly prestigious Leverhulme Trust Early Career Fellowship!

www.lancaster.ac.uk

June 4, 2025 at 9:29 AM

Reposted by Sam Kirkham

Hot off the press! My tutorial on ultrasound data collection & analysis is now out. Open Access. Part of a special issue in the Journal of the Phonetic Society of Japan, with lots of other cool studies. Articulatory phonetics is going strong in Japan!

www.jstage.jst.go.jp/article/onse...

Quantifying Between-Speaker Variation in Ultrasound Tongue Imaging Data

This article outlines a quantitative, between-group comparison of tongue shapes using ultrasound tongue imaging, one of the vocal tract imaging techni …

www.jstage.jst.go.jp

April 30, 2025 at 9:23 AM

We have two papers accepted at #Interspeech2025! ✨

➡️ Nosey: Open-source hardware for acoustic nasalance

arxiv.org/abs/2505.23339

➡️ Articulatory strategy in vowel production as a basis for speaker discrimination

arxiv.org/abs/2505.20995

+ I'll also be giving a survey talk!

See you in Rotterdam! 🇳🇱

May 30, 2025 at 7:35 AM

Reposted by Sam Kirkham

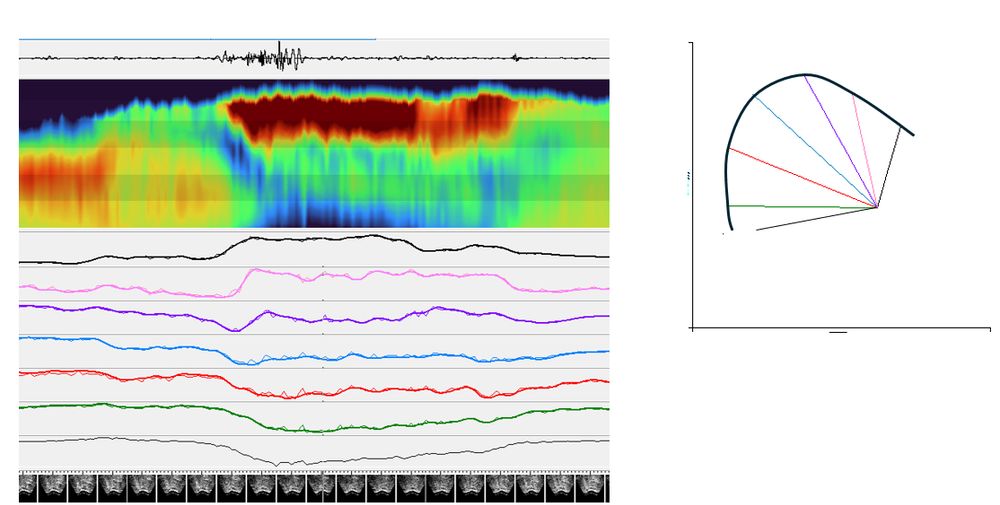

Glossogram with dark red indicating constriction and blue diagonal (tongue compartment contracted) demonstrating peristaltic transfer of a water bolus from the oral cavity to the pharynx. This is easiest to explain as sequential extension of neuromuscular compartments of the tongue.

May 27, 2025 at 11:52 AM

Reposted by Sam Kirkham

my lab (lacns.github.io) at @mpi-nl.bsky.social and @dondersinst.bsky.social is recruiting for two PhD and two postdoctoral positions funded by an @erc.europa.eu Consolidator - come join us!

PhD: www.mpi.nl/career-educa...

Postdoc: www.mpi.nl/career-educa...

(please share widely)

Language and Computation in Neural Systems

We are an international group of scientists consisting of linguists, cognitive scientists, cognitive neuroscientists, computational neuroscientists, computational modellers, computational scientists, ...

lacns.github.io

May 20, 2025 at 1:49 PM

Reposted by Sam Kirkham

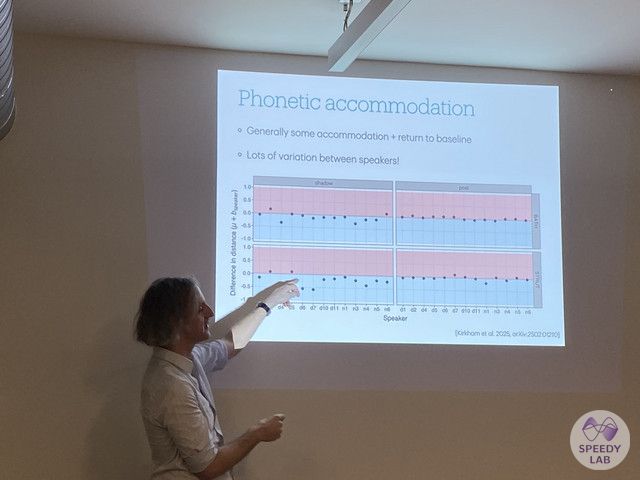

As part of the #ViTraLiP transnational course, our virtual guest was @samkirkham.bsky.social from Lancaster University. During our exchange in #Paris, we were able to attend his talk at the #SRPP colloquium. Thanks to @lppparis.bsky.social for inviting us! Read more here: tinyurl.com/vitralip.

May 22, 2025 at 11:50 AM

Reposted by Sam Kirkham

2. Nosey: Open-source hardware for acoustic nasalance - led by PhD student Maya Dewhurst, with Jack Collins, Roy Alderton & Sam Kirkham

(psst there's 3D printing in there)

May 21, 2025 at 4:54 PM

Reposted by Sam Kirkham

We've got 2 papers accepted to #Interspeech2025 (although you'll only see my collaborators and not me at Rotterdam 🥲):

1. Articulatory strategy in vowel production as a basis for speaker discrimination - with Pat Strycharczuk & Sam Kirkham

@phoneticslab.bsky.social

@lancslinguistics.bsky.social

May 21, 2025 at 4:54 PM

Reposted by Sam Kirkham

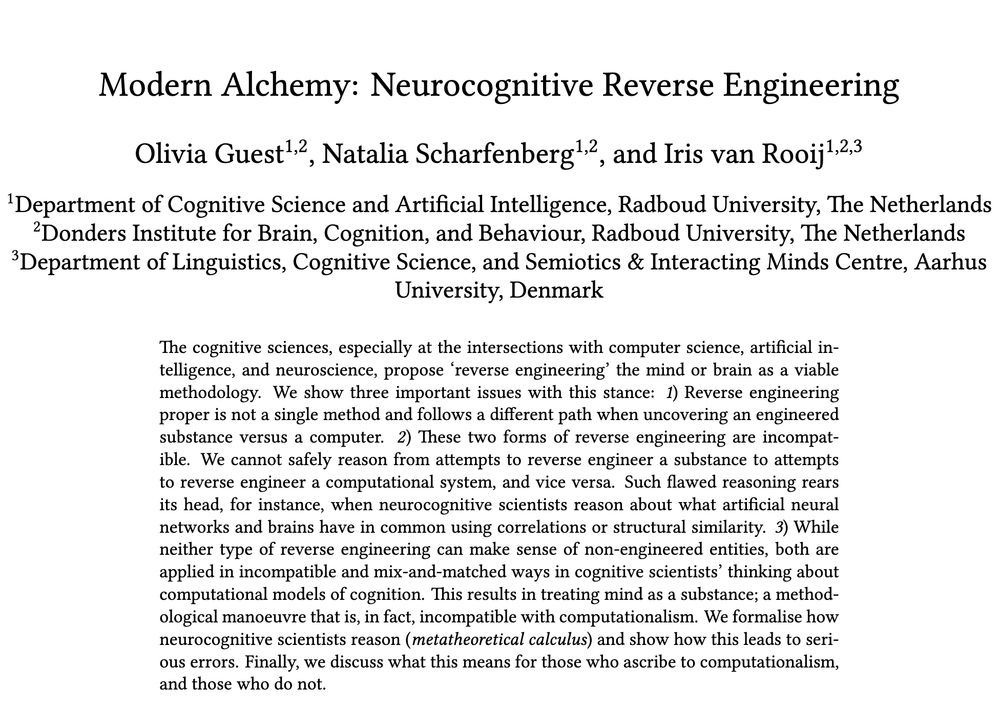

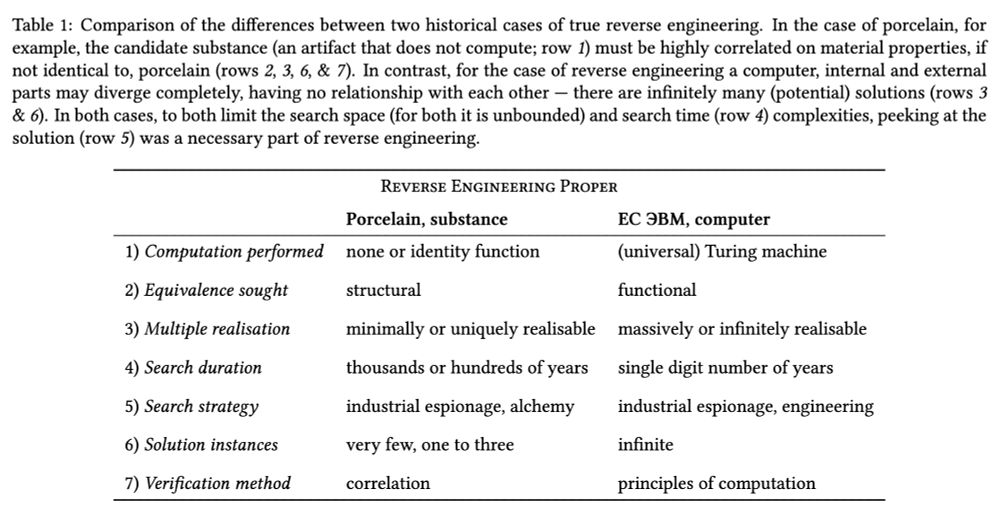

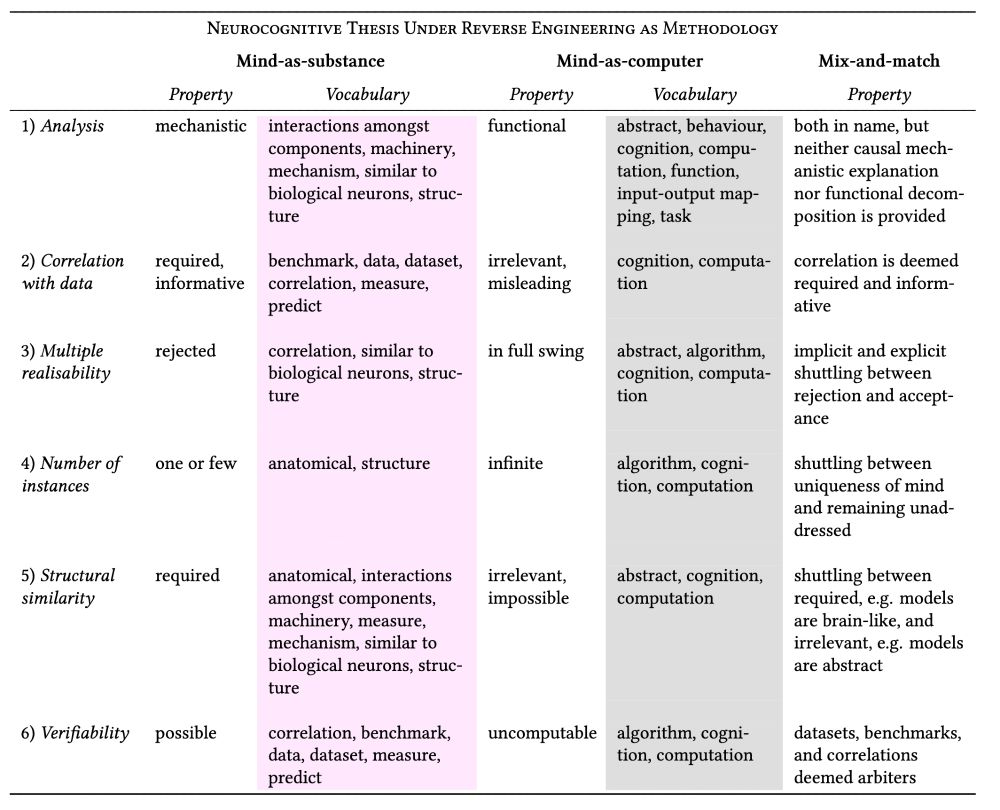

I've felt for a while that reverse engineering, a mainstream method in cognitive science & AI, is incompatible with computationalism‼️ So I wrote "Modern Alchemy: Neurocognitive Reverse Engineering" with the wonderful Natalia S. & @irisvanrooij.bsky.social to elaborate: philsci-archive.pitt.edu/25289/

1/n

May 13, 2025 at 6:29 PM

✨Discovering dynamical laws for speech gestures ✨

➡️ I’m delighted to announce my new article out today in Cognitive Science, where I discover simple mathematical laws that govern articulatory control in speech.

🔗 onlinelibrary.wiley.com/doi/full/10....

@cogscisociety.bsky.social

May 2, 2025 at 11:52 AM

I wrote some software that visualises real-time nasalance for our @phoneticslab.bsky.social public engagement event this week!

We use two microphones to capture oral and nasal signals, separated by a baffle, and map each signal's amplitude to a visual representation of the face!
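A minimal sketch of the per-frame calculation behind a display like this, assuming the conventional definition of nasalance as nasal amplitude divided by the sum of nasal and oral amplitude (as a percentage), RMS as the amplitude measure, and non-overlapping frames of a hypothetical `frame_len` samples. The function names are illustrative and are not taken from the actual software.

```python
import math
from typing import List, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square amplitude of one frame of samples."""
    if len(frame) == 0:
        return 0.0
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def nasalance(oral: Sequence[float], nasal: Sequence[float]) -> float:
    """Percentage nasalance for one frame: 100 * N / (N + O)."""
    o, n = rms(oral), rms(nasal)
    if o + n == 0.0:
        return 0.0  # assumption: report silence as 0% rather than dividing by zero
    return 100.0 * n / (o + n)


def nasalance_track(oral: Sequence[float], nasal: Sequence[float],
                    frame_len: int = 512) -> List[float]:
    """Nasalance for consecutive non-overlapping frames of two time-aligned channels."""
    n_frames = min(len(oral), len(nasal)) // frame_len
    track = []
    for i in range(n_frames):
        start, end = i * frame_len, (i + 1) * frame_len
        track.append(nasalance(oral[start:end], nasal[start:end]))
    return track


if __name__ == "__main__":
    # Synthetic example: the oral channel is three times louder than the nasal one.
    oral = [0.3, -0.3] * 256
    nasal = [0.1, -0.1] * 256
    print(nasalance_track(oral, nasal))  # one frame, roughly 25%
```

In a real-time setting the same per-frame values (the two RMS amplitudes, or the nasalance percentage) would be recomputed for each incoming audio buffer and mapped onto the face display.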

April 10, 2025 at 1:48 PM
