🗞️ short blog post: fabian-sp.github.io/posts/2024/1...

📇 bib files: github.com/fabian-sp/ml-bib

Presents a survey on LLM architectures that systematically categorizes auto-encoding, auto-regressive and encoder-decoder models.

📝 arxiv.org/abs/2412.03220

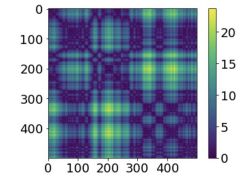

We build **scalar** time series embeddings of temporal networks!

The key enabling insight: the relevant feature of each network snapshot... is just its distance to every other snapshot!

Work w/ FJ Marín, N. Masuda, L. Arola-Fernández

arxiv.org/abs/2412.02715

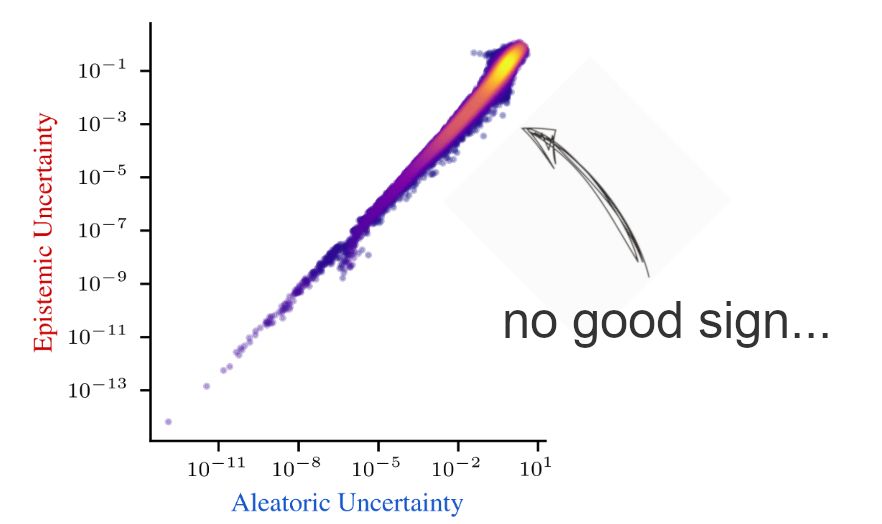
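To make the "distance to every other snapshot" idea from the temporal-network post concrete, here is a minimal sketch that builds a snapshot-by-snapshot distance matrix. The Frobenius distance between adjacency matrices and the helper name `snapshot_distance_matrix` are assumptions chosen for illustration; the post does not say which distance the paper uses, and the step that turns these distances into a scalar time series is not shown here.

```python
import numpy as np

def snapshot_distance_matrix(snapshots):
    """Pairwise Frobenius distances between adjacency-matrix snapshots.

    Hypothetical helper: the distance choice is an assumption,
    not necessarily the one used in the paper.
    """
    A = np.asarray(snapshots, dtype=float)       # shape (T, n, n)
    flat = A.reshape(A.shape[0], -1)             # shape (T, n*n)
    diff = flat[:, None, :] - flat[None, :, :]   # shape (T, T, n*n)
    return np.linalg.norm(diff, axis=-1)         # shape (T, T)

# Toy temporal network: 3 snapshots on 3 nodes (undirected, unweighted).
snapshots = [
    [[0, 1, 0], [1, 0, 0], [0, 0, 0]],  # edge 0-1
    [[0, 1, 0], [1, 0, 1], [0, 1, 0]],  # edges 0-1 and 1-2
    [[0, 0, 0], [0, 0, 1], [0, 1, 0]],  # edge 1-2
]

D = snapshot_distance_matrix(snapshots)
print(D)  # row t holds the distances from snapshot t to every snapshot
```

Each row of `D` is the feature vector of one snapshot in this sketch: its distance to every other snapshot.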

📖 arxiv.org/abs/2402.19460 🧵1/10

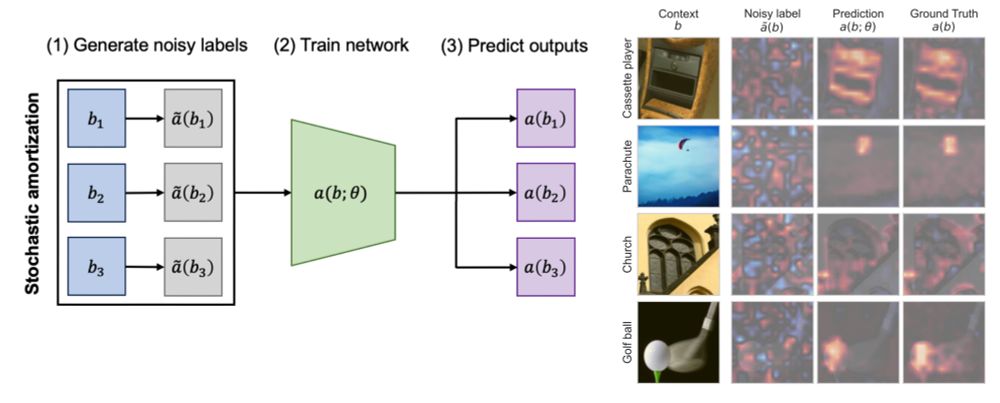

Surprisingly, we can "learn to attribute" cheaply from noisy explanations! arxiv.org/abs/2401.15866

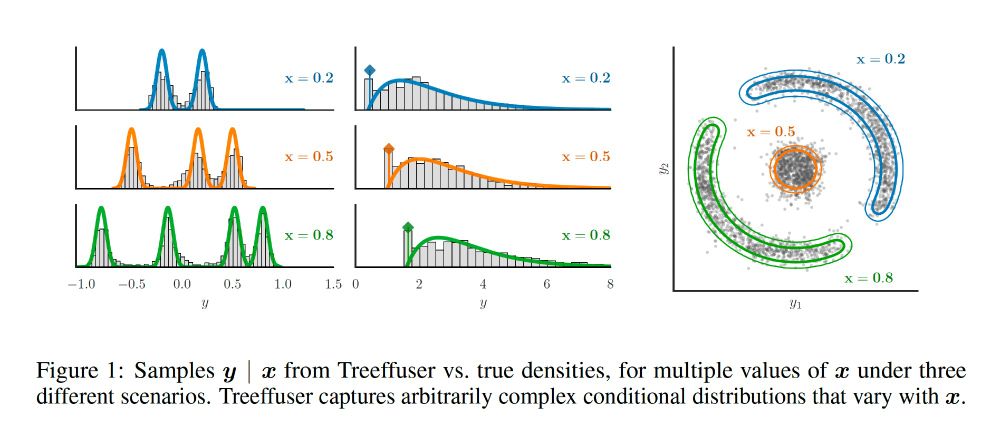

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)

- Includes TabM and provides a scikit-learn interface

- Some baseline NN parameter names are renamed (double underscores removed)

- Other small changes; see the readme.

github.com/dholzmueller...

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

hastie.su.domains/CASI_files/P...
