@renebekkers.bsky.social

Here's a reasonable request: please provide access to the data and code producing the results reported in your manuscript, so that it will not go down the gutter as unverifiable magic.

@ianhussey.mmmdata.io finds data are rarely made available upon request

conferences.lnu.se/index.php/me...

LnuOpen

| Meta-Psychology

conferences.lnu.se

November 9, 2025 at 10:08 AM

Reposted

📣 Save the date for the 13th PCI webinar on December 1st, 2025, at 4 PM CET!! Simine Vazire (University of Melbourne, Australia) will present "Recognizing and responding to a replication crisis: Lessons from Psychology". For more details and registration, visit: buff.ly/wZNoD2v

PCI Webinar Series - Peer Community In

The PCI webinar series is a series of seminars on research practices, publication practices, evaluation, scientific integrity, meta-research, organised by Peer Community In

peercommunityin.org

November 5, 2025 at 10:49 AM

Reposted

The package formerly known as papercheck has changed its name to metacheck! We're checking more than just papers, with functions to assess OSF projects, github repos, and AsPredicted pre-registrations, with more being developed all the time.

scienceverse.github.io/metacheck/

Check Research Outputs for Best Practices

A modular, extendable system for automatically checking research outputs for best practices using text search, R code, and/or (optional) LLM queries.

scienceverse.github.io

November 3, 2025 at 4:20 PM

Reposted

Are you interested in thinking about which studies are worth replicating? Then you have 10 articles to dig into in Meta-Psychology, representing a very wide range of viewpoints on this topic, out now: open.lnu.se/index.php/me...

LnuOpen

| Meta-Psychology

Original articles

open.lnu.se

October 30, 2025 at 3:43 PM

Reposted

We built the openESM database:

▶️60 openly available experience sampling datasets (16K+ participants, 740K+ obs.) in one place

▶️Harmonized (meta-)data, fully open-source software

▶️Filter & search all data, simply download via R/Python

Find out more:

🌐 openesmdata.org

📝 doi.org/10.31234/osf...

October 22, 2025 at 7:34 PM

Reposted

The Festival of Data Science and AI is next week #DataAIFest

I'll be speaking in a panel on Using AI Ethically in Research on Monday

www.gla.ac.uk/research/az/...

And leading a workshop on Papercheck on Tuesday

www.gla.ac.uk/research/az/...

October 21, 2025 at 4:09 PM

Reposted

Please help us, #MetaScience community!

It's time to decide on a forever name for papercheck (scienceverse.github.io/papercheck/). We don't want it to be confused with papercheck.ai, and we plan to check other research artifacts like repo contents, data, code, and prereg. Any suggestions?

Check Scientific Papers for Best Practices

A modular, extendable system for automatically checking scientific papers for best practices using text search, R code, and/or (optional) LLM queries.

scienceverse.github.io

October 14, 2025 at 1:06 PM

Reposted

New DP @i4replication.bsky.social: Meta-analysis on green nudges correcting for publication bias. "Behavioral interventions on households and individuals are unlikely to deliver material climate benefits." www.econstor.eu/bitstream/10...

October 9, 2025 at 9:36 AM

Reposted

@klararaiber.bsky.social and I wrote a paper and it is published now! In this study in @actasociologica.bsky.social, we investigate willingness to perform small caregiving tasks for neighbors. We show that anticipated reciprocity increases willingness but previous caregiving experience does not (1/2)

In a new article, Ramaekers and Raiber advance our knowledge of neighbour caregivers by examining the impact of anticipated reciprocity and previous (negative) caregiving experience. They indicate that neighbours can form a safety net for those who cannot rely on family.

doi.org/10.1177/0001...

Sage Journals: Discover world-class research

Subscription and open access journals from Sage, the world's leading independent academic publisher.

journals.sagepub.com

October 9, 2025 at 3:24 PM

Reposted

My article "Data is not available upon request" was published in Meta-Psychology. Very happy to see this out!

open.lnu.se/index.php/me...

LnuOpen

| Meta-Psychology

open.lnu.se

October 4, 2025 at 12:54 PM

Reposted

Can large language models stand in for human participants?

Many social scientists seem to think so, and are already using "silicon samples" in research.

One problem: depending on the analytic decisions made, you can basically get these samples to show any effect you want.

THREAD 🧵

The threat of analytic flexibility in using large language models to simulate human data: A call to attention

Social scientists are now using large language models to create "silicon samples" - synthetic datasets intended to stand in for human respondents, aimed at revolutionising human subjects research. How...

arxiv.org

September 18, 2025 at 7:56 AM

How A Research Transparency Check Facilitates Responsible Assessment of Research Quality

A Modular Approach to Research Quality

A dashboard of transparency indicators signaling trustworthiness. Our Research Transparency Check (Bekkers et al., 2025) rests on two pillars. The first pillar is the development of Papercheck (DeBruine & Lakens, 2025), a collection of software applications that assess the transparency and methodological quality of research that we blogged about earlier (Lakens, 2025). Our approach is modular: for each defined aspect of transparency and methodological quality we develop a dedicated module and integrate it in the…

renebekkers.wordpress.com

September 13, 2025 at 7:57 AM

Reposted

The pretty draft is now online.

Link to paper (free): www.journals.uchicago.edu/doi/epdf/10....

Our replication package starts from the raw data and we put real work into making it readable & setting it up so people could poke at it, so please do explore it: dataverse.harvard.edu/dataset.xhtm...

September 10, 2025 at 5:25 PM

Reposted

At the FORRT Replication Hub, our mission is to support researchers who want to replicate previous findings. We have now published a big new component with which we want to fulfill this mission: An open access handbook for reproduction and replication studies: forrt.org/replication_...

September 3, 2025 at 6:54 AM

Reposted

We're bringing together members of the VU community, including research support staff and faculty, who will share their experiences and lessons learned from implementing a campus-wide Open Science program.

𝐑𝐞𝐠𝐢𝐬𝐭𝐞𝐫: cos-io.zoom.us/webin...

𝐃𝐚𝐭𝐞: Sep 10, 2025

𝐓𝐢𝐦𝐞: 9:00 AM ET

August 26, 2025 at 6:31 PM

Reposted

The full program for the PMGS Meta Research Symposium 2025 is online: docs.google.com/document/d/1... If you are interested in causal inference, systematic review, hypothesis testing, and preregistration, join us October 17th in Eindhoven! Attendance is free!

Meta Research Symposium 2025 PMGS

PMGS Meta Research Symposium 2025 16-17 October 2025, TU/e Eindhoven Conference website: https://paulmeehlschool.github.io/workshops/ Program Day 1 - Pre-Symposium Mini-Workshop Time Activity…

docs.google.com

August 20, 2025 at 2:34 PM

Reposted

Coding errors in data processing are more likely to be ignored if the erroneous result is in line with what we want to see. The theoretical prediction made in this paper is very plausible - testing it empirically is perhaps a bit more challenging. But still, interesting. arxiv.org/pdf/2508.20069

August 30, 2025 at 9:54 AM

A 25-year-old successful replication of a well-known finding in social psychology about the overestimation of self-interest in attitudes.

A 25 year old successful replication

While reviewing a paper, I suddenly remembered the first replication of an experimental study I ever conducted. It's 25 years old. In March 2000, I taught a workshop for a sociology class of 25 undergraduate students at Utrecht University. I asked the students in my group to fill out a simplified version of a study on the norm of self-interest; Study 4 in the Miller & Ratner (1998) paper in JPSP on the norm of self-interest.

renebekkers.wordpress.com

August 15, 2025 at 11:39 AM

Reposted

A good systematic review/meta analysis will evaluate the quality of each study and not just review the conclusions/data.

If the methodology doesn't include evaluation of quality, it's not a good source. Reviewing methodology is part of the vetting process.

July 24, 2025 at 11:14 AM

Reposted

The Center For Scientific Integrity, our parent nonprofit, is hiring! Two new positions:

-- Editor, Medical Evidence Project

-- Staff reporter, Retraction Watch

and we're still recruiting for:

-- Assistant researcher, Retraction Watch Database

Job opportunities at Retraction Watch

Here are our current open positions: Editor, Medical Evidence Project Staff reporter, Retraction Watch Assistant researcher, Retraction Watch Database Learn more about the Center for Scientific Int…

retractionwatch.com

July 29, 2025 at 4:11 PM

As the wave of LLM generated research swells, how can you tell whether it is legit? Introducing the high five: a checklist for the evaluation of knowledge claims by LLMs, other generative AI, and science in general

The High Five: A Checklist for the Evaluation of Knowledge Claims

As the wave of LLM generated research swells, how can you tell whether it is legit? A fast growing proportion of science contains results generated by Large Language Models (LLMs) and other forms of generative AI. Researchers rely on virtual assistants to do their literature reviews, summarize previous research, and write texts for journal articles (Kwon, 2025). As a result, AI generated research swells to immense proportions.

renebekkers.wordpress.com

July 2, 2025 at 6:00 AM

As the wave of LLM generated research swells, how can you tell whether it is legit? Introducing the high five: a checklist for the evaluation of knowledge claims by LLMs, other generative AI, and science in general

The High Five: A Checklist for the Evaluation of Knowledge Claims

As the wave of LLM generated research swells, how can you tell whether it is legit? A fast growing proportion of science contains results generated by Large Language Models (LLMs) and other forms of generative AI. Researchers rely on virtual assistants to do their literature reviews, summarize previous research, and write texts for journal articles (Kwon, 2025). As a result, AI generated research swells to immense proportions.

renebekkers.wordpress.com

July 1, 2025 at 1:49 PM

Very important evidence from Sweden showing that #business and #economics students become less #prosocial during their studies, while #law students do not onlinelibrary.wiley.com/doi/full/10....

Do business and economics studies erode prosocial values?

Does exposure to business and economics education make students less prosocial and more selfish? Employing a difference-in-difference strategy with panel-data from three subsequent cohorts of student...

onlinelibrary.wiley.com

February 23, 2025 at 3:12 PM

Reposted

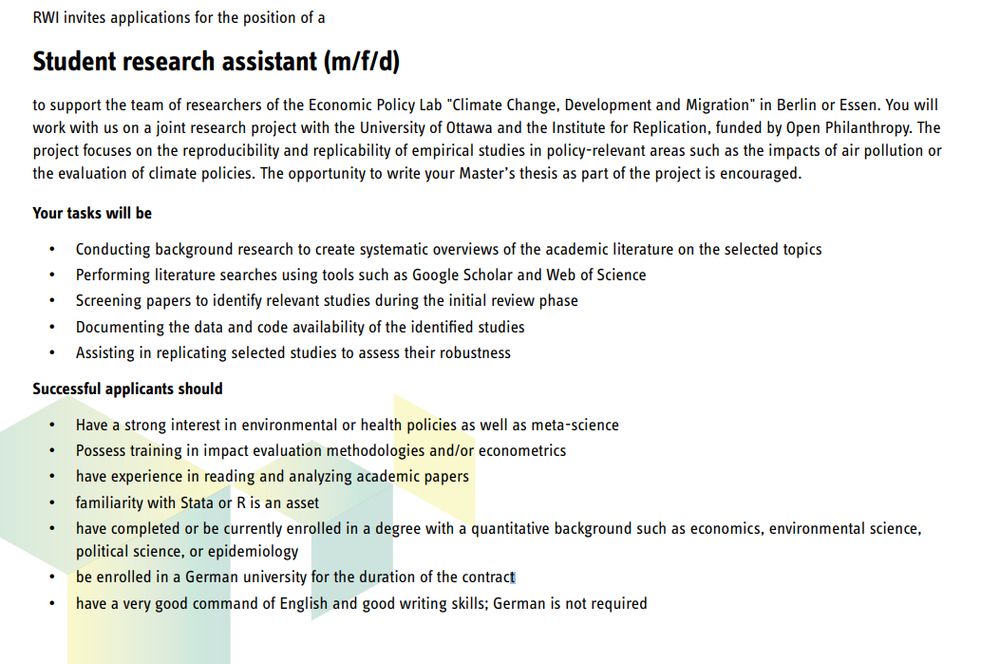

We are looking for RAs to work with us on a new meta-science & replication project, joint with @i4replication.bsky.social, now with focus on environmental (economics) topics such as air pollution & carbon pricing. RAs can work remotely but must be located in Germany www.rwi-essen.de/fileadmin/us...

January 30, 2025 at 10:54 AM
