Active inference - LLM - Reinforcement learning - Bayesian modelling | Creating a neural network library for predictive coding.

Curious about new interplays between neural networks and Bayesian modelling? Check out our latest preprint on dynamic predictive coding networks 👇

Paper: arxiv.org/abs/2410.09206

Code: github.com/ilabcode/pyhgf

gershmanlab.com/textbook.html

It's a textbook called Computational Foundations of Cognitive Neuroscience, which I wrote for my class.

My hope is that this will be a living document, continuously improved as I get feedback.

If you want to join a genuinely curious, welcoming and inclusive community of Coxis, apply here:

tinyurl.com/coxijobs

Please RT - deadline is Jan 4‼️

www.nature.com/articles/s4...

Algorithmic Primitives and Compositional Geometry of Reasoning in Language Models

www.arxiv.org/pdf/2510.15987

🧵1/n

📄 arxiv.org/abs/2510.14086 1/

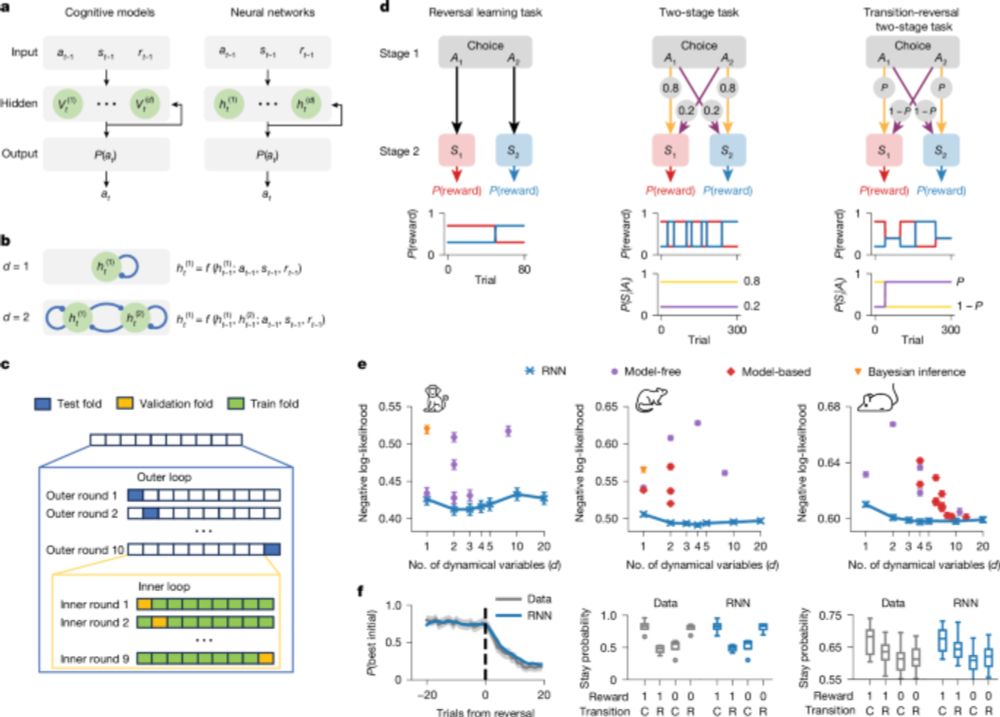

led by Robin Vloeberghs with @anne-urai.bsky.social Scott Linderman

Paper: desenderlab.com/wp-content/u... Thread ↓↓↓

#PsychSciSky #Neuroscience #Neuroskyence

@akjagadish.bsky.social @marvinmathony.bsky.social @ericschulz.bsky.social & Tobi Ludwig

arxiv.org/abs/2502.00879

GPs are super interesting, but it’s not easy to wrap your head around them at first 🤔

This is a medium-level (more intuition than math) introduction to GPs for time series.

getrecast.com/gaussian-pro...

I will add anyone who uses computational models to address questions in psychiatry research. :)

go.bsky.app/5PTy9Zj

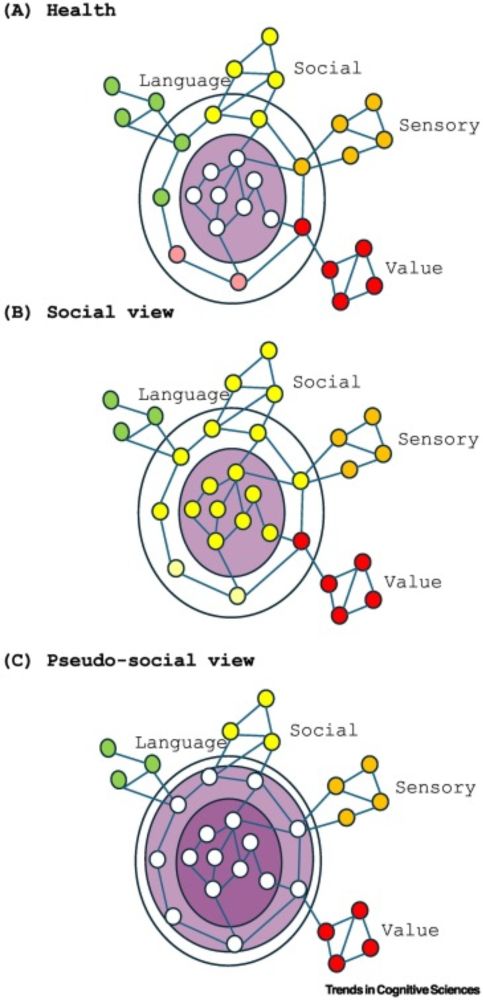

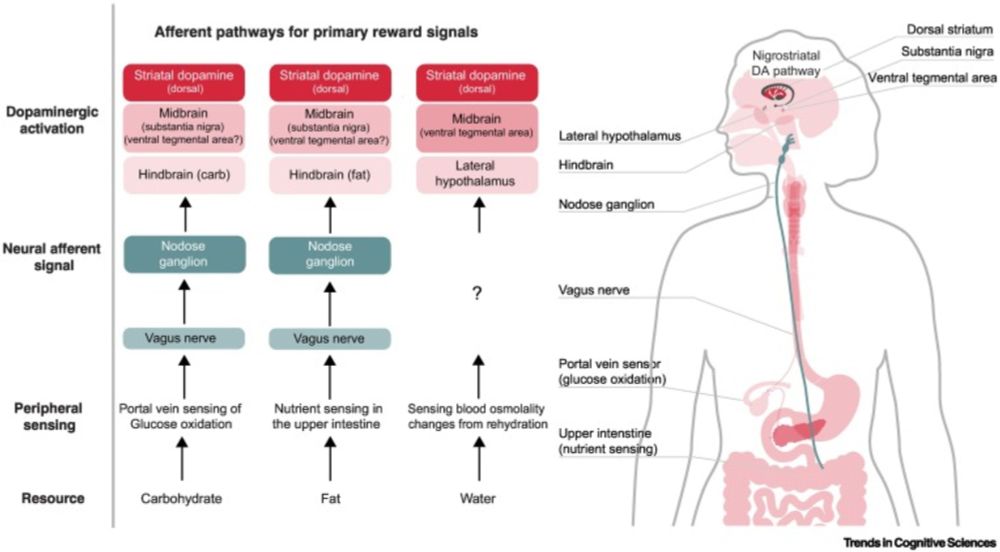

authors.elsevier.com/a/1lV174sIRv...

Here’s a quick 🧵(1/n)

osf.io/preprints/ps...

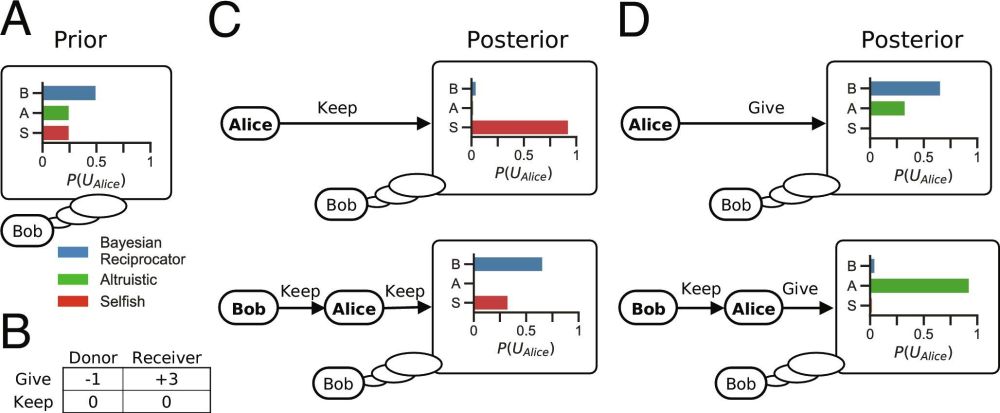

Humans are the ultimate cooperators. We coordinate on a scale and scope no other species (nor AI) can match. What makes this possible? 🧵

www.pnas.org/doi/10.1073/...

pypi.org/project/memo...

and

osf.io/preprints/ps...

If you are human, you fall asleep at least once a day! What happens in your mind then?

Scientists actually know very little about this private moment.

We have put together a 20-minute survey to collect as much data as possible!

Here is the link:

redcap.link/DriftingMinds

ml-site.cdn-apple.com/papers/the-i...

ml-site.cdn-apple.com/papers/the-i...