Blogging about data and steering AI (https://dataleverage.substack.com/)

dataleverage.substack.com/p/coding-age...

We welcome allies in the @publicai.network!

publicai.network/whitepaper/

We will have stellar talks to kick off the day, followed by contributed talks and posters by authors before the lunch break.

Come hear thoughtful arguments about “digital heroin,” the nature of innovation, protecting privacy, machine unlearning, & how we can do ML research better as a community.

See you: ballroom 20AB from 10-11a & 3:30-4:30p!

#NeurIPS2025 #NeurIPSSD

dataleverage.substack.com/p/almost-eve...

🗣️ @b-cavello.bsky.social in our #4DCongress

✨ Workshop on Algorithmic Collective Action (ACA) ✨

acaworkshop.github.io

at NeurIPS 2025!

"This model is good for asking health questions, because 10,000 doctors attested to supporting training and/or eval". Etc.

On Thurs Aditya Karan will present on collective action dl.acm.org/doi/10.1145/... at 10:57 (New Stage A)

dataleverage.substack.com/p/on-ai-driv...

dataleverage.substack.com/p/google-and...

This will be post 1/3 in a series about viewing many AI products as all competing around the same task: ranking bundles or grains of records made by people.

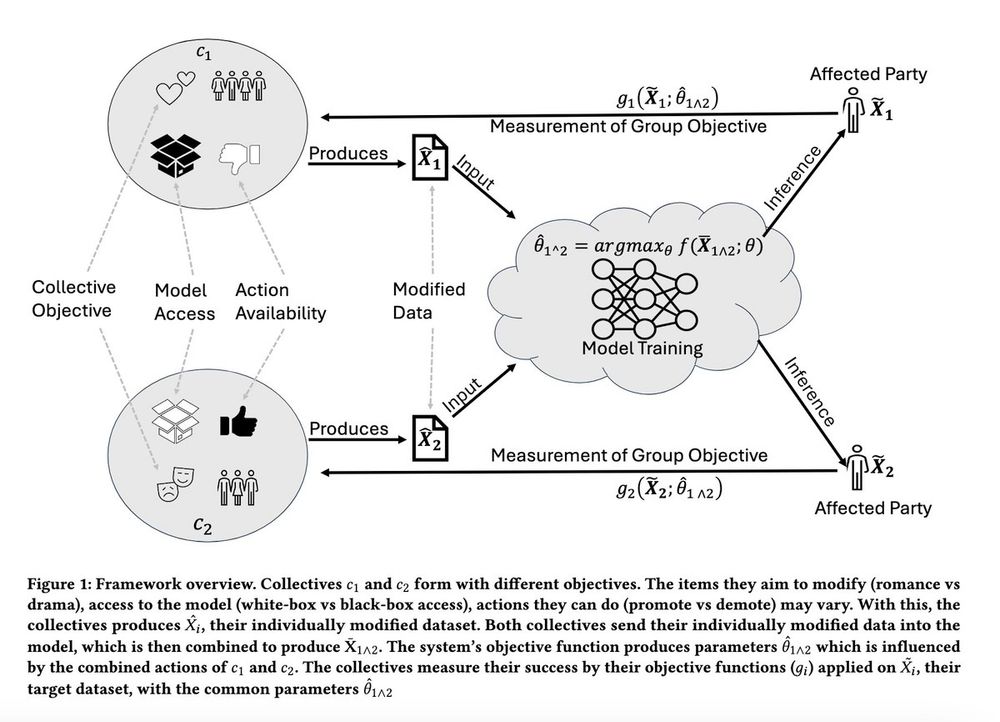

There's growing interest in algorithmic collective action, when a "collective" acts through data to impact a recommender system, classifier, or other model.

But... what happens if two collectives act at the same time?

"A consortium of Public AI labs can substantially improve data pricing, which may also help to concretize debates about the ethics and legality of training practices."

dataleverage.substack.com/p/public-ai-...