Nick Vincent

@nickmvincent.bsky.social

Studying people and computers (https://www.nickmvincent.com/)

Blogging about data and steering AI (https://dataleverage.substack.com/)

Pinned

Nick Vincent

@nickmvincent.bsky.social

· Nov 19

Hi Bluesky (+ many friendly familiar faces). I'm a researcher in HCI + ML, assistant prof at Simon Fraser University up in BC, and working on "healthy data flow". Doing a quick thread recapping some recent writing (blogs, pre-prints, etc.) that capture the things I work on and talk about!

Reposted by Nick Vincent

"There are many challenges to transforming the AI ecosystem and strong interests resisting change. But we know change is possible, and we believe we have more allies in this effort than it may seem. There is a rebel in every Death Star."

🗣️ @b-cavello.bsky.social in our #4DCongress

October 28, 2025 at 4:36 PM

Heading to AIES, excited to catch up with folks there!

October 19, 2025 at 7:33 PM

New blog (a recap post): "How collective bargaining for information, public AI, and HCI research all fit together." Connecting these ideas + a short summary of various recent posts (of which there are many, perhaps too many!). On Substack, but also posted to Leaflet.

October 14, 2025 at 10:45 PM

Reposted by Nick Vincent

V interesting twist on MCP! “user data is often fragmented across services and locked into specific providers, reinforcing user lock-in” - enter Human Context Protocol (HCP): “user-owned repositories of preferences designed for active, reflective control and consent-based sharing.” 1/

October 10, 2025 at 2:41 PM

Anyone compiling discussions/thoughts on emerging licensing schemes and preference signals? E.g., rslstandard.org and github.com/creativecomm... ? Externalizing some notes here: datalicenses.org, but want to find where these discussions are happening!

RSL: Really Simple Licensing

The open content licensing standard for the AI-first Internet

rslstandard.org

September 18, 2025 at 6:43 PM

Excited to be giving a talk on data leverage to the Singapore AI Safety Hub. Trying to capture updated thoughts from recent years, and have long wanted to better connect leverage/collective bargaining to the safety context.

August 14, 2025 at 8:05 AM

About a week away from the deadline to submit to the

✨ Workshop on Algorithmic Collective Action (ACA) ✨

acaworkshop.github.io

at NeurIPS 2025!

About the workshop – ACA@NeurIPS

acaworkshop.github.io

August 14, 2025 at 7:56 AM

🧵In several recent posts, I speculated that eventually, dataset details may become an important quality signal for consumers choosing AI products.

"This model is good for asking health questions, because 10,000 doctors attested to supporting training and/or eval". Etc.

August 8, 2025 at 10:31 PM

Around ICML with loose evening plans and an interest in "public AI", Canadian sovereign AI, or anything related? Swing by the Internet Archive Canada between 5p and 7p lu.ma/7rjoaxts

Oh Canada! An AI Happy Hour @ ICML 2025 · Luma

Whether you're Canadian or one of our friends from around the world, please join us for some drinks and conversation to chat about life, papers, AI, and…

lu.ma

July 16, 2025 at 11:30 PM

[FAccT-related link round-up]: It was great to present on measuring Attentional Agency with Zachary Wojtowicz at FAccT. Here's our paper on ACM DL: dl.acm.org/doi/10.1145/...

On Thurs Aditya Karan will present on collective action dl.acm.org/doi/10.1145/... at 10:57 (New Stage A)

Algorithmic Collective Action with Two Collectives | Proceedings of the 2025 ACM Conference on Fairness, Accountability, and Transparency

dl.acm.org

June 24, 2025 at 12:33 PM

“Attentional agency”: talk in New Stage B at FAccT, in the session right now!

June 24, 2025 at 7:48 AM

Off to FAccT; excited to see faces old and new!

June 21, 2025 at 9:50 PM

Another blog post: a link roundup on AI's impact on jobs and power concentration, another proposal for Collective Bargaining for Information, and some additional thoughts on the topic:

dataleverage.substack.com/p/on-ai-driv...

On AI-driven Job Apocalypses and Collective Bargaining for Information

Reacting to a fresh wave of discussion about AI's impact on the economy and power concentration, and reiterating the potential role of collective bargaining.

dataleverage.substack.com

June 5, 2025 at 5:25 PM

New data leverage post: "Google and TikTok rank bundles of information; ChatGPT ranks grains."

dataleverage.substack.com/p/google-and...

This will be post 1/3 in a series about viewing many AI products as all competing around the same task: ranking bundles or grains of records made by people.

Google and TikTok rank bundles of information; ChatGPT ranks grains.

Google and others solve our attentional problem by ranking discrete bundles of information, whereas ChatGPT ranks more granular chunks. This lens can help us reason about AI policy.

dataleverage.substack.com

May 27, 2025 at 3:45 PM

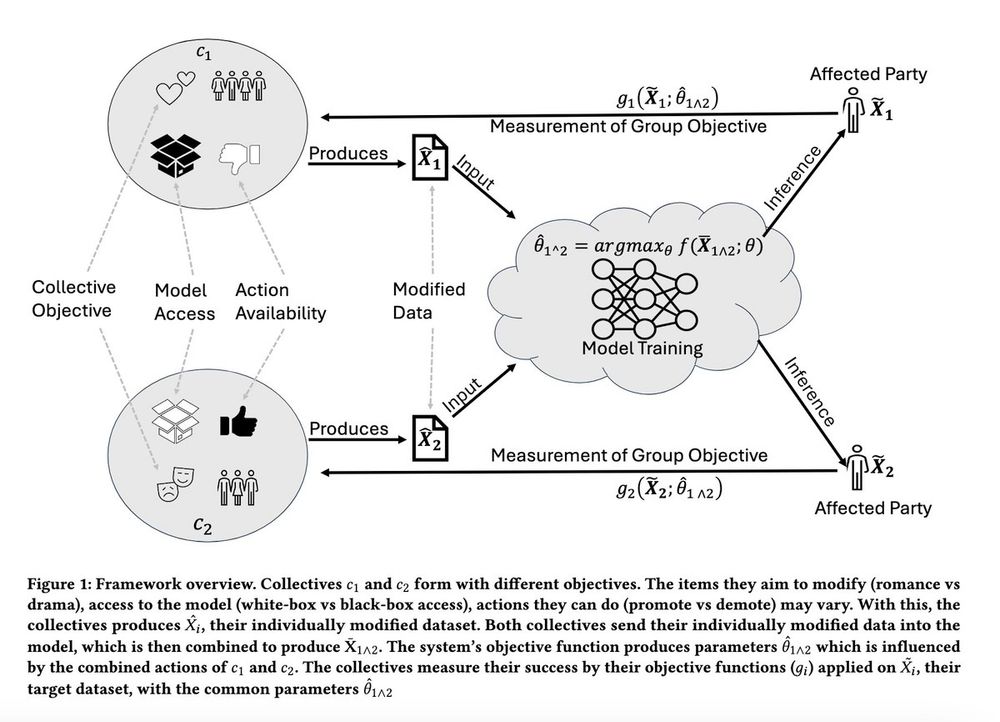

Sharing a new paper (led by Aditya Karan):

There's growing interest in algorithmic collective action, when a "collective" acts through data to impact a recommender system, classifier, or other model.

But... what happens if two collectives act at the same time?

May 2, 2025 at 6:44 PM

New early draft post: "Public AI, Data Appraisal, and Data Debates"

"A consortium of Public AI labs can substantially improve data pricing, which may also help to concretize debates about the ethics and legality of training practices."

dataleverage.substack.com/p/public-ai-...

Public AI, Data Appraisal, and Data Debates

A consortium of Public AI labs can substantially improve data pricing, which may also help to concretize debates about the ethics and legality of training practices.

dataleverage.substack.com

April 3, 2025 at 5:52 PM

Reposted by Nick Vincent

“Algo decision making systems are ‘leviathans’, harmful not for their arbitrariness or opacity, but for the systematicity of decisions”

- @christinalu.bsky.social on need for plural #AI model ontologies (sounds technical, but has big consequences for human #commons)

www.combinationsmag.com/model-plural...

Model Plurality

Current research in “plural alignment” concentrates on making AI models amenable to diverse human values. But plurality is not simply a safeguard against bias or an engine of efficiency: it’s a key in...

www.combinationsmag.com

April 2, 2025 at 7:57 AM

New Data Leverage newsletter post. It's about... data leverage (specifically, evaluation-focused bargaining) and products du jour (deep research, agents).

dataleverage.substack.com/p/evaluation...

Evaluation Data Leverage: Advances like "Deep Research" Highlight a Looming Opportunity for Bargaining Power

Research agents and increasingly general reasoning models open the door for immense "evaluation data leverage".

dataleverage.substack.com

March 3, 2025 at 6:26 PM

I have some new co-authored writing to share, along with a round-up of important articles for the "content ecosystems and AI" space.

I'm doing an experiment with microblogging directly to a GitHub repo that I can share across platforms...

February 14, 2025 at 6:25 PM

Reposted by Nick Vincent

Global Dialogues has launched at the Paris #AIActionSummit.

Watch @audreyt.org give the announcement via @projectsyndicate.bsky.social

youtu.be/XkwqYQL6V4A?... (starts at 02:47:30)

PS Events: AI Action Summit

YouTube video by Project Syndicate

youtu.be

February 10, 2025 at 7:57 PM

On Mon, wrote a post on the live-by-the-sword, die-by-the-sword nature of the current data paradigm. On Wed, there was quite a development on this front -- OpenAI came out with a statement that they have evidence that DeepSeek "used" OpenAI models in some fashion (this was faster than I expected!)

January 31, 2025 at 7:26 PM

Longer version: dataleverage.substack.com/p/live-by-th...

January 28, 2025 at 12:37 AM

Haven’t seen any AI operators openly commenting yet on the “live by the sword, die by the sword” nature of ask-for-forgiveness-style data use.

But “open” model progress is likely to increase the visibility of the tension, for better or worse.

January 27, 2025 at 5:53 PM

Problem w/ "synthetic data" term is that (I posit) there's *always* some human action you can trace a "synthetic document" to. Problem w/ "AI agents" term is that there are *always* some humans who did the actuation and kick-off who hold moral agency. Obfuscating either is bad.

January 15, 2025 at 6:02 PM

creativity-ai.github.io kicking off now at #NeurIPS2024... incredibly exciting group of people convened, and important urgent discussions happening

Workshop on Creativity & Generative AI

A dialogue between machine learning researchers and creative professionals

creativity-ai.github.io

December 14, 2024 at 5:09 PM