Blogging about data and steering AI (https://dataleverage.substack.com/)

And related, "eval leverage": dataleverage.substack.com/p/evaluation...

The original post: dataleverage.substack.com/p/selling-ag...

More generally, I think there are interesting connections between current discourse &

But attestations provide another object to transact over. Valuable info (a Dr giving thumbs up/down on medical responses) may leak, but the AI developer

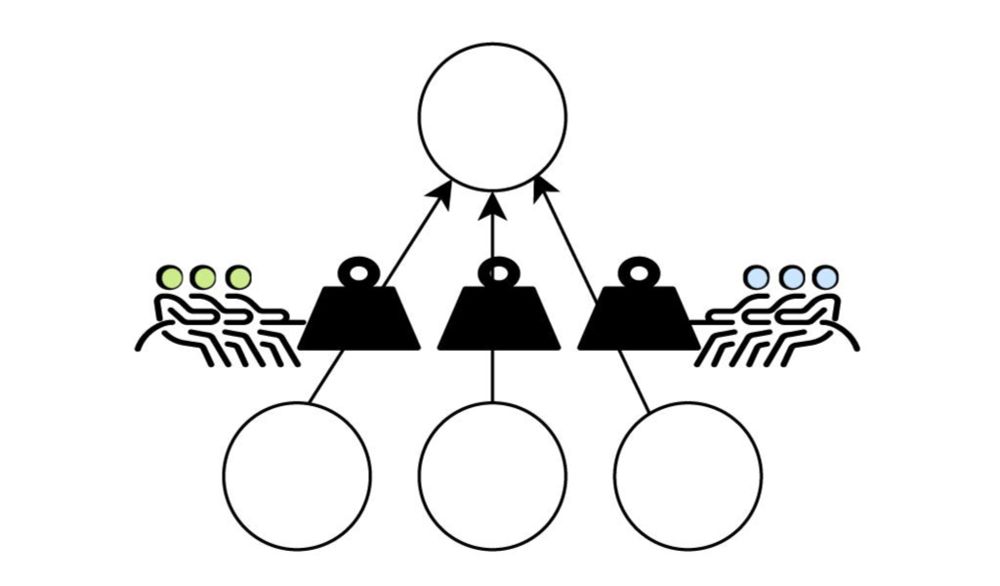

- individual property-ish rights over info (not a great way to go, IMO)

- rights that enable collective bargaining (good!)

- or...

- genAI as ranking chunks of info: dataleverage.substack.com/p/google-and...

- utility of AI stems from people: dataleverage.substack.com/p/each-insta...

- connection to evals: dataleverage.substack.com/p/how-do-we-...