Currently at LTI at CMU. 🏳️‍🌈

Our #EMNLP2025 paper reveals that crafting thoughtful refusals rather than detecting intent is the key to human-centered AI safety.

📄 arxiv.org/abs/2506.00195

🧵[1/9]

If you work on persona driven LLMs, social cognition, HCI, psychology, cognitive science, cultural modeling, or evaluation, do not miss the chance to submit.

Submit here: openreview.net/group?id=Neu...

With EVALUESTEER, we find even the best RMs we tested exhibit their own value/style biases, and are unable to align with a user >25% of the time. 🧵

Standard benchmarks give every LLM the same questions. This is like testing 5th graders and college seniors with *one* exam! 🥴

Meet Fluid Benchmarking, a capability-adaptive eval method delivering lower variance, higher validity, and reduced cost.

🧵

Each lecture is taught by a different LTI prof! It takes a village! maartensap.com/11705/Fall20...

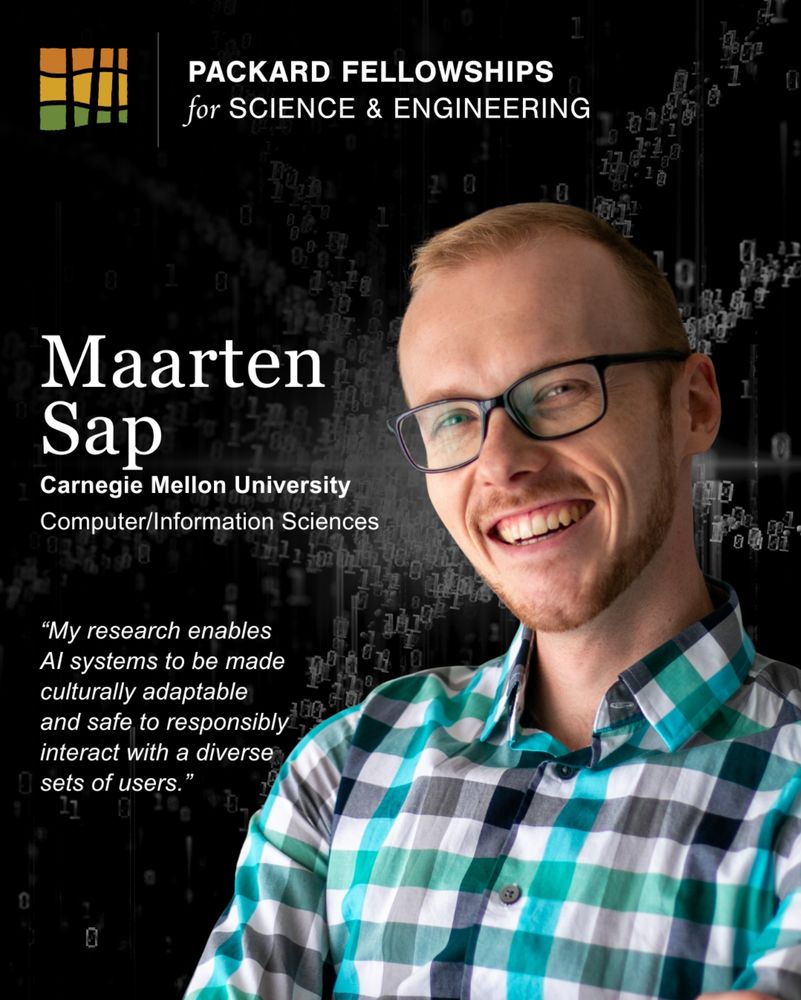

@maartensap.bsky.social, who's been awarded an Okawa Research Grant for his work in socially-aware artificial intelligence. lti.cmu.edu/news-and-eve...

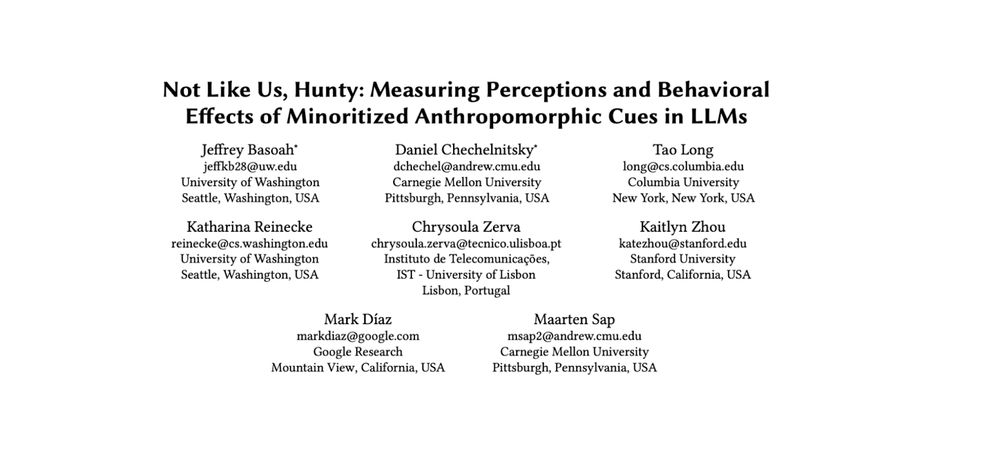

You: “Can you plan a trip?”

🤖 AI: “Yasss queen! let’s werk this babe✨💅”

LLMs can talk like us, but it shapes how we trust, rely on & relate to them 🧵

📣 our #FAccT2025 paper: bit.ly/3HJ6rWI

[1/9]

Check out our website: sites.google.com/andrew.cmu.e...

Call for submissions (extended abstracts) due June 19, 11:59pm AoE

#COLM2025 #LLMs #NLP #NLProc #ComputationalSocialScience

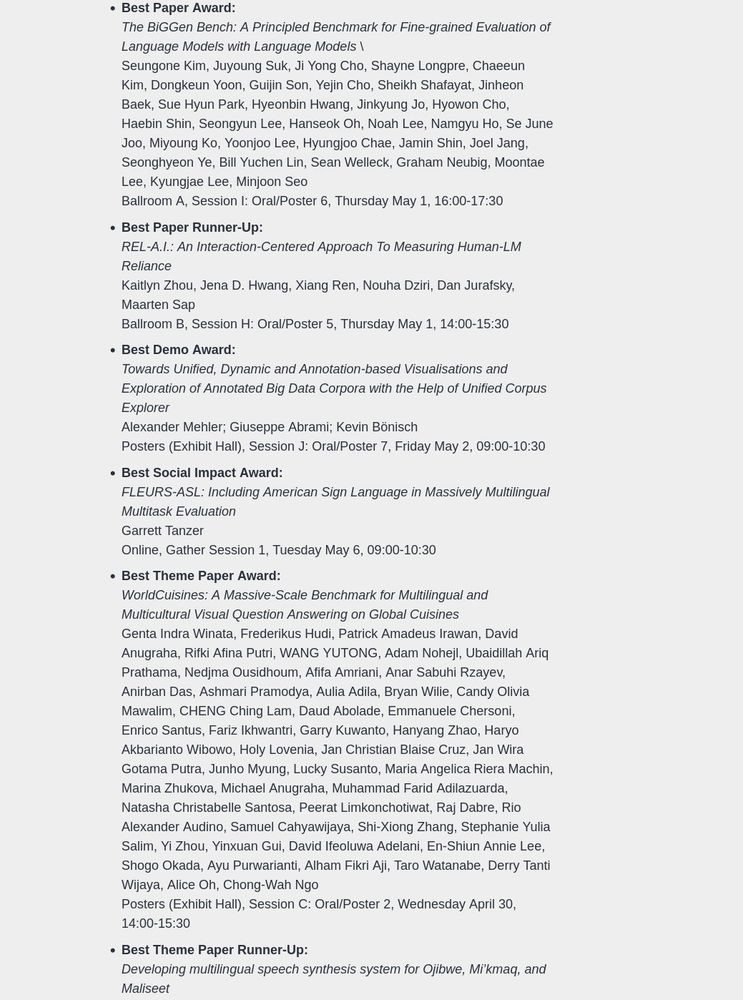

Our work (REL-A.I.) introduces an evaluation framework that measures human reliance on LLMs and reveals how contextual features like anthropomorphism, subject, and user history can significantly influence user reliance behaviors.

RAG systems excel on academic benchmarks - but are they robust to variations in linguistic style?

We find RAG systems are brittle. Small shifts in phrasing trigger cascading errors, driven by the complexity of the RAG pipeline 🧵

Excited to share our paper on biases against African American Language in reward models, accepted to #NAACL2025 Findings! 🎉

Paper: arxiv.org/abs/2502.12858 (1/10)

🤞means luck in US but deeply offensive in Vietnam 🚨

📣 We introduce MC-SIGNS, a test bed to evaluate how LLMs/VLMs/T2I handle such nonverbal behavior!

📜: arxiv.org/abs/2502.17710

Our new framework ALFA (ALignment with Fine-grained Attributes) teaches LLMs to PROACTIVELY seek information by asking better questions, using **structured rewards**🏥❓

(co-led with @jiminmun.bsky.social)

👉🏻🧵

Tired of coding agents wasting time and API credits, only to output broken code? What if they asked first instead of guessing? 🚀

(New work led by Sanidhya Vijay: www.linkedin.com/in/sanidhya-...)
