These claims are delusional. As a synthetic biologist and LLM engineer, I felt compelled to explain why, for anyone who might care:

dreamofmachin.es/machine_prop...

www.nature.com/articles/d41...

www.nature.com/articles/d41...

There is a lot to criticize about AI, and plenty of real issues caused by AI, but the narrative that this is all a fake thing that will disappear doesn't help anyone.

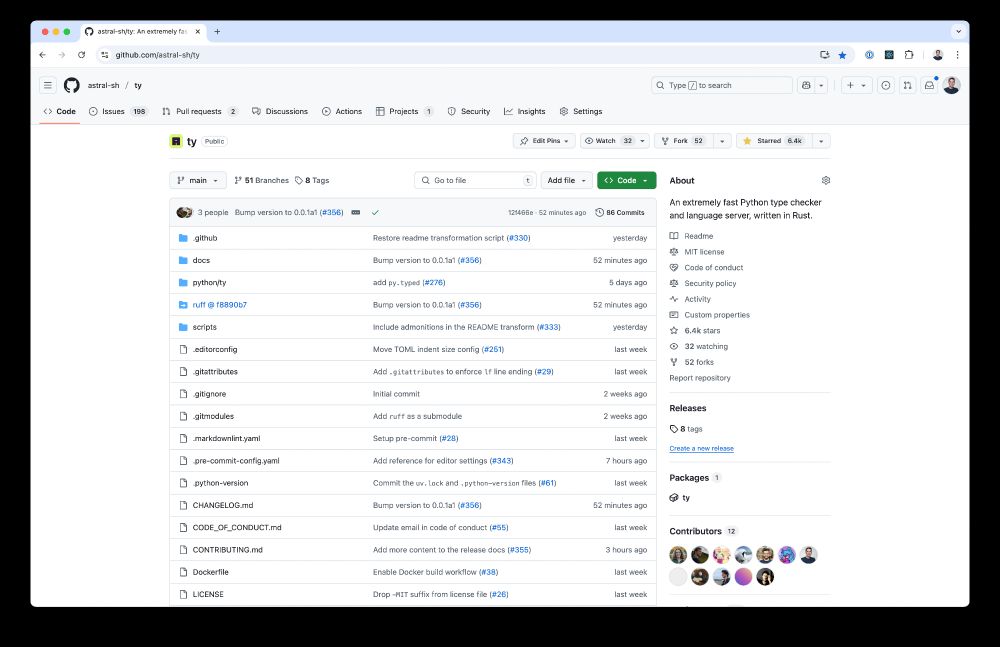

In early testing, it's 10x, 50x, even 100x faster than existing type checkers. (We've seen >600x speed-ups over Mypy in some real-world projects.)

Learn more: cayimby.org/legislation/...

You know there’s new data on the origins question, right?

1. The Wuhan Institute of Virology had sampled the virus most closely related to SARS-CoV-2

2. SARS-CoV-2 lineage B, but not lineage A, was found in Huanan market

By 2022, neither was true, so...

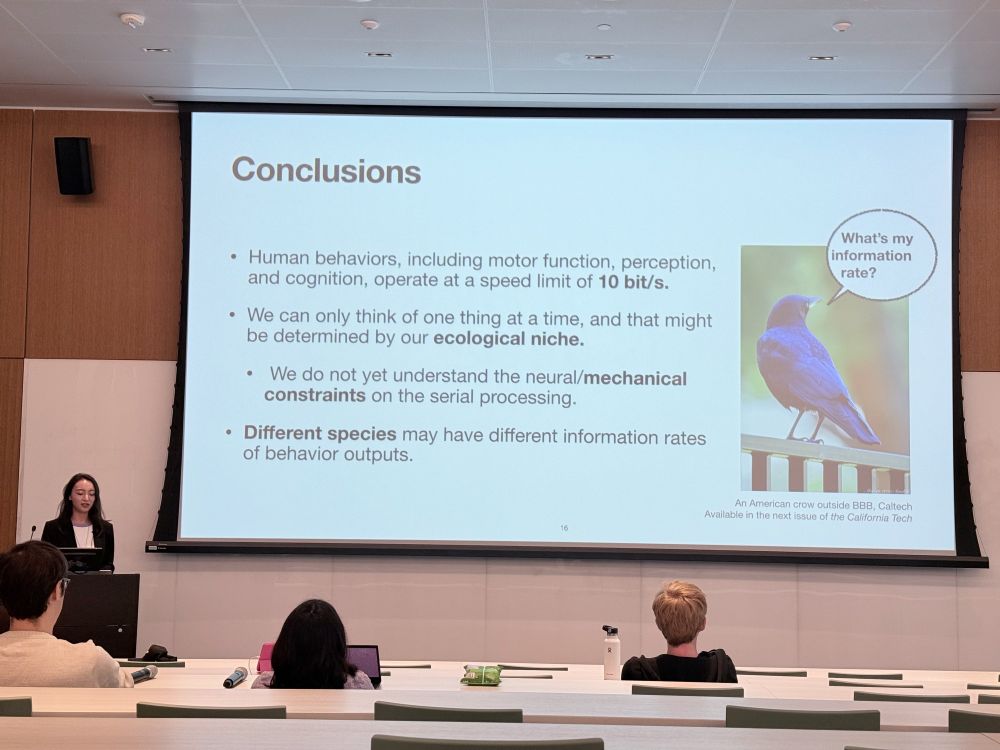

Thanks to Profs. @cfcamerer.bsky.social & Carlos for the invite!

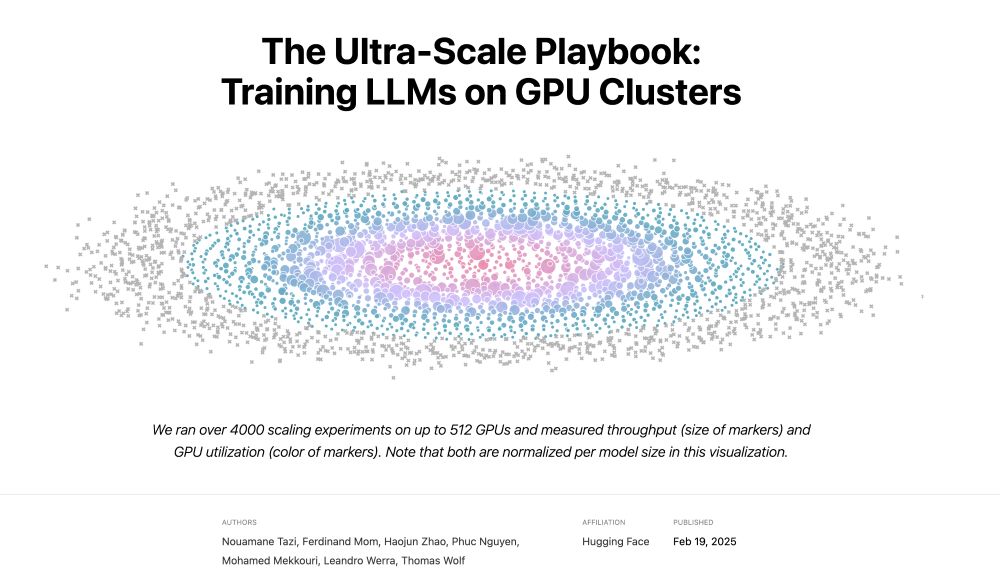

A book to learn all about 5D parallelism, ZeRO, CUDA kernels, and how/why to overlap compute & comms, with theory, motivation, interactive plots and 4000+ experiments!

I’d like this lawyer whose primary expertise is scaremongering for EA money to explain how using “future kinds of models” starts pandemics.

Outline the model-to-pandemic pipeline for me.

I also feel the dismantling of our scientific institutions & funding agencies for basic science is an attack on all scientists, wherever they might be (government or corporate lab, academic institution, ...).

Our collective identities are about advancing knowledge.

We hope that it helps you make the most of your computing resources. Enjoy!

www.theatlantic.com/magazine/arc...

#MathSky

Hope this will inspire you to think about the brain from a new perspective!

Our recent cross-institutional work asks: Does the available evidence match the current level of attention?

📜 arxiv.org/abs/2412.01946